Explore 7 Types of Prompting to Maximize AI Potential


Unlocking AI's Potential: A Guide to Prompting Techniques

Large language models (LLMs) are powerful, but getting useful output from them depends on writing the right prompts. This guide explores seven types of prompting, from zero-shot and few-shot prompting to chain-of-thought (CoT) and Tree of Thoughts (ToT) prompting, along with role-based, instruction-following, and iterative refinement techniques. Whether you're a seasoned AI professional or just starting out, understanding these techniques will noticeably improve your AI interactions.

1. Zero-Shot Prompting

Zero-shot prompting is the most basic way to interact with a large language model (LLM): you give the model a task without providing any examples or demonstrations. Think of it as asking a highly skilled person to complete a task based purely on their existing knowledge and expertise. You rely on the model's pre-trained knowledge to interpret your instructions and deliver the desired output. This contrasts with few-shot prompting, which supplies worked examples, and chain-of-thought prompting, which elicits intermediate reasoning steps.

How does zero-shot prompting work? It relies on the vast amount of data the model was trained on. During training, the model absorbed patterns, relationships, and information from diverse sources, which lets it generalize to tasks it was never explicitly trained on. When you give it a zero-shot prompt, it generates a response from those learned patterns; it does not look anything up in a separate knowledge base. Success therefore depends heavily on how well the model already understands the task and on how clearly the instructions are written.

Zero-shot prompting excels in its simplicity. It's quick and easy to implement, requiring minimal prompt engineering compared to more intricate methods. This makes it a popular choice for common tasks where the model's general knowledge is sufficient. For instance, if you want a quick translation of a simple phrase or a brief summarization of a straightforward article, zero-shot prompting is often the most efficient choice. It eliminates the need to meticulously craft examples or demonstrations, saving time and resources.

Here are some examples of successful zero-shot prompting:

- Translation: "Translate this text to French: Hello, how are you?" The model directly translates the phrase based on its language training data.

- Summarization: "Summarize this article in 3 sentences:" (followed by the article text). The model leverages its understanding of language and summarization techniques to condense the article.

- Question Answering: "What are the causes of climate change?" The model draws upon its knowledge base to provide an answer.

Zero-shot prompting, while simple, has its limitations. Its performance can be inconsistent, especially with complex or novel tasks that require specific nuances or deep understanding. Because you provide no guidance beyond the initial prompt, you have less control over the output format, and the model may not adhere to specific formatting requirements. Furthermore, the results heavily depend on the quality and breadth of the model's pre-training data.

Here's a summary of the pros and cons:

Pros:

- Quick and easy to implement

- Requires minimal prompt engineering

- Works well for common tasks

- No need to find or create examples

Cons:

- May produce inconsistent results

- Limited performance on complex or novel tasks

- Less control over output format

- May not follow specific formatting requirements

When considering using zero-shot prompting, assess the complexity of your task and the model's capabilities. For simpler tasks where general knowledge suffices, zero-shot is a strong choice. However, for nuanced or intricate requests, consider exploring other prompting methods like few-shot or chain-of-thought prompting for better control and performance.

Here are some actionable tips for effective zero-shot prompting:

- Be clear and specific in your instructions: Avoid ambiguity and use precise language.

- Use simple, direct language: Complex sentence structures can confuse the model.

- Include context when necessary: Provide enough background information for the model to understand the task.

- Test with multiple variations to find optimal phrasing: Slight changes in wording can significantly impact the output.
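As a rough illustration of these tips, the sketch below assembles a zero-shot prompt from an instruction, optional context, and an explicit format requirement, with no examples. It assumes Python; the helper name `build_zero_shot_prompt` and the "Context:", "Format:", and "Text:" labels are hypothetical conventions, and sending the prompt to a model is left out.

```python
from typing import Optional


def build_zero_shot_prompt(instruction: str, text: str,
                           context: Optional[str] = None,
                           output_format: Optional[str] = None) -> str:
    """Assemble a zero-shot prompt: an instruction and the input, but no examples."""
    parts = [instruction.strip()]
    if context:  # optional background so the model understands the task
        parts.append(f"Context: {context.strip()}")
    if output_format:  # state format needs explicitly; there is no example to copy
        parts.append(f"Format: {output_format.strip()}")
    parts.append(f"Text: {text.strip()}")
    return "\n\n".join(parts)


# Two phrasings of the same task, so their outputs can be compared side by side.
variants = [
    build_zero_shot_prompt("Translate this text to French.", "Hello, how are you?"),
    build_zero_shot_prompt("Translate the following English text into French.",
                           "Hello, how are you?",
                           output_format="Return only the translation."),
]
for prompt in variants:
    print(prompt, end="\n---\n")
```

Comparing the model's answers to such variants is a simple way to apply the last tip, because only the wording changes between prompts.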

Zero-shot prompting earns its place as a fundamental type of prompting due to its simplicity and efficiency for common tasks. Its accessibility and minimal setup make it an ideal starting point for anyone venturing into the world of prompt engineering. Understanding its strengths and limitations empowers you to choose the right prompting strategy for your specific needs, paving the way for effective and impactful interactions with LLMs.


2. Few-Shot Prompting

Few-shot prompting is a powerful prompt-engineering technique that bridges simple zero-shot prompting and more resource-intensive fine-tuning. You give the LLM a small number of examples (typically 1-5) that demonstrate the desired task, then ask it to perform the same task on new, unseen input. Think of it as giving the model a quick tutorial or cheat sheet before the actual test. Learning by example lets the model pick up the pattern, format, and style expected in the output, which often improves its performance on the target task considerably.

This method leverages the inherent "in-context learning" capabilities of LLMs. Instead of explicitly training the model's weights on a massive dataset (like in fine-tuning), few-shot prompting provides just enough context within the prompt itself for the model to infer the desired behavior. This makes it a highly efficient and adaptable method for various tasks.

How Few-Shot Prompting Works:

The core of few-shot prompting lies in crafting a prompt that includes both demonstrations and the new input. Each demonstration consists of an input example paired with its corresponding desired output. For instance, if you're aiming for sentiment analysis, a demonstration would be a sentence followed by its sentiment label (positive, negative, or neutral). After presenting these examples, you then provide the new input sentence and ask the model to classify it based on the patterns it observed in the demonstrations.
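The structure described above can be sketched in Python as follows. This is a minimal example under stated assumptions: the `build_few_shot_prompt` helper and the "Sentence:"/"Sentiment:" labels are hypothetical, the demonstrations are the sentiment examples used in this section, and the model call itself is omitted.

```python
from typing import List, Tuple

# Demonstrations: (input sentence, desired output label) pairs.
DEMONSTRATIONS: List[Tuple[str, str]] = [
    ("This movie was amazing!", "Positive"),
    ("The food was terrible.", "Negative"),
    ("The weather is okay.", "Neutral"),
]


def build_few_shot_prompt(demos: List[Tuple[str, str]], new_input: str) -> str:
    """Instruction, then input/output pairs, then the new input with its output left blank."""
    lines = ["Classify the sentiment of each sentence as Positive, Negative, or Neutral.", ""]
    for sentence, label in demos:
        # Every demonstration uses the same layout so the pattern is easy to infer.
        lines.append(f"Sentence: {sentence}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    lines.append(f"Sentence: {new_input}")
    lines.append("Sentiment:")  # the model is expected to complete this line
    return "\n".join(lines)


print(build_few_shot_prompt(DEMONSTRATIONS, "I enjoyed the book."))
```

Ending the prompt with an open "Sentiment:" line invites the model to continue the established pattern with a single label.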

Examples of Successful Implementation:

Few-shot prompting finds applications across a diverse range of tasks. Here are a few examples:

- Sentiment Analysis: Provide a few examples like: "This movie was amazing! - Positive", "The food was terrible. - Negative", "The weather is okay. - Neutral". Then ask the model to classify a new sentence like "I enjoyed the book."

- Code Generation: Demonstrate with examples of Python functions that perform specific tasks, followed by a description of a new function you want the model to generate.

- Creative Writing: Provide a few short poems in a particular style (e.g., haiku, sonnet) and then ask the model to generate a new poem in the same style based on a given theme.

- Translation: Give examples of phrases translated between two languages, and then prompt the model to translate a new phrase.

- Question Answering: Provide a passage and a few example questions with their correct answers, then ask a new question about the same passage.

Advantages of Few-Shot Prompting:

- Significantly Improved Task Performance: Compared to zero-shot prompting, few-shot prompting often substantially improves the accuracy and relevance of the model's output.

- Better Control Over Output Format: By demonstrating the desired format in the examples, you guide the model to produce output that adheres to your specific needs.

- Handles Complex and Specialized Tasks: It enables tackling tasks that are too nuanced or specific for zero-shot prompting to handle effectively.

- More Consistent Results: The provided examples help anchor the model's responses, leading to more predictable and consistent outputs.

Disadvantages of Few-Shot Prompting:

- Increased Token/Context Length: Including multiple examples within the prompt consumes more tokens, potentially limiting the length of the input you can process.

- Need for Quality Examples: The effectiveness hinges on the quality and representativeness of the examples; poorly chosen examples can mislead the model.

- Potential for Bias: If the examples reflect specific biases, the model's output may also exhibit those biases.

- Scalability Challenges: For extremely complex tasks, the number of examples needed might become prohibitive, making the approach less practical.

Tips for Effective Few-Shot Prompting:

- Choose Diverse and Representative Examples: Ensure your examples cover a range of possible input variations and desired outputs.

- Prioritize High-Quality Examples: Use clear, concise, and error-free examples to avoid confusing the model.

- Match Example Complexity to Target Task: The complexity of the examples should align with the complexity of the task you're trying to solve.

- Maintain Consistent Formatting: Use a uniform format for all examples and the new input to facilitate pattern recognition by the model.
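One simple way to act on the first tip is to make sure every label appears among the demonstrations. The sketch below assumes labeled examples stored as (text, label) pairs and picks them round-robin across labels; `select_balanced_examples` is a hypothetical helper, and label balance is only one of several possible notions of diversity.

```python
from collections import defaultdict
from itertools import zip_longest
from typing import Dict, List, Tuple

Example = Tuple[str, str]  # (input text, label)


def select_balanced_examples(pool: List[Example], k: int) -> List[Example]:
    """Pick up to k demonstrations, cycling through labels so each one is represented."""
    by_label: Dict[str, List[Example]] = defaultdict(list)
    for example in pool:
        by_label[example[1]].append(example)  # group by label, preserving pool order
    chosen: List[Example] = []
    # Round-robin: the first example of each label, then the second of each, and so on.
    for group in zip_longest(*by_label.values()):
        for example in group:
            if example is not None and len(chosen) < k:
                chosen.append(example)
    return chosen


pool = [
    ("This movie was amazing!", "Positive"),
    ("Best meal I've had all year.", "Positive"),
    ("The food was terrible.", "Negative"),
    ("The weather is okay.", "Neutral"),
    ("I loved every minute.", "Positive"),
]
print(select_balanced_examples(pool, 3))
```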

Few-shot prompting, popularized by the OpenAI GPT research team (Tom Brown et al. in the GPT-3 paper), has become a cornerstone technique in effectively utilizing large language models. It empowers users to achieve remarkable results on a wide variety of tasks without the need for extensive model training. By understanding its strengths and limitations, and by following the tips outlined above, you can leverage the power of few-shot prompting to unlock the full potential of LLMs and achieve your desired outcomes.

3. Chain-of-Thought (CoT) Prompting

Chain-of-Thought (CoT) prompting is a powerful technique within the broader landscape of "types of prompting" that significantly enhances the reasoning capabilities of large language models (LLMs). Instead of expecting the AI to produce an answer directly, CoT prompting encourages the model to break down complex problems into a series of smaller, intermediate reasoning steps, mirroring the way humans solve problems. This step-by-step approach allows the LLM to tackle more intricate tasks involving logic, math, and multi-step reasoning, ultimately leading to more accurate and insightful results. It's a crucial strategy for anyone working with LLMs to understand and implement.

CoT prompting works by explicitly prompting the model to articulate its reasoning. Rather than asking only for the solution, the prompt guides the LLM to explain how it arrived at the solution. One way to do this is to include worked examples of proper reasoning chains in the prompt; another is simply to add a cue such as "Let's think step by step." By following these patterns, the LLM decomposes the problem and writes out its intermediate steps, making its reasoning more transparent. This transparency is invaluable for debugging, understanding the model's limitations, and building justified trust in its outputs.

One of the most compelling reasons to use CoT prompting is that it can substantially improve performance on complex reasoning tasks. Where a direct prompt may produce an inaccurate or illogical answer, a CoT prompt often helps the LLM work through the problem correctly, especially for math word problems, logic puzzles, and multi-step analysis. For example, instead of asking only "What is 12 multiplied by 3 then added to 5?", a CoT prompt would include (or elicit) a reasoning chain like this: "Let's think step by step. What is 12 multiplied by 3? That's 36. Now, what is 36 added to 5? That's 41. Therefore, the final answer is 41." Breaking the problem into digestible steps is the core principle.
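A few-shot CoT prompt built from that worked exemplar might look like the following sketch. The `build_cot_prompt` and `extract_final_answer` helpers are hypothetical, and the sketch assumes the model ends its reasoning with a sentence of the form "the final answer is N", as the exemplar does; no model is called.

```python
import re
from typing import Optional

# The worked exemplar, written as a question/answer pair.
COT_EXEMPLAR = (
    "Q: What is 12 multiplied by 3 then added to 5?\n"
    "A: Let's think step by step. What is 12 multiplied by 3? That's 36. "
    "Now, what is 36 added to 5? That's 41. Therefore, the final answer is 41."
)


def build_cot_prompt(question: str) -> str:
    """Few-shot CoT: show one reasoning chain, then cue the model to reason the same way."""
    return f"{COT_EXEMPLAR}\n\nQ: {question}\nA: Let's think step by step."


def extract_final_answer(response: str) -> Optional[int]:
    """Pull the integer after 'final answer is', or None if the response has no such sentence."""
    match = re.search(r"final answer is (-?\d+)", response)
    return int(match.group(1)) if match else None


print(build_cot_prompt("What is 7 multiplied by 4 then reduced by 6?"))
```

Keeping the final-answer sentence in a fixed form makes the answer easy to extract while the reasoning above it stays free-form.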

CoT prompting also makes the model's reasoning easier to interpret. Because each step is written out, humans can read and analyze the output, identify flaws in the reasoning, and verify the logic behind the answer. This is a significant advantage over direct prompting, where it can be hard to tell how the AI arrived at a particular conclusion. Keep in mind, though, that the written chain is not guaranteed to reflect the model's actual internal computation, so treat it as an explanation to check rather than a direct view of the model's inner workings.

While CoT prompting offers substantial benefits, it also has some drawbacks. It requires longer prompts and generates longer responses, which can be more computationally expensive. For simple tasks, CoT prompting might be overkill, adding unnecessary complexity. Additionally, the quality of the LLM's reasoning is highly dependent on the quality of the reasoning examples provided in the prompt. If the examples are flawed or incomplete, the LLM's reasoning will likely be as well.

To effectively use CoT prompting, consider these tips:

- Incorporate phrases like "Let's think step by step" or "Reason through this" to guide the model.

- Provide clear and concise examples of well-structured reasoning chains relevant to the task.

- Encourage the model to explain its reasoning at each step, not just provide the final answer.

- Always verify the logic of the reasoning presented by the LLM, not just the final result. This ensures the model isn't just mimicking the format of the examples without understanding the underlying logic.
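For arithmetic chains, part of the last tip can be automated. The sketch below is a hypothetical checker, `check_reasoning`, which assumes each step is written as "a op b = c" with integers and one of +, -, or *; it recomputes every step, so a wrong intermediate step is caught rather than only the final answer being compared.

```python
import operator
import re
from typing import List, Tuple

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}
# Matches steps written as "a op b = c", e.g. "12 * 3 = 36".
STEP = re.compile(r"(-?\d+)\s*([+\-*])\s*(-?\d+)\s*=\s*(-?\d+)")


def check_reasoning(chain: str) -> List[Tuple[str, bool]]:
    """Recompute every arithmetic step in a chain of thought and flag the wrong ones."""
    results = []
    for a, op, b, claimed in STEP.findall(chain):
        correct = OPS[op](int(a), int(b)) == int(claimed)
        results.append((f"{a} {op} {b} = {claimed}", correct))
    return results


chain = "Let's think step by step. 12 * 3 = 36. 36 + 5 = 42. Therefore, the final answer is 42."
for step, ok in check_reasoning(chain):
    print(step, "OK" if ok else "WRONG")
```

Here the second step is flagged because 36 + 5 is 41, not 42; the error originates in the reasoning, not just in the final line.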

Chain-of-Thought prompting is a valuable tool for getting more out of LLMs. Its ability to improve accuracy on complex reasoning tasks, increase transparency, and reduce logical errors makes it a core technique in prompt engineering. By understanding its nuances and applying the tips above, you can get more accurate and interpretable results, which is why it ranks among the most effective types of prompting for AI professionals, developers, and anyone working with large language models.

4. Tree of Thoughts (ToT) Prompting

Tree of Thoughts (ToT) prompting represents a significant advancement in the field of prompt engineering. It moves beyond the linear, step-by-step approach of Chain-of-Thought prompting and embraces a more comprehensive, tree-like exploration of potential solutions. This method treats problem-solving as a search process through a tree of possible "thoughts," allowing language models to explore multiple reasoning paths simultaneously. Instead of being confined to a single line of reasoning, ToT allows the model to consider various options, backtrack from dead ends, and systematically evaluate different approaches to arrive at optimal solutions, particularly for complex problems. This makes it a powerful tool in the arsenal of any prompt engineer working with large language models (LLMs).

How does ToT prompting work? Imagine a tree where the root is the initial problem statement. Each branch represents a possible thought or line of reasoning stemming from the problem. The model generates multiple branches, effectively exploring various approaches simultaneously. Each branch can further branch out into more specific thoughts, forming a tree-like structure. The model then evaluates these different thought paths, potentially pruning less promising ones and focusing on the most likely routes to a solution. This ability to backtrack and correct course is a key advantage over linear prompting methods. By systematically evaluating the different branches, ToT prompting allows the model to find optimal or near-optimal solutions that might be missed by simpler prompting techniques.

The benefits of this approach are most evident in complex, strategic problems. Consider the Game of 24, in which you must combine four numbers using addition, subtraction, multiplication, and division to reach 24. ToT prompting allows the model to explore different combinations and sequences of operations, searching the solution space more thoroughly. Other successful applications include complex planning problems, multi-objective optimization tasks, and creative writing, where ToT can be used to brainstorm different narrative paths and generate more diverse and intricate storylines.
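The search described above can be sketched for the Game of 24. In Tree of Thoughts, proposing thoughts and evaluating them are both language-model calls; in this Python sketch they are replaced by deterministic stand-ins (`propose` enumerates every way to combine two numbers, `evaluate` is a crude closeness-to-24 heuristic) so that the breadth-first search, depth limit, and pruning are runnable. All names, the heuristic, and the `beam_width` parameter are illustrative assumptions, not the original authors' implementation.

```python
from fractions import Fraction
from itertools import combinations
from typing import List, Optional, Tuple

# A "thought" state: the numbers still available and the steps taken so far.
State = Tuple[Tuple[Fraction, ...], Tuple[str, ...]]


def propose(state: State) -> List[State]:
    """Stand-in for the LLM thought generator: combine any two numbers with +, -, *, /."""
    nums, steps = state
    children: List[State] = []
    for i, j in combinations(range(len(nums)), 2):
        a, b = nums[i], nums[j]
        rest = tuple(n for k, n in enumerate(nums) if k not in (i, j))
        options = [(a + b, f"{a} + {b}"), (a * b, f"{a} * {b}"),
                   (a - b, f"{a} - {b}"), (b - a, f"{b} - {a}")]
        if b != 0:
            options.append((a / b, f"{a} / {b}"))
        if a != 0:
            options.append((b / a, f"{b} / {a}"))
        for value, expr in options:
            children.append((rest + (value,), steps + (f"{expr} = {value}",)))
    return children


def evaluate(state: State) -> Fraction:
    """Stand-in for the LLM evaluator: a crude score, higher when some number is near 24."""
    nums, _ = state
    return -min(abs(n - 24) for n in nums)


def tree_of_thoughts_24(numbers: List[int], beam_width: Optional[int] = 5) -> Optional[List[str]]:
    """Breadth-first ToT: expand the frontier, score the children, keep the best ones."""
    frontier: List[State] = [(tuple(Fraction(n) for n in numbers), ())]
    for _ in range(len(numbers) - 1):  # depth limit: each step uses up one number
        children = [child for state in frontier for child in propose(state)]
        children.sort(key=evaluate, reverse=True)  # rank candidate thoughts
        if beam_width is not None:
            children = children[:beam_width]  # prune the less promising branches
        frontier = children
    for nums, steps in frontier:
        if nums[0] == 24:
            return list(steps)
    return None


print(tree_of_thoughts_24([4, 9, 10, 13], beam_width=None))  # keep every branch
print(tree_of_thoughts_24([4, 9, 10, 13], beam_width=5))     # aggressive pruning; may print None
```

Setting `beam_width=None` keeps every branch and amounts to exhaustive search; a small beam is far cheaper but can prune the path that leads to 24, which mirrors the cost-versus-thoroughness trade-off of real ToT prompting.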

While ToT prompting offers significant advantages, it's important to be aware of its limitations. The primary drawback is the computational cost. Exploring multiple branches simultaneously requires multiple calls to the language model, making it significantly more expensive than simpler methods. It is also more complex to implement and manage, requiring careful consideration of search depth, evaluation criteria, and pruning strategies. For simple reasoning tasks, ToT is generally overkill and simpler methods like Chain-of-Thought prompting are often sufficient.

When deciding whether to use ToT prompting, consider the complexity of the problem and the need for exploring multiple solutions. If the task involves strategic planning, requires foresight, or benefits from a systematic search for optimal solutions, ToT is likely a good choice. However, if the task is relatively simple and a single line of reasoning is sufficient, other prompting methods might be more efficient.

Here are some tips for effectively using ToT prompting:

- Define clear evaluation criteria: Establish how the model should assess the quality of each thought or branch.

- Set appropriate search depth limits: Prevent the tree from becoming excessively large and computationally unmanageable.

- Use ToT for problems that benefit from exploration: Focus on tasks where exploring multiple solution paths is crucial.

- Implement pruning strategies: Discard less promising branches early on to manage complexity.

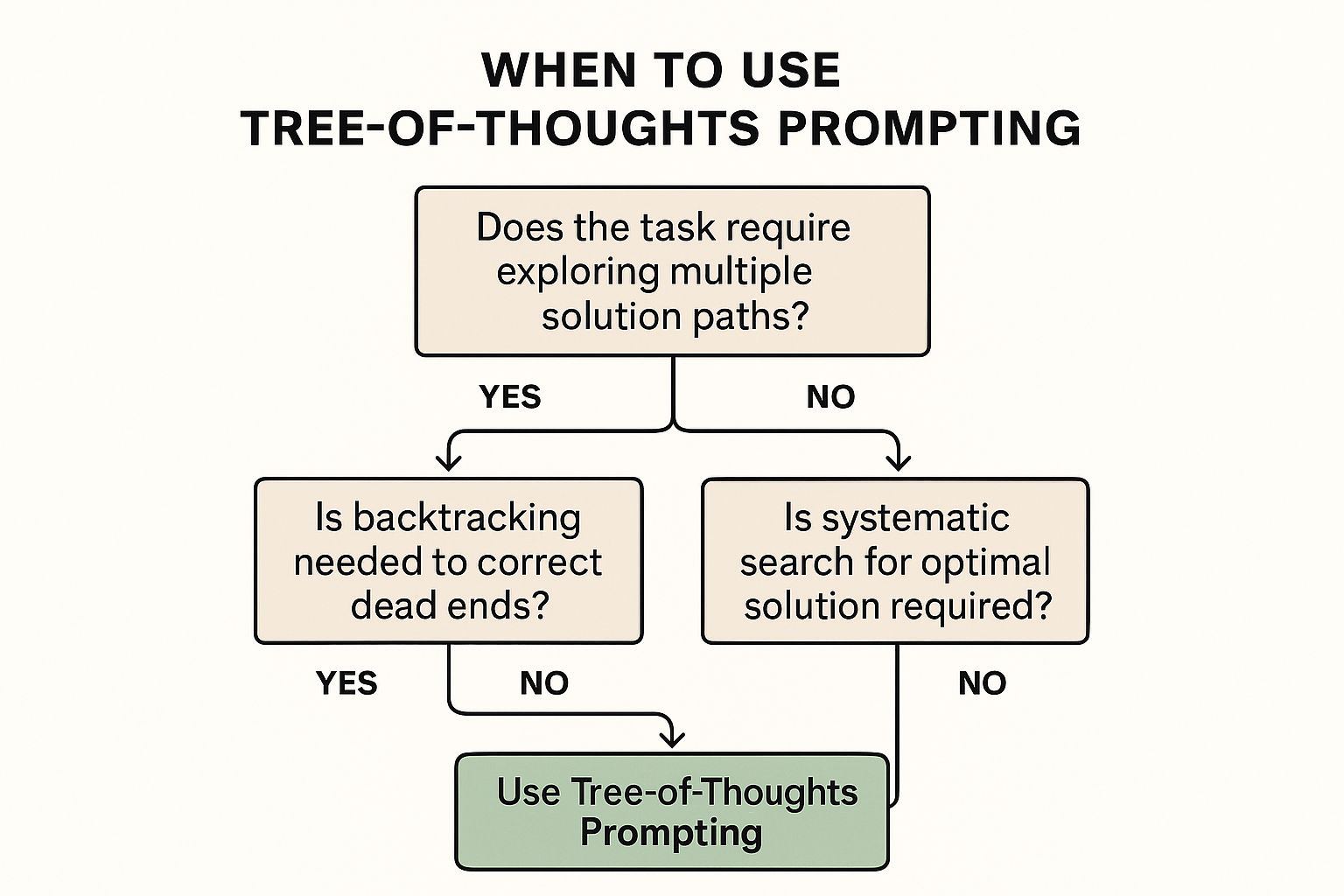

The following infographic provides a simple decision-making process to determine if Tree-of-Thoughts Prompting is suitable for a given task:

This infographic visualizes a decision tree that guides you on when to use ToT prompting. It starts with the question "Does the task require exploring multiple solution paths?" and leads through further questions regarding backtracking and optimization needs.

The infographic shows that ToT prompting is most effective when a problem demands exploring various solutions, requires backtracking to avoid dead ends, and benefits from a systematic search for optimal solutions. Weighing these factors helps you decide whether ToT is the right technique for your specific needs. Although computationally demanding, Tree of Thoughts prompting offers a powerful way to apply large language models to complex problem-solving, securing its place as an important type of prompting for advanced applications. Introduced by Shunyu Yao and colleagues at Princeton University and Google DeepMind, this method holds great promise for future developments in prompt engineering and AI problem-solving.


5. Role-Based Prompting

Role-based prompting, a powerful technique among the various types of prompting, directs AI models to respond from a specific persona, role, or identity. By explicitly instructing the AI to act as a particular expert, professional, or character, you leverage its vast training data on diverse perspectives and specialized knowledge domains. This approach allows you to elicit more contextually appropriate, expert-level, and even creative responses tailored to your specific needs. Think of it as casting the AI in a play; you provide the script and character description, and the AI delivers the performance.

This method works by tapping into the AI's ability to mimic different writing styles, tones, and perspectives. Instead of simply asking a question, you frame it within the context of a specific role. For instance, rather than asking "What are the benefits of using Python?", you might prompt: "As a senior software engineer, explain the advantages of Python for web development." This nuanced instruction guides the AI to respond not just with a list of benefits, but with the insights and perspective of an experienced software engineer. This can result in responses that are more practical, detailed, and aligned with real-world applications.
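When you call a model from code, the role usually goes in a separate system message so that it applies to every user turn. The sketch below assumes the role/content message format that many chat APIs accept (OpenAI's Chat Completions API, for example); `role_prompt` is a hypothetical helper, and no API call is made.

```python
from typing import Dict, List


def role_prompt(role: str, task: str, tone: str = "") -> List[Dict[str, str]]:
    """Build a chat request that casts the model in `role` before asking `task`."""
    persona = f"You are {role}."
    if tone:
        persona += f" Respond in a {tone} tone."
    return [
        {"role": "system", "content": persona},  # persona persists across turns
        {"role": "user", "content": task},       # the actual question
    ]


messages = role_prompt(
    role="a senior software engineer",
    task="Explain the advantages of Python for web development.",
    tone="practical, concise",
)
for m in messages:
    print(f"{m['role']}: {m['content']}")
```

Keeping the persona out of the user message means later questions in the same conversation are still answered from that perspective without restating it.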

Role-based prompting deserves a place on this list because it can significantly improve the quality and relevance of AI-generated content. Assigning a role does not give the model new knowledge, but it steers the model toward the vocabulary, priorities, and conventions of a domain that are already present in its training data. It also lets you tailor the output to specific audiences and purposes, resulting in more engaging and informative interactions.

One of the most significant benefits of this type of prompting is its ability to maintain a consistent perspective throughout a conversation or piece of writing. This is particularly useful for creating believable characters in storytelling, generating consistent brand messaging, or maintaining a specific tone in educational materials.

Here are a few examples demonstrating the versatility of role-based prompting:

Technical: "Act as a senior software engineer reviewing this code: [insert code here]." This prompt elicits a technical analysis from an expert perspective, going beyond simple debugging and offering insights into code quality, efficiency, and best practices.

Historical: "You are a medieval historian explaining the significance of the Battle of Hastings." This prompt frames the question within a specific historical context, prompting the AI to draw on its knowledge of the period and provide a nuanced historical analysis.

Educational: "Respond as a patient teacher helping a beginner understand the concept of fractions." This prompt encourages the AI to adopt a clear and accessible teaching style, breaking down complex concepts into simpler terms.

Business: "Take the role of a critical business analyst evaluating the potential market for this product: [product description]." This prompt calls upon the AI's analytical capabilities within a business context, providing a structured assessment of market viability.

While the benefits are significant, role-based prompting also has some limitations. Overly specific role definitions might artificially constrain the AI's responses, limiting flexibility. There's also a risk of the AI generating inaccurate information while trying to embody a particular role, especially if the role is complex or requires specialized knowledge beyond its training data. The effectiveness of this method heavily depends on the clarity and quality of the role definition.

To maximize the effectiveness of role-based prompting, consider the following tips:

Specificity is Key: Be precise in defining the role's expertise and perspective. The more specific the role, the more focused and relevant the response will be.

Context Matters: Provide relevant context about the role's background, experience, and even motivations. This helps the AI embody the role more convincingly.

Define the Tone: Clearly specify the desired tone and communication style. Should the AI be formal, informal, humorous, or serious?

Match Complexity: Align the complexity of the role with the task requirements. A simple task might not require a highly specialized role.

Role-based prompting offers a powerful way to access specialized knowledge, improve the relevance and context of responses, and create more engaging interactions with AI, making it a valuable tool in any prompter's toolkit.

6. Instruction Following/Task Specification Prompting

Instruction Following/Task Specification Prompting represents a highly structured and systematic approach among the various types of prompting. It prioritizes precision and control, providing the AI with clear, detailed instructions on what to do, how to do it, and the expected output format. This method excels in scenarios demanding predictable and consistent results, making it particularly valuable for production applications and tasks requiring a high degree of accuracy.

At its core, this prompting technique involves crafting meticulous instructions that leave no room for ambiguity. This includes specifying the task's objective, outlining the steps involved, defining the desired output format, and setting any necessary constraints or limitations. The more detailed and precise the instructions, the more likely the AI is to generate the desired outcome. Think of it as providing a comprehensive blueprint that guides the AI through the entire process.
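One way to keep the four parts (objective, steps, output format, constraints) explicit is to assemble the prompt from a structured specification instead of writing it freehand. This is a minimal sketch; `TaskSpec` and its section labels are hypothetical choices for illustration, not a standard format.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TaskSpec:
    objective: str
    steps: List[str]
    output_format: str
    constraints: List[str] = field(default_factory=list)

    def to_prompt(self) -> str:
        """Render the spec as one sectioned, unambiguous instruction block."""
        lines = [f"Objective: {self.objective}", "", "Steps:"]
        lines += [f"{i}. {step}" for i, step in enumerate(self.steps, start=1)]
        lines += ["", f"Output format: {self.output_format}"]
        if self.constraints:  # omit the section entirely when there are none
            lines += ["", "Constraints:"]
            lines += [f"- {c}" for c in self.constraints]
        return "\n".join(lines)


spec = TaskSpec(
    objective="Summarize the customer review below.",
    steps=[
        "Identify the product mentioned.",
        "Classify the overall sentiment as positive, negative, or mixed.",
        "Write a one-sentence summary.",
    ],
    output_format='JSON object with keys "product", "sentiment", "summary"',
    constraints=["Summary must be under 25 words.", "Do not invent details absent from the review."],
)
print(spec.to_prompt())
```

Because every prompt is rendered from the same template, a change to the instructions is a change to data, which makes it easier to version and test prompts in production.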

The power of Instruction Following lies in its ability to minimize misinterpretations and maximize control. By providing explicit guidelines, you effectively reduce the AI's freedom to deviate from the intended path, resulting in highly predictable and consistent outputs. This is crucial for tasks where accuracy and reliability are paramount.

Features of Instruction Following/Task Specification Prompting:

- Detailed Task Specifications and Constraints: Clearly defined objectives, steps, and limitations.

- Clear Output Format Requirements: Explicit instructions on the desired format (e.g., JSON, CSV, paragraph text).

- Explicit Behavioral Guidelines: Specific instructions regarding the AI's behavior during task execution.

- Systematic Instruction Structuring: A logical and organized presentation of instructions.

Pros:

- High Degree of Control over Outputs: Precise instructions lead to predictable results.

- Consistent and Predictable Results: Reduces variability in outputs, ensuring reliability.

- Reduces Ambiguity and Misinterpretation: Clear instructions minimize the chances of the AI misunderstanding the task.

- Works Well for Production Applications: Ideal for automated tasks requiring consistent and accurate outputs.

Cons:

- Can Be Rigid and Limit Creativity: Highly structured instructions may stifle the AI's ability to generate novel outputs.

- Requires Careful Instruction Design: Crafting effective instructions can be time-consuming and require careful planning.

- May Over-Constrain the Model: Excessively restrictive instructions may hinder the AI's performance.

- Time-Intensive to Develop Optimal Instructions: Iterative refinement and testing are often necessary to achieve the desired results.

Examples of Successful Implementation:

- Detailed content writing briefs with style guides: Specifying the topic, target audience, desired tone, and specific style guidelines ensures the generated content aligns with the requirements.

- API documentation generation with specific formatting: Providing clear instructions on the desired structure, content, and formatting allows for consistent and well-structured API documentation.

- Data analysis tasks with exact output requirements: Specifying the data sources, analysis methods, and desired output format ensures the analysis is conducted accurately and the results are presented in a usable format.

- Code generation with specific standards and patterns: Providing clear instructions on coding standards, design patterns, and specific functionalities leads to cleaner and more maintainable code. When crafting these instructions, understanding code structure best practices is crucial for clear and effective prompting. Well-structured code, like well-structured prompts, contributes significantly to clarity, maintainability, and overall effectiveness.

Tips for Effective Instruction Following:

- Use clear, unambiguous language: Avoid vague wording, and define any specialized terms or abbreviations that could be read in more than one way.

- Specify output format explicitly: Clearly state the desired format (e.g., JSON, table, paragraph text).

- Include constraints and limitations: Clearly define any restrictions or boundaries for the AI.

- Test instructions with edge cases: Evaluate the instructions with unusual or unexpected inputs to identify potential issues.

- Iterate and refine based on results: Analyze the outputs and adjust the instructions as needed to improve performance.
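Testing instructions against edge cases is easier when the required output format is checked by code rather than by eye. This is a minimal sketch that assumes the instructions asked for a JSON object with three string fields; the key names are hypothetical. A failing check is the signal to tighten the instructions (or retry the request).

```python
import json
from typing import Dict, List


def check_output(raw: str, required: Dict[str, type]) -> List[str]:
    """Return a list of problems with a model response; an empty list means it passed."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value is not a JSON object"]
    problems = []
    for key, expected in required.items():
        if key not in data:
            problems.append(f"missing key: {key}")
        elif not isinstance(data[key], expected):
            problems.append(f"{key} should be {expected.__name__}")
    extra = set(data) - set(required)
    if extra:
        problems.append(f"unexpected keys: {sorted(extra)}")
    return problems


SCHEMA = {"product": str, "sentiment": str, "summary": str}  # hypothetical schema

# Edge cases worth testing: a clean answer, chatty prose around the JSON, a missing field.
print(check_output('{"product": "kettle", "sentiment": "mixed", "summary": "Boils fast, leaks."}', SCHEMA))
print(check_output('Sure! Here is the JSON: {"product": "kettle"}', SCHEMA))
print(check_output('{"product": "kettle", "sentiment": "positive"}', SCHEMA))
```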

When to use Instruction Following/Task Specification Prompting: This type of prompting is particularly suitable for tasks that require a high level of precision, consistency, and control. It's ideal for automating processes, generating structured outputs, and ensuring adherence to specific guidelines. If your primary goal is predictable and reliable results, Instruction Following is the way to go. Clear specifications make it much more likely that the AI performs the task as intended, which reduces errors and rework. This methodical approach is a cornerstone of consistent, high-quality output from large language models.

7. Iterative Refinement Prompting

Iterative refinement prompting is a powerful technique among the types of prompting, offering a dynamic and interactive way to harness the capabilities of AI models. Unlike single-prompt approaches, iterative refinement prompting involves a cyclical process of prompt-response interactions, where each subsequent prompt builds upon and refines the preceding response. This continuous feedback loop allows for progressive improvement of the AI’s output, gradually homing in on the desired result through corrections, enhancements, and incremental adjustments. This makes it an invaluable tool for complex tasks that require high quality and precision.

This method works by establishing an initial prompt that outlines the general objective. The AI model generates a response based on this prompt. The user then analyzes the response, identifying areas for improvement, providing specific feedback, and formulating a refined prompt that incorporates these corrections. This cycle repeats until the output reaches the desired level of quality or a predefined stopping criterion is met. Think of it like sculpting clay – you start with a general shape and progressively refine it until the final form emerges.
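The cycle described above maps onto a simple loop with an explicit stopping criterion and an iteration cap. This is a minimal sketch: in practice `critique` would be your own review (or a second model call) and `revise` would send the draft plus your feedback back to the model. Here both are hypothetical deterministic stand-ins so the loop runs offline.

```python
from typing import Callable, List, Tuple


def refine(
    draft: str,
    critique: Callable[[str], List[str]],
    revise: Callable[[str, List[str]], str],
    max_rounds: int = 5,
) -> Tuple[str, int]:
    """Critique-and-revise loop; returns the final draft and the number of revisions made."""
    for round_no in range(max_rounds):
        issues = critique(draft)
        if not issues:  # stopping criterion: nothing left to fix
            return draft, round_no
        draft = revise(draft, issues)  # feed the feedback into the next prompt
    return draft, max_rounds  # iteration cap reached


# Hypothetical stand-ins so the loop runs without a model.
def critique(text: str) -> List[str]:
    issues = []
    if len(text.split()) > 8:
        issues.append("shorten to 8 words or fewer")
    if not text.endswith("."):
        issues.append("end with a period")
    return issues


def revise(text: str, issues: List[str]) -> str:
    # Address one piece of feedback per round so the loop visibly takes several iterations.
    first = issues[0]
    if first.startswith("shorten"):
        return " ".join(text.split()[:8])
    if first.startswith("end"):
        return text + "."
    return text


DRAFT = "Our product is great and everyone should buy it today"
print(refine(DRAFT, critique, revise))
```

The `max_rounds` cap matters as much as the quality check: it bounds cost and time when feedback never fully converges.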

Several key features distinguish iterative refinement prompting:

- Multiple rounds of prompt-response cycles: The back-and-forth exchange between the user and the AI is at the heart of this method.

- Progressive refinement and improvement: Each iteration aims to enhance the output based on previous results.

- Incorporation of feedback and corrections: User feedback is crucial in guiding the AI towards the desired output.

- Adaptive based on intermediate results: The prompts evolve dynamically in response to the AI’s intermediate generations.

The benefits of this approach are significant:

- Achieves higher quality final outputs: Through multiple iterations and refinements, the final output often surpasses the quality achievable with single-prompt approaches.

- Allows for course correction during the process: Errors and deviations can be addressed during the process, preventing them from propagating through to the final result.

- Handles complex tasks through decomposition: Large, complex tasks can be broken down into smaller, manageable steps, simplifying the process for both the user and the AI.

- Enables collaborative human-AI problem solving: Iterative refinement fosters a dynamic partnership between the user and the AI, leveraging the strengths of both.

However, this method is not without its drawbacks:

- Requires multiple API calls and higher costs: Every iteration is a separate API call, and because the conversation history is usually resent with each call, token usage and cost grow with the number of rounds, depending on your pricing model.

- Time-intensive process: Multiple rounds of interaction inherently require more time compared to single-prompt methods.

- Needs human oversight and guidance: Effective iterative refinement relies on active user participation and feedback throughout the process.

- Can lose context over long conversations: Maintaining context across numerous turns can be challenging, particularly as the conversation approaches the model's context window limit.

Consider these examples of successful implementation:

- Iteratively improving creative writing: Start with a basic plot outline and refine the narrative, dialogue, and character development through successive iterations.

- Refining code through debugging cycles: Identify and fix bugs in code by providing the AI with error messages and expected behavior.

- Progressively enhancing research analysis: Begin with broad research questions and refine the analysis based on intermediate findings.

- Collaborative document editing and improvement: Use the AI to suggest edits, rephrase sentences, and improve overall clarity through multiple revisions.

To effectively utilize iterative refinement prompting, consider these tips:

- Start with broad objectives and narrow down: Initial prompts should define the overall goal. Subsequent prompts should become progressively more specific.

- Provide specific feedback on each iteration: Clearly articulate what needs improvement, highlighting both strengths and weaknesses of the AI's output.

- Maintain context across conversation turns: Recap key information or use techniques like prompt chaining to preserve context throughout the process.

- Set clear stopping criteria for iterations: Define the desired quality level or other criteria to determine when the process should conclude.

- Document successful refinement patterns: Keep track of effective prompt structures and refinement strategies to reuse in future tasks.
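"Maintain context across conversation turns" usually comes down to deciding what to resend on each call, since many chat APIs are stateless and the history has to fit within the context window. This is a minimal sketch, assuming role/content message dicts; `trim_history` is a hypothetical helper, and the recap text is something you (or the model) write summarizing the older turns.

```python
from typing import Dict, List

Message = Dict[str, str]


def trim_history(history: List[Message], recap: str, keep_last: int = 4) -> List[Message]:
    """Keep the system message (plus a recap of older turns) and the most recent turns."""
    system = [m for m in history if m["role"] == "system"][:1]
    turns = [m for m in history if m["role"] != "system"]
    if len(turns) <= keep_last:
        return system + turns  # everything still fits; nothing to summarize
    # Fold the recap into the system message so user/assistant turns still alternate.
    base = system[0]["content"] + "\n\n" if system else ""
    header = {"role": "system", "content": f"{base}Recap of earlier discussion: {recap}"}
    return [header] + turns[-keep_last:]


history = [{"role": "system", "content": "You are a careful copy editor."}]
for i in range(1, 4):
    history.append({"role": "user", "content": f"Revision request {i}"})
    history.append({"role": "assistant", "content": f"Draft v{i}"})

trimmed = trim_history(history, recap="Audience is beginners; keep a friendly tone.")
for m in trimmed:
    print(m["role"], "|", m["content"])
```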

Iterative refinement prompting deserves its place in any discussion about types of prompting because it empowers users to achieve exceptional results with AI models. While single-prompt methods might suffice for simple tasks, iterative refinement offers a crucial pathway to tackling complex challenges and realizing the full potential of AI for creative and technical endeavors. For AI professionals, developers, marketers, and anyone working with large language models (LLMs) like ChatGPT, Google Gemini, or Anthropic's Claude, understanding and mastering this technique opens doors to a new level of precision and quality in AI-generated content.

7 Types of Prompting Compared

| Prompting Technique | Implementation Complexity 🔄 | Resource Requirements ⚡ | Expected Outcomes 📊 | Ideal Use Cases 💡 | Key Advantages ⭐ |
|---|---|---|---|---|---|
| Zero-Shot Prompting | Low – Simple, direct task instructions | Minimal – No examples needed | Variable quality; works well for common tasks | Quick, common, straightforward queries | Fast and easy to implement, minimal setup |
| Few-Shot Prompting | Medium – Requires crafting 1-5 examples | Moderate – Needs tokens for demonstrations | Improved accuracy and output consistency | Complex or specialized tasks needing a specific format | Better control and more consistent outputs |
| Chain-of-Thought (CoT) Prompting | Medium-High – Involves step-by-step reasoning | Higher – Longer prompts and responses | High accuracy on complex, logical tasks | Multi-step reasoning, math, logic problems | Dramatically improves reasoning and clarity |
| Tree of Thoughts (ToT) Prompting | High – Complex branching and backtracking | Very high – Multiple model calls required | Superior results on strategic, multi-path problems | Planning, optimization, strategic problem-solving | Searches systematically for the best available solution |
| Role-Based Prompting | Low-Medium – Defining roles or personas | Low – Simple role descriptions | Contextually relevant, expert-level responses | Domain-specific expertise, dialogue engagement | Steers responses toward specialized knowledge and tone |
| Instruction Following Prompting | Medium – Detailed, precise instructions | Moderate – Needs well-structured prompts | Highly controlled, consistent, and predictable | Production tasks requiring strict outputs | High control and reduced ambiguity |
| Iterative Refinement Prompting | High – Multiple interaction rounds | High – Repeated API calls and human input | Progressive quality improvement and error correction | Complex creative or technical tasks needing iterations | Enables collaborative refinement and higher quality |

Mastering AI Communication with MultitaskAI

This article explored seven key types of prompting, providing a foundational understanding of how to effectively communicate with AI. We've covered everything from simple zero-shot prompting to more complex methods like Chain-of-Thought (CoT) and Tree of Thoughts (ToT) prompting. By understanding the nuances of these different approaches—including few-shot, role-based, instruction following, and iterative refinement prompting—you can tailor your interactions with large language models (LLMs) to achieve specific goals and unlock their full potential.

The key takeaway here is that mastering these types of prompting isn't just about getting better responses from AI; it's about fundamentally changing how we interact with and leverage these powerful tools. Whether you're a software engineer building AI-powered applications, a digital marketer crafting compelling content, or an entrepreneur exploring new possibilities, effective prompting empowers you to solve complex problems, automate tedious tasks, and ultimately, innovate faster.

Want to streamline your prompting workflow and boost your AI productivity? MultitaskAI, a powerful browser-based chat interface, allows you to connect directly to leading AI models, manage multiple conversations simultaneously, and leverage powerful features like file integration and custom agents. It’s the perfect platform to experiment with the different types of prompting we've discussed, refine your approach, and truly master the art of AI communication. Try MultitaskAI today and transform how you work with AI. The future of interaction is here, and the power to shape it is in your prompts.