Self-Hosted AI Assistant for Privacy & Productivity

Streamline your workflow and secure your data with a self-hosted AI assistant. Learn expert setup tips for privacy and efficiency.

Why Self-Hosted AI Assistants Are Becoming Essential

Self-hosting AI assistants is changing how we interact with artificial intelligence. Instead of relying entirely on services run by large technology companies, more individuals and organizations are running assistants on hardware they control. The trend is driven by concerns about data privacy and security, and by the desire for a more personalized experience. Self-hosting lets people take ownership of their data and decide how AI is used with it.

Data Privacy and Security: Taking Back Control

One of the biggest advantages of a self-hosted AI assistant is better data privacy. With cloud-based assistants, your data passes through, and is stored on, someone else's servers. This raises the risk of data breaches and unauthorized access. Self-hosting keeps your data in your own environment, reducing these risks. This matters most for sensitive information such as business data or personal conversations.

Self-hosting can also strengthen security, provided the system is well maintained. Because you control where your data lives and who can access it, fewer outside parties and services handle it, which shrinks the opportunities for exposure. The trade-off is that patching, hardening, and monitoring become your responsibility.

Customization and Control: Shaping Your AI Experience

Besides privacy and security, self-hosted AI assistants offer significant customization. Unlike cloud-based assistants, which typically expose a fixed set of features, self-hosted solutions let you change the AI's behavior, its abilities, and the other apps it works with. This flexibility lets you build an assistant that fits your needs and the way you work. You also have more control over how the AI develops: you choose the models, how to train them, and what data they learn from.

The growing use of AI coding assistants highlights this trend. According to Microsoft research, developers using an AI coding assistant completed a programming task 55.8% faster in a controlled experiment. Self-hosted AI assistants aim to offer similar productivity gains with added privacy. However, building them involves challenges such as privacy concerns and managing large amounts of data. Learn more about this from these AI assistant statistics.

Embracing the Future of AI Interaction

Self-hosted AI assistants are more than just a technical advancement. They represent a move toward digital sovereignty. As we use AI more in our daily lives, controlling this technology is critical. This shift empowers people and businesses to use AI's full potential while protecting their data and staying in control of their digital future.

Hardware and Software Requirements: What You Need

Running a self-hosted AI assistant means thinking carefully about your hardware and software. There's no single perfect setup. The best choice depends on how you plan to use the assistant and how big your operation is. A single developer working on a project will have different needs than a large company supporting many users.

Hardware Considerations: Balancing Performance and Cost

The size of your AI model has a big impact on the hardware you'll need. Larger models offer more capability, but they also need more processing power, memory, and storage. A small model might run fine on a regular workstation with a decent GPU and 16GB of RAM, while larger models often need high-end GPUs, 32GB or 64GB of RAM, and plenty of storage. Solid-state drives (SSDs) help too: model files can run to several gigabytes, and an SSD loads them much faster than a hard disk, reducing startup delays.
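As a rough rule of thumb, the memory needed just to hold a model's weights is its parameter count multiplied by the bytes stored per parameter, which is why quantization (storing weights at lower precision) matters so much on consumer GPUs. The sketch below applies that arithmetic. The flat 20% overhead for the context cache and runtime buffers is an assumption for illustration, not a measured value; real overhead depends on context length, batch size, and the inference engine.

```python
# Rough memory estimate for loading a model's weights.
# Assumption: a flat overhead factor (default 20%) covers KV cache, activations,
# and runtime buffers; real overhead varies by engine and context length.

BYTES_PER_PARAM = {
    "fp16": 2.0,  # 16-bit floating point
    "int8": 1.0,  # 8-bit quantization
    "int4": 0.5,  # 4-bit quantization
}

def estimate_memory_gb(params_billion: float, precision: str = "fp16", overhead: float = 0.20) -> float:
    """Return an approximate memory requirement in GB (1 GB = 1e9 bytes)."""
    weight_bytes = params_billion * 1e9 * BYTES_PER_PARAM[precision]
    return weight_bytes * (1 + overhead) / 1e9

if __name__ == "__main__":
    for size in (7, 13, 70):
        row = ", ".join(f"{p}: {estimate_memory_gb(size, p):.1f} GB" for p in BYTES_PER_PARAM)
        print(f"{size}B -> {row}")
```

By this estimate, a 7B-parameter model needs roughly 17 GB at 16-bit precision but only about 4 GB when quantized to 4 bits.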

If you're a single user experimenting with an AI assistant, a mid-range setup is probably enough. This could be a consumer-grade GPU with plenty of VRAM and a modern multi-core CPU. But if you want to support multiple users or run more complex models, you'll need stronger hardware. This might mean investing in server-grade GPUs and CPUs, more RAM, and maybe even a separate storage server.

To help visualize the hardware requirements, let's take a look at the following table:

Hardware Requirements by Model Size: Comparison of minimum hardware specifications needed for different sizes of AI models

| Model Size | CPU/GPU | RAM | Storage | Estimated Cost |
|---|---|---|---|---|
| Small (e.g., for testing, single user) | Consumer-grade GPU, Multi-core CPU | 16GB | 500GB SSD | $1,000 - $2,000 |
| Medium (e.g., for small teams, limited users) | Mid-range GPU, High-end CPU | 32GB | 1TB SSD | $2,500 - $5,000 |
| Large (e.g., for enterprise, numerous users) | Server-grade GPU, Server-grade CPU | 64GB+ | 2TB+ SSD | $5,000+ |

As you can see, the cost and requirements scale up significantly with model size. Choosing the right size for your needs is essential for balancing performance and budget.

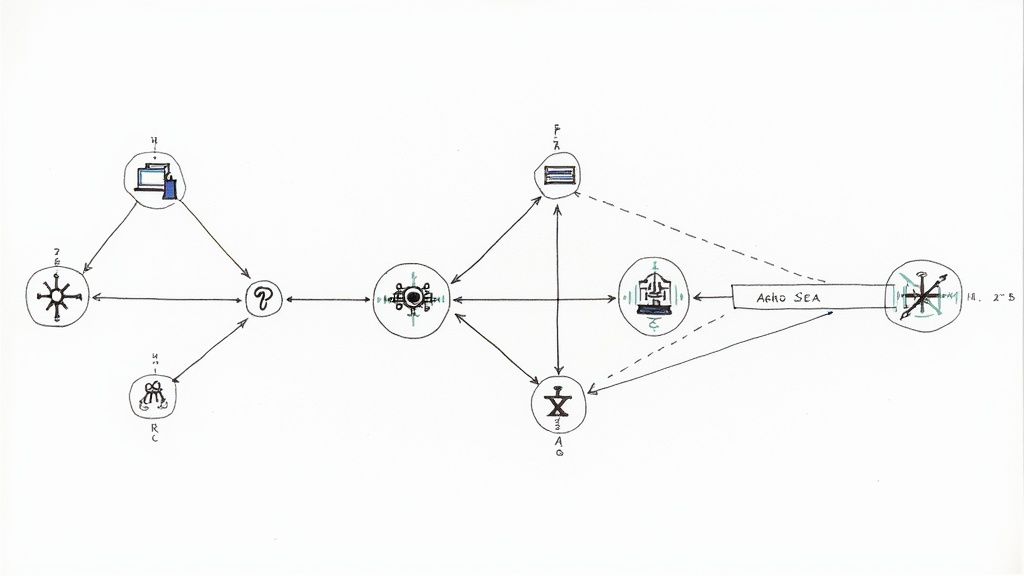

Software Essentials: Building Your AI Ecosystem

Choosing the right software is just as important as the hardware. You'll need an operating system suited to AI workloads, such as a stable and efficient Linux distribution. Ubuntu is a popular choice because it has strong community support and is easy to use. Docker containers can also simplify deployment and management: they isolate the components of your AI assistant from each other and from the host, which improves security and makes each part easier to update.

The AI model itself is a key decision. Openly available models like Llama 2 are a good starting point for experimentation and customization. You'll also need software to manage the assistant's memory, such as a vector store for semantic search, which helps the assistant recall relevant context and give better answers. A good user interface is also essential for easy interaction.

Balancing Self-Hosting and Cloud Resources

Self-hosting gives you more control, but sometimes using cloud resources can help. You might use cloud storage for backups or cloud-based GPUs for tasks that need lots of processing power. This mixed approach lets you use the cloud's flexibility while keeping control of your data. The best setup depends on your specific needs and budget, and carefully assessing those requirements is the key to building a self-hosted AI assistant that is both powerful and efficient.

Setting Up Your Self-Hosted AI Assistant: A Step-by-Step Guide

Want to run your own AI assistant? Setting up a self-hosted solution involves choosing the right framework, configuring your environment, and making sure everything runs without a hitch. This guide will walk you through the process, regardless of your technical background.

Choosing Your Model

The first step is picking an openly available model. This forms the core of your AI assistant. Several options exist, each with its pros and cons.

- Llama 2: Developed by Meta, Llama 2 is a versatile general-purpose model family, available in 7B, 13B, and 70B parameter sizes, with strong community support.

- Mistral: Models from Mistral AI, such as Mistral 7B, balance performance and efficiency and suit a wide range of uses.

- Code Llama: A version of Llama 2 specialized for code generation and completion, Code Llama is a strong fit if you mainly want help with programming.

Think about your needs and the complexity of the tasks you want to handle, and research each option thoroughly before deciding.

Installation and Configuration

After selecting a model, you'll need to install and configure the software that runs it (an inference framework). The details vary by tool, but the general process is similar.

- System Preparation: Make sure your system meets the hardware and software requirements. A stable Linux distribution like Ubuntu is often recommended.

- Framework Installation: Follow the installation instructions for your chosen inference framework. This usually involves command-line tools and downloading files.

- Model Download: Download the pre-trained model weights in a format your framework supports. Model size will affect performance and resource usage.

- Dependency Installation: Install any required dependencies and libraries for the framework and model.

Test each step thoroughly to ensure everything works correctly.
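If the model publisher provides a SHA-256 checksum for the weight files (many do, but check your source), verifying the download catches truncated or corrupted files before you spend time debugging the runtime. Here is a minimal sketch using only the standard library; the function names are illustrative, and the expected hash would come from the publisher.

```python
import hashlib
from pathlib import Path

def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so multi-gigabyte weights never load fully into RAM."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def verify_download(path: Path, expected_sha256: str) -> bool:
    """Return True if the file's SHA-256 matches the published value (case-insensitive)."""
    return sha256_of_file(path) == expected_sha256.strip().lower()

# Example (placeholder path and hash):
# verify_download(Path("models/my-model.gguf"), "<published sha256>")
```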

Deployment Strategies: Bare Metal vs. Containerized

You can deploy your self-hosted AI assistant in two main ways: bare metal and containerized. Bare metal means installing the framework directly onto your hardware. This gives you maximum control and potential performance gains, but can be harder to manage. Containerized solutions, like those using Docker, package the AI assistant and its dependencies into a container. This simplifies deployment and portability, but might introduce a slight performance overhead. Choose the approach that best suits your technical skills and comfort level. For further information, check out this resource: How to master self-hosting a ChatGPT alternative.

Testing and Integration

After deployment, testing and integration are critical for a successful setup.

- Initial Testing: Run basic commands and interactions to check if the AI assistant responds as expected.

- Workflow Integration: Connect your assistant to your existing workflows, applications, or tools. This might involve APIs or custom scripts.

- Performance Monitoring: Set up monitoring tools to track resource usage and spot potential bottlenecks.

Regular testing will ensure your assistant performs well as you integrate it further into your systems, allowing you to catch and address any issues early on.
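Many local inference servers (for example llama.cpp's server, Ollama, and vLLM) can expose an OpenAI-compatible `/v1/chat/completions` endpoint, though the port and setup differ, so check your runtime's documentation. Assuming such an endpoint, the sketch below sends one request and records the response time, covering both an initial smoke test and a first performance data point. The base URL and model name in the usage comment are placeholders.

```python
import json
import time
import urllib.request

def smoke_test(base_url: str, model: str, prompt: str = "Reply with the word: ready", timeout: float = 60.0) -> dict:
    """Send one chat request to an OpenAI-compatible endpoint; report success, latency, and reply."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 16,  # keep the test cheap
    }
    request = urllib.request.Request(
        base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    start = time.perf_counter()
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    latency = time.perf_counter() - start
    reply = body["choices"][0]["message"]["content"]
    return {"ok": bool(reply.strip()), "latency_s": latency, "reply": reply}

# Example (placeholder URL and model name):
# print(smoke_test("http://localhost:8080", "my-local-model"))
```

Running this on a schedule and logging `latency_s` gives you a simple baseline to compare against as you integrate the assistant further.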

Mastering Memory: The Key to Truly Helpful Assistants

A truly helpful self-hosted AI assistant needs more than just processing power. It needs effective memory management. This is the difference between a frustrating and a productive user experience. The ability to retain context and recall previous interactions is vital for natural, helpful conversations. Let's explore how leading self-hosted AI assistants handle this.

The Challenge of Context Retention

Current Large Language Models (LLMs) excel at processing information, but they do not retain context across conversations on their own. A model only sees the text included in its current context window, so anything from earlier sessions is lost unless the application supplies it again. Imagine a self-hosted AI assistant that forgets your preferences every time you start a new conversation. That is why building a robust memory system is essential.
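Because the model only sees what is in its context window, the application has to decide which earlier turns to resend. A common starting point is a sliding window: keep the system prompt plus the most recent messages that fit a budget. The sketch below approximates token counts by splitting on whitespace, which is an assumption; a real deployment would count tokens with the model's own tokenizer.

```python
from typing import Dict, List

Message = Dict[str, str]  # {"role": ..., "content": ...}

def approx_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())

def fit_history(system: Message, history: List[Message], budget: int) -> List[Message]:
    """Return [system] plus the newest messages whose combined size fits within budget."""
    remaining = budget - approx_tokens(system["content"])
    kept: List[Message] = []
    for msg in reversed(history):  # walk from newest to oldest
        cost = approx_tokens(msg["content"])
        if cost > remaining:
            break  # stop at the first message that doesn't fit, so no gaps appear
        kept.append(msg)
        remaining -= cost
    return [system] + list(reversed(kept))  # restore chronological order
```

Older turns that fall out of the window are exactly what the retrieval techniques below are meant to recover.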

One hurdle is the sheer volume of data generated in conversations. Storing and retrieving this information efficiently is a complex task. Saving entire conversation logs can quickly become unwieldy and resource-intensive. That's where techniques like vector stores for semantic search come in.

Data privacy is also crucial. A self-hosted AI assistant must store information securely. This involves careful data management and robust encryption. As your assistant interacts with more data, you need mechanisms to prevent storage overload. Effective memory management is critical for a truly helpful self-hosted AI assistant.

Long-Term Memory and Data Retention

One significant challenge is achieving long-term memory and data retention. AI models are trained on vast amounts of data, but they don't remember new information from your interactions over time. Techniques like Retrieval-Augmented Generation (RAG), which retrieves relevant information from a vector database and adds it to the model's prompt, are essential for overcoming this. Learn more about AI personal assistant development in this article: Shirley: My Quest to Create a Truly Useful AI Personal Assistant (Self-Hosted ChatGPT, Long-Term Memory, RAG).
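The generation side of RAG is mostly prompt assembly: retrieve the passages most relevant to the question, then place them in the prompt with an instruction to answer from them. A minimal sketch follows; the `retrieve` callable stands in for whatever vector database you use, and the prompt wording and character limit are assumptions to adapt.

```python
from typing import Callable, List

def build_rag_prompt(
    question: str,
    retrieve: Callable[[str, int], List[str]],
    k: int = 3,
    max_context_chars: int = 4000,
) -> str:
    """Fetch up to k passages and wrap them around the question as numbered context."""
    passages = retrieve(question, k)
    context_lines: List[str] = []
    used = 0
    for i, passage in enumerate(passages, start=1):
        line = f"[{i}] {passage.strip()}"
        if used + len(line) > max_context_chars:
            break  # keep the prompt inside the model's context window
        context_lines.append(line)
        used += len(line)
    context = "\n".join(context_lines) if context_lines else "(no relevant documents found)"
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )
```

Numbering the passages also makes it easy to ask the model to cite which source it used.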

Implementing Effective Memory: Vector Stores and More

Vector stores address context retention by converting words and phrases into numerical vectors. These vectors capture the meaning of the text, allowing for semantic search. The assistant can find information based on meaning, not just keywords.

Semantic Search: This allows the assistant to understand the intent behind a query. This leads to more accurate responses. If you ask about "project deadlines," the assistant can find information about upcoming due dates, even if that exact phrase wasn't used before.

Efficient Retrieval: Vector stores optimize data retrieval, speeding up the process of finding relevant information. This makes the assistant more responsive and interactions more natural.
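To make the mechanics concrete, here is a tiny in-memory vector store: each text is converted to a vector, and a query returns the stored texts whose vectors have the highest cosine similarity. The `embed` function is a toy hashed bag-of-words, an assumption used only so the example runs without downloading a model; it matches shared words, not meaning. Real semantic search swaps in a learned embedding model, and larger collections use an approximate nearest-neighbor index instead of this linear scan.

```python
import hashlib
import math
import re
from typing import List, Tuple

DIM = 256  # vector length for the toy embedding

def embed(text: str) -> List[float]:
    """Toy embedding: hash each lowercase word into one of DIM buckets, then L2-normalize."""
    vec = [0.0] * DIM
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        # blake2b gives stable buckets across runs (Python's built-in hash() is randomized).
        digest = hashlib.blake2b(word.encode("utf-8"), digest_size=8).digest()
        vec[int.from_bytes(digest, "big") % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec

class VectorStore:
    def __init__(self) -> None:
        self._items: List[Tuple[List[float], str]] = []

    def add(self, text: str) -> None:
        self._items.append((embed(text), text))

    def search(self, query: str, k: int = 3) -> List[Tuple[float, str]]:
        """Return the k most similar texts with their cosine similarity scores."""
        q = embed(query)
        # Vectors are unit length, so the dot product equals cosine similarity.
        scored = [(sum(a * b for a, b in zip(q, v)), text) for v, text in self._items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]
```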

Beyond vector stores, efficient document retrieval systems are vital. These systems let the assistant access and process files, emails, or web pages, expanding its knowledge base. This is crucial for tasks like summarizing documents or answering questions based on specific information.
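Before files can be searched this way, they are usually split into overlapping chunks, so each piece fits the embedding model's input limit and a retrieved passage still carries some surrounding context. Below is a minimal word-based splitter; the default chunk size and overlap are assumptions to tune for your model and documents.

```python
from typing import List

def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> List[str]:
    """Split text into chunks of up to chunk_size words, repeating `overlap` words between neighbors."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks: List[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks
```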

Conversation Memory Across Sessions

A helpful self-hosted AI assistant remembers past conversations, even after you close the application. This requires storing conversation history persistently, allowing the assistant to pick up where you left off. This creates a continuous and personalized experience. However, managing this persistent memory requires careful design to avoid privacy risks and storage issues. Balancing remembering information and respecting privacy is key.
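One straightforward way to persist conversation history on your own machine is a local SQLite database, which ships with Python. The schema and function names below are illustrative assumptions. Since the database file lives on your disk, the same privacy care applies: restrict file permissions, consider disk encryption, and give users a way to delete what was stored.

```python
import sqlite3
from typing import List, Tuple

SCHEMA = """
CREATE TABLE IF NOT EXISTS messages (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    conversation_id TEXT NOT NULL,
    role TEXT NOT NULL,
    content TEXT NOT NULL,
    created_at TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
)
"""

def open_memory(path: str) -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    with conn:  # commits the schema creation
        conn.execute(SCHEMA)
    return conn

def save_message(conn: sqlite3.Connection, conversation_id: str, role: str, content: str) -> None:
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO messages (conversation_id, role, content) VALUES (?, ?, ?)",
            (conversation_id, role, content),
        )

def load_history(conn: sqlite3.Connection, conversation_id: str, limit: int = 50) -> List[Tuple[str, str]]:
    """Return up to `limit` most recent (role, content) pairs, oldest first."""
    rows = conn.execute(
        "SELECT role, content FROM messages WHERE conversation_id = ? ORDER BY id DESC LIMIT ?",
        (conversation_id, limit),
    ).fetchall()
    return list(reversed(rows))

def forget_conversation(conn: sqlite3.Connection, conversation_id: str) -> None:
    """Delete a conversation entirely, e.g. when a user asks for it to be forgotten."""
    with conn:
        conn.execute("DELETE FROM messages WHERE conversation_id = ?", (conversation_id,))
```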

Protecting Your Data: The Privacy Perks of Self-Hosted AI

Beyond its technical capabilities, self-hosted AI offers something incredibly valuable in today's world: true data privacy. This key advantage sets it apart from cloud-based solutions, especially when dealing with sensitive information. Let's explore why this privacy matters and how self-hosting reduces real-world risks.

Data Sovereignty and Control: Your Data, Your Rules

With a self-hosted AI assistant, you are in control of your data. This is the core principle of data sovereignty. Unlike with cloud-based assistants such as Siri or Alexa, your information isn't sent to a provider's servers, so no outside service provider has access to your conversations, personal details, or confidential business information. This level of control is crucial for individuals and organizations concerned about data breaches and unauthorized access. For more information on AI data privacy, check out this helpful resource: How to master AI data privacy.

Minimizing Risks with Self-Hosting

Self-hosting significantly lowers the risks linked to cloud-based AI. Imagine a company using a self-hosted AI assistant to analyze sensitive financial records. Because the data stays within their secure network, the risk of external breaches or leaks is minimized. This localized control provides peace of mind and simplifies compliance with data privacy regulations.

Practical Security Steps for Stronger Privacy

Security-conscious organizations employ several strategies to boost the security of their self-hosted AI assistants. This involves more than just keeping data on-site.

Network Isolation: Isolating the AI assistant's network limits access points, shrinking the potential attack surface. This gives attackers fewer avenues to reach your system.

Encryption: Encrypting data, both at rest (stored data) and in transit (data being transferred), adds a vital layer of security. Even if a breach occurs, the encrypted data is unusable without the decryption keys.

Access Controls: Strict access controls restrict who can use the AI assistant and view the underlying data. This reduces the risk of internal breaches or unauthorized access.
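As one illustration of access control in front of a self-hosted assistant, the sketch below maps API keys to roles and roles to permitted actions. It stores only hashes of the keys and compares them in constant time. The roles, actions, and example keys are hypothetical; a real deployment would use long random keys loaded from a secrets store, served over TLS on an isolated network.

```python
import hashlib
import hmac
from typing import Dict, Optional, Set

# Hypothetical roles and the actions each may perform.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "viewer": {"chat"},
    "analyst": {"chat", "search_documents"},
    "admin": {"chat", "search_documents", "upload_documents", "view_logs"},
}

def hash_key(api_key: str) -> str:
    return hashlib.sha256(api_key.encode("utf-8")).hexdigest()

# Store only hashes of API keys, never the keys themselves (example values).
KEY_HASH_TO_ROLE: Dict[str, str] = {
    hash_key("example-analyst-key"): "analyst",
    hash_key("example-admin-key"): "admin",
}

def role_for(api_key: str) -> Optional[str]:
    presented = hash_key(api_key)
    for stored, role in KEY_HASH_TO_ROLE.items():
        if hmac.compare_digest(presented, stored):  # constant-time comparison
            return role
    return None

def is_allowed(api_key: str, action: str) -> bool:
    """Deny by default: unknown keys and unlisted actions are rejected."""
    role = role_for(api_key)
    return role is not None and action in ROLE_PERMISSIONS.get(role, set())
```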

To better understand the differences between self-hosted and cloud-based AI in terms of privacy, take a look at the comparison table below:

Self-Hosted vs. Cloud AI Privacy Comparison

Comparison of privacy and security aspects between self-hosted and cloud-based AI assistants.

| Aspect | Self-Hosted AI | Cloud-Based AI | Privacy Implications of Cloud-Based AI |
|---|---|---|---|
| Data Control | User retains full control | Data stored on provider's servers | Potential for third-party access |
| Data Location | On-premise, within user's network | Provider's data centers | Subject to provider's security measures and data location laws |
| Data Transmission | Minimal to none | Data transmitted to and from the cloud | Increased risk of interception during transmission |
| Compliance | Easier to comply with specific regulations | Reliant on provider's compliance certifications | May not meet specific industry or regional requirements |
| Transparency | Full visibility into data handling | Limited visibility into provider's data handling practices | Potential for undisclosed data usage |

This table highlights the key differences in data control and security between the two approaches. Self-hosting provides greater control and transparency, while cloud-based solutions rely on the provider's security measures.

Building a Privacy-Focused Approach

A complete privacy strategy involves more than just technical safeguards. Organizations also need solid governance policies that balance security with a positive user experience. This includes performing privacy impact assessments to pinpoint potential risks and establishing procedures to handle them. This proactive approach fosters a privacy-first culture and promotes responsible data management within the organization. Such a framework is particularly important when handling sensitive data in regulated industries.

By implementing these strategies, businesses can fully utilize the power of AI while keeping data privacy a top priority. Self-hosting offers a strong path toward achieving both innovation and data security.


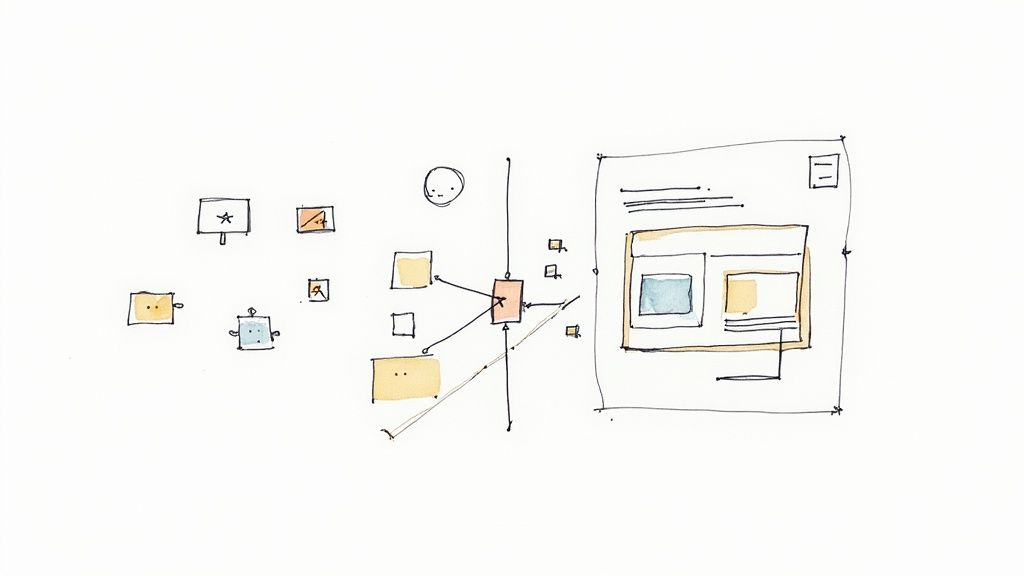

Personalizing Your AI Assistant: From General Purpose to Specialized Tool

The real strength of a self-hosted AI assistant isn't just about data privacy. It's about customizing the AI to work precisely the way you need it to. This means going beyond the general abilities of a standard model and building a truly personalized productivity tool. This involves several key strategies, from refining how you interact with your assistant to integrating it seamlessly with your current software.

Mastering Prompt Engineering

One of the easiest ways to personalize your self-hosted AI assistant is through prompt engineering. This involves creating clear and effective instructions, or prompts, that guide the AI's output. Think of it like giving your assistant a detailed map instead of vague directions. For example, instead of asking "Write a blog post about AI," a better prompt might be "Write a 300-word blog post about the benefits of self-hosted AI assistants for small businesses, focusing on data privacy and cost savings." Check out this helpful resource: How to master prompt engineering. This level of detail significantly improves the quality and relevance of the AI's responses.
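If you reuse the same kinds of requests, it can help to turn the pattern from that example into a template, so every prompt states the task, length, audience, and focus explicitly. A small sketch; the class and field names are illustrative.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class PromptSpec:
    task: str        # what to produce, e.g. "blog post"
    topic: str
    word_count: int
    audience: str
    focus: List[str] = field(default_factory=list)

    def render(self) -> str:
        prompt = (
            f"Write a {self.word_count}-word {self.task} about {self.topic} "
            f"for {self.audience}"
        )
        if self.focus:
            prompt += ", focusing on " + " and ".join(self.focus)
        return prompt + "."

spec = PromptSpec(
    task="blog post",
    topic="the benefits of self-hosted AI assistants",
    word_count=300,
    audience="small businesses",
    focus=["data privacy", "cost savings"],
)
```

Calling `spec.render()` reproduces the detailed prompt from the example above, and changing one field yields a consistent variant.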

Fine-Tuning With Specific Data

Beyond individual prompts, you can fine-tune your entire model with specific data. This is especially helpful for companies that use specialized terminology or have unique workflows. Imagine a law firm fine-tuning their self-hosted AI assistant on legal documents and case law. This specialized training allows the assistant to understand complex legal concepts and generate more accurate and relevant answers in that field. This process effectively teaches your AI assistant the language of your industry.
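Fine-tuning usually starts with a dataset of example inputs and ideal outputs. Many fine-tuning tools accept JSON Lines files with one chat-formatted example per line, but the exact schema varies by tool, so treat the `messages` layout below as an assumption to check against the tool you use. The function name is illustrative.

```python
import json
from pathlib import Path
from typing import Iterable, Tuple

def write_chat_jsonl(pairs: Iterable[Tuple[str, str]], path: Path, system_prompt: str) -> int:
    """Write (question, answer) pairs as chat-style JSONL; return the number of examples written."""
    count = 0
    with path.open("w", encoding="utf-8") as f:
        for question, answer in pairs:
            if not question.strip() or not answer.strip():
                continue  # skip incomplete examples rather than training on them
            record = {
                "messages": [
                    {"role": "system", "content": system_prompt},
                    {"role": "user", "content": question.strip()},
                    {"role": "assistant", "content": answer.strip()},
                ]
            }
            f.write(json.dumps(record, ensure_ascii=False) + "\n")
            count += 1
    return count
```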

Enhancing Functionality Through Plugins and Integrations

The real potential of a self-hosted AI assistant comes from custom plugins and integrations. These add-ons transform the assistant from a conversational tool into a powerful productivity center. They allow the AI to interact directly with your current programs and databases. For example, a plugin could connect your assistant to your Google Calendar, letting it schedule meetings, set reminders, and even manage your to-do list. Another integration could allow the assistant to access and analyze data from your company's internal databases, generating reports or summarizing key information. This level of integration streamlines tasks and expands the AI’s practical uses considerably.
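Under the hood, many plugin systems work in a similar way: the model emits a structured request naming a tool and its arguments, and your code looks up and runs the matching function. The sketch below shows that dispatch step with a hypothetical `schedule_meeting` tool that only returns a confirmation string; it does not call Google Calendar or any real API, and the JSON shape of the tool call is an assumption.

```python
import json
from typing import Any, Callable, Dict

TOOLS: Dict[str, Callable[..., Any]] = {}

def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so the assistant can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn

@tool
def schedule_meeting(title: str, start: str, duration_minutes: int = 30) -> str:
    # Hypothetical placeholder: a real plugin would call your calendar's API here.
    return f"Scheduled '{title}' at {start} for {duration_minutes} minutes"

def dispatch(tool_call_json: str) -> str:
    """Run a tool call like {"name": "...", "arguments": {...}} and return its result as text."""
    call = json.loads(tool_call_json)
    fn = TOOLS.get(call.get("name", ""))
    if fn is None:
        return f"Error: unknown tool {call.get('name')!r}"
    try:
        return str(fn(**call.get("arguments", {})))
    except TypeError as exc:  # wrong or missing arguments from the model
        return f"Error: bad arguments: {exc}"
```

Returning errors as text rather than raising lets you feed the result back to the model so it can correct its call.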

Evaluating and Improving Performance

As you personalize your self-hosted AI assistant, it's important to assess its performance and find areas for improvement. Creating an evaluation framework helps track progress and ensures your assistant continues to meet your needs. This might involve collecting feedback on response quality, tracking task completion rates, and monitoring resource usage. This iterative cycle of evaluation and refinement steadily improves your assistant's abilities, keeping it a powerful, adaptable, and increasingly valuable part of your workflow.
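A lightweight evaluation framework can start as a log of interactions plus a few summary numbers. The sketch below computes three such signals (average rating, task completion rate, and 95th-percentile latency as a rough proxy for resource pressure) from interaction records. The 1 to 5 rating scale and the field names are assumptions.

```python
import math
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List

@dataclass
class Interaction:
    rating: int       # user rating, assumed 1 (poor) to 5 (excellent)
    completed: bool   # did the assistant finish the task?
    latency_s: float  # wall-clock response time in seconds

def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile (pct in 0-100)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

def summarize(log: List[Interaction]) -> Dict[str, float]:
    if not log:
        raise ValueError("no interactions to summarize")
    return {
        "mean_rating": mean(i.rating for i in log),
        "completion_rate": sum(i.completed for i in log) / len(log),
        "p95_latency_s": percentile([i.latency_s for i in log], 95),
    }
```

Tracking these numbers per model version makes it easier to tell whether a change actually helped.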

Future-Proofing Your AI Assistant

The AI world is constantly changing. How can you make sure your self-hosted AI assistant stays current and capable without needing to rebuild it all the time? This is key to getting the most out of your investment and staying ahead of the game. This section explores ways to maintain a cutting-edge self-hosted AI assistant and adapt to the ever-changing AI world.

The Importance of Adaptability

AI development moves fast. New models, features, and capabilities appear regularly. A self-hosted AI assistant that doesn't change risks becoming outdated and less effective. This is where future-proofing comes in. By designing for adaptability from the beginning, you can avoid expensive and time-consuming overhauls later on.

Strategies for Ongoing Improvements

Leading organizations use several strategies to keep their self-hosted AI assistants updated. These strategies ensure long-term relevance and strong performance.

Regular Model Updates: Like software, AI models benefit from regular updates. Newer models often provide improved accuracy, better performance, and exciting new features. A smooth update process minimizes disruptions.

Continuous Learning: Imagine an assistant that's always learning and getting better. Continuous learning pipelines make this possible. These pipelines feed new data to the model, helping it adapt to new information and improve its responses. This is especially helpful in situations where information changes often.

Exploring New Capabilities: Keeping up with the latest in AI is essential. Regularly checking out new models and features allows you to identify areas for improvement and integrate valuable additions into your existing setup. This might involve exploring new open-source models or using techniques like Retrieval-Augmented Generation (RAG), which lets Large Language Models (LLMs) access and use external knowledge.

Smart Design for Smooth Updates

A well-designed system makes updates easier. Separating the model components from the integration layers makes swapping models less disruptive. This modular design lets you update the model without rebuilding the entire system, saving time and resources.
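In Python, one way to express that separation is a small interface that the rest of the system depends on, with one adapter per model backend. The backend classes below are hypothetical stand-ins that just echo the prompt; the point is that the integration-layer function `answer()` does not change when you swap models.

```python
from typing import Protocol

class ChatModel(Protocol):
    """The only surface the integration layer is allowed to depend on."""
    name: str
    def complete(self, prompt: str) -> str: ...

class EchoModelV1:
    # Hypothetical stand-in for the currently deployed model.
    name = "model-v1"
    def complete(self, prompt: str) -> str:
        return f"[v1] {prompt}"

class EchoModelV2:
    # Hypothetical stand-in for an upgraded model with the same interface.
    name = "model-v2"
    def complete(self, prompt: str) -> str:
        return f"[v2] {prompt}"

def answer(model: ChatModel, question: str) -> str:
    """Integration-layer code: unchanged no matter which model is plugged in."""
    return model.complete(question.strip())

MODELS = {m.name: m for m in (EchoModelV1(), EchoModelV2())}
ACTIVE_MODEL = "model-v1"  # switching models becomes a one-line configuration change
```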

Using Feedback For Improvement

Feedback loops are vital for continuous improvement. These loops capture how users interact with the assistant and use that data to refine responses and identify areas for improvement. Tracking user satisfaction or common mistakes can provide valuable insights for future model training and updates. Tools like MultitaskAI, with its features for managing and comparing different AI models, can be especially helpful for evaluating performance and implementing updates.
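A feedback loop can begin very simply: record a thumbs-up or thumbs-down per response, optionally with a tag describing what went wrong, and summarize the results by model version. The record format and tags below are assumptions for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Optional, Tuple

Feedback = Tuple[str, bool, Optional[str]]  # (model_version, thumbs_up, error_tag)

def summarize_feedback(records: Iterable[Feedback], top_n: int = 3) -> Dict[str, dict]:
    """Per model version: number of ratings, satisfaction rate, and most common error tags."""
    totals: Dict[str, int] = defaultdict(int)
    ups: Dict[str, int] = defaultdict(int)
    tags: Dict[str, Counter] = defaultdict(Counter)
    for version, thumbs_up, tag in records:
        totals[version] += 1
        if thumbs_up:
            ups[version] += 1
        elif tag:
            tags[version][tag] += 1  # only negative feedback carries an error tag
    return {
        v: {
            "ratings": totals[v],
            "satisfaction": ups[v] / totals[v],
            "top_errors": [t for t, _ in tags[v].most_common(top_n)],
        }
        for v in totals
    }
```

Recurring error tags point to where extra training data, prompt changes, or a model update would help most.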

Balancing Progress and Stability

Staying current is important, but so is stability. Always chasing the latest features can cause instability and disrupt your workflow. A sustainable maintenance strategy that balances innovation with stability is key. This means carefully weighing the benefits of new features against potential problems before making changes. Consider your resources and focus on updates that offer the most improvement without overloading your system. This balanced approach keeps your AI assistant a reliable and high-performing tool.