Top performance optimization techniques for fast apps

Need for Speed? Boost Your Application's Performance

Speed and performance can make or break an application. A slow website, laggy mobile app, or unresponsive software quickly drives users away, damages your brand, and hurts revenue. We've come a long way from the days of dial-up internet - users now expect lightning-fast experiences.

At its core, performance optimization is about more than raw speed. The real art lies in balancing speed, code maintainability, scalability, and efficient resource use. Whether you're a developer building web apps or an AI engineer training models, understanding how to optimize your code is essential.

This guide explores 10 powerful techniques to dramatically improve your application's performance. We'll cover proven strategies that can help transform slow systems into snappy, responsive experiences that delight users. Get ready to learn practical optimization approaches you can start using today to unlock your application's full potential.

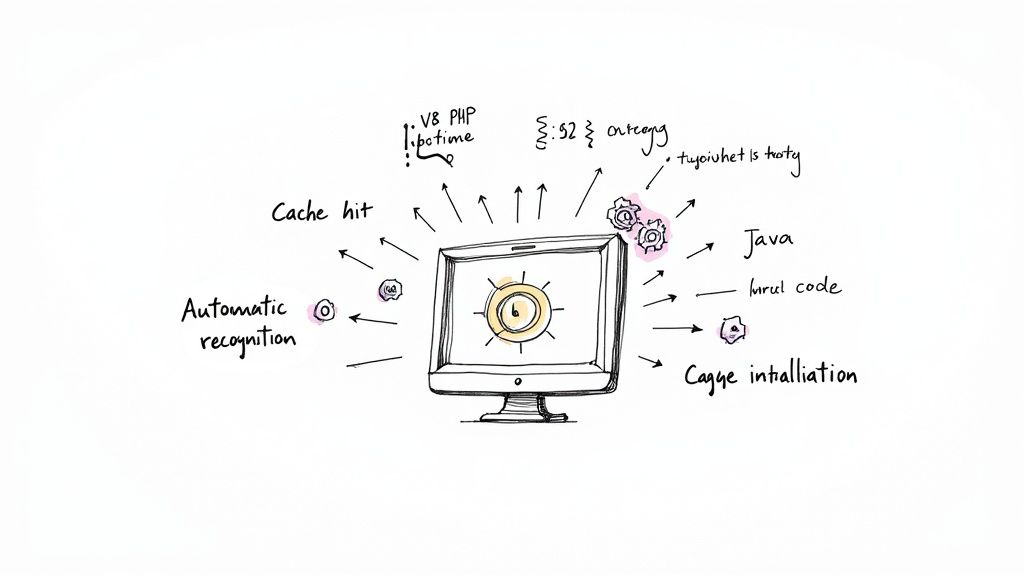

1. Code Caching

Code caching makes programs run faster by storing compiled code so it doesn't need to be compiled again. Think of it like saving your favorite websites - you don't have to download them each time you visit.

How Code Caching Works:

When code runs for the first time, it gets compiled into instructions the computer can understand. Code caching saves these instructions. The next time that same code runs, it can use the saved version instead of compiling again. This simple approach leads to major speed improvements.

Key Features:

- Memory Storage: Keeps compiled code ready in RAM or on disk

- Smart Detection: Automatically finds code that runs often

- Multiple Cache Levels: Works at different layers from CPU to application

- Flexible Implementation: Can be added at hardware or software level

Main Benefits:

- Faster Program Speed: Common operations run much quicker

- Less CPU Work: Computer doesn't waste time recompiling

- Better User Experience: Programs feel more responsive

- Lower Power Use: Less work means less energy needed

Quick Comparison:

| Good Things | Trade-offs |
|---|---|
| Much faster execution | Uses extra memory |
| Reduces CPU load | Can be tricky to update cached code |
| More responsive programs | Not helpful for rarely-used code |
| Saves power | |

Real Examples:

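Major platforms use code caching to boost performance. Google Chrome and Node.js, both built on the V8 engine, cache compiled JavaScript to make web apps faster. PHP uses OPcache to keep precompiled script bytecode in shared memory. The Java Virtual Machine compiles frequently executed code at run time and keeps the result in a code cache for later use.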

Tips for Using Code Caching:

- Check Usage: Make sure the cache is being used effectively

- Set Good Sizes: Pick cache sizes that balance speed and memory use

- Pre-fill Important Code: Load key code into cache at startup

- Keep Cache Fresh: Update cached code when source code changes

Why It Matters:

Code caching is essential for fast, efficient programs. It helps everything from websites to AI systems run better. Any developer who cares about performance should understand and use code caching.

2. Database Query Optimization

Database slowdowns can significantly impact application speed and user experience. Optimizing database queries helps applications run faster, use fewer server resources, and work more efficiently overall. Well-optimized database queries are a foundation of high-performing applications.

Understanding the Concept:

Database query optimization focuses on reducing the resources needed to process and return data. This includes understanding how databases handle queries - from initial parsing to creating execution plans to retrieving and returning results.

Features and Techniques:

- Index Optimization: Creating strategic indexes lets databases quickly locate data without full table scans. Choosing the right indexes is key for performance.

- Query Plan Analysis: Looking at query execution plans reveals how queries work internally. The EXPLAIN command helps spot slow areas that need fixing.

- Smart Denormalization: Sometimes adding redundant data strategically can reduce complex joins and speed up queries, though this requires careful consideration.

- Connection Pooling: Reusing database connections instead of creating new ones reduces overhead and helps applications handle more users.
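To see how an index changes an execution plan, here is a small sketch using Python's built-in `sqlite3` module and SQLite's `EXPLAIN QUERY PLAN`. The `users` table, the `idx_users_email` index, and the `query_plan` helper are made up for illustration. Other databases expose similar information through their own `EXPLAIN` variants, with different output.

```python
import sqlite3

def query_plan(conn, sql, params=()):
    """Return the plan descriptions SQLite reports for a query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[3] for row in rows]  # the fourth column holds the description

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT, name TEXT)")
conn.executemany(
    "INSERT INTO users (email, name) VALUES (?, ?)",
    [(f"user{i}@example.com", f"User {i}") for i in range(1000)],
)

lookup = "SELECT name FROM users WHERE email = ?"
args = ("user500@example.com",)

plan_before = query_plan(conn, lookup, args)  # no index yet: a scan of the table
conn.execute("CREATE INDEX idx_users_email ON users (email)")
plan_after = query_plan(conn, lookup, args)   # the planner can now use the index

print(plan_before)
print(plan_after)
```

The exact wording of the plan text varies between SQLite versions, so treat the printed strings as diagnostic output, not a stable format.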

Pros:

- Faster response times: Queries complete more quickly

- Lower server load: Optimized queries use fewer resources

- Better resource usage: More efficient use of CPU, memory and I/O

- Improved user experience: Pages load faster and feel more responsive

Cons:

- Takes expertise: Requires understanding database internals

- Needs monitoring: Must review and update as usage patterns change

- Storage costs: Indexes take up disk space

- Ongoing work: Regular reviews needed to maintain performance

Real-World Examples:

- Instagram's Database Sharding: Splits data across servers for parallel processing

- Facebook's Query Optimizations: Uses caching and custom database solutions

- Twitter's Performance Work: Optimizes queries to handle millions of real-time interactions

Tips for Implementation:

- Use EXPLAIN to analyze queries: Understand execution plans and find bottlenecks

- Maintain indexes regularly: Keep indexes updated and relevant

- Track query metrics: Monitor execution times to spot slow queries

- Set up connection pooling: Use built-in features to manage connections efficiently
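Connection pooling usually comes from your database driver or framework, but the mechanism is simple enough to sketch. The `ConnectionPool` class below is a hypothetical, minimal version built on `sqlite3` and a thread-safe queue; it is not a substitute for a production pool.

```python
import queue
import sqlite3
from contextlib import contextmanager

class ConnectionPool:
    """A tiny fixed-size pool; real drivers and frameworks usually ship their own."""

    def __init__(self, database, size=2):
        self._idle = queue.Queue(maxsize=size)
        for _ in range(size):
            # check_same_thread=False lets a pooled connection be used from another thread.
            self._idle.put(sqlite3.connect(database, check_same_thread=False))

    @contextmanager
    def connection(self, timeout=5.0):
        conn = self._idle.get(timeout=timeout)  # blocks while every connection is in use
        try:
            yield conn
        finally:
            self._idle.put(conn)  # hand the connection back instead of closing it

    def close(self):
        while not self._idle.empty():
            self._idle.get_nowait().close()

pool = ConnectionPool(":memory:", size=2)
seen = set()
for _ in range(5):
    with pool.connection() as conn:
        seen.add(id(conn))
        conn.execute("SELECT 1").fetchone()
print(len(seen))  # at most 2: five checkouts shared the pooled connections
```

The queue's blocking `get` is what caps concurrency: a caller waits for a free connection instead of opening a new one.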

Industry Growth and Impact:

Database optimization has become increasingly important as data volumes grow. Development communities around systems like PostgreSQL and MySQL have made major contributions to optimization techniques. As applications handle more data, efficient queries remain critical for good performance.

Strong database query optimization skills help developers build faster, more scalable applications that provide excellent user experiences. Understanding these core techniques is essential for working with modern data-driven systems.

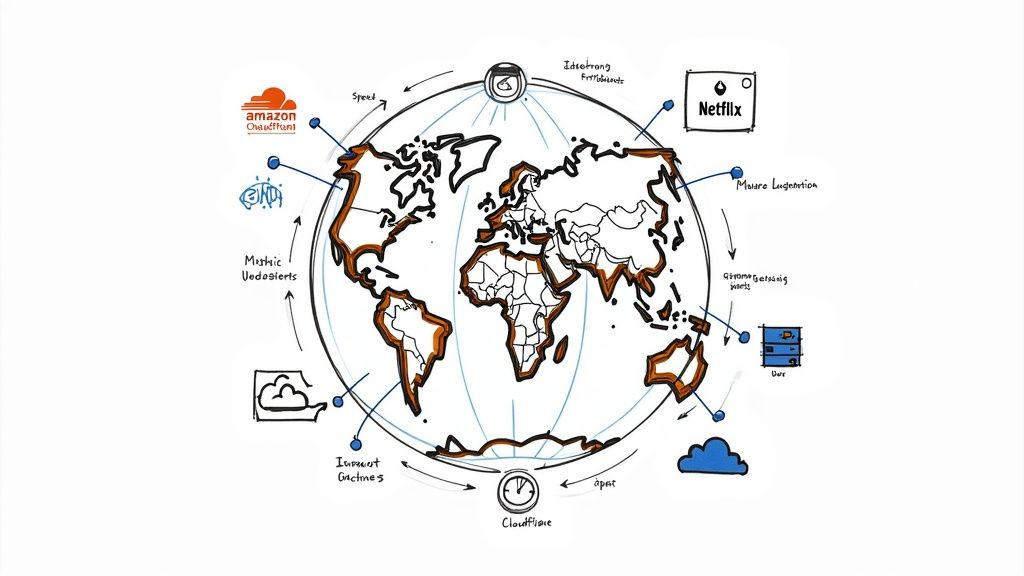

3. Content Delivery Network (CDN)

A Content Delivery Network (CDN) spreads your website content across servers worldwide, delivering it from locations closest to each visitor. This simple but powerful approach cuts load times and makes websites faster for everyone.

Think of a CDN like having copies of your website stored in different cities globally. When someone visits your site, they get content from the nearest server rather than waiting for data to travel long distances. This makes CDNs essential for fast, reliable websites.

Key CDN features include:

- Global Server Network: Servers placed strategically in data centers worldwide

- Smart Routing: Picks the fastest path to deliver content based on network conditions

- Edge Caching: Stores files like images and videos on local servers

- Security: Built-in protection against DDoS and other attacks

Real Examples:

Netflix uses its OpenConnect CDN to stream shows smoothly to millions of viewers. Amazon CloudFront powers many popular websites. Cloudflare speeds up sites while blocking threats.

History:

CDNs emerged as websites needed to serve content faster to growing global audiences. Companies like Akamai and Fastly helped develop CDN technology. While big companies used CDNs first, they're now affordable for businesses of all sizes.

Benefits:

- Faster Loading: Pages load quickly from nearby servers

- Better Uptime: Multiple servers mean better reliability

- Server Efficiency: Main servers handle fewer requests

- Added Security: Protection from common attacks

Challenges:

- Cost: Monthly fees for CDN services

- Setup Work: Takes time to configure properly

- Cache Management: Keeping content updated across servers

- Provider Lock-in: Moving between CDNs can be difficult

Tips for Success:

- Set appropriate cache times to balance speed and freshness

- Create clear processes for updating cached content

- Watch performance metrics to spot issues

- Consider using multiple CDNs for reliability
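Cache times are typically communicated to a CDN through the HTTP `Cache-Control` response header. The sketch below shows one way an origin server might choose that header per request path. The paths, suffix list, and durations are assumptions for illustration, not recommendations from any particular CDN provider.

```python
from pathlib import PurePosixPath

# Assumed policy values for illustration; tune them to how often your content changes.
LONG_LIVED = "public, max-age=31536000, immutable"  # one year, for fingerprinted static files
SHORT_LIVED = "public, max-age=300"                 # five minutes, for pages that change often
NO_STORE = "no-store"                               # never cache, for per-user API responses

STATIC_SUFFIXES = {".js", ".css", ".png", ".jpg", ".svg", ".woff2"}

def cache_control_for(path):
    """Pick a Cache-Control header value for a request path (hypothetical rules)."""
    if path.startswith("/api/"):
        return NO_STORE
    if PurePosixPath(path).suffix.lower() in STATIC_SUFFIXES:
        return LONG_LIVED
    return SHORT_LIVED

for path in ("/static/app.3f9a1c.js", "/index.html", "/api/cart"):
    print(path, "->", cache_control_for(path))
```

Long cache times are safest for files whose names change whenever their content changes, since a new URL bypasses the stale copy without an explicit purge.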

CDNs are a key tool for making websites faster and more reliable. When chosen and set up well, they help create better experiences for users worldwide while keeping sites secure and stable.

4. Load Balancing

Load balancing helps improve system performance by spreading incoming traffic across multiple servers. Rather than overwhelming a single server, requests get distributed so resources are used efficiently and applications stay responsive. It's like having a smart traffic manager that directs users to the servers that can handle their requests best.

Understanding in Practice:

Picture an e-commerce site having a huge sale. Thousands of shoppers flood the website at once to grab deals. A single server would likely crash under all those simultaneous requests. By putting a load balancer in front of multiple servers, each request gets routed to a server with capacity. This keeps the site running smoothly even during peak traffic.

Key Features:

- Distribution Methods: Load balancers use different approaches like round-robin, least connections, or IP hashing to spread traffic effectively

- Server Health Monitoring: The system regularly checks if servers are working properly. If one fails, traffic automatically moves to healthy servers

- Session Handling: For apps needing user session data, requests from the same user go to the same server to maintain continuity

- SSL Processing: Load balancers can handle the SSL encryption/decryption work, taking that load off application servers
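Two of the distribution methods above, round-robin and least connections, can be sketched as follows. The class names and server labels are hypothetical, and health status is set by hand here; a real load balancer would update it from periodic health checks.

```python
import itertools

class RoundRobinBalancer:
    """Hand out servers in rotation, skipping any that are marked unhealthy."""

    def __init__(self, servers):
        self._servers = list(servers)
        self._healthy = set(self._servers)
        self._cycle = itertools.cycle(self._servers)

    def mark_down(self, server):
        self._healthy.discard(server)

    def mark_up(self, server):
        if server in self._servers:
            self._healthy.add(server)

    def next_server(self):
        # Try each server at most once per call so a full outage cannot loop forever.
        for _ in range(len(self._servers)):
            candidate = next(self._cycle)
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy servers available")

class LeastConnectionsBalancer:
    """Send each request to the server with the fewest active connections."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def acquire(self):
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        self.active[server] -= 1

rr = RoundRobinBalancer(["app-1", "app-2", "app-3"])
picks = [rr.next_server() for _ in range(4)]
rr.mark_down("app-2")
after_failure = [rr.next_server() for _ in range(3)]
print(picks, after_failure)
```

Round-robin works well when requests cost about the same; least connections tends to cope better when some requests run much longer than others.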

Benefits:

- Better Uptime: The system keeps running even if some servers fail

- Balanced Server Usage: Traffic gets spread across servers to prevent overload

- Easy Growth: Add or remove servers as needed to handle traffic changes

- System Reliability: Built-in backups keep things running if problems occur

Limitations:

- More Complex Setup: Adding load balancing means more moving parts to manage

- Extra Costs: Good load balancers require investment in hardware or software

- Setup Challenges: Getting the configuration right takes careful planning

- Single Point of Failure: If the load balancer fails, it affects everything (though redundant load balancers help)

Popular Tools:

- AWS Elastic Load Balancing: Amazon's cloud load balancing service

- Google Cloud Load Balancing: Google's cloud offering

- NGINX: A widely used open-source web server and load balancer (NGINX Plus is its commercial edition)

Implementation Tips:

- Pick the Right Method: Test different traffic distribution approaches to find what works best

- Watch Server Health: Set up good monitoring to catch problems early

- Track Performance: Keep an eye on how servers handle their workload

- Plan for Failures: Build backup systems to handle outages smoothly

Industry Growth:

Companies like F5 Networks, NGINX and HAProxy helped make load balancing standard practice. As internet use grew and apps got more complex, good traffic management became essential. The technology evolved from basic hardware to advanced software and cloud services, including built-in features in platforms like Kubernetes.

The Value of Load Balancing:

High availability and consistent performance are must-haves for modern applications. Load balancing delivers both by helping systems handle heavy traffic and stay reliable. For teams building serious applications, load balancing is a core part of good system design.

5. Memory Management Optimization

Memory management is essential for building fast and stable applications. Good memory practices determine how effectively your program allocates, uses, and releases memory resources. When done poorly, memory issues can slow down performance, cause crashes, and waste computing resources - that's why optimizing memory usage should be a key part of any performance improvement plan.

This is particularly important for AI applications and large language models that consume significant memory. Proper memory management helps these resource-intensive systems run efficiently and cost-effectively.

Key Features and Techniques:

- Memory Pooling: Set aside memory blocks for specific objects ahead of time to reduce allocation overhead and memory fragmentation

- Garbage Collection Settings: Choose the right collection algorithm and tune parameters like heap size to optimize memory cleanup

- Leak Detection: Use tools like Valgrind to find and fix memory that isn't properly released

- Resource Cleanup: Properly close file handles, network connections, and database connections when done to maintain stability
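Memory pooling can be illustrated with a small buffer pool that reuses fixed-size `bytearray` objects instead of allocating a new one per request. `BufferPool` is a hypothetical class for this sketch. In a garbage-collected language like Python the gain is usually smaller than in C or C++, so measure before adopting it.

```python
class BufferPool:
    """Reuse fixed-size buffers to avoid repeated allocation (illustrative only)."""

    def __init__(self, buffer_size, max_idle=8):
        self.buffer_size = buffer_size
        self.max_idle = max_idle
        self._idle = []
        self.allocations = 0  # how many buffers were actually created

    def acquire(self):
        if self._idle:
            return self._idle.pop()  # reuse an idle buffer
        self.allocations += 1
        return bytearray(self.buffer_size)

    def release(self, buf):
        # Contents are not cleared; the next user is expected to overwrite them.
        if len(self._idle) < self.max_idle:
            self._idle.append(buf)

pool = BufferPool(64 * 1024)
for _ in range(100):
    buf = pool.acquire()
    buf[0:5] = b"hello"  # stand-in for real work on the buffer
    pool.release(buf)
print(pool.allocations)  # 1: the same buffer served all 100 iterations
```

The `max_idle` cap keeps the pool from holding on to memory after a burst of activity has passed.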

Benefits:

- Less Memory Fragmentation: Smart allocation leads to more efficient memory use

- Faster Performance: Fewer allocation cycles and garbage collection pauses improve speed

- Lower Resource Usage: Efficient memory handling reduces overall memory needs and costs

- Better Stability: Preventing leaks and resource drain helps avoid crashes

Challenges:

- Technical Complexity: Advanced memory techniques require deep expertise

- Learning Curve: Understanding memory management needs solid systems knowledge

- Ongoing Work: Regular monitoring needed to catch issues early

- Platform Differences: Solutions often vary by operating system and language

Real Examples:

- Java's G1 Collector: Java's G1GC shows how modern garbage collection minimizes pauses for responsive apps

- Go's Memory System: Go simplifies memory handling with built-in garbage collection and escape analysis, which lets the compiler keep many short-lived values on the stack

- Redis Memory Tools: Redis uses techniques like LRU/LFU policies and compression for efficient in-memory data

Implementation Tips:

- Use profiling tools to find memory bottlenecks

- Release resources properly with patterns like RAII in C++

- Track memory metrics continuously

- Pick garbage collection settings suited to your needs

By mastering these memory management techniques, developers can build applications that perform better, stay stable, and use resources efficiently - especially important for AI and data-heavy systems.

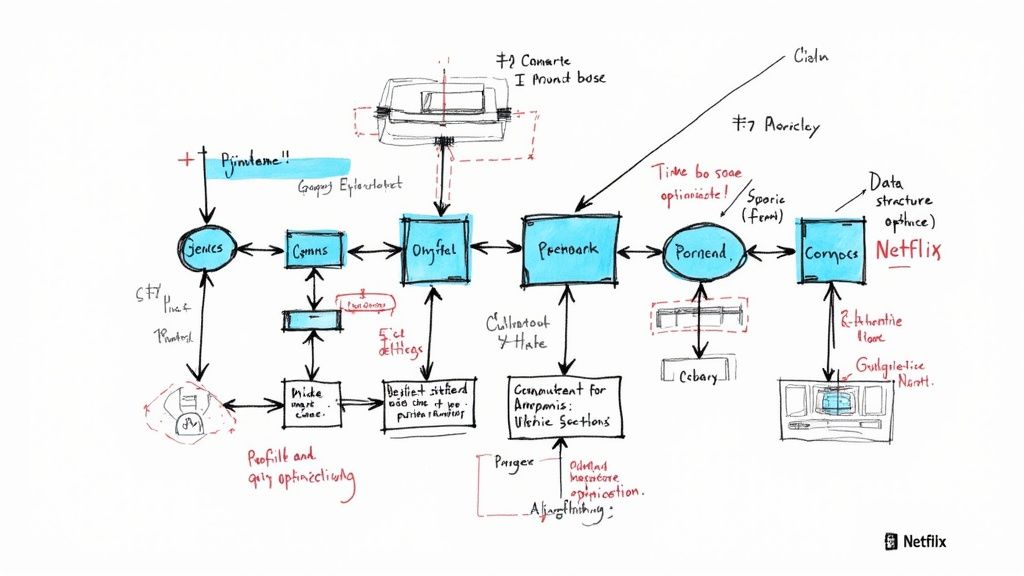

6. Algorithmic Optimization

Efficient code starts with smart algorithmic choices. Rather than just tweaking existing code, algorithmic optimization focuses on fundamentally improving how your code approaches problems through better algorithms and data structures. This can unlock major performance gains that other optimization methods simply can't match.

The process involves analyzing how different algorithms perform in terms of speed and memory usage as data grows larger. For example, switching from a nested loop search (whose running time grows quadratically with input size) to a hash table lookup (which takes roughly constant time per lookup on average) can dramatically improve performance with big datasets. Similarly, choosing the right data structure, like using a linked list instead of an array when you frequently insert at a position you already hold a reference to, can make a huge difference.
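As a concrete sketch of that switch, the two functions below find the items of one list that also appear in another. The function names are made up for this example. Both return the same result, but the first compares every pair while the second builds a hash-based set once and then does fast lookups.

```python
def common_items_nested(a, b):
    """O(len(a) * len(b)): for each item in a, scan b until a match is found."""
    result = []
    for x in a:
        for y in b:
            if x == y:
                result.append(x)
                break
    return result

def common_items_hashed(a, b):
    """O(len(a) + len(b)) on average: build a set once, then do hash lookups."""
    lookup = set(b)
    return [x for x in a if x in lookup]

a = [1, 2, 3, 4, 5, 2]
b = [2, 4, 6]
print(common_items_nested(a, b))  # [2, 4, 2]
print(common_items_hashed(a, b))  # [2, 4, 2]
```

The hashed version spends extra memory on the set, which is the kind of space-for-time trade-off that often pays off as inputs grow.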

Key aspects of algorithmic optimization include:

- Time complexity analysis: Understanding how runtime grows with input size

- Space complexity optimization: Using memory efficiently

- Algorithm selection: Picking the best algorithm for each specific task

- Data structure optimization: Using the most effective data structures

Real-World Examples:

Major tech platforms rely heavily on algorithmic optimization. Google's search rankings, Facebook's news feed personalization, and Netflix's recommendation system all need highly optimized algorithms to handle millions of users efficiently. Computer science pioneers like Donald Knuth and Robert Sedgewick helped establish the foundations for analyzing and designing better algorithms.

Benefits:

- Faster execution: Programs run significantly quicker

- Less resource usage: Programs need less memory and CPU power

- Better scaling: Can handle larger amounts of data smoothly

- Lower costs: Reduced server and energy expenses

Challenges:

- More complex code: Optimized algorithms can be harder to understand

- Needs CS knowledge: Requires solid understanding of algorithms

- Takes time: Designing optimal algorithms isn't quick

- Harder maintenance: Complex algorithms are tougher to debug

Implementation Tips:

- Profile first: Use tools to find the actual bottlenecks before optimizing

- Balance trade-offs: Sometimes using more memory to gain speed makes sense

- Document well: Explain your optimization logic clearly

- Test thoroughly: Verify optimized code works correctly and performs better

For broader insights beyond code optimization, check out: Process Optimization Techniques. Whether you're building AI applications with LLMs or running an online business, understanding algorithmic optimization helps create faster, more efficient solutions.

7. Caching Strategies

Caching is a powerful way to make applications faster by storing frequently accessed data closer to where it's needed. Think of it like keeping your most-used items in a desk drawer instead of walking to a storage room every time. When implemented properly, caching can dramatically speed up data access and create a smoother experience for users.

There are several key approaches to implementing caching effectively:

- Multi-level caching: Setting up caches at different points - in the browser, through CDNs, on servers, and at the database level. This creates a layered system where data can be retrieved from the closest possible location.

- Cache removal policies: Rules for what to remove when the cache gets full, like taking out the least recently used items (LRU), least frequently used (LFU), or first items added (FIFO).

- Distributed caching: For larger applications, spreading cached data across multiple servers using tools like Redis or Memcached to handle more users and stay reliable.

- Cache consistency: Making sure all copies of cached data stay in sync across distributed systems to avoid serving outdated information.
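A least-recently-used removal policy combined with expiration times can be sketched with Python's `OrderedDict`. The `LRUCache` class below is a hypothetical, single-process illustration. Tools like Redis and Memcached implement their own eviction and expiry, with different details.

```python
import time
from collections import OrderedDict

class LRUCache:
    """Bounded cache that evicts the least recently used entry and expires old ones."""

    def __init__(self, capacity, ttl_seconds, clock=time.monotonic):
        self.capacity = capacity
        self.ttl = ttl_seconds
        self._clock = clock
        self._entries = OrderedDict()  # key -> (expires_at, value), least recent first
        self.hits = 0
        self.misses = 0

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None or entry[0] <= self._clock():
            self._entries.pop(key, None)  # drop the entry if it has expired
            self.misses += 1
            return None
        self._entries.move_to_end(key)  # mark as most recently used
        self.hits += 1
        return entry[1]

    def put(self, key, value):
        self._entries[key] = (self._clock() + self.ttl, value)
        self._entries.move_to_end(key)
        if len(self._entries) > self.capacity:
            self._entries.popitem(last=False)  # evict the least recently used entry

cache = LRUCache(capacity=2, ttl_seconds=60)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")     # "a" is now the most recently used entry
cache.put("c", 3)  # the cache is full, so "b" is evicted
print(cache.get("b"), cache.get("a"), cache.get("c"))  # None 1 3
```

The `hits` and `misses` counters give the hit rate, which is a useful number to watch when tuning capacity and expiration times.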

Popular caching tools have made this technology accessible to more developers. Redis offers advanced data structures beyond simple storage. Memcached focuses on high-performance distributed caching. Varnish Cache excels at caching for web applications.

Real-world examples: Online stores cache product details to reduce database load. Social networks cache user profiles and feeds. News sites cache articles for faster page loads. For more advanced browser storage options, check out our guide on IndexedDB.

Benefits:

- Faster data retrieval: Significantly cuts down the time to access information

- Less database strain: Reduces the load on backend systems

- Better experience: Makes applications more responsive

- Room to grow: Helps handle increased traffic

Challenges:

- Complex invalidation: Keeping cached data current requires careful management

- Memory usage: Caches need dedicated memory resources

- Data freshness: Risk of serving outdated information

- Setup difficulty: Requires expertise to implement properly

Key tips for success:

- Set smart expiration times: Match cache duration to how often data changes

- Track performance: Monitor cache hit rates to spot improvement areas

- Plan for updates: Have a clear strategy for updating cached data

- Preload important data: Fill caches with commonly accessed information

For developers and teams building high-performance applications, understanding these caching approaches is essential. The right caching strategy can make applications faster, more reliable, and ready to serve more users.

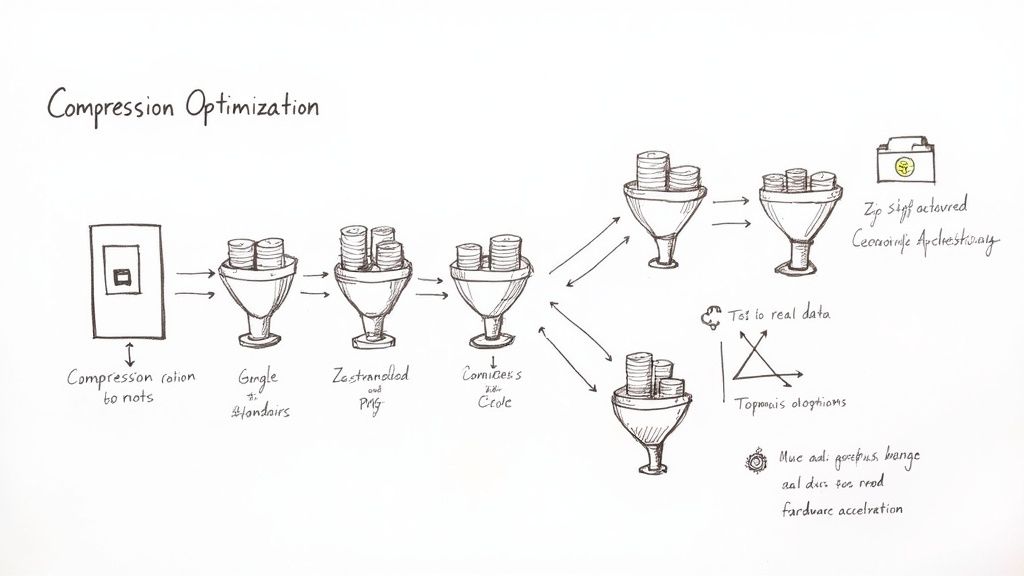

8. Compression Optimization

Data compression makes files smaller while keeping information intact. This helps apps and websites run faster while using less storage space and bandwidth. Let's explore how compression works and why it matters.

When you compress data, smart algorithms remove repetitive patterns to shrink file sizes. The best part? You can decompress files later to get back the original content. Modern compression uses several key approaches:

- Smart Algorithm Selection: Different compression methods work better for specific file types. Popular options include GZIP for web content, Brotli by Google, and Zstandard from Facebook.

- Dynamic Adaptation: Modern compression tools analyze data patterns and adjust their approach to get the best results.

- CPU Acceleration: New processors have built-in features to speed up compression and decompression.

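- Targeted Compression: Focus general-purpose compression on files that benefit most, such as HTML, CSS, JavaScript, JSON, and other text. Formats that are already compressed (JPEG, PNG, video, ZIP archives) gain little from a second pass.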
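
A quick experiment can show why it pays to be selective about what you compress. The sketch below uses Python's standard `zlib` module (the DEFLATE algorithm behind GZIP). The sample payloads are made up for illustration, and random bytes stand in for data that has already been compressed.

```python
import os
import zlib


def compression_ratio(data: bytes, level: int = 6) -> float:
    """Return compressed size divided by original size (lower is better)."""
    return len(zlib.compress(data, level)) / len(data)


# Repetitive text, similar in spirit to HTML, CSS, or JSON payloads.
text_like = b'{"id": 1, "name": "example", "tags": ["a", "b"]}\n' * 2000

# Random bytes behave like already-compressed data: no patterns left to exploit.
already_compressed_like = os.urandom(len(text_like))

text_ratio = compression_ratio(text_like)
random_ratio = compression_ratio(already_compressed_like)

print(f"text-like payload:   {text_ratio:.3f} of original size")
print(f"random-like payload: {random_ratio:.3f} of original size")
```

The text-like payload shrinks to a small fraction of its size, while the random payload stays about the same or even grows slightly. Real files fall somewhere in between, so it is worth measuring your own assets.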

Real Success Stories:

- Google's Brotli usually produces smaller output than GZIP for text assets such as HTML, CSS, and JavaScript, so pages transfer fewer bytes. All major modern browsers support it.

- Facebook uses Zstandard to handle massive amounts of data efficiently. Its fast compression helps them process information quickly.

- Tools like TinyPNG and ImageOptim shrink image files while keeping quality high, helping websites load faster.

Why It Works:

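Compression has come a long way since early methods like Huffman coding, which modern formats still build on. Brotli and Zstandard combine those ideas with pattern matching over larger windows and better statistical modeling, giving a better balance of speed and compression ratio. As websites and apps handle more data, good compression becomes essential.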

Benefits:

- Save Money: Smaller files mean lower storage costs and less hardware needed

- Faster Loading: Compressed data moves through networks more quickly

- Less Data Used: Great for mobile users and limited internet connections

- Better Performance: Reduces strain on servers and network equipment

Challenges:

- Processing Power: Compression needs CPU time, though hardware help reduces this

- Quality Trade-offs: Lossy methods (like JPEG) discard detail, and aggressive settings visibly reduce image quality

- Setup Work: Adding compression to existing systems takes planning

- Tool Requirements: Different compression types need specific software to work

Tips for Success:

- Pick the Right Method: Match compression type to your data and speed needs

- Balance Speed vs Size: Stronger compression takes longer but saves more space

- Use Hardware Help: Modern CPUs can speed up compression tasks

- Test Thoroughly: Try different options with real data to find what works best
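
The speed-versus-size tip is easy to test for yourself. The following sketch compares three `zlib` compression levels on a synthetic log-like payload. The payload is an assumption made for illustration, so swap in real data from your application before drawing conclusions.

```python
import time
import zlib
from typing import Dict, Tuple


def measure(data: bytes, level: int) -> Tuple[int, float]:
    """Compress once and return (compressed size in bytes, seconds taken)."""
    start = time.perf_counter()
    compressed = zlib.compress(data, level)
    elapsed = time.perf_counter() - start
    # Round-trip check: lossless compression must restore the input exactly.
    assert zlib.decompress(compressed) == data
    return len(compressed), elapsed


# Synthetic sample data (an assumption); real payloads will behave differently.
sample = b"".join(
    b"user=%d;action=view;page=/products/%d\n" % (i, i % 97) for i in range(50_000)
)

# Level 1 favors speed, level 9 favors size, level 6 is zlib's usual middle ground.
results: Dict[int, Tuple[int, float]] = {
    level: measure(sample, level) for level in (1, 6, 9)
}

for level, (size, seconds) in results.items():
    share = size / len(sample)
    print(f"level {level}: {size:>8} bytes ({share:.1%}) in {seconds * 1000:.1f} ms")
```

Higher levels generally produce smaller output at the cost of more CPU time. Whether the extra time is worth it depends on how often the data is compressed compared with how often it is transferred or stored.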

By using compression wisely, you can make your apps and websites faster while saving money on storage and bandwidth. The key is choosing the right approach for your specific needs.

9. Parallel Processing

Parallel processing has become a key method for speeding up complex computations. By dividing a big task into smaller independent pieces that can run simultaneously on multiple processors, you can complete work much faster and use your hardware more efficiently.

Think of it like building a house - one person working alone would take a long time, but having multiple builders working on different sections at once gets the job done much quicker. Parallel processing applies this same concept to computer tasks.

Key Features of Parallel Processing:

- Multi-threading: Splitting a program into multiple threads that run at the same time

- Distributed processing: Spreading work across multiple connected computers

- Task parallelization: Breaking problems into independent pieces that can run in parallel

- Load balancing: Efficiently sharing work across available resources
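
As a rough illustration of task parallelization, the sketch below runs several independent tasks on a thread pool using Python's standard `concurrent.futures` module. The `fetch_report` function is hypothetical, and its `time.sleep` call stands in for a network or disk wait.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def fetch_report(report_id: int) -> Dict[str, int]:
    """Hypothetical independent task; the sleep simulates waiting on I/O."""
    time.sleep(0.05)
    return {"id": report_id, "rows": report_id * 10}


report_ids: List[int] = list(range(8))

start = time.perf_counter()
with ThreadPoolExecutor(max_workers=4) as pool:
    # map() spreads the calls across worker threads and yields results in input order.
    reports = list(pool.map(fetch_report, report_ids))
parallel_seconds = time.perf_counter() - start

total_rows = sum(report["rows"] for report in reports)
print(f"fetched {len(reports)} reports ({total_rows} rows) in {parallel_seconds:.2f}s")
```

Run one after another, the eight tasks would spend about 0.4 seconds waiting. With four workers they overlap and finish in a fraction of that. In CPython, threads help most with I/O-bound work like this. For CPU-bound work, `ProcessPoolExecutor` from the same module offers the same `map` interface and runs tasks in separate processes.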

Benefits:

- Faster processing: Completes complex calculations much more quickly

- Better hardware usage: Makes full use of available computing power

- Scaling: Can handle bigger workloads by adding more processors, although the part of a task that must run serially limits the gain

- Quicker results: Essential for time-sensitive applications
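
These gains have a ceiling. Amdahl's law estimates the best possible speedup when only part of a task can run in parallel. The small function below is a sketch of that formula, and the 90% parallel fraction used in the example is an assumed figure chosen for illustration.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Upper bound on speedup when `parallel_fraction` of the work runs on `workers` processors."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


# Assume 90% of the work can be parallelized (an illustrative figure).
for workers in (2, 8, 64):
    print(f"{workers:>2} workers: at most {amdahl_speedup(0.9, workers):.2f}x faster")
```

With 90% of the work parallelizable, 8 workers give at most about a 4.7x speedup, and even 64 workers stay below 10x, because the serial 10% always runs at the same speed.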

Challenges:

- Complex coordination: Requires careful management of parallel tasks

- Race conditions: Errors that occur when multiple threads read and modify shared data without proper synchronization

- Hard to debug: Finding errors in parallel code is tricky

- Coordination overhead: Managing threads takes extra processing power

Real Examples:

- Hadoop MapReduce: A system for processing huge datasets across computer clusters

- Apache Spark: A fast data processing system that runs computations in memory

- CUDA: NVIDIA's platform for using GPUs to speed up general computing

History and Growth:

The rise of multi-core processors and distributed computing drove wider adoption of parallel processing. Growing data volumes led to frameworks like Hadoop and Spark. GPU advances and CUDA made parallel processing more accessible across many fields.

Tips for Using Parallel Processing:

- Pick the right tasks: Focus on independent, computation-heavy work

- Manage shared data carefully: Use proper locks and controls to prevent errors

- Watch resource usage: Monitor active threads and system load

- Test extensively: Check thoroughly for concurrency problems
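
Here is a minimal sketch of managing shared data with a lock, using Python's standard `threading` module. The `SafeCounter` class is an illustrative name, not a library type.

```python
import threading


class SafeCounter:
    """Counter whose updates are serialized by a lock (illustrative)."""

    def __init__(self) -> None:
        self._value = 0
        self._lock = threading.Lock()

    def increment(self) -> None:
        # The read-modify-write below must not interleave with other threads.
        with self._lock:
            self._value += 1

    @property
    def value(self) -> int:
        with self._lock:
            return self._value


def worker(counter: SafeCounter, times: int) -> None:
    for _ in range(times):
        counter.increment()


counter = SafeCounter()
threads = [threading.Thread(target=worker, args=(counter, 10_000)) for _ in range(8)]
for thread in threads:
    thread.start()
for thread in threads:
    thread.join()

print(counter.value)  # 80000: every increment is counted
```

Without the lock, two threads could read the same old value and both write back the same result, silently losing an update. Keeping the locked region as small as possible limits the coordination overhead.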

Parallel processing earns its place here because it lets us harness modern hardware's full power. This makes it vital for handling complex tasks in AI, data science, scientific modeling and high-performance computing.

10. Asset Optimization

Asset optimization helps create faster-loading websites by reducing file sizes and improving delivery of images, scripts, and stylesheets. When websites load quickly, visitors stay longer and are more likely to convert. That's why it's essential to optimize all your web assets.

Why it matters:

Slow sites drive visitors away and hurt search rankings. By making assets load faster, you keep users engaged and happy. This directly impacts your site's success and bottom line.

Key Features and Benefits:

- Image Optimization: Using formats like WebP and responsive images reduces file sizes while keeping quality. This makes pages load much faster.

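- Script and CSS Optimization: Minification removes extra whitespace and comments from code files (and, for JavaScript, can shorten variable names), making them smaller to download and faster to parse.

- Resource Bundling: Combining multiple files into one reduces server requests, making pages load faster. The benefit is largest over HTTP/1.1; with HTTP/2, a few well-split bundles often work better than one very large file.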

- Lazy Loading: Loading images only when needed saves bandwidth and speeds up initial page loads.
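
To show what minification does, here is a deliberately naive CSS minifier written in Python. It is a sketch only: it assumes the stylesheet has no comment markers or significant whitespace inside string literals or `url()` values, and it does not handle every selector edge case. Real projects should use the minifier built into their build tool.

```python
import re


def minify_css(css: str) -> str:
    """Naive CSS minifier: strips comments and collapses whitespace (illustrative only)."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # drop /* ... */ comments
    css = re.sub(r"\s+", " ", css)                        # collapse runs of whitespace
    css = re.sub(r"\s*([{}:;,])\s*", r"\1", css)          # trim spaces around punctuation
    css = css.replace(";}", "}")                          # last semicolon in a block is optional
    return css.strip()


original = """
/* Primary button */
.button {
    color: #ffffff;
    background: #0066cc;   /* brand blue */
    padding: 8px 16px;
}
"""

minified = minify_css(original)
print(minified)
print(f"{len(original)} characters -> {len(minified)} characters")
```

For this sample, the output is a single line, `.button{color:#ffffff;background:#0066cc;padding:8px 16px}`, which the browser interprets the same way as the original.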

Pros:

- Faster Sites: Less data means quicker loading times

- Lower Data Usage: Helps mobile users save data

- Better Experience: Quick loading keeps users happy

- Reduced Costs: Less bandwidth means lower hosting bills

Cons:

- Complex Setup: Takes time to configure optimization tools

- Ongoing Work: Need to maintain optimized files

- Harder Debugging: Minified code is tougher to fix

- Tool Lock-in: Relying on specific optimization tools can make it harder to switch later

Real Examples:

Online stores see big gains from image optimization - faster pages lead to more sales. News and content sites load much quicker after optimizing scripts and styles.

History and Growth:

Asset optimization became crucial as mobile browsing grew. Tools like webpack, Grunt, and Gulp made it easier. Google Lighthouse now checks for optimized assets.

Practical Tips:

- Use build tools to automate optimization

- Add lazy loading for below-fold content

- Track asset sizes regularly

- Run performance checks often
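
Tracking asset sizes can be automated with a small script. The sketch below walks a build directory and flags files that exceed a size budget. The budget values and the `dist` directory name are assumptions for illustration, so adjust both to your project.

```python
from pathlib import Path
from typing import Dict, List, Tuple

# Hypothetical per-extension budgets in bytes; tune these for your own project.
BUDGETS: Dict[str, int] = {".js": 200_000, ".css": 50_000, ".png": 150_000}


def find_oversized_assets(
    root: Path, budgets: Dict[str, int] = BUDGETS
) -> List[Tuple[Path, int, int]]:
    """Return (path, size, budget) for every file larger than its extension's budget."""
    oversized = []
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue
        budget = budgets.get(path.suffix.lower())
        if budget is None:
            continue  # no budget defined for this file type
        size = path.stat().st_size
        if size > budget:
            oversized.append((path, size, budget))
    return oversized


if __name__ == "__main__":
    build_dir = Path("dist")  # assumed build output directory
    if build_dir.is_dir():
        for path, size, budget in find_oversized_assets(build_dir):
            print(f"{path}: {size} bytes exceeds budget of {budget} bytes")
```

Running a check like this in a continuous integration pipeline catches size regressions before they reach users.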

Examples:

- WordPress has many optimization plugins

- React apps are typically minified and bundled by their build tooling for production

- Angular CLI applies built-in optimizations in production builds

Asset optimization helps deliver faster sites that keep users happy. Focus on making your assets efficient and you'll see improved engagement, rankings, and results.

10-Point Performance Optimization Comparison

| Technique | Implementation Complexity (🔄) | Resource Requirements (⚡) | Expected Outcomes (📊) | Ideal Use Cases (💡) | Key Advantages (⭐) |
|---|---|---|---|---|---|
| Code Caching | Moderate complexity; cache invalidation challenges | Medium – requires additional memory overhead | Improved execution time and lower CPU utilization | Frequently executed and hot code paths | Significantly reduces execution time |
| Database Query Optimization | High complexity; ongoing maintenance and reoptimization | Medium to high – needs extra storage (indexes) | Faster query execution and reduced server load | Data-intensive applications and large databases | Enhanced responsiveness and resource utilization |
| Content Delivery Network (CDN) | Moderate to high complexity; setup and cache invalidation challenges | High – involves distributed infrastructure and cost | Reduced latency, improved availability, and enhanced security | Global content delivery and media streaming | Improved speed, reduced origin load, and added security benefits |
| Load Balancing | High complexity; configuration and potential failover issues | High – additional hardware/software infrastructures | Optimal traffic distribution, high availability, and scalability | Distributed server environments and high-traffic systems | Scalability, fault tolerance, and improved resource utilization |
| Memory Management Optimization | High complexity; requires deep technical knowledge and ongoing monitoring | Low – aims to lower overall memory usage | Reduced memory fragmentation and better overall application performance | Applications with heavy memory usage and performance-critical tasks | Improved stability and lower resource consumption |
| Algorithmic Optimization | Moderate to high complexity; involves intricate algorithm selection | Low – primarily focuses on code efficiency | Enhanced performance and scalability through better algorithms | Performance-critical computations and data processing tasks | Lower operational costs and improved efficiency |
| Caching Strategies | High complexity; managing multi-level caching and invalidation strategies | Medium – may incur memory overhead | Faster data access and reduced database load | Systems with high read frequency and heavy data access | Improved user experience and scalability |
| Compression Optimization | High complexity; tuning algorithms and ensuring format compatibility | Medium – increased CPU usage during compression | Reduced storage costs, faster transmission, and lower bandwidth usage | Data transmission and storage optimization | Efficient resource utilization and lower bandwidth consumption |
| Parallel Processing | High complexity; requires careful coordination and synchronization | High – necessitates multiple processors/cores | Improved performance with reduced processing time | Compute-intensive tasks and big data processing | Scalability and better resource utilization |
| Asset Optimization | Moderate complexity; involves build process tooling and maintenance overhead | Low – optimized assets decrease overall resource load | Faster page loads, reduced bandwidth usage, and smoother user experiences | Web and mobile applications seeking faster asset delivery | Enhanced user experience and reduced hosting costs |

Supercharge Your App: Performance Optimization Awaits!

We've covered many strategies to boost app performance in this guide - from code caching and database optimization to using CDNs and implementing load balancing. We've also explored key techniques like memory management, algorithmic enhancements, caching approaches, compression methods, parallel processing, and asset optimization. These tools can help transform slow applications into high-performing systems.

To get the best results, take a methodical approach. Start by using profiling tools to find the actual bottlenecks in your app. Then choose optimization techniques that will have the biggest impact within your technical constraints. For example, if database queries are causing delays, focus first on query optimization and caching. For slow page loads, look at adding a CDN, optimizing assets, and using compression. When specific code functions are slowing things down, that's when to optimize algorithms. Good memory management is especially important for resource-heavy applications to prevent leaks.

Remember that performance tuning is an ongoing process. Keep monitoring your app's speed and responsiveness over time. Be ready to adjust your optimization strategy as usage patterns change and new technical capabilities emerge. Stay current with developments in areas like hardware acceleration that could benefit your app.

Key Takeaways:

- Use profiling tools: Find the real bottlenecks before making changes

- Target high-impact areas: Focus efforts where they'll make the most difference

- Monitor consistently: Keep tracking performance and adjusting optimizations
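
As a starting point for the first takeaway, the sketch below profiles a small piece of code with Python's built-in `cProfile` and `pstats` modules. The `handle_request` and `slow_lookup` functions are made-up stand-ins for real application code, with an inefficiency planted on purpose.

```python
import cProfile
import io
import pstats
from typing import List


def slow_lookup(items: List[int], targets: List[int]) -> List[int]:
    """Deliberately inefficient: each membership test scans the whole list."""
    return [target for target in targets if target in items]


def handle_request() -> List[int]:
    """Hypothetical request handler that hides the slow call."""
    items = list(range(5_000))
    targets = list(range(0, 10_000, 5))
    return slow_lookup(items, targets)


profiler = cProfile.Profile()
profiler.enable()
result = handle_request()
profiler.disable()

# Collect the report in a string and show the top entries by cumulative time.
report = io.StringIO()
stats = pstats.Stats(profiler, stream=report)
stats.sort_stats("cumulative").print_stats(5)
print(report.getvalue())
```

The report should point at `slow_lookup` as the place where time is spent, which suggests an algorithmic fix such as converting `items` to a set. Measuring first keeps the effort focused on the code that actually matters.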

Performance optimization requires sustained attention, not just one-time fixes. By applying these principles thoughtfully and staying flexible, you can keep your app running smoothly and meeting user needs. The effort invested in optimization pays off through better user satisfaction and app success. Start optimizing systematically and you'll see the results in your app's performance.