OpenAI Model Pricing: 8 Key Models Compared

Compare the costs and features of eight key OpenAI models to choose the best fit for your needs.

Decoding OpenAI's Pricing Structure

Understanding how to navigate OpenAI's pricing can make or break your AI project's budget. As these language models have grown from simple to sophisticated, their pricing models have evolved significantly too. Good pricing needs to work for both developers with modest budgets and large companies deploying AI at scale. This balance shapes everything about how OpenAI charges for its technology.

When you're planning an AI project, knowing what you'll pay is essential. The choice between GPT-3.5 and GPT-4 could mean a difference of hundreds or thousands of dollars in monthly costs, depending on your usage patterns. Each model has its own pricing structure based on factors like token usage, context length, and capabilities.

In this guide, we'll break down the cost structures for eight key OpenAI models in plain language. You'll learn what factors affect pricing and how to make smart decisions that optimize your spending without sacrificing performance. By the end, you'll have the knowledge to confidently choose the right model that fits both your technical needs and your budget constraints.

Whether you're building a simple chatbot or developing an enterprise-level AI solution, understanding these pricing details will help you avoid unexpected costs and make the most of OpenAI's powerful technology. Let's dive into the specifics so you can plan your next AI project with confidence.

1. GPT-4o

GPT-4o represents OpenAI's most advanced multimodal model to date. What makes it stand out is its ability to process text, images, and audio simultaneously, creating opportunities that simply weren't possible with earlier models. Its reasonable pricing combined with these enhanced capabilities makes it the top choice on our list of OpenAI models when considering value for money.

The multimodal capabilities of GPT-4o open up exciting possibilities. Digital marketers can now generate compelling ad copy based on product images, dramatically cutting down on time spent crafting marketing materials. Software developers can build more intelligent applications, like accessibility tools that provide detailed descriptions of images for visually impaired users. For entrepreneurs and indie developers, GPT-4o creates opportunities to develop entirely new categories of products and services.

From a technical perspective, GPT-4o comes with a 128K context window as standard. It launched at $5 per million tokens for input and $15 per million tokens for output, half the GPT-4 Turbo rates, making it more affordable than previous GPT-4 models. Its response times are also noticeably faster, improving the user experience for real-time applications. You might want to check out our pricing at Multitask AI to see how we compare to OpenAI's offerings.

Pros:

Highest capability model in the GPT lineup: Its ability to process text, images, and audio simultaneously enables previously impossible applications.

More cost-effective than previous GPT-4 models: The lower pricing makes it more accessible for a wider range of projects.

Handles complex reasoning and creative tasks well: Whether you need code generation or creative writing, GPT-4o delivers impressive results.

Supports vision and audio processing: This enables applications like image captioning, audio transcription, and much more.

Cons:

More expensive than GPT-3.5 models: If your application only needs text processing, GPT-3.5 might be more cost-effective.

Higher token costs for longer contexts: Every token you send is billed, so filling more of the context window can significantly increase your costs.

Higher latency than smaller models: While faster than its predecessors, it still responds more slowly than simpler models.

Not available for fine-tuning yet: This limits your ability to customize the model for specific use cases.

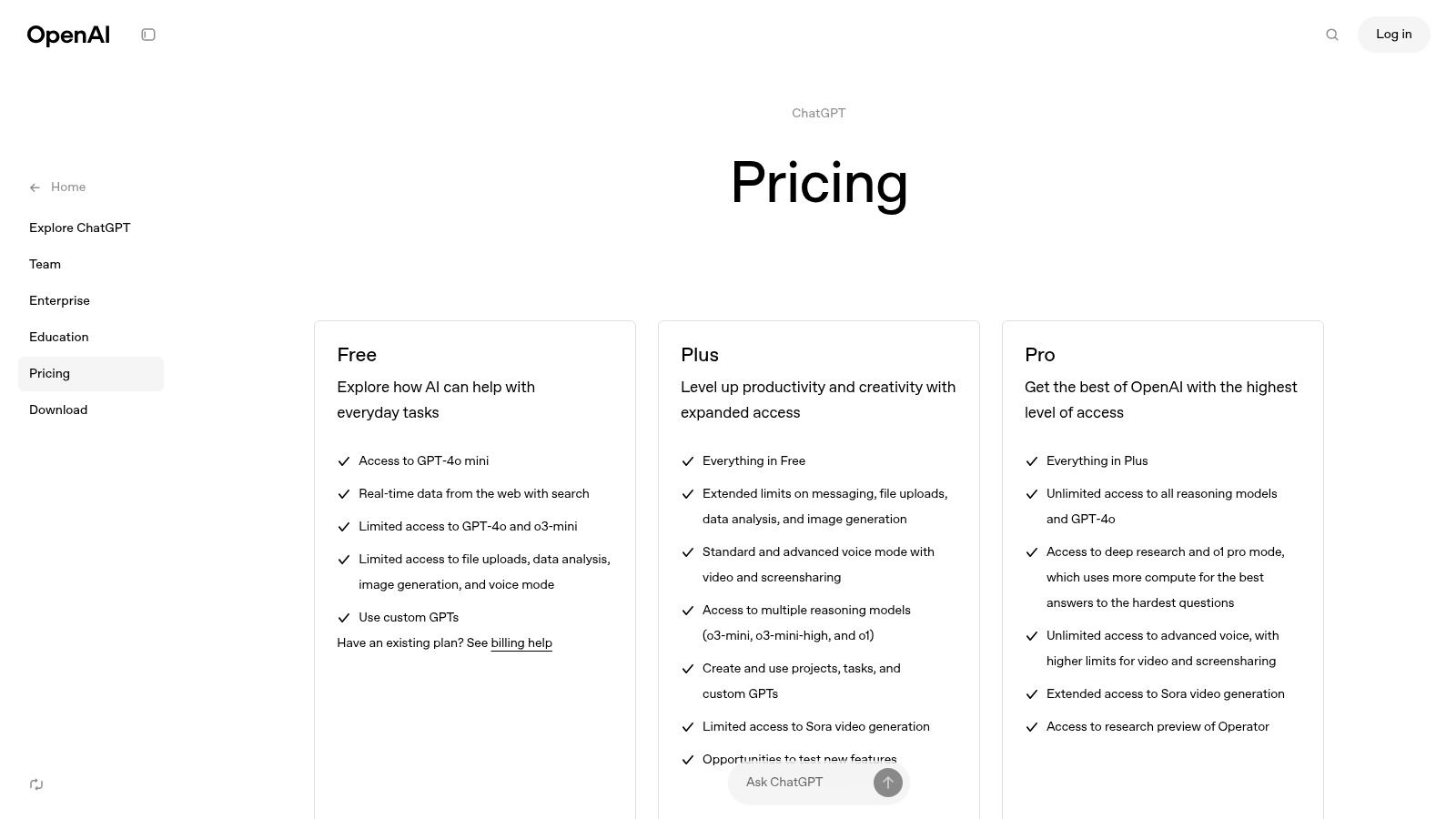

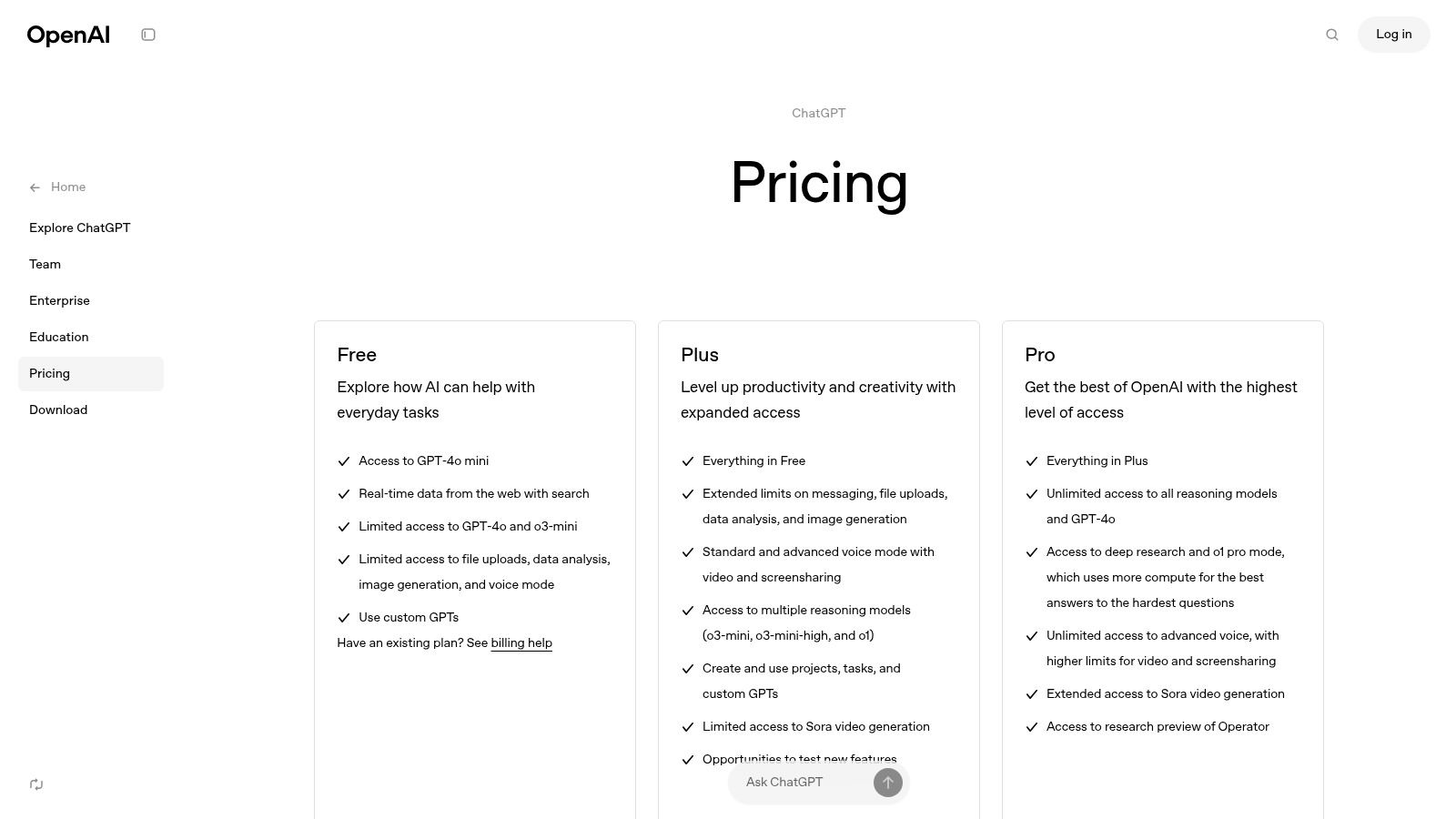

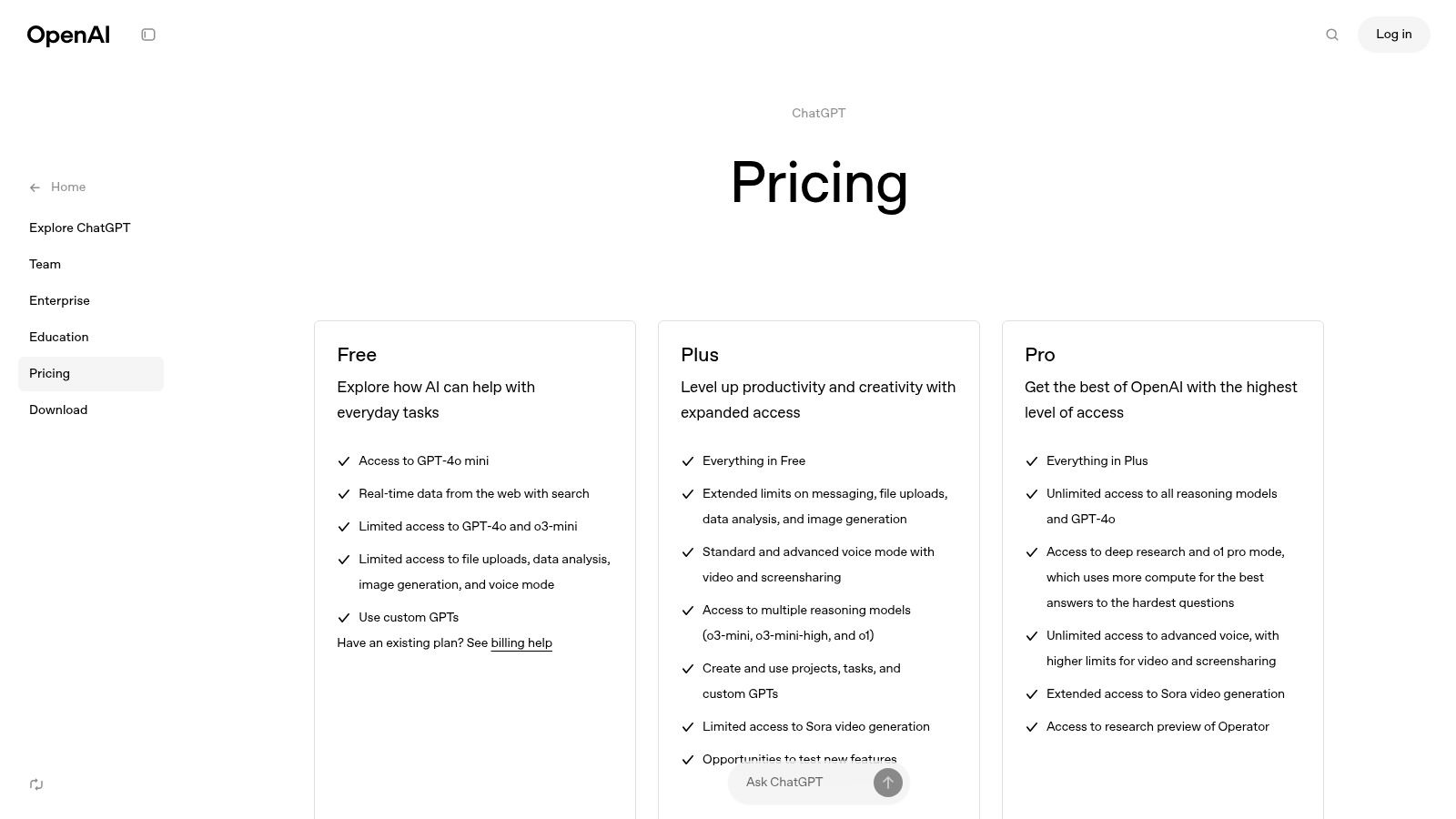

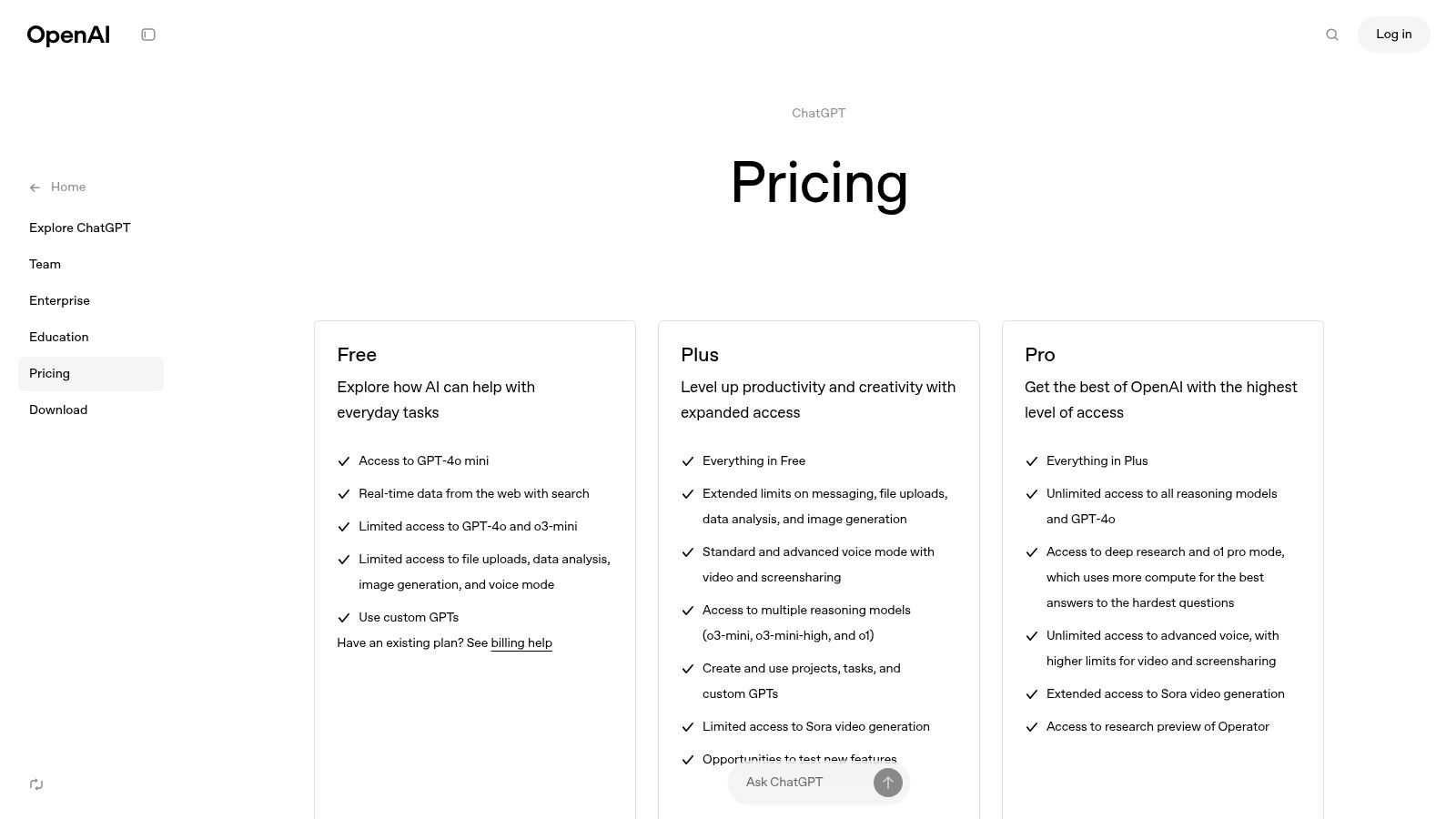

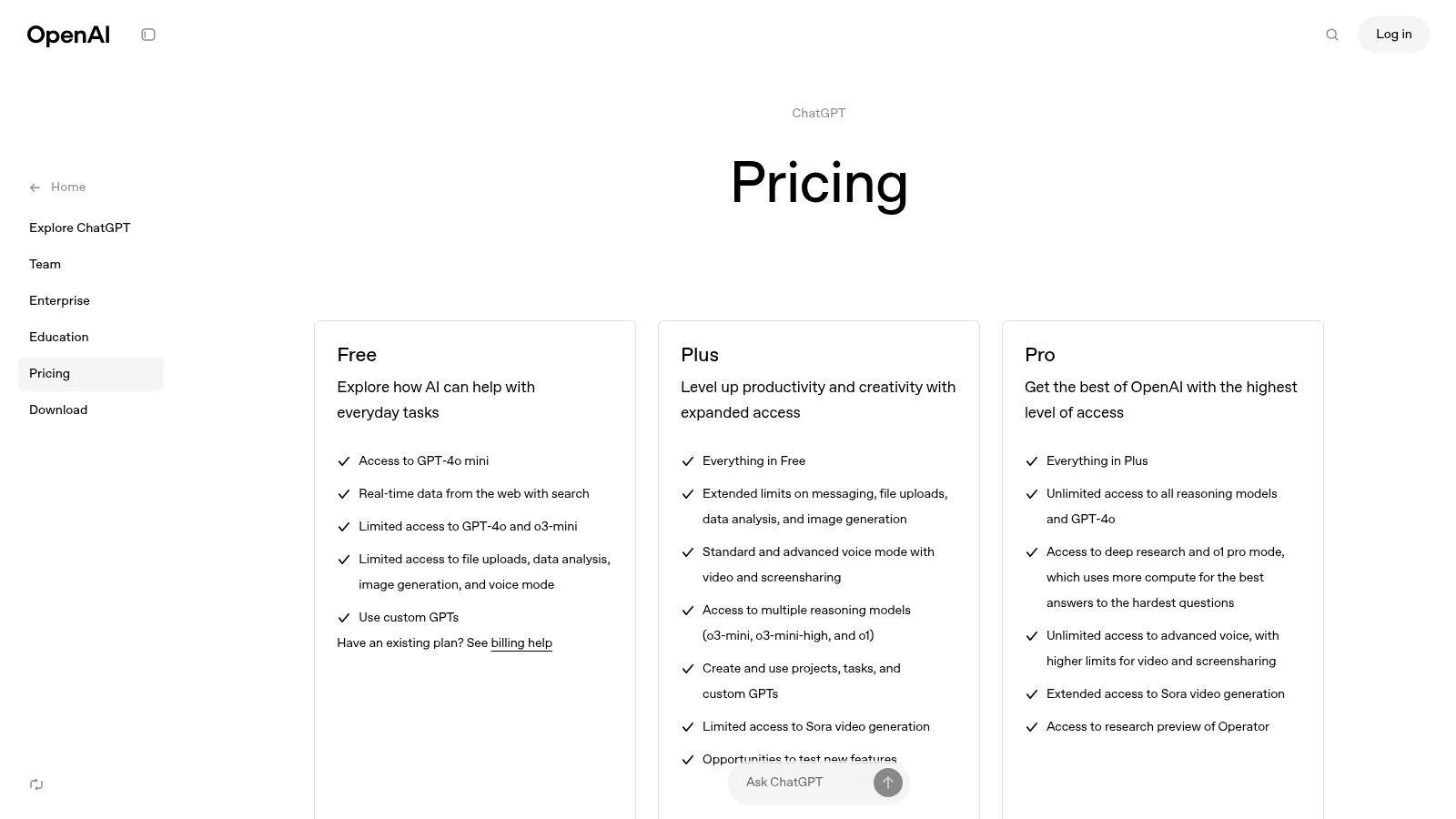

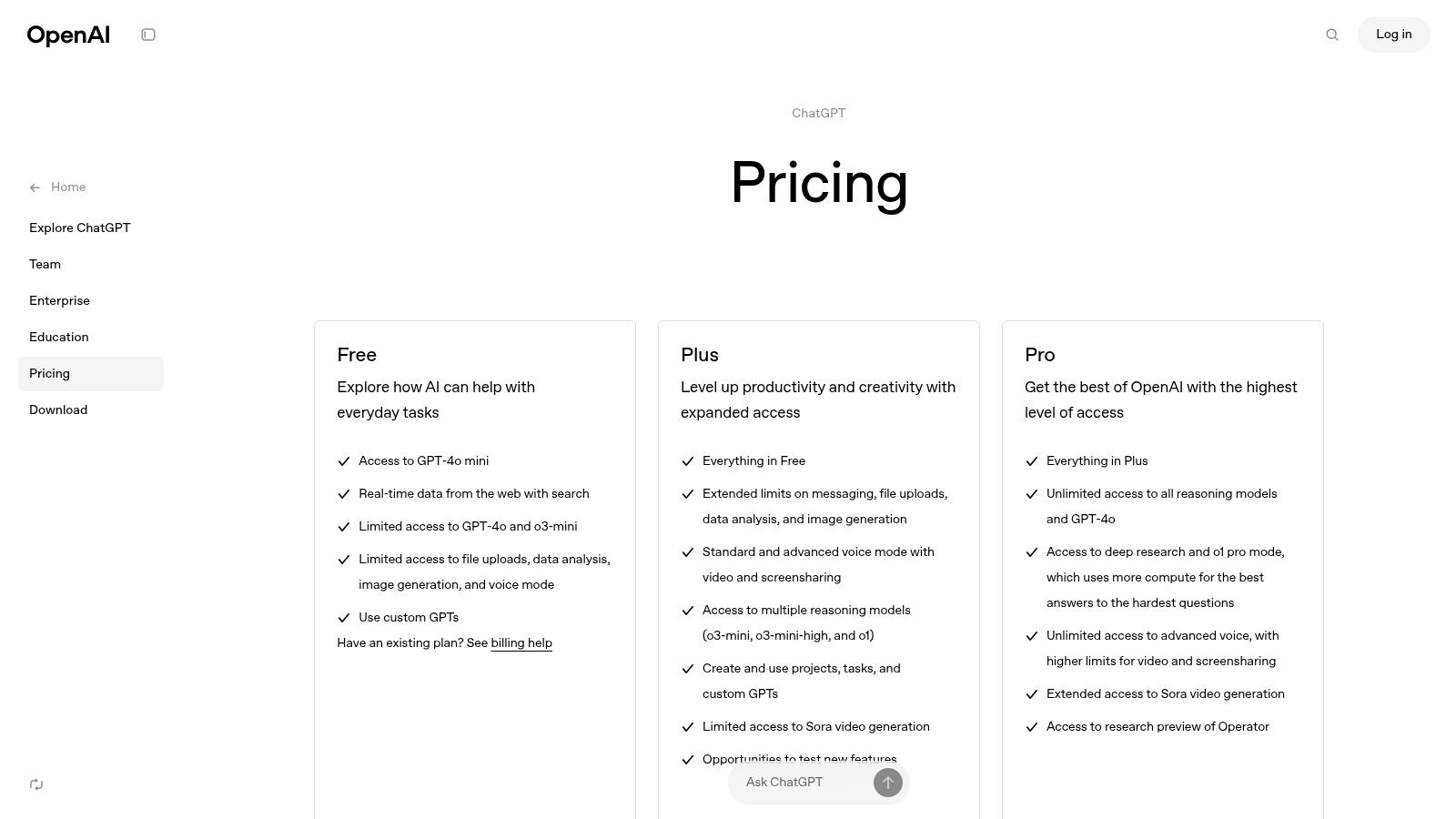

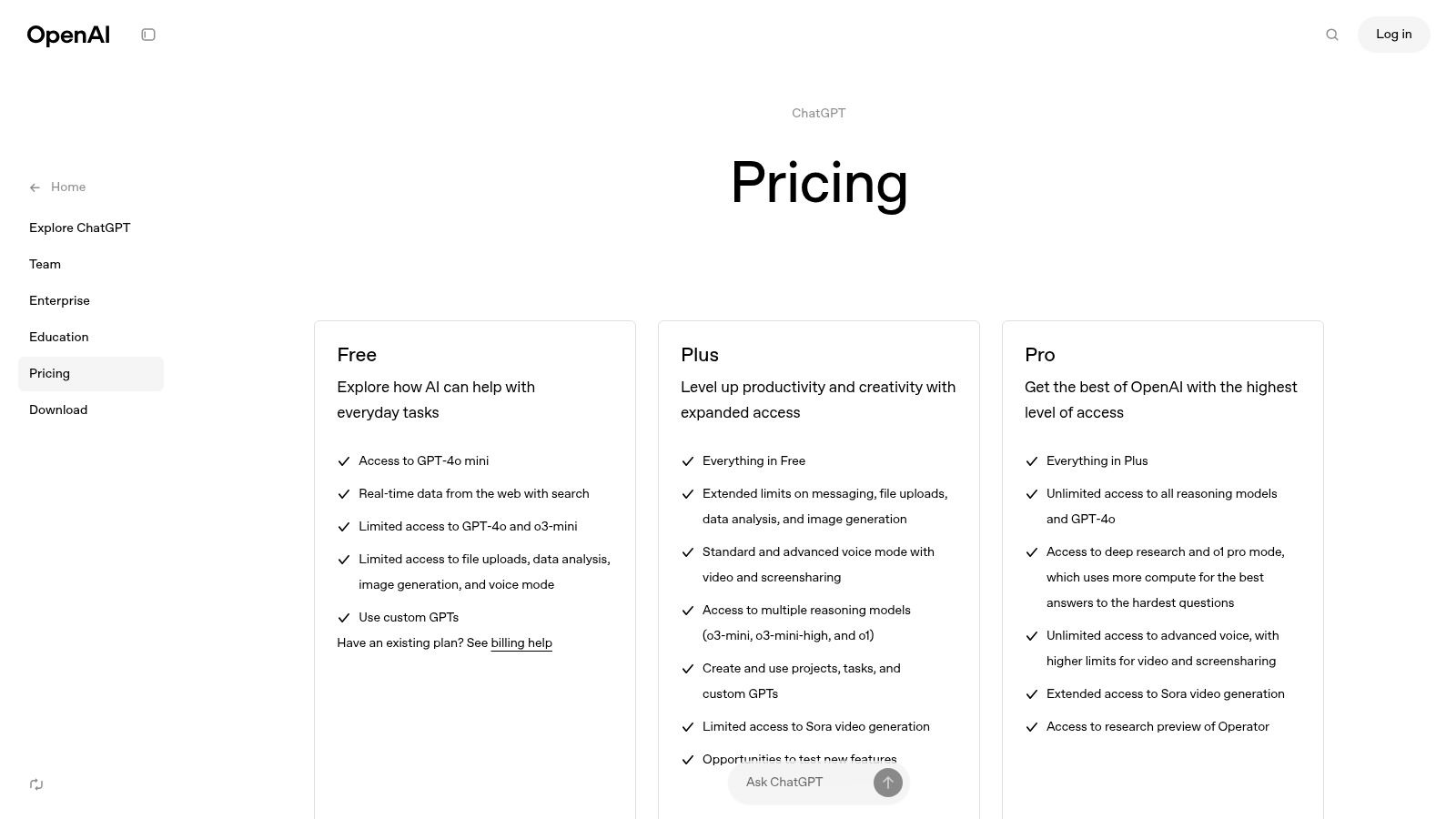

For more information about pricing and availability, check out the official OpenAI pricing page.

While GPT-4o represents a major advancement in AI capabilities, the right model for your project depends on your specific requirements and budget constraints. For applications requiring advanced reasoning, creative content generation, and multimodal processing, GPT-4o is the strongest choice on this list. For projects with tighter budgets or those focused primarily on text processing, GPT-3.5 might be more suitable.

2. GPT-4 Turbo

GPT-4 Turbo stands out in the AI landscape as a powerful yet accessible language model. While newer options like GPT-4o have emerged, GPT-4 Turbo remains relevant thanks to its balance of performance and affordability, which keeps it practical for many applications.

One of its most impressive features is the 128K context window – a game-changer for handling extensive text. This means you can analyze entire legal documents, perform comprehensive code reviews, or process complete ebooks in a single prompt. The expanded context allows the model to maintain coherence across large volumes of text, something earlier models couldn't achieve.

Beyond its context capabilities, GPT-4 Turbo delivers robust reasoning skills that make it ideal for complex tasks requiring logical thinking and problem-solving. This makes it valuable for developing advanced chatbots, creating sophisticated content, and building AI research assistants. The addition of vision capabilities further expands its potential, enabling applications that can process and understand both images and text, creating opportunities for innovative multi-modal projects.

Pricing and Technical Requirements:

GPT-4 Turbo offers competitive pricing:

Input: $10/million tokens

Output: $30/million tokens

This makes it much more affordable than the original GPT-4 while delivering better performance than GPT-3.5 models. However, it's worth noting that it still costs more than GPT-3.5-turbo. When selecting a model, developers should weigh cost against performance requirements for their specific project needs.
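To make the per-token rates concrete, here is a minimal sketch that turns them into a per-request cost. The function name and the example token counts are illustrative assumptions; only the $10 and $30 rates come from the list above.

```python
# Rates from the list above, in US dollars per million tokens.
GPT4_TURBO_INPUT_PER_M = 10.00
GPT4_TURBO_OUTPUT_PER_M = 30.00


def request_cost(input_tokens: int, output_tokens: int,
                 input_rate: float = GPT4_TURBO_INPUT_PER_M,
                 output_rate: float = GPT4_TURBO_OUTPUT_PER_M) -> float:
    """Return the cost in dollars of one request at per-million-token rates."""
    return (input_tokens * input_rate + output_tokens * output_rate) / 1_000_000


# Hypothetical request: a 3,000-token prompt that yields a 500-token answer.
cost = request_cost(3_000, 500)
print(f"${cost:.4f}")  # prints $0.0450
```

Output tokens cost three times as much as input tokens here, so capping response length is often the quickest way to lower a bill.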

Comparison with Similar Tools:

Compared to GPT-3.5-turbo, GPT-4 Turbo provides better reasoning abilities and a much larger context window, though at higher cost and with slower responses. GPT-4o is gradually taking over in cutting-edge applications, but GPT-4 Turbo's current pricing and availability still make it a practical choice for many text and image projects.

Implementation/Setup Tips:

Getting started with GPT-4 Turbo is straightforward through the OpenAI API. Developers can use existing libraries and SDKs in various programming languages to quickly integrate the model. Remember to consider context window limitations when designing prompts, and optimize prompt structure to minimize token usage and control costs.
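One way to respect the context window before sending a prompt is a rough size check. The sketch below assumes the common rule of thumb of about four characters per token for English text; a real tokenizer gives exact counts, and the 4,000-token reply reserve is a hypothetical default rather than an OpenAI setting.

```python
CONTEXT_WINDOW = 128_000   # GPT-4 Turbo window cited in this section
CHARS_PER_TOKEN = 4        # rough rule of thumb for English text (assumption)


def estimate_tokens(text: str) -> int:
    """Crude token estimate: character count divided by four, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(prompt: str, reserved_for_output: int = 4_000) -> bool:
    """True if the estimated prompt size still leaves room for the reply."""
    return estimate_tokens(prompt) + reserved_for_output <= CONTEXT_WINDOW


document = "word " * 50_000          # stand-in for a long document (250,000 characters)
print(estimate_tokens(document))     # rough token count for the prompt
print(fits_in_context(document))     # whether it can be sent in one request
```

Because the estimate is approximate, it is safer to leave a margin than to fill the window to the last token.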

Pros:

Large context window for handling lengthy documents

Strong reasoning capabilities

Vision functionality included

More affordable than original GPT-4

Cons:

More expensive than GPT-3.5 models

Slower than GPT-3.5 models

Being replaced by GPT-4o in many applications

Higher latency than newer models

Website: OpenAI Pricing

GPT-4 Turbo earns its place on this list by offering an excellent balance of performance and cost. It's a solid choice for developers who need the capabilities of a powerful language model with extended context and vision features without paying premium prices for the very latest models. Its reasonable cost and easy-to-use API make it valuable for a wide range of AI applications.

3. GPT-3.5 Turbo

GPT-3.5 Turbo has earned its place as the practical workhorse in OpenAI's model lineup. It offers an impressive balance of performance and affordability, making it the default choice for many applications. For businesses and developers who need a reliable language model without breaking the bank, GPT-3.5 Turbo provides an excellent foundation.

What makes GPT-3.5 Turbo so versatile?

Its true strength comes from handling everyday NLP tasks with ease. It excels at creating chatbots, generating content (like blog posts or emails), writing code, translating languages, and summarizing text. While it doesn't match GPT-4's capabilities, its faster performance and lower cost make it perfect for many real-world projects.

Pricing and Technical Details:

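Input: $0.0005 / 1,000 tokens ($0.50 per million tokens)

Output: $0.0015 / 1,000 tokens ($1.50 per million tokens). A token is roughly four characters of English text, or about three-quarters of a word.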

Context Window: 16,000 tokens by default (This determines how much text the model can consider during a conversation)

Knowledge Cutoff: September 2021 (The model doesn't know about events after this date)

Fast Response Times: Noticeably quicker than GPT-4, reducing wait times in applications
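To see how the price gap plays out at volume, the following sketch compares a month of traffic on GPT-3.5 Turbo with the same traffic on GPT-4 Turbo, using the rates quoted in this article. The workload (10,000 requests a day, 500 input and 200 output tokens each) is a made-up example, and the function name is an assumption.

```python
# Prices quoted in this article, normalized to dollars per million tokens.
PRICES_PER_M = {
    "gpt-3.5-turbo": (0.50, 1.50),    # $0.0005 and $0.0015 per 1,000 tokens
    "gpt-4-turbo": (10.00, 30.00),    # quoted per million tokens
}


def monthly_cost(model: str, requests_per_day: int,
                 input_tokens: int, output_tokens: int, days: int = 30) -> float:
    """Estimate a month of spend for a steady, uniform workload."""
    input_rate, output_rate = PRICES_PER_M[model]
    per_request = (input_tokens * input_rate + output_tokens * output_rate) / 1_000_000
    return per_request * requests_per_day * days


# Hypothetical chatbot workload.
for model in PRICES_PER_M:
    cost = monthly_cost(model, requests_per_day=10_000,
                        input_tokens=500, output_tokens=200)
    print(f"{model}: ${cost:,.2f}")
```

For this workload the estimate comes to about $165 a month on GPT-3.5 Turbo against about $3,300 on GPT-4 Turbo, a twentyfold difference.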

Practical Applications and Use Cases:

Customer Support Chatbots: Answer common questions quickly and helpfully

Content Generation: Create marketing copy, blog posts, or social media updates

Code Generation: Help developers write code snippets or complete functions

Language Translation: Convert text between different languages

Summarization: Condense long documents into brief, readable summaries

Pros:

Cost-Effective: Much more affordable than GPT-4, making it good for high-volume use

Faster Responses: Lower wait times create a better user experience

Handles Common Tasks Well: Performs excellently on most everyday NLP tasks

Lower Latency: Perfect for applications where quick responses matter

Cons:

Less Capable than GPT-4: Not as good with complex reasoning or specialized tasks

Smaller Context Window: Can't handle conversations or documents as long as the 128K-token models (GPT-4 Turbo and GPT-4o) can

Older Knowledge Cutoff: Lacks information about anything after September 2021

Less Accurate for Complex Tasks: May struggle with specialized knowledge or intricate logic

Implementation Tips:

Start with the OpenAI API Documentation: The official guides provide clear examples in various programming languages

Test Different Prompts: How you phrase instructions greatly affects results, so experiment to find what works best

Consider Fine-tuning: For specific use cases, training the model on your own data can significantly improve results

Comparison with Similar Tools:

While many large language models exist today, GPT-3.5 Turbo hits a sweet spot between price and performance. More powerful models like GPT-4 offer better results but at a cost that may not make sense for many applications. For teams needing a reliable and affordable solution, GPT-3.5 Turbo remains an excellent choice.

Website: OpenAI Pricing

4. DALL-E 3: High-Fidelity Image Generation

DALL-E 3, OpenAI's newest image generation model, stands out for its exceptional ability to create detailed, high-quality images from text descriptions. What makes this tool particularly impressive is how it understands complex instructions and translates them into visually striking images. This makes it perfect for creating marketing materials, website visuals, design prototypes, or unique artistic works.

The model shows a marked improvement over previous versions in interpreting nuanced prompts, giving users much more control over their creative output.

Pricing and Availability:

DALL-E 3 offers two quality options with straightforward per-image pricing (for 1024×1024 images; larger sizes cost more):

Standard Resolution: $0.040 per image

HD Resolution: $0.080 per image

You can access DALL-E 3 through the OpenAI API to integrate it into your existing workflows. It's also available to users with ChatGPT Plus and Enterprise subscriptions, making it easy to access for many people.

Features and Benefits:

Creates images from detailed text prompts: DALL-E 3 excels at understanding complex descriptions, allowing you to generate exactly what you have in mind. The level of detail you can specify gives you remarkable control over the final image.

Multiple resolution options: Choose between standard and HD resolution based on your specific needs and budget constraints.

Improved image accuracy: Building on previous versions, DALL-E 3 creates more realistic and accurate representations of your prompts.

Integration with ChatGPT Plus/Enterprise: Direct access through these platforms makes the image generation process simple and efficient.

Pros:

High-quality image generation

Strong understanding of complex prompts

Multiple resolution options

Improved image accuracy compared to previous versions

Cons:

More expensive than some competitor image models (e.g., Stable Diffusion)

Usage costs can add up quickly for bulk image generation

Limited control over specific artistic styles compared to specialized tools like Midjourney

No direct editing features within the API; changes require generating new images

Implementation Tips:

Start with specific prompts: The more details you include in your prompt, the better DALL-E 3 will understand your vision. Try different wording and descriptions to get the best results.

Consider resolution needs: Standard resolution works well for many purposes, but HD resolution offers noticeably better quality for print or high-resolution displays.

Monitor usage costs: Keep track of your image generation to avoid surprise expenses, especially if you're creating many images. Look into bulk pricing options if available through the API.

Why DALL-E 3 Deserves its Place:

DALL-E 3 represents a major step forward in AI image generation, particularly in how well it understands and interprets complex prompts. While it costs more than some alternatives, its ease of use through the API and ChatGPT Plus/Enterprise, combined with its outstanding image quality, makes it a valuable tool for professionals and creatives who need reliable, high-quality image generation.

Website: OpenAI Pricing

5. Whisper API

The Whisper API stands out as a highly accurate and budget-friendly solution for speech-to-text applications. Created by OpenAI, this tool excels at transcribing and translating audio into text, making it valuable for developers, AI professionals, and marketers.

What makes Whisper particularly useful is its ability to handle different languages and accents, process various audio formats, and perform well even with poor audio quality. These capabilities open up numerous practical applications:

Podcast Transcription: Turn audio recordings into searchable text content to improve accessibility and SEO.

Generating Subtitles/Captions: Create accurate captions for videos to enhance user experience and reach wider audiences.

Market Research Analysis: Convert customer interviews and focus groups into text to extract valuable insights.

Voice-Activated Assistants: Build reliable voice-controlled applications with solid speech recognition.

Multilingual Content Creation: Transcribe and translate audio in different languages to reach global audiences more efficiently.

At just $0.006 per minute of audio, the API offers excellent value for processing large volumes of audio content.
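Per-minute billing makes audio budgets easy to estimate up front. The sketch below assumes cost scales linearly with duration at the quoted rate; the podcast backlog is a hypothetical example.

```python
WHISPER_PRICE_PER_MINUTE = 0.006  # dollars per minute of audio, as quoted above


def transcription_cost(duration_seconds: float) -> float:
    """Return the estimated cost in dollars for one audio file."""
    return duration_seconds / 60 * WHISPER_PRICE_PER_MINUTE


# Hypothetical backlog: 200 podcast episodes of 45 minutes each.
episode_seconds = 45 * 60
total = sum(transcription_cost(episode_seconds) for _ in range(200))
print(f"${total:.2f}")  # prints $54.00
```

At this rate, an hour of audio costs $0.36, so even a large archive stays inexpensive to transcribe.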

Technical Requirements and Implementation Tips:

Using the Whisper API is simple: you send your audio file to the API endpoint and receive the transcribed or translated text back. While the API itself has standard requirements, you'll need to handle audio data properly on your end, which might include some pre-processing depending on audio quality and format. For more information on integrating speech-to-text functionality into your applications, check out MultitaskAI's documentation on speech-to-text settings.

Pros:

High accuracy for speech recognition

Cost-effective pricing at $0.006 per minute

Strong multilingual support

Good performance with challenging audio conditions

Cons:

Costs increase directly with audio length, making long files more expensive

Not designed for real-time transcription, limiting some interactive applications

Limited formatting options for output text, often requiring post-processing

No built-in speaker identification for conversations with multiple people

Comparison with Similar Tools:

While other options exist, such as Google Cloud Speech-to-Text and Amazon Transcribe, Whisper stands out with its competitive pricing and excellent multilingual capabilities. Its accuracy is often rated highly, especially with complex audio scenarios, though results vary by language and recording quality.

Website: https://openai.com/pricing

Whisper's affordable pricing and ease of use make it a valuable resource for anyone working with audio content.

6. Embeddings Models (Ada v2)

OpenAI's Ada v2 embeddings model stands out for its excellent balance of performance and cost. This powerful tool converts text into numerical vectors that capture the meaning behind words and phrases. Whether you're a data scientist, marketer, or developer, Ada v2 offers practical solutions for making sense of text data.

What can you do with Ada v2 embeddings? Think about building a smart search engine for your website that understands what users are looking for, grouping similar customer feedback to spot trends, or creating personalized recommendations. Ada v2 makes these complex tasks much more approachable. It particularly shines in:

Semantic Search: Find content based on meaning, not just keywords. This helps users discover relevant information even when they don't use exact terms.

Clustering: Group similar text automatically. This helps with organizing topics, segmenting customers, and finding unusual patterns in your data.

Classification: Sort text into categories without manual work. Perfect for filtering spam, analyzing sentiment, and tagging content.

Pricing and Technical Details:

At just $0.10 per million tokens, Ada v2 is remarkably affordable. It creates vectors with 1536 dimensions, giving rich representations of text meaning, and can handle text segments up to 8191 tokens long.
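Semantic search over embeddings usually comes down to comparing vectors by cosine similarity. The sketch below illustrates the idea with toy 3-dimensional vectors standing in for real 1536-dimensional embeddings; the document names, vector values, and helper functions are all invented for illustration, and in practice the vectors would come from the embeddings API.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_similarity(query_vec: list[float],
                       doc_vecs: dict[str, list[float]]) -> list[tuple[str, float]]:
    """Return (name, score) pairs sorted from most to least similar."""
    scored = [(name, cosine_similarity(query_vec, vec))
              for name, vec in doc_vecs.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Toy vectors (assumptions, not real embeddings).
docs = {
    "refund policy": [0.9, 0.1, 0.0],
    "shipping times": [0.1, 0.9, 0.1],
    "api rate limits": [0.0, 0.2, 0.9],
}
query = [0.8, 0.2, 0.1]  # pretend embedding of "how do I get my money back?"

ranking = rank_by_similarity(query, docs)
print(ranking[0][0])  # prints refund policy
```

A vector database performs the same comparison, but with indexing that keeps lookups fast across millions of stored vectors.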

Pros:

Budget-Friendly: The low cost makes it accessible for projects of all sizes, from startups to enterprises.

Quality Semantic Understanding: The model captures meaning effectively, improving results in downstream applications.

Powerful Search Capabilities: Build search tools that understand what users actually want, not just what they type.

Works Well with Vector Databases: Easily pairs with tools like Pinecone or Weaviate for efficient storage and retrieval.

Cons:

Requires Supporting Infrastructure: For a complete solution, you'll need to integrate with other tools and services.

Vector Database Needed: While this enables powerful features, it adds complexity to your setup.

Fixed Dimensions: The 1536-dimension output can't be adjusted, which might limit certain specialized applications.

Technical Learning Curve: While not extremely difficult, effective implementation requires understanding vector operations and related concepts.

Implementation Tips:

Select the right vector database: Consider your needs for speed, scale, and cost when choosing where to store your vectors.

Clean your data first: Well-prepared text data significantly improves embedding quality and downstream results.

Test different settings: Adjust parameters like text length and similarity thresholds to get the best performance for your specific use case.

Website: OpenAI Pricing

Ada v2 gives you high-quality text understanding capabilities without breaking the bank. For developers, businesses, and researchers looking to build smarter text-based applications, it provides an excellent foundation. The combination of reasonable cost, quality results, and flexibility makes it a valuable tool for anyone working with text data.

7. Fine-tuning (GPT-3.5 Turbo)

Fine-tuning gives you the power to customize GPT-3.5 Turbo specifically for your unique needs. Unlike using generic prompts, fine-tuning actually trains the model on your own data, creating outputs that are more accurate and relevant to your specific situation. For many specialized applications, this approach offers a more affordable alternative to larger models like GPT-4, while still delivering impressive results.

What makes fine-tuning valuable is the balance it strikes between capability and cost. AI developers can train the model on company documentation to build a custom support chatbot, or create a code generator that follows their team's coding standards. Small business owners and entrepreneurs can build specialized AI tools on a modest budget. Content creators and marketers can fine-tune a model to write in their brand voice.

Key Features and Benefits:

Specialized Models: Train GPT-3.5 Turbo on your unique data to create an AI assistant tailored to your specific needs.

Improved Performance: Get better results for domain-specific tasks compared to generic prompting.

Cost-Effective: Save money compared to using GPT-4, especially for clearly defined use cases.

Reduced Token Usage: Fine-tuned models often need fewer tokens for instructions, cutting costs over time.

Larger Context Window: Work with up to 16K tokens of context, processing more text in a single interaction.

Pricing:

Training: $3.50 / million tokens

Input Usage: $1.50 / million tokens

Output Usage: $2.00 / million tokens

Learn more about managing different models and their costs on our documentation page: Multitask AI Models Documentation
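As a rough illustration of how these rates add up, the sketch below estimates a one-time training cost and a monthly usage cost. The rates are copied from the list above. The dataset size, epoch count, and traffic volumes are made-up inputs, and the assumption that training is billed per token per epoch should be checked against the current OpenAI pricing page.

```python
# Rates in USD per million tokens, copied from the pricing list above.
TRAINING_RATE = 3.50
INPUT_RATE = 1.50
OUTPUT_RATE = 2.00

def training_cost(dataset_tokens, epochs=3):
    """One-time cost, assuming every epoch bills the full dataset."""
    return dataset_tokens * epochs * TRAINING_RATE / 1_000_000

def monthly_usage_cost(input_tokens, output_tokens):
    """Recurring cost of calling the fine-tuned model."""
    return (input_tokens * INPUT_RATE + output_tokens * OUTPUT_RATE) / 1_000_000

# Hypothetical workload: 2M training tokens, 40M input and 10M output tokens per month.
one_time = training_cost(dataset_tokens=2_000_000, epochs=3)
monthly = monthly_usage_cost(input_tokens=40_000_000, output_tokens=10_000_000)
print(f"training: ${one_time:.2f}, monthly usage: ${monthly:.2f}")
```

With these example numbers the training run is a small share of the total, so the ongoing input and output volume is usually the figure to watch.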

Pros:

Creates highly specialized models that fit your exact requirements.

Delivers better accuracy for specific domains or tasks.

More cost-effective than using larger models or complex prompting.

Can reduce long-term costs through more efficient token usage.

Cons:

Requires upfront investment of time to prepare training data.

Needs some technical knowledge to implement properly.

Performance is limited to what the base GPT-3.5 model can do.

Implementation Tips:

Focus on Data Quality: Clean and carefully prepare your training data—the better your data, the better your results. Consider using data augmentation to expand limited datasets.

Start Small: Begin with a smaller dataset to test your approach before investing in larger training runs.

Track Performance: Monitor key metrics during training to ensure your model is improving as expected.

Learn the API: Take time to understand the OpenAI API and best practices for fine-tuning.
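One way to act on the data-quality tip is to check the training file before uploading it. The sketch below assumes the chat-style JSONL layout (one JSON object per line, each with a messages list of role and content pairs). The specific checks, the helper name, and the restriction to three roles are simplifications chosen for this example, not an official validator.

```python
import json

VALID_ROLES = {"system", "user", "assistant"}  # simplified set for this sketch

def validate_training_lines(lines):
    """Return (line_number, problem) tuples; an empty list means no problems found."""
    problems = []
    for number, raw in enumerate(lines, start=1):
        raw = raw.strip()
        if not raw:
            problems.append((number, "blank line"))
            continue
        try:
            record = json.loads(raw)
        except json.JSONDecodeError:
            problems.append((number, "invalid JSON"))
            continue
        messages = record.get("messages") if isinstance(record, dict) else None
        if not isinstance(messages, list) or not messages:
            problems.append((number, "missing or empty 'messages' list"))
            continue
        well_formed = all(
            isinstance(m, dict)
            and m.get("role") in VALID_ROLES
            and isinstance(m.get("content"), str)
            for m in messages
        )
        if not well_formed:
            problems.append((number, "message with bad role or content"))
            continue
        if not any(m["role"] == "assistant" for m in messages):
            problems.append((number, "no assistant message to learn from"))
    return problems

# Hypothetical sample: one good example, one without a reply, one broken line.
sample = [
    '{"messages": [{"role": "user", "content": "Reset my password"}, '
    '{"role": "assistant", "content": "Go to Settings > Security."}]}',
    '{"messages": [{"role": "user", "content": "Hello"}]}',
    'not json',
]
problems = validate_training_lines(sample)
print(problems)
```

Running a check like this on a small dataset first fits the "Start Small" tip: formatting problems surface before you pay for a training run.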

For more information on pricing and technical details, visit the official OpenAI pricing page: OpenAI Pricing

8. GPT-4 with 128K context

GPT-4 with 128K context stands out for its exceptional ability to handle much longer text inputs than standard language models. This expanded context window creates new possibilities for applications that need to process and understand large volumes of information at once.

This version features a 128,000-token context window, allowing it to work with roughly 300 pages of text in a single request. That is a major step up from the 8K and 32K windows of the original GPT-4 models and significantly broadens what you can accomplish with it.

Features:

128K token context window: The standout capability that lets you process much longer text sequences than before.

Input cost: $0.06 per 1,000 tokens ($60 per million tokens).

Output cost: $0.12 per 1,000 tokens ($120 per million tokens).

Maintains GPT-4 level reasoning abilities: Despite handling more context, this model keeps the strong reasoning capabilities of the original GPT-4.
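To see what a near-full context window costs at the rates listed above, here is a small sketch. The four-characters-per-token figure is only a rough rule of thumb for English text, the helper names are hypothetical, and the example token counts are made up. For exact counts you would use a real tokenizer.

```python
CONTEXT_LIMIT = 128_000        # tokens shared by the prompt and the response
INPUT_RATE_PER_1K = 0.06       # USD, from the list above
OUTPUT_RATE_PER_1K = 0.12      # USD, from the list above
CHARS_PER_TOKEN = 4            # rough heuristic, not an exact tokenizer

def estimate_tokens(text):
    """Approximate token count from character length, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)

def request_cost(input_tokens, output_tokens):
    """Cost of one request in USD; rejects requests that exceed the window."""
    if input_tokens + output_tokens > CONTEXT_LIMIT:
        raise ValueError("request does not fit in the context window")
    return (input_tokens / 1000 * INPUT_RATE_PER_1K
            + output_tokens / 1000 * OUTPUT_RATE_PER_1K)

# Hypothetical request: a 124K-token document plus a 4K-token summary.
full_window = request_cost(input_tokens=124_000, output_tokens=4_000)
print(f"one near-full request: ${full_window:.2f}")
```

At these rates a single near-full request costs several dollars, so it pays to send only the parts of a document the task actually needs.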

Practical Applications and Use Cases:

In-depth Document Analysis and Summarization: Analyze long reports, legal documents, or research papers without losing important context. You can summarize complex materials in a single request while capturing key points and nuances.

Enhanced Chatbots and Conversational AI: Keep track of context throughout extended conversations, creating more natural and helpful user experiences. This works especially well for customer service bots handling complex issues or multi-step dialogues.

Code Generation and Debugging: Process large portions of a codebase in one request for better understanding, generation, and troubleshooting. Developers can review large code files and get relevant suggestions or spot potential bugs more easily.

Long-Form Content Creation: Create longer articles, stories, or scripts with better coherence and consistency throughout.

Comprehensive Question Answering: Answer complex questions that require deep understanding and analysis of substantial text.

Pros:

Handles extremely long documents without losing track.

Maintains context across lengthy conversations.

Excels at document analysis and summarization.

Enables complex multi-step reasoning with full context.

Cons:

Much more expensive than standard GPT-4, which might limit access for some users. Consider the cost implications carefully for high-volume applications.

Higher response times due to processing longer contexts. Working with 128K tokens takes noticeably more time than shorter prompts.

Now largely superseded by GPT-4o, which offers the same 128K context plus other improved capabilities. Consider whether GPT-4o might be a better option for your needs.

Implementation/Setup Tips:

You can access GPT-4 with 128K context through the OpenAI API. Set the model parameter in each request to the correct model name; the exact identifiers and implementation instructions are listed in the OpenAI documentation.

Comparison:

While the 128K context window was a major advance when introduced, newer models like GPT-4o and Claude 2.1 now match or exceed this capability. Consider these alternatives, especially if you need additional features beyond extended context, such as multimodal capabilities.

Website: OpenAI Pricing

This enhanced version of GPT-4 provides a powerful tool for working with extensive text data. Though the cost is higher than basic models, the ability to maintain complete context across large volumes of information opens up new possibilities for AI applications and makes it valuable for professionals who need to fully utilize large language models with lengthy content.

OpenAI 8-Model Pricing Comparison

| Model | Core Features ★ | Unique Selling Points ✨ | Value Proposition 💰 | Target Audience 👥 |
|---|---|---|---|---|
| GPT-4o | Multimodal (text, image, audio); 8K/128K context | Highest capability; excels in complex reasoning & vision | Competitive per-token pricing | Pro developers; creative enterprises |
| GPT-4 Turbo | 128K context; vision analysis | Balanced performance; affordable upgrade vs. GPT-4 | Cost-effective for lengthy documents | Advanced app developers; enterprise solutions |
| GPT-3.5 Turbo | 16K context; fast responses | General purpose; low latency | Best cost-efficiency for common tasks | Broad market; everyday applications |
| DALL-E 3 | Text-to-image generation; standard & HD resolutions | High-quality visuals; reliable artistic output | Tiered pricing per image | Designers; creative professionals |
| Whisper API | Speech recognition; transcription & translation; multilingual | Accurate audio processing; robust in challenging conditions | Low per-minute audio cost | Media teams; transcription services |
| Embeddings Models (Ada v2) | 1536-dim semantic vectors; optimized for search & clustering | Powerful for recommendations; efficient vectorization | Very cost-effective for vector operations | Data scientists; ML developers |
| Fine-tuning (GPT-3.5 Turbo) | Custom training; supports up to 16K context | Specializes in domain-specific tasks | Lower fine-tuning costs compared to GPT-4 models | Enterprises; developers customizing AI |
| GPT-4 with 128K context | Extended 128K context; maintains GPT-4 reasoning | Handles extremely long documents; advanced multi-step logic | Premium pricing for extensive processing capabilities | Researchers; document analysis experts |

Making Smart Choices with OpenAI

Understanding OpenAI model pricing helps you select the most cost-effective option for your projects. This guide provides key insights to help you navigate OpenAI's offerings and make the most of these powerful models, whether you prioritize performance, affordability, or specific capabilities.

Your specific needs should guide your model selection. For complex reasoning and nuanced conversations, GPT-4 or GPT-4 Turbo with its 128K context window might be your best bet. If you need speed and cost-effectiveness, GPT-3.5 Turbo delivers excellent value. For visual creation, DALL-E 3 offers impressive capabilities, while Whisper API excels at transcription. When building semantic search or retrieval systems, embedding models like Ada v2 work perfectly. For specialized applications, you can fine-tune GPT-3.5 Turbo to match your exact requirements.

Getting started is straightforward. OpenAI provides detailed documentation and API keys for easy integration. Begin with a small project to test the waters and understand what each model can and cannot do.

Budget considerations are essential in your decision-making process. Analyze your expected usage volume and choose a model with pricing that fits your financial plan. Remember that both input and output tokens affect your total cost. Keep a close eye on usage, especially during development phases, to prevent unexpected expenses. Because the models run on OpenAI's servers, your own compute and memory needs come mainly from the surrounding pipeline, such as preprocessing large datasets or storing embeddings.
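A simple way to keep an eye on spending is to total the token counts from each response against a monthly budget. The sketch below is one possible shape for that. The class name, the rates, the budget, and the 80% alert level are all placeholder assumptions; in practice the token counts would come from the usage data returned with each API response.

```python
from dataclasses import dataclass

@dataclass
class BudgetTracker:
    """Accumulates estimated spend from per-request token counts."""
    monthly_budget_usd: float
    input_rate_per_million: float
    output_rate_per_million: float
    spent_usd: float = 0.0

    def record(self, prompt_tokens, completion_tokens):
        """Add one request's cost to the running total and return that cost."""
        cost = (prompt_tokens * self.input_rate_per_million
                + completion_tokens * self.output_rate_per_million) / 1_000_000
        self.spent_usd += cost
        return cost

    def remaining(self):
        return self.monthly_budget_usd - self.spent_usd

    def over_alert_threshold(self, fraction=0.8):
        """True once spend reaches the given fraction of the budget."""
        return self.spent_usd >= fraction * self.monthly_budget_usd

# Placeholder rates and budget; substitute the current prices for your model.
tracker = BudgetTracker(monthly_budget_usd=100.0,
                        input_rate_per_million=1.50,
                        output_rate_per_million=2.00)
tracker.record(prompt_tokens=30_000_000, completion_tokens=20_000_000)
print(f"spent ${tracker.spent_usd:.2f}, remaining ${tracker.remaining():.2f}")
```

Keeping input and output rates separate matters because output tokens are typically priced higher, so a chatty model can exhaust a budget faster than prompt volume alone would suggest.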

Make sure your chosen model works well with your existing systems. OpenAI provides a REST API with official libraries for languages such as Python and JavaScript, and community libraries cover many others, which simplifies integration. However, think about the development work and ongoing maintenance needed for smooth operation.

Key Takeaways:

Cost vs. Performance: Find the right balance for your project. GPT-3.5 Turbo offers good value, while GPT-4 provides better results at a higher price.

Context Window: Consider how much information your application needs to process at once. GPT-4 with 128K context handles much larger documents and conversations.

Fine-tuning: Consider customizing models for specific tasks to improve results and potentially reduce costs.

Experimentation: Start small to learn how the models work and optimize your approach.

Monitoring: Track your API usage carefully to manage your budget effectively.

OpenAI offers diverse models with different pricing structures to meet various needs. By carefully weighing performance, cost, context window size, fine-tuning options, and integration requirements, you can confidently select the right OpenAI model to power your AI applications and achieve your goals.