Top 10 OpenAI Alternatives for 2025: AI Tools Compared

Discover the top 10 OpenAI alternatives for 2025. Compare features, pricing, and privacy of MultitaskAI, Claude, Gemini, and more to find your ideal AI tool.

Why Explore OpenAI Alternatives?

OpenAI set a high standard for generative AI, but many teams need more cost control, data privacy, or specialized features. This listicle highlights the top 10 OpenAI alternatives: MultitaskAI, Claude AI (Anthropic), Google Gemini, Cohere, Mistral AI, Meta AI (LLaMA series), HuggingFace, Perplexity AI, Ollama, and Amazon Bedrock. You’ll learn how each tool tackles performance bottlenecks, privacy requirements, and budget constraints, helping you choose the right AI partner. From cloud-based giants to open-source frameworks, we cover options that suit developers, researchers, and businesses aiming to optimize AI workflows.

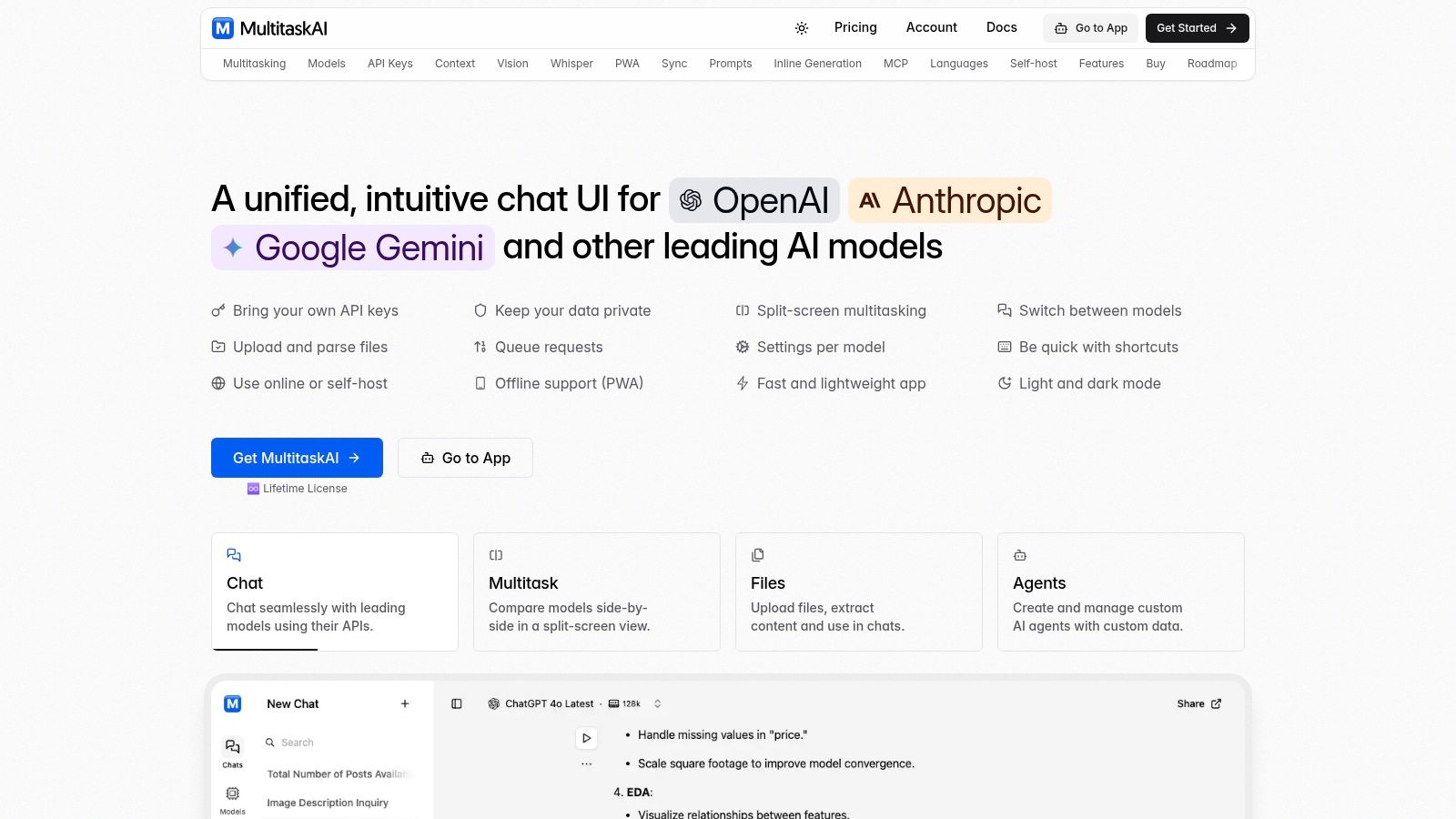

1. MultitaskAI

MultitaskAI stands out among OpenAI alternatives as a browser-based chat hub that connects you directly to top AI models (GPT-4, Anthropic’s Claude, Google Gemini, and more) using your own API keys, so prompts go straight to each provider rather than through a middleman. With split-screen comparisons, queued prompts, concurrent threads, file integration, customizable agents, and offline PWA support, it’s built for AI professionals, developers, and power users who demand speed, flexibility, and control.

Key Features and Benefits

- Split-screen chat & queue system: Compare two models side-by-side or queue up prompts without waiting for responses.

- Direct API key connections: No hidden fees or middlemen; your API usage billing comes straight from OpenAI, Anthropic, Google, etc.

- Custom AI agents & dynamic prompts: Build task-specific bots (e.g., code reviewer, market copy generator) and inject variables on the fly.

- File integration: Upload PDFs, DOCs, CSVs to parse and analyze documents within the chat.

- Offline PWA support: Install as a Progressive Web App to open the app and draft prompts without an internet connection; sending prompts to hosted models still requires connectivity.

- Multi-language UI: Interface available in 29+ languages, ideal for global teams.

- Self-hosting option: Deploy on any static file server or FTP—perfect for on-premises requirements.

- Encrypted API key storage: Safeguard your keys locally with AES encryption.
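The "dynamic prompts" idea above, a reusable agent prompt whose variables are filled in per request, can be sketched in a few lines of Python. This is an illustration only, not MultitaskAI's implementation; the template text and the variable names (`language`, `code`) are hypothetical.

```python
from string import Template

# Hypothetical agent template; $language and $code are placeholders
# that get filled in at request time.
CODE_REVIEWER = Template(
    "You are a careful code reviewer.\n"
    "Review the following $language code and list concrete issues:\n\n"
    "$code"
)


def render_prompt(template: Template, **variables: str) -> str:
    """Fill a prompt template; raises KeyError if a variable is missing."""
    return template.substitute(**variables)


if __name__ == "__main__":
    print(render_prompt(CODE_REVIEWER, language="Python", code="print('hi')"))
```

Using `substitute` rather than `safe_substitute` is a deliberate choice here: a missing variable fails loudly instead of sending a half-filled prompt to a paid API.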

Practical Use Cases

- Model benchmarking: Run GPT-4 vs. Claude vs. Gemini in parallel to pick the best performer for summarization, coding assistance, or long-form content.

- Research workflows: Upload academic papers to automatically extract key points, references, and generate annotated summaries.

- Marketing automation: Spin up a “social media caption” agent, queue daily prompts, and bulk-export generated content.

- Localization & translation: Leverage multi-language support to translate materials and compare outputs across multiple translation engines.

- Offline brainstorming: Draft ideas and prompts offline via PWA, sync when you’re back online.

Pricing & Technical Requirements

- One-time license: €99 (currently 33% off from €149), includes lifetime updates and 5 device activations.

- API keys: Requires user-provided API keys for each model provider.

- Hosting:

  - Hosted: Sign up at app.multitaskai.com, enter your keys, and start chatting.

  - Self-hosted: Upload static files to any web server or FTP; no backend required.

- Browser: Modern Chromium or Safari browser with PWA support.

Comparison with Similar Tools

- Vs. Official ChatGPT: Supports multiple providers and offline mode; ChatGPT is limited to OpenAI and online only.

- Vs. Other aggregators: Offers split-screen, queueing, file parsing, and custom agents—features often missing in single-pane interfaces.

- Vs. Desktop apps: No bulky installs, cross-platform PWA, plus easy self-hosting for privacy.

Setup Tips

- Purchase your license and note your activation code.

- Choose hosted or self-hosted setup; for self-hosting, upload the `/dist` folder to your server.

- Open the app, navigate to Settings, and add your API keys under “Providers.”

- Create a custom agent template, assign prompts, and test it in split-screen mode.

- Install as PWA (click “Add to Home Screen”) to enable offline access.

Pros and Cons

Pros:

- True multitasking: split-screen chats + prompt queueing

- Full data privacy via direct API connections and self-hosting

- One-time lifetime purchase—no subscriptions

- Advanced features: file parsing, custom agents, dynamic prompts, PWA

- Broad model support: GPT-4, Claude, Gemini, and more

Cons:

- Requires user-supplied API keys (can be a hurdle for beginners)

- Some features (Google Drive sync, Chrome extension) are still in development

MultitaskAI deserves its top spot in our list of OpenAI alternatives for its flexibility, privacy-first architecture, and productivity-boosting multitasking features.

Get started with your lifetime license

Enjoy unlimited conversations with MultitaskAI and unlock the full potential of cutting-edge language models—all with a one-time lifetime license.

Demo

Free

Try the full MultitaskAI experience with all features unlocked. Perfect for testing before you buy.

- Full feature access

- All AI model integrations

- Split-screen multitasking

- File uploads and parsing

- Custom agents and prompts

- Data is not saved between sessions

Lifetime License

Most Popular: €99 (regular price €149)

One-time purchase for unlimited access, lifetime updates, and complete data control.

- Everything in Free

- Data persistence across sessions

- MultitaskAI Cloud sync

- Cross-device synchronization

- 5 device activations

- Lifetime updates

- Self-hosting option

- Priority support

Loved by users worldwide

See what our community says about their MultitaskAI experience.

Finally found a ChatGPT alternative that actually respects my privacy. The split-screen feature is a game changer for comparing models.

Sarah

Been using this for months now. The fact that I only pay for what I use through my own API keys saves me so much money compared to subscriptions.

Marcus

The offline support is incredible. I can work on my AI projects even when my internet is spotty. Pure genius.

Elena

Love how I can upload files and create custom agents. Makes my workflow so much more efficient than basic chat interfaces.

David

Self-hosting this was easier than I expected. Now I have complete control over my data and conversations.

Rachel

The background processing feature lets me work on multiple conversations at once. No more waiting around for responses.

Alex

Switched from ChatGPT Plus and haven't looked back. This gives me access to all the same models with way more features.

Maya

The sync across devices works flawlessly. I can start a conversation on my laptop and continue on my phone seamlessly.

James

As a developer, having all my chats, files, and agents organized in one place has transformed how I work with AI.

Sofia

The lifetime license was such a smart purchase. No more monthly fees, just pure productivity.

Ryan

Queue requests feature is brilliant. I can line up my questions and let the AI work through them while I focus on other tasks.

Lisa

Having access to Claude, GPT-4, and Gemini all in one interface is exactly what I needed for my research.

Mohamed

The file parsing capabilities saved me hours of work. Just drag and drop documents and the AI understands everything.

Emma

Dark mode, keyboard shortcuts, and the clean interface make this a joy to use daily.

Carlos

Fork conversations feature is perfect for exploring different ideas without losing my original train of thought.

Aisha

The custom agents with specific instructions have made my content creation process so much more streamlined.

Thomas

Best investment I've made for my AI workflow. The features here put other chat interfaces to shame.

Zoe

Privacy-first approach was exactly what I was looking for. My data stays mine.

Igor

The PWA works perfectly on mobile. I can access all my conversations even when I'm offline.

Priya

Support team is amazing. Quick responses and they actually listen to user feedback for improvements.

Nathan

2. Claude AI (Anthropic)

Claude AI, built by Anthropic, is designed to be a safe, helpful, and honest assistant, making it one of the top OpenAI alternatives for teams that prioritize ethical AI. Trained with Anthropic’s Constitutional AI approach, which steers the model toward a written set of principles, Claude excels at complex reasoning, document analysis, creative writing, and coding tasks.

Key Features and Technical Specs

- Massive Context Window: Claude 3 Opus supports up to 200,000 tokens—ideal for processing entire research papers, long legal contracts, or book-length manuscripts in one go.

- Strong Reasoning & Analysis: Outperforms many models on logic puzzles, multi-step math, and nuanced text interpretation.

- Multimodal Input: Upload images alongside text prompts to get detailed visual analyses—useful for design critique, chart interpretation, or OCR-based workflows.

- API Access: RESTful endpoints with flexible rate limits; integrates via standard HTTP libraries in Python, JavaScript, Ruby, and more.

- Safety-First Design: Constitutional AI framework lowers the risk of harmful or biased outputs, making Claude a standout among OpenAI alternatives for enterprise compliance.

Practical Applications & Use Cases

- Legal & Finance: Draft summaries of contracts or financial reports and flag risky clauses automatically.

- Software Development: Generate boilerplate code, refactor legacy scripts, or debug errors with contextual insights.

- Content & Marketing: Produce natural, conversational copy for blogs, social media, and ad campaigns—while staying on-brand.

- Research & Education: Analyze large datasets, extract key findings, and create study guides or interactive tutorials.

Pricing & Requirements

Anthropic offers both free and paid plans:

- Free tier with limited usage to experiment with Claude’s core capabilities.

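- Paid API usage is billed per token and varies by model. Claude 3 launched in three tiers (Haiku, Sonnet, and Opus) with list prices of $0.25 / $1.25, $3 / $15, and $15 / $75 per million input / output tokens respectively; check Anthropic’s pricing page for current rates.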

- Enterprise licenses and custom SLAs are available—contact Anthropic sales for volume discounts, dedicated support, and on-premise options.

- You’ll need an API key from Anthropic, basic HTTP/JSON experience, and a secure environment to store credentials.
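As a rough illustration of the "API key plus basic HTTP/JSON" requirement, the sketch below builds a request for Anthropic's Messages API using only the Python standard library. The model ID `claude-3-opus-20240229`, the environment variable name `ANTHROPIC_API_KEY`, and the default parameter values are assumptions for the example; check Anthropic's current API reference before relying on them.

```python
import json
import os
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"


def build_claude_request(prompt: str, api_key: str,
                         model: str = "claude-3-opus-20240229",
                         max_tokens: int = 512,
                         temperature: float = 0.3) -> urllib.request.Request:
    """Build (but do not send) a Messages API request."""
    body = {
        "model": model,            # assumed model ID; may be outdated
        "max_tokens": max_tokens,
        "temperature": temperature,
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {
        "x-api-key": api_key,
        "anthropic-version": "2023-06-01",
        "content-type": "application/json",
    }
    return urllib.request.Request(
        API_URL, data=json.dumps(body).encode("utf-8"),
        headers=headers, method="POST")


if __name__ == "__main__":
    # Read the key from the environment instead of hard-coding it.
    key = os.environ.get("ANTHROPIC_API_KEY")
    if key:
        req = build_claude_request("Summarize the key risks in this clause: ...", key)
        with urllib.request.urlopen(req) as resp:
            reply = json.load(resp)
        print(reply["content"][0]["text"])
    else:
        print("Set ANTHROPIC_API_KEY to send the request.")
```

Separating request construction from sending keeps the credential handling in one place and makes the payload easy to inspect before any tokens are billed.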

Implementation Tips

- Chunk Large Documents: Break very long texts into logical sections (e.g., chapters or articles) to stay within token limits.

- Adjust Temperature: Use a lower temperature (0.2–0.5) for factual outputs, higher (0.7–1.0) for creative brainstorming.

- Leverage Streaming: Enable streaming responses for real-time feedback in chatbots or IDE integrations.

- Safety Checks: Combine Claude’s built-in filters with your own post-processing to catch any edge-case outputs.
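The "chunk large documents" tip can be sketched as a small helper that groups paragraphs until an approximate token budget is reached. The four-characters-per-token ratio is a rough heuristic for English text and an assumption of this example, not a figure from Anthropic; a real tokenizer would give exact counts.

```python
def chunk_text(text: str, max_tokens: int = 1000,
               chars_per_token: int = 4) -> list[str]:
    """Group paragraphs into chunks that fit an approximate token budget.

    A single paragraph longer than the budget is kept whole rather than
    split mid-sentence, so a chunk can exceed the budget in that case.
    """
    max_chars = max_tokens * chars_per_token
    chunks: list[str] = []
    current: list[str] = []
    current_len = 0
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        # Start a new chunk if adding this paragraph would exceed the budget.
        if current and current_len + len(para) + 2 > max_chars:
            chunks.append("\n\n".join(current))
            current, current_len = [], 0
        current.append(para)
        current_len += len(para) + 2  # +2 for the paragraph separator
    if current:
        chunks.append("\n\n".join(current))
    return chunks
```

Splitting on paragraph boundaries keeps each chunk readable on its own, which tends to matter more for summarization quality than hitting the budget exactly.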

Why Claude AI Deserves #2 Spot

Claude AI shines among OpenAI alternatives by blending long-context handling with a strong safety focus. Its large context window makes it well suited to heavy-duty document work, while multimodal support opens up new workflows for designers and analysts. Though its premium tiers are priced higher than some competitors, the trade-off in reliability and reduced hallucinations pays off for mission-critical applications.

Pros and Cons

Pros:

- Exceptional at understanding nuance and following complex instructions

- More conversational and natural tone than many competitors

- Strong safety measures and reduced tendency to generate harmful content

- Transparent about its limitations

Cons:

- Higher pricing for premium tiers compared to some alternatives

- More limited third-party integrations than OpenAI

- Less widespread adoption in the developer ecosystem

- Newer to market with less established track record

For more details on how Claude stacks up against other models, see the official website below.

Official website: https://www.anthropic.com/claude

3. Google Gemini

Google Gemini (formerly Bard) stands out as one of the most powerful OpenAI alternatives, offering a multimodal AI that natively connects with Google Search, Workspace, and the wider cloud ecosystem. Whether you need to analyze images, summarize long documents, or generate production-ready code with real-time web data, Gemini delivers flexible performance across text, audio, images, and video.

Features & Benefits

- Multimodal reasoning: Interpret and generate text, images, audio, and video

- Real-time knowledge: Fetches up-to-the-minute facts via Google Search

- Coding assistance: Built-in execution environment supports Python, JavaScript, and more

- Ecosystem integration: Seamless use in Docs, Sheets, Gmail, and Google Meet

- Three model tiers:

  - Ultra for large-scale cloud workloads

  - Pro for mid-range tasks and teams

  - Nano for on-device/mobile applications

Practical Use Cases

- Digital marketers automate campaign copy and ad-creative testing with live trend data

- Developers generate and debug code snippets, schema migrations, or API clients

- Knowledge workers summarize meeting transcripts, draft emails, and prepare slide decks

- AI researchers prototype multimodal pipelines combining vision and language

Pricing & Technical Requirements

- Free tier with generous monthly quota for text and image processing

- Pay-as-you-go pricing on Google Cloud AI Platform (starting at $0.10 per compute unit)

- To get started, enable the Gemini API in Google AI Studio, set up a Cloud project, and acquire an API key

- For on-device Nano, install the Gemini SDK on supported Android phones
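Once an API key is available, a first call to the Gemini API can be sketched with the standard library alone. The example below only builds the `generateContent` request; the model name `gemini-pro`, the `v1beta` path, and the environment variable `GEMINI_API_KEY` are assumptions that may not match the current API, so verify them in Google AI Studio's documentation.

```python
import json
import os
import urllib.request

BASE_URL = "https://generativelanguage.googleapis.com/v1beta/models"


def build_gemini_request(prompt: str, api_key: str,
                         model: str = "gemini-pro") -> urllib.request.Request:
    """Build (but do not send) a generateContent request."""
    url = f"{BASE_URL}/{model}:generateContent"
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    headers = {
        "x-goog-api-key": api_key,
        "Content-Type": "application/json",
    }
    return urllib.request.Request(
        url, data=json.dumps(body).encode("utf-8"),
        headers=headers, method="POST")


if __name__ == "__main__":
    key = os.environ.get("GEMINI_API_KEY")
    if key:
        req = build_gemini_request("Draft a two-line product update email.", key)
        with urllib.request.urlopen(req) as resp:
            reply = json.load(resp)
        print(reply["candidates"][0]["content"]["parts"][0]["text"])
    else:
        print("Set GEMINI_API_KEY to send the request.")
```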

Comparison with Other OpenAI Alternatives

Unlike GPT-4, Gemini taps into live web data and Google’s search algorithms. Compared to Anthropic’s Claude, it offers stronger multimodal support and deeper product integrations, though it may be slightly more conservative in creative tasks.

Implementation Tips

- Begin in Google AI Studio: test prompts in the playground before coding.

- Use context windows smartly—inject relevant docs or image references.

- Combine Gemini’s retrieval API with your own document store for private data access.

- Monitor usage in Cloud Console to optimize cost versus performance.

Pros

- Direct Google Search integration and real-time info

- High accuracy on math, analytics, and code execution

- Free tier with robust monthly limits

Cons

- Evolving API documentation can be challenging

- Creative outputs are more guarded due to strict safety filters

- Smaller third-party plugin ecosystem than OpenAI’s

Learn more about Google Gemini and how it compares with other OpenAI alternatives on the official site below.

Official site: https://gemini.google.com/

4. Cohere

When exploring OpenAI alternatives for enterprise-grade NLP, Cohere stands out as a platform designed specifically for business use cases like content generation, text classification, and semantic search. Its flexible API and emphasis on customizability make it a top choice for AI professionals and developers who need reliable, scalable language models with enterprise-level security.

Key Features

- Command models: Instruction-following and text generation optimized for tasks such as drafting emails, summarization, and chatbot responses.

- Embed models: Generate high-quality text embeddings for semantic search, similarity detection, and recommendation systems.

- Multilingual support: Over 100 languages supported, making it ideal for global applications.

- Enterprise-grade security: SOC 2 compliant, private cloud deployment options, and strict data privacy controls.

- Custom model training: Fine-tune on your own datasets or train entirely new models for domain-specific needs.

Practical Applications & Use Cases

- Semantic Search: Leverage embedding models to build vector-based search engines that return more relevant results than keyword matching.

- Content Generation: Automate blog posts, product descriptions, and social media copy with instruction-tuned command models.

- Classification & Extraction: Implement high-accuracy sentiment analysis, entity recognition, and topic tagging pipelines.

- Multilingual Chatbots: Deploy customer support agents that understand and respond in multiple languages without juggling separate models.

Pricing & Technical Requirements

- Free tier: Includes 50,000 tokens of command usage and 50,000 embed tokens per month.

- Pay-as-you-go:

  - Command models: starting at $0.15 per 1,000 generation tokens

  - Embed models: $0.10 per 1,000 embeddings

- Enterprise plans: Custom pricing with dedicated SLAs, private cloud deployment, and volume discounts.

- Technical stack:

  - RESTful API with official Python, JavaScript, and Java SDKs

  - Token-based authentication; keep your API keys secure using environment variables or secret managers

  - Webhooks for asynchronous batch processing

Comparison with Similar Tools

- vs. OpenAI: Cohere excels in enterprise security and custom training, whereas OpenAI’s models often lead in raw creative generation.

- vs. Anthropic Claude: Claude focuses on safe-AI research and guardrails, while Cohere prioritizes multilingual support and embedding performance.

- vs. AI21 Studio: AI21 Studio is strong in writing assistance, but Cohere’s private deployment options give it an edge for regulated industries.

Implementation Tips

- Start with embeddings – Test semantic search with a small dataset to gauge relevance before scaling up.

- Fine-tune wisely – Allocate budget for model training and maintain data hygiene to avoid overfitting.

- Use batching – Group multiple requests in a single API call to reduce latency and cost.

- Monitor usage – Set up alerts for token consumption to stay within budget and avoid rate-limit surprises.
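The first and third tips (test semantic search on a small dataset, and batch requests) can be tried before touching any API. The sketch below ranks documents by cosine similarity over hand-written toy vectors and splits a list into batches; in practice the vectors would come from an embedding model, and the document IDs, vector values, and helper names here are all hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(query_vec: list[float], doc_vecs: dict[str, list[float]],
           top_k: int = 2) -> list[tuple[str, float]]:
    """Return the top_k (doc_id, score) pairs, best match first."""
    scored = [(doc_id, cosine_similarity(query_vec, vec))
              for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


def make_batches(items: list, batch_size: int) -> list[list]:
    """Split items into consecutive batches of at most batch_size."""
    return [items[i:i + batch_size] for i in range(0, len(items), batch_size)]


if __name__ == "__main__":
    # Toy 3-dimensional "embeddings" standing in for real model output.
    docs = {
        "refund-policy": [1.0, 0.0, 0.0],
        "shipping-times": [0.0, 1.0, 0.0],
        "returns-faq": [0.9, 0.1, 0.0],
    }
    for doc_id, score in search([1.0, 0.0, 0.0], docs):
        print(f"{doc_id}: {score:.3f}")
```

A brute-force scan like this is fine for gauging relevance on a few hundred documents; a vector index becomes worthwhile once the collection grows.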

Pros & Cons

Pros:

- Specialized in enterprise NLP solutions

- Strong multilingual capabilities

- Flexible deployment (public or private cloud)

- Excellent for search and retrieval applications

Cons:

- Less known for creative content generation

- Smaller developer community compared to OpenAI

- More business-focused than consumer-oriented

- Documentation can be sparse for beginners

Cohere’s enterprise focus, robust security, and top-tier multilingual models make it a compelling OpenAI alternative for organizations that require full control over their AI deployments.

Website: https://cohere.com/

5. Mistral AI

Mistral AI is a rising French AI company offering high-performance open models that serve as compelling OpenAI alternatives. By blending open-source releases with commercial APIs, Mistral delivers efficient large-language models that often match or exceed competitors in reasoning tasks while using fewer compute resources. This makes Mistral AI ideal for developers and organizations seeking cost-effective, transparent, and powerful LLM solutions.

Key Features

- Range of model sizes:

  - Mistral 7B: Lightweight, suitable for on-device or low-cost cloud inference

  - Mixtral 8x7B: Sparse mixture-of-experts model that routes each token to two of eight expert networks, so only a fraction of its parameters is active per token

  - Le Chat: Mistral’s chat assistant for conversational AI, built on its models

- Efficient architecture that cuts memory and compute requirements by up to 30%

- Open-weight checkpoints for Mistral 7B and Mixtral 8x7B, released under the Apache 2.0 license

- Commercial API access via the Mistral platform

- Strong benchmarks in reasoning, coding, and multilingual tasks
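Mistral describes Mixtral 8x7B as a sparse mixture-of-experts model in which a router selects two of eight expert networks for each token. The toy sketch below illustrates that top-2 routing idea with scalar "experts"; the gate scores and expert functions are made up for the example and bear no relation to the real model's weights.

```python
import math
from typing import Callable


def top2_route(gate_logits: list[float]) -> list[tuple[int, float]]:
    """Return (expert_index, weight) for the two highest-scoring experts.

    Weights are a softmax over the two selected logits, so they sum to 1.
    """
    top2 = sorted(range(len(gate_logits)),
                  key=lambda i: gate_logits[i], reverse=True)[:2]
    peak = max(gate_logits[i] for i in top2)  # subtract for numerical stability
    exps = [math.exp(gate_logits[i] - peak) for i in top2]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top2, exps)]


def moe_forward(x: float, experts: list[Callable[[float], float]],
                gate_logits: list[float]) -> float:
    """Weighted sum of the two selected experts; the rest are never run."""
    return sum(weight * experts[i](x) for i, weight in top2_route(gate_logits))


if __name__ == "__main__":
    # Eight toy experts: expert k multiplies its input by k.
    experts = [lambda x, k=k: x * k for k in range(8)]
    logits = [0.0, 2.0, -1.0, 1.0, 0.5, 0.0, 0.0, 0.0]
    print(top2_route(logits))
    print(moe_forward(1.0, experts, logits))
```

Because only the two selected experts run per token, compute per token is well below what the total parameter count would suggest, although all experts still have to be held in memory.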

Practical Applications & Use Cases

- Chatbots & Virtual Assistants: Leverage Le Chat for customer support with low latency and high coherence.

- Code Generation & Review: Use Mixtral or Mistral 7B to automate boilerplate code, run static analysis, or suggest refactorings.

- Data Extraction & Summarization: Efficiently process large documents or logs with on-prem deployments to meet data privacy requirements.

- Fine-Tuning for Domain Expertise: Tailor open checkpoints on specialized corpora (medical records, legal documents) without vendor lock-in.

Pricing & Technical Requirements

- API pricing starts around $0.002 per 1K tokens—typically 20–50% cheaper than comparable GPT-4 calls.

- Self-hosting requires a GPU with ≥24 GB VRAM for Mistral 7B; Mixtral models may need multi-GPU setups or high-memory instances.

- Docker images and Python SDK simplify deployment:

      pip install mistralai
      export MISTRAL_API_KEY="your_api_key"

- Official docs: https://mistral.ai/docs

Comparison with Other OpenAI Alternatives

- Vs. OpenAI GPT: Mistral models offer similar reasoning at lower cost and full transparency via open checkpoints.

- Vs. Llama 2: Mixtral often outperforms Llama 2 on coding benchmarks while requiring less memory.

- Vs. Anthropic Claude: Mistral’s European base provides an alternative compliance framework and simpler commercial terms.

Implementation Tips

- Choose the right model size: Start with Mistral 7B for prototyping before scaling to Mixtral for production.

- Optimize inference: Use ONNX or TensorRT conversions to further reduce latency.

- Monitor performance: Integrate GPU metrics (NVIDIA DCGM) and token-usage dashboards to control costs.

- Community & Support: Join Mistral’s Discord and GitHub Discussions for sample notebooks and troubleshooting.

Why Mistral AI Deserves Its Spot

Mistral AI stands out among OpenAI alternatives due to its blend of open-weight releases, cost efficiency, and strong performance in reasoning and coding tasks. Its rapid growth and European base make it a strategic choice for teams seeking both innovation and regulatory diversity.

Pros:

- Fully open-source models increase transparency

- Excellent performance-to-size ratio

- More affordable API pricing than many competitors

- European-based with distinct compliance approach

Cons:

- Newer company with evolving infrastructure

- Fewer specialized model variants compared to OpenAI

- Documentation and ecosystem still growing

Website: https://mistral.ai/

6. Meta AI (LLaMA, Llama 2, Llama 3)

Meta AI’s LLaMA series is one of the most compelling OpenAI alternatives on the market today. By releasing full model weights for LLaMA, Llama 2, and Llama 3, Meta empowers developers and enterprises to run powerful large language models entirely on private infrastructure, with no API calls and no per-token fees. Whether you need a compact 7 billion-parameter model for on-device inference or a 70 billion-parameter powerhouse for research, Meta AI delivers both flexibility and performance.

Meta AI models shine in use cases like:

- Custom chatbots and virtual assistants (fine-tuned with dialogue datasets)

- Document summarization and knowledge extraction in regulated industries

- Retrieval-augmented generation (RAG) pipelines for searchable knowledge bases

- Domain-specific NLP tasks (legal, healthcare, finance) requiring on-prem privacy

Key Features

- Open-source weights for local deployment (7B–70B parameters)

- Fine-tuned chat versions optimized for conversational AI

- Commercial usage permitted under Meta’s Llama 2 license

- Compatibility with Hugging Face Transformers, llama.cpp, Ollama, etc.

Technical & Pricing Overview

- Model weights: free to download (no usage fees)

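- Inference hardware: GPU with ≥16 GB VRAM for 7B in 16-bit precision; the 70B weights alone take roughly 140 GB in 16-bit precision, so ≥48 GB is realistic only with 4-bit quantization (otherwise use multi-GPU setups)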

- Cloud GPU cost: roughly $0.50–$3.00/hour on AWS g4dn/g5 instances, depending on size & region

- Fine-tuning: supports LoRA and full-parameter updates; requires additional compute
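The hardware figures above follow from simple arithmetic: weight memory is roughly the parameter count times the bytes per parameter. The helper below is a back-of-the-envelope sketch covering weights only; it ignores the KV cache, activations, and framework overhead, so real requirements are higher.

```python
def weight_memory_gb(params_billions: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in GB (10^9 bytes)."""
    # params_billions * 1e9 params * (bits / 8) bytes, divided by 1e9 bytes/GB
    return params_billions * bits_per_param / 8


if __name__ == "__main__":
    for size in (7, 70):
        for bits in (16, 8, 4):
            gb = weight_memory_gb(size, bits)
            print(f"{size}B at {bits}-bit: ~{gb:.1f} GB")
```

For example, a 7B model at 16-bit precision needs about 14 GB for weights, which is why a 16 GB card is a common floor, while a 70B model drops from about 140 GB to about 35 GB when quantized to 4 bits.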

Why Meta AI Deserves #6 on This List

As one of the top OpenAI alternatives, Meta AI balances performance with openness. You gain full control over data privacy, eliminate ongoing API charges, and tap into a vibrant community pushing model improvements. Compared to closed models (e.g., GPT-4, Google Gemini), LLaMA’s transparent license and local deployment options make it ideal for startups, research labs, and enterprises with strict compliance needs.

Implementation Tips

- Quantize with bitsandbytes or use llama.cpp for CPU-only deployment.

- Start with the 7B model on a single A100 or 3090; scale up as needs grow.

- Use Hugging Face’s `transformers` + `accelerate` for easy training & inference scripts.

- Explore LoRA for lightweight fine-tuning on custom datasets (< 8 GB RAM).

- Monitor performance: latency can be improved with batching and TensorRT.
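To make the "lightweight" claim for LoRA concrete: instead of updating a full weight matrix, LoRA trains two small low-rank matrices whose product is added to the frozen weight. The arithmetic sketch below counts the added parameters for a single matrix; the 4096 x 4096 shape and rank 8 are illustrative assumptions, not values taken from any specific Llama checkpoint.

```python
def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """Parameters LoRA adds for one d_out x d_in weight matrix.

    LoRA learns B (d_out x rank) and A (rank x d_in) and keeps the
    original weight frozen, so the count is rank * (d_out + d_in).
    """
    return rank * (d_out + d_in)


# Illustrative shapes (assumptions): one 4096 x 4096 projection, rank 8.
full = 4096 * 4096
added = lora_trainable_params(4096, 4096, rank=8)
print(f"full matrix: {full:,} params")
print(f"LoRA update: {added:,} params ({added / full:.2%} of the full matrix)")
```

For these shapes the trainable update is well under 1% of the matrix it adapts, which is why LoRA fits on far smaller hardware than full-parameter fine-tuning.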

Pros

- Fully deployable on private infrastructure

- No per-call or per-token costs after setup

- Highly customizable and fine-tunable

- Robust open-source community support

Cons

- Steep hardware requirements for larger models

- Requires DevOps and ML engineering expertise

- No native hosted API (must self-manage inference)

- Slight performance gap versus the latest closed models in some benchmarks

For more details and downloads, visit Meta AI’s official site:

https://ai.meta.com/llama/

7. HuggingFace

HuggingFace is a platform and community-driven hub for thousands of open-source AI models, which makes it one of the most capable OpenAI alternatives. Rather than offering a single proprietary model, HuggingFace aggregates transformer-based models for tasks like text generation, summarization, translation, vision, speech and more. Its Inference API provides a unified REST interface to these models, while Spaces lets you deploy interactive demos and full applications in minutes.

Why it earns a spot among the top OpenAI alternatives:

- Unparalleled model variety (over 50,000 community-contributed models)

- Transparent licensing and code access

- Flexible pay-per-compute pricing keeps costs predictable

- Vibrant tutorials, documentation and community support

Key Features

- Model Hub: Browse and download thousands of transformer, diffusion, and specialized models (BERT, GPT-2, RoBERTa, Stable Diffusion, etc.).

- Inference API: Call any hosted model via REST or Python SDK—no infrastructure to manage.

- Spaces: Instantly deploy Gradio or Streamlit demos, share interactive UIs with collaborators.

- Transformers & Datasets Libraries: High-level Python packages for seamless training, fine-tuning, tokenization and data processing.

- Training & Fine-Tuning: Built-in Trainer API and support for distributed GPU/TPU training.

Practical Use Cases

- Chatbots and virtual assistants powered by open-source language models

- Automated summarization and document analysis in enterprise workflows

- Multilingual translation pipelines for global content localization

- Custom vision applications (object detection, image-to-text)

- Rapid prototyping of novel AI demos using Spaces

Pricing

- Free Tier: Generous free calls to Inference API (subject to rate limits) and unlimited free public Spaces.

- Pay-Per-Use: From $0.0005 per token for smaller models to $0.03–0.12 per 1,000 tokens for large LLMs; GPU/CPU billing on HuggingFace Hub starts at $0.08 per GPU-hour.

- Enterprise Plans: Custom pricing, SLAs, private model hosting and enhanced support.

Technical Requirements & Setup Tips

- Install Python packages: `pip install transformers datasets huggingface_hub`

- Authenticate with the Hub: `huggingface-cli login`

- Load a model in Python: `from transformers import pipeline`, then `nlp = pipeline("text-generation", model="gpt2")` and `print(nlp("Hello, world"))`

- Deploy your own demo:

  - Fork a Gradio or Streamlit template in Spaces

  - Add your model ID and requirements.txt

  - Push to the `main` branch; your app goes live automatically.

- Optimize performance with model quantization (ONNX or HuggingFace Optimum) for faster inference and lower memory use.
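For the hosted route, a call to the Inference API can be sketched with only the standard library. Treat this as a minimal sketch under stated assumptions: the endpoint URL pattern reflects the hosted Inference API as commonly documented and may have changed, the helper names are hypothetical, and `hf_xxx` is a placeholder for your own access token.

```python
import json
import urllib.request

# Assumed URL pattern for the hosted Inference API; verify against current docs.
API_BASE = "https://api-inference.huggingface.co/models"


def build_inference_request(model_id: str, text: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for a hosted model."""
    payload = json.dumps({"inputs": text}).encode("utf-8")
    return urllib.request.Request(
        url=f"{API_BASE}/{model_id}",
        data=payload,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def query(model_id: str, text: str, token: str):
    """Send the request and decode the JSON response (requires network access)."""
    request = build_inference_request(model_id, text, token)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Placeholder token; replace with a real one before running.
    print(query("gpt2", "Hello, world", token="hf_xxx"))
```

Separating request construction from sending keeps the network call in one place, which makes it easier to add retries or swap in a different model ID later.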

Comparison with Other Tools

- Versus OpenAI: More model choice and transparency, lower entry cost, but you manage consistency and performance tuning.

- Versus Cohere: HuggingFace offers a broader ecosystem (vision, speech, community hubs), while Cohere focuses on text embeddings and generation.

- Versus Anthropic: Anthropic’s Claude models come with strong safety guardrails, whereas HuggingFace gives you freedom to explore niche, research-grade models.

Pros & Cons

Pros:

- Access to thousands of open-source AI models

- Community-driven with extensive learning resources

- Transparent, non-proprietary approach

- Flexible pay-per-compute pricing

Cons:

- Model performance varies—selecting the right model takes research

- Steeper learning curve for beginners

- Less centralized support than commercial, closed-source providers

Explore the full platform and get started with one of the best OpenAI alternatives today: https://huggingface.co/

8. Perplexity AI

Perplexity AI is a standout among OpenAI alternatives, combining language models with real-time web search. Its ability to fetch current information, cite sources, and handle conversational follow-ups makes it a good fit for researchers, developers, and tech-savvy entrepreneurs who need both accuracy and transparency.

Key Features

- Real-Time Web Search: Instantly retrieves the latest data from across the web, with no knowledge cutoff.

- Source Attribution & Citations: Every answer comes with clickable references, boosting credibility in reports and presentations.

- Conversational Interface: Ask follow-up questions in a chat-style window to refine results or dig deeper.

- Developer-Friendly API: RESTful endpoints with JSON responses, API keys, and clear documentation for easy integration.

- Collections for Organized Research: Save and categorize answers, links, and snippets into shareable collections.

Practical Applications & Use Cases

- Academic Research & Literature Review: Quickly gather and cite peer-reviewed articles, news updates, or patent filings.

- Competitive Intelligence: Monitor industry trends and competitor announcements in real time.

- Content Creation & Fact-Checking: Generate outlines, validate facts, and embed source links directly into drafts.

- Customer Support & Chatbot Enhancement: Power chatbots with live information, reducing outdated or incorrect responses.

- Data-Driven Product Development: Integrate via API to surface real-time metrics or market insights in your apps.

Pricing & Technical Requirements

- Free Tier: Generous monthly quota with basic search and chat capabilities, suited to casual users and initial prototyping.

- Pro Plan (~$20/mo): Unlimited queries, priority access to new features, GPT-4 powered responses, and higher rate limits on the API.

- Enterprise Solutions: Custom SLAs, dedicated support, and white-label options.

- Technical Needs: a modern web browser or HTTP client; an API key (obtained via email signup); JSON/REST integration (supported by popular SDKs in Python, JavaScript, and more).

How It Compares to Other OpenAI Alternatives

- Vs. ChatGPT (OpenAI): Perplexity offers real-time web search plus citations; ChatGPT is strong in creative generation and fine-tuning options.

- Vs. Google Bard (now Gemini): Perplexity offers transparent sourcing and a developer API; Bard offers deep integration with Google services.

- Vs. Bing Chat (now Microsoft Copilot): Perplexity offers a cleaner interface and research-oriented features; Bing Chat is built into Edge and supports voice.

Implementation Tips

- Start with Specific Queries: Frame questions with keywords like “statistical overview” or “latest trends” to leverage the real-time search engine.

- Use Collections Early: Organize snippets by project or topic. Collections can be shared with team members for collaborative research.

- Chain Follow-Ups: After receiving an answer, ask targeted follow-up questions (e.g., “Can you drill down into the methodology?”).

- Integrate via API for Automation: Automate routine data pulls by setting up scheduled scripts that fetch the latest statistics or news headlines relevant to your niche.
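The automation tip above can be sketched as a small script. This is a hedged example rather than official client code: it assumes the API exposes an OpenAI-style chat completions endpoint at the URL shown, that `sonar` is an available model name, and that responses may carry a top-level `citations` list. Check all three against the current Perplexity API documentation before relying on them.

```python
import json
import urllib.request
from typing import Any, Dict, List, Tuple

API_URL = "https://api.perplexity.ai/chat/completions"  # assumed endpoint


def build_request(question: str, api_key: str, model: str = "sonar") -> urllib.request.Request:
    """Build a chat-style POST request; the model name is an assumption."""
    body = {"model": model, "messages": [{"role": "user", "content": question}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def parse_answer(response: Dict[str, Any]) -> Tuple[str, List[str]]:
    """Extract the answer text and any cited URLs from a decoded response."""
    answer = response["choices"][0]["message"]["content"]
    citations = response.get("citations", [])  # assumed field; may be absent
    return answer, citations


def ask(question: str, api_key: str) -> Tuple[str, List[str]]:
    """Send the question and return (answer, citations); requires network access."""
    with urllib.request.urlopen(build_request(question, api_key), timeout=60) as resp:
        return parse_answer(json.loads(resp.read().decode("utf-8")))
```

A scheduled job (cron, a CI workflow, or similar) could call `ask` with a fixed set of questions and store the answers alongside their citations for later review.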

Pros & Cons

Pros

- Up-to-date information without knowledge cutoffs

- Transparent sourcing improves credibility

- Clean, user-friendly interface

- Free tier with generous usage limits

Cons

- Search-focused approach may limit some creative applications

- Less suitable for purely generative tasks (poetry, stories)

- Newer API with a less mature ecosystem than some competitors

- Limited model customization compared to other platforms

Why Perplexity AI Earns Its Spot

As one of the most robust OpenAI alternatives, Perplexity AI excels in factual accuracy, transparency, and developer usability. If your workflows demand the latest information, verifiable sources, and an intuitive API, Perplexity AI is a strong addition to your AI toolkit.

For more information or to sign up, visit: https://www.perplexity.ai/

9. Ollama

Ollama is a powerful OpenAI alternative that lets you run large language models locally on your personal computer, with no cloud dependency. Whether you’re an AI professional, developer, or indie hacker, Ollama simplifies downloading, running, and customizing open-source models such as Llama 2, Mistral, and others. It suits anyone seeking full data privacy, low-latency inference, and zero usage-based costs once models are installed.

Key Features and Benefits

- Completely Local & Offline: All inference happens on your device, ensuring sensitive data never leaves your network.

- Command-Line & API Interfaces: Use a simple `ollama run llama2` command or integrate via RESTful API in your applications.

- Multi-Model Support: Choose from ready-to-use open-source models like Llama 2, Mistral, Falcon, and more.

- Optimized for Consumer Hardware: Works on modern Windows, macOS, and Linux machines—supports GPU acceleration when available.

- Extensible & Customizable: Customize models with a Modelfile (system prompt, parameters, adapters) or import community-driven model definitions.

Practical Applications and Use Cases

- Chatbot Prototyping: Build a local AI assistant for internal documentation, customer support demos, or rapid MVPs.

- Edge Deployment: Deploy LLMs on edge devices where internet is unreliable, like retail kiosks or remote sensors.

- Data-Sensitive NLP: Process healthcare records, legal documents, or proprietary codebases without risking data leaks.

- Offline Content Generation: Generate marketing copy, social media posts, or blog drafts entirely offline.

Pricing and Technical Requirements

- Pricing: Free and open source. No API keys or usage fees.

- Hardware:

- CPU-only (minimum 8 GB RAM, quad-core recommended)

- GPU (NVIDIA with ≥6 GB VRAM) for faster inference

- OS Support: Windows 10+, macOS 12+, Linux (Ubuntu 20.04+)

- Disk Space: 5–20 GB per model, depending on size

Comparison with Similar Tools

- llama.cpp: Ultra-lightweight C++ inference engine, but fewer API conveniences.

- LocalAI: Docker-based local LLM inference with broader network support, but steeper setup.

- Hugging Face Transformers: Extensive model zoo, yet heavier dependencies and less focus on local CLI ease.

Ollama stands out by combining simple setup, multi-model flexibility, and an integrated CLI/API, making it one of the best OpenAI alternatives for developers who want on-device AI.

Setup & Implementation Tips

- Install: on macOS (Homebrew), run `brew install ollama`; on Linux (Debian/Ubuntu), run `curl -fsSL https://ollama.com/install.sh | sh`

- Download a Model: `ollama pull llama2`

- Run Inference: `ollama run llama2 "Write a product summary for Ollama"` (the prompt is passed as a positional argument)

- API Integration: Start the local server with `ollama serve`, then send HTTP requests to http://localhost:11434.
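Once the local server is running, the API integration step can be sketched in a few lines of Python. This minimal example assumes the server is listening on the default port and that the `llama2` model has already been pulled; it uses the `/api/generate` route with streaming disabled so the reply arrives as a single JSON object. The helper names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "llama2") -> urllib.request.Request:
    """Build a non-streaming generate request for the local Ollama server."""
    body = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama2") -> str:
    """Send the prompt and return the generated text (needs `ollama serve` running)."""
    with urllib.request.urlopen(build_generate_request(prompt, model), timeout=120) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]


if __name__ == "__main__":
    print(generate("Write a product summary for Ollama"))
```

Because everything stays on localhost, no API key is involved; the long timeout is there because CPU-only generation can be slow.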

Pros and Cons

Pros:

- Full data privacy with no external calls

- Zero post-download costs

- Low-latency responses on local hardware

- Flexible CLI and REST API

Cons:

- Limited by your machine’s CPU/GPU power

- Models smaller than cloud-grade GPT-4

- Requires some command-line familiarity

- No enterprise SLAs or dedicated support

Ollama’s ease of use, combined with its emphasis on privacy and offline operation, makes it a standout entry among OpenAI alternatives. Explore more at https://ollama.com/.

10. Amazon Bedrock

Amazon Bedrock is AWS’s fully managed service that lets you access foundation models from leading AI companies (Anthropic, AI21 Labs, Cohere, Meta, Mistral AI) and Amazon’s own Titan models through a single, unified API. As one of the top OpenAI alternatives, Bedrock excels at enterprise-grade deployments, offering robust security, governance, and the ability to privately customize models on your own data.

Amazon Bedrock deserves its spot in this listicle because it brings together the best of multiple foundation models—so you can pick the right engine for your use case—while inheriting AWS’s globally proven infrastructure. Whether you’re building a customer-support chatbot, generating marketing copy, or running large-scale RAG (retrieval-augmented generation) pipelines, Bedrock is designed to scale securely and integrate seamlessly with your existing cloud resources.

Key Features and Benefits

- Access Multiple Models via Unified API: Query Anthropic’s Claude, AI21 Labs’ Jurassic, Cohere’s Command, Meta’s LLaMA, Mistral AI, or Titan without juggling separate keys or SDKs.

- Private Model Customization: Fine-tune or embed models on your proprietary data in an isolated environment for better accuracy and compliance.

- Enterprise-Grade Security & Governance: Built-in IAM controls, VPC support, encryption at rest/in transit, audit logging, and AWS Artifact integration for regulatory requirements.

- Seamless AWS Integration: Use Bedrock alongside S3, Lambda, SageMaker, RDS, Kinesis, and more for end-to-end solutions: data ingestion, transformation, model inference, and real-time analytics.

- Knowledge Bases for RAG Applications: Create and manage embedding catalogs directly in AWS to power retrieval-augmented workflows.

Practical Use Cases

- Customer Support Automation: Deploy a Claude or Titan-based chatbot that dynamically pulls from your internal FAQs stored in an S3-backed knowledge base.

- Personalized Marketing Content: Generate blog posts, ad copy, or emails fine-tuned on your brand guidelines using private data customization.

- Document Understanding & Compliance: Automate contract review or compliance checks by embedding regulatory documents into Bedrock’s managed vector store.

- Real-Time Data Enrichment: Enrich streaming data (e.g., social media feeds) with entity extraction or sentiment analysis via Cohere or Mistral AI models.

Pricing and Technical Requirements

- Pricing:

  - Titan models start at $0.003 per 1K tokens for generation and $0.0015 per 1K tokens for embedding.

  - Third-party models vary by provider (e.g., Claude 3 at $0.004 per 1K tokens).

  - Additional costs for data storage (S3), compute (Lambda/SageMaker), and network egress.

- Technical Requirements:

  - AWS Account with Bedrock enabled in your desired region (e.g., us-east-1)

  - IAM role with `bedrock:*` permissions and access to associated AWS resources

  - Optional VPC endpoint for private network traffic

  - AWS SDK/CLI or Developer Tools (e.g., boto3 for Python)
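With those requirements in place, a first call through boto3 might look like the sketch below. It assumes the `bedrock-runtime` client and its Converse operation, AWS credentials already configured in your environment, and model access granted in the chosen region. The model ID shown is only an example, and the helper names are hypothetical.

```python
from typing import Any, Dict


def build_converse_kwargs(model_id: str, prompt: str,
                          max_tokens: int = 256, temperature: float = 0.2) -> Dict[str, Any]:
    """Assemble keyword arguments for a single-turn Converse call."""
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }


def extract_text(response: Dict[str, Any]) -> str:
    """Pull the first text block out of a Converse response."""
    return response["output"]["message"]["content"][0]["text"]


def ask(model_id: str, prompt: str, region: str = "us-east-1") -> str:
    """Call Bedrock and return the reply (needs boto3 and AWS credentials)."""
    import boto3  # third-party dependency, imported lazily

    client = boto3.client("bedrock-runtime", region_name=region)
    return extract_text(client.converse(**build_converse_kwargs(model_id, prompt)))


if __name__ == "__main__":
    # Example model ID (an assumption); use one enabled in your account.
    print(ask("anthropic.claude-3-haiku-20240307-v1:0", "Summarize our refund policy."))
```

Because the request shape is the same across providers, switching models is mostly a matter of changing `model_id`, which is the practical payoff of the unified API.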

Comparison with Similar Tools

- Versus direct OpenAI API: Bedrock offers a broader model selection and deeper enterprise controls, whereas OpenAI provides tighter integration with ChatGPT’s ecosystem.

- Versus Azure OpenAI: Both excel in compliance and security; Bedrock gives you non-proprietary model choices beyond OpenAI’s catalog.

- Versus Hugging Face Inference API: Hugging Face shines in community-driven model hosting; Bedrock focuses on SLA-backed, production-ready deployments with AWS billing.

Implementation Tips

- Start with the AWS Console quickstart to provision Bedrock in minutes.

- Use CloudFormation or Terraform modules for repeatable, auditable setups.

- Leverage IAM permission boundaries to isolate workloads between dev, test, and prod.

- Monitor token usage via CloudWatch and set budget alerts to control costs.

- Cache embedding outputs in DynamoDB or ElastiCache for high-volume RAG scenarios.
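The caching tip can be illustrated with a small wrapper that only calls the embedding model on a cache miss. This is a sketch under assumptions: an in-memory dictionary stands in for DynamoDB or ElastiCache, and `fake_embed` is a hypothetical placeholder for a real embedding call.

```python
import hashlib
from typing import Callable, Dict, List


class EmbeddingCache:
    """Cache embeddings keyed by a hash of (model ID, text).

    The dict is a stand-in for an external store such as DynamoDB or
    ElastiCache; swap the two dictionary operations for store reads and writes.
    """

    def __init__(self, embed_fn: Callable[[str, str], List[float]]) -> None:
        self._embed_fn = embed_fn
        self._store: Dict[str, List[float]] = {}
        self.misses = 0  # counts how often the model was actually called

    @staticmethod
    def _key(model_id: str, text: str) -> str:
        return hashlib.sha256(f"{model_id}\n{text}".encode("utf-8")).hexdigest()

    def get(self, model_id: str, text: str) -> List[float]:
        key = self._key(model_id, text)
        if key not in self._store:
            self.misses += 1
            self._store[key] = self._embed_fn(model_id, text)
        return self._store[key]


def fake_embed(model_id: str, text: str) -> List[float]:
    """Hypothetical stand-in for a real embedding call."""
    return [float(len(text)), float(len(model_id))]


cache = EmbeddingCache(fake_embed)
cache.get("example-embedding-model", "refund policy")
cache.get("example-embedding-model", "refund policy")  # served from cache
print(f"model calls: {cache.misses}")
```

Including the model ID in the key matters: vectors from different embedding models are not interchangeable, so a model upgrade should not reuse old entries.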

Pros and Cons

Pros:

- Unified API for multiple top-tier models

- Private fine-tuning and embedding on your data

- Enterprise-grade security, compliance, and governance

- Seamless integration with AWS analytics, storage, and compute

Cons:

- Enterprise features come at a premium price

- Initial setup can be complex without AWS expertise

- Some third-party models on Bedrock may lag behind their native offerings

Learn more and get started at Amazon Bedrock’s official site: https://aws.amazon.com/bedrock/

Top 10 OpenAI Alternatives Comparison

| Product | Core Features/Capabilities | User Experience & Quality ★ | Value & Pricing 💰 | Target Audience 👥 | Unique Selling Points ✨ |
|---|---|---|---|---|---|
| 🏆 MultitaskAI | Connects OpenAI, Anthropic, Google models; split-screen multitasking; file integration; custom agents; offline PWA | Fast, private, multitasking focused | One-time €99 lifetime license + pay only API usage | AI pros, developers, multitaskers | True multitasking, full privacy, self-hosting choice |
| Claude AI (Anthropic) | Strong reasoning; long context window; multimodal support | Natural, conversational, safe; ★★★★☆ | Premium pricing, higher than some | Safety-conscious AI users, developers | Constitutional AI safety, nuanced understanding |
| Google Gemini | Multimodal (text, images, audio, video); Google ecosystem integration | Good performance; real-time info; ★★★★☆ | Free tier + paid tiers via API | Google product users, general developers | Real-time web access; Google ecosystem synergy |
| Cohere | Enterprise NLP focus; multilingual; custom training | Business-oriented, reliable; ★★★☆☆ | Enterprise pricing | Enterprises needing NLP solutions | Enterprise-grade security; 100+ languages |
| Mistral AI | Open-source efficient models; strong reasoning | Efficient; good reasoning; ★★★☆☆ | More affordable API pricing | Developers valuing open-source, EU-based | Efficient architecture; open-source models |
| Meta AI (LLaMA series) | Open-source weights; customizable; local deployment | Powerful but requires expertise; ★★★☆☆ | Free to deploy after download | Advanced users, enterprises prioritizing privacy | Full local deployment; no usage fees |
| HuggingFace | Thousands of open models; APIs; training/fine-tuning | Varied model quality; community-driven; ★★★☆☆ | Pay-per-compute, flexible | Developers, researchers, AI community | Huge model variety; open-source ecosystem |
| Perplexity AI | Real-time web search; source citations | User-friendly, research-focused; ★★★☆☆ | Free tier available | Researchers, info seekers | Real-time answers with citations |
| Ollama | Run models locally; offline; supports Llama, Mistral etc. | Low latency; data privacy; ★★★☆☆ | No usage cost post-download | Privacy-focused users, offline needs | Complete offline use; no cloud dependency |
| Amazon Bedrock | Access multiple models; enterprise security; AWS integration | Enterprise-focused; solid but complex; ★★★☆☆ | High enterprise pricing | Enterprises on AWS | Unified API for top models; AWS integration |

Choosing Your Perfect AI Companion

After exploring the top OpenAI alternatives (MultitaskAI, Claude AI (Anthropic), Google Gemini, Cohere, Mistral AI, Meta AI (LLaMA series), HuggingFace, Perplexity AI, Ollama, and Amazon Bedrock), you now have a clear view of each tool’s strengths. Whether you prioritize enterprise-grade security, customizable model fine-tuning, cost-effective usage, or seamless API integration, there’s an AI solution suited to your project.

Key Takeaways

- MultitaskAI and Claude AI shine in multi-purpose workflows and ethical guardrails.

- Google Gemini and Cohere excel at large-scale language understanding and real-time inference.

- Mistral AI and Meta’s LLaMA models offer cutting-edge open-source flexibility.

- HuggingFace and Ollama empower developers with community-driven models and local deployment.

- Perplexity AI and Amazon Bedrock deliver knowledge retrieval and enterprise support.

Actionable Next Steps

- Define your priorities: privacy requirements, latency tolerance, budget constraints, and compliance needs.

- Sign up for free tiers or trials to benchmark response quality and throughput.

- Evaluate documentation, SDK support, and community activity for smoother onboarding.

- Test real-world prompts and workflows to compare latency, accuracy, and cost per request.
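The last step, comparing latency and cost per request, can be sketched as a small provider-agnostic harness. The example below makes two loud assumptions: `call_model` is any function you supply that takes a prompt and returns text, and token counts are approximated by whitespace splitting, which is cruder than a real tokenizer. The price is whatever per-1K-token rate applies to the model being tested.

```python
import statistics
import time
from typing import Callable, Dict, Sequence


def benchmark(call_model: Callable[[str], str], prompts: Sequence[str],
              price_per_1k_tokens: float) -> Dict[str, float]:
    """Measure median latency and estimate average cost per request.

    Token counts use whitespace splitting as a rough approximation.
    """
    if not prompts:
        raise ValueError("prompts must not be empty")
    latencies = []
    total_tokens = 0
    for prompt in prompts:
        start = time.perf_counter()
        reply = call_model(prompt)
        latencies.append(time.perf_counter() - start)
        total_tokens += len(prompt.split()) + len(reply.split())
    return {
        "median_latency_s": statistics.median(latencies),
        "cost_per_request": total_tokens / 1000 * price_per_1k_tokens / len(prompts),
    }


if __name__ == "__main__":
    # Stand-in model for demonstration; replace with a real API or local call.
    result = benchmark(lambda p: "a short canned reply", ["Summarize this memo."] * 5, 0.003)
    print(result)
```

Running the same prompt set through each candidate with its own `call_model` and price gives a like-for-like comparison before you commit to a provider.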

Factors to Consider

- Data governance and on-prem vs. cloud hosting

- Scalability, SLA guarantees, and enterprise support

- Model update cadence, customization options, and plugin ecosystems

By weighing these elements and experimenting with different OpenAI alternatives, you’ll find the AI companion that accelerates innovation on your team. Your next breakthrough awaits!