Natural Language Processing Tutorial: From Fundamentals to Production-Ready AI

Master natural language processing with expert guidance on building real-world NLP systems. Learn proven techniques, practical implementations, and cutting-edge approaches that deliver measurable results.

The Evolution of NLP: Understanding Where We Are Today

The path of natural language processing (NLP) shows a fascinating shift from a specialized academic field to a key technology that shapes how we interact with computers. Early NLP systems started with a rule-based approach, where developers had to manually code linguistic rules, grammar structures, and word patterns.

These early rule-based systems faced clear limits. Take a simple sentence like "I saw the man with the telescope." The system would have trouble figuring out if the telescope belonged to the man or if it was used to see him. Human language proved too complex for rigid rules alone.

The late 1980s brought a major change with statistical NLP. This moved away from strict rules toward probability-based models trained on large text datasets. The shift helped end an 'AI winter' caused by the limits of rule-based systems. New tools like hidden Markov models improved tasks like identifying parts of speech. More digital text and better computers helped statistical NLP grow, especially in translation work at places like IBM Research. Learn more about this history here.

The Rise of Machine Learning in NLP

Machine learning brought new power to NLP. Methods like Support Vector Machines (SVMs) and Naive Bayes classifiers helped analyze sentiment and sort text. These tools could learn from data, working better than fixed rules. But they still needed experts to hand-pick features, which limited how well they could grasp language.

The Deep Learning Revolution

The real breakthrough came with deep learning. Neural networks, especially RNNs and transformer models, proved much better at understanding text sequences and connections between words. This made complex language tasks more manageable.

Current State and Future Directions

Today's large language models like GPT-3 and BERT power chatbots, translation tools, and text summarizers with impressive results. These models, trained on huge datasets, can write natural text, grasp context, and handle complex tasks. Key challenges remain - like dealing with bias, explaining how the models work, and addressing ethical concerns. Research continues to focus on making these tools more reliable, efficient, and aligned with human needs.

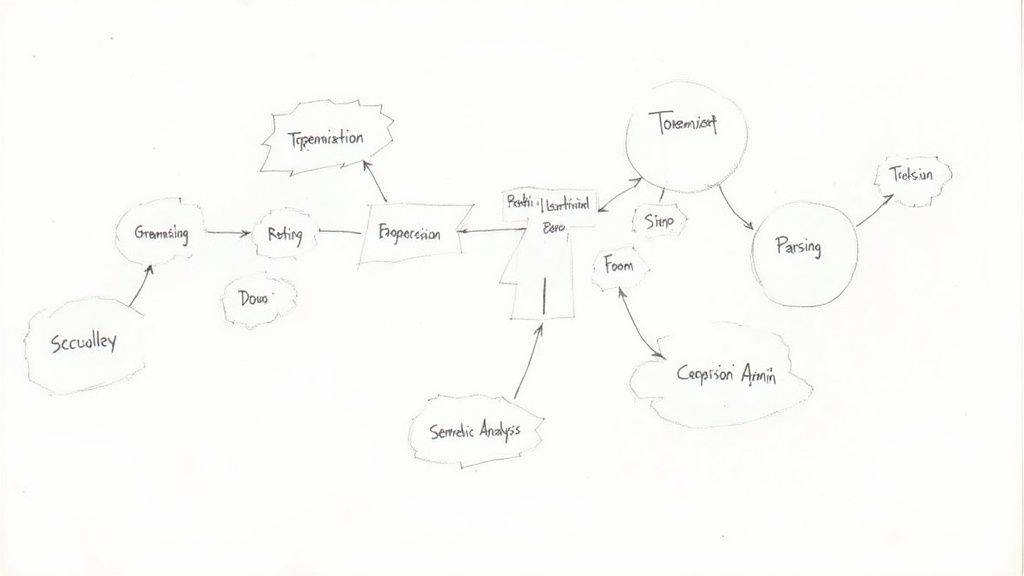

Essential NLP Building Blocks That Drive Results

Let's look at the key techniques that make NLP work in practice. These core components form the building blocks needed for any solid natural language processing system. We'll examine three essential elements: tokenization, part-of-speech tagging, and named entity recognition.

Tokenization: Breaking Down Text Into Meaningful Units

Tokenization splits text into individual pieces called tokens - like words, phrases, or characters. For example, "Natural language processing is powerful" becomes ["Natural", "language", "processing", "is", "powerful"]. While this may seem straightforward, there's more complexity involved.

Basic space-based splitting falls short for languages like Chinese or when handling punctuation marks. Advanced tokenizers use language rules and context to properly process things like contractions and hyphenated words. Getting tokenization right sets up all other NLP tasks for success.

Part-of-Speech Tagging: Identifying the Role of Each Word

After tokenization comes part-of-speech (POS) tagging, which labels each token with its grammatical role - noun, verb, adjective, etc. In our example above, "processing" gets tagged as a noun while "is" is labeled as a verb. This helps computers grasp how words relate to each other in sentences.

POS tagging proves vital for tasks like figuring out the emotional tone of text. Finding adjectives and adverbs gives clues about sentiment, while verbs show actions and intent. You might want to check out: How to master prompt engineering.

Named Entity Recognition (NER): Extracting Key Information

Named entity recognition spots and classifies specific terms in text, like people, companies, places, dates and other important items. If you wrote "MultitaskAI is a useful tool", NER would identify "MultitaskAI" as an organization name.

NER helps pull key details from text automatically. Think about scanning news articles or customer feedback to find mentions of specific companies, products or locations. This automation makes large-scale text analysis practical.

Combining the Building Blocks for Powerful NLP

These three pieces - tokenization, POS tagging, and NER - typically work together in NLP systems. Tokenization breaks text into units, POS tagging adds grammar context, and NER finds specific meaningful terms. This teamwork enables deeper text understanding.

Consider a chatbot as an example. Tokenization helps it understand individual words, POS tagging reveals sentence structure, and NER lets it recognize when users mention names, places or products. Together, these building blocks create more capable natural language systems.

Get started with your lifetime license

Enjoy unlimited conversations with MultitaskAI and unlock the full potential of cutting-edge language models—all with a one-time lifetime license.

Demo

Free

Try the full MultitaskAI experience with all features unlocked. Perfect for testing before you buy.

- Full feature access

- All AI model integrations

- Split-screen multitasking

- File uploads and parsing

- Custom agents and prompts

- Data is not saved between sessions

Lifetime License

Most Popular€99€149

One-time purchase for unlimited access, lifetime updates, and complete data control.

- Everything in Free

- Data persistence across sessions

- MultitaskAI Cloud sync

- Cross-device synchronization

- 5 device activations

- Lifetime updates

- Self-hosting option

- Priority support

Loved by users worldwide

See what our community says about their MultitaskAI experience.

Finally found a ChatGPT alternative that actually respects my privacy. The split-screen feature is a game changer for comparing models.

Sarah

Been using this for months now. The fact that I only pay for what I use through my own API keys saves me so much money compared to subscriptions.

Marcus

The offline support is incredible. I can work on my AI projects even when my internet is spotty. Pure genius.

Elena

Love how I can upload files and create custom agents. Makes my workflow so much more efficient than basic chat interfaces.

David

Self-hosting this was easier than I expected. Now I have complete control over my data and conversations.

Rachel

The background processing feature lets me work on multiple conversations at once. No more waiting around for responses.

Alex

Switched from ChatGPT Plus and haven't looked back. This gives me access to all the same models with way more features.

Maya

Finally found a ChatGPT alternative that actually respects my privacy. The split-screen feature is a game changer for comparing models.

Sarah

Been using this for months now. The fact that I only pay for what I use through my own API keys saves me so much money compared to subscriptions.

Marcus

The offline support is incredible. I can work on my AI projects even when my internet is spotty. Pure genius.

Elena

Love how I can upload files and create custom agents. Makes my workflow so much more efficient than basic chat interfaces.

David

Self-hosting this was easier than I expected. Now I have complete control over my data and conversations.

Rachel

The background processing feature lets me work on multiple conversations at once. No more waiting around for responses.

Alex

Switched from ChatGPT Plus and haven't looked back. This gives me access to all the same models with way more features.

Maya

Finally found a ChatGPT alternative that actually respects my privacy. The split-screen feature is a game changer for comparing models.

Sarah

Been using this for months now. The fact that I only pay for what I use through my own API keys saves me so much money compared to subscriptions.

Marcus

The offline support is incredible. I can work on my AI projects even when my internet is spotty. Pure genius.

Elena

Love how I can upload files and create custom agents. Makes my workflow so much more efficient than basic chat interfaces.

David

Self-hosting this was easier than I expected. Now I have complete control over my data and conversations.

Rachel

The background processing feature lets me work on multiple conversations at once. No more waiting around for responses.

Alex

Switched from ChatGPT Plus and haven't looked back. This gives me access to all the same models with way more features.

Maya

Finally found a ChatGPT alternative that actually respects my privacy. The split-screen feature is a game changer for comparing models.

Sarah

Been using this for months now. The fact that I only pay for what I use through my own API keys saves me so much money compared to subscriptions.

Marcus

The offline support is incredible. I can work on my AI projects even when my internet is spotty. Pure genius.

Elena

Love how I can upload files and create custom agents. Makes my workflow so much more efficient than basic chat interfaces.

David

Self-hosting this was easier than I expected. Now I have complete control over my data and conversations.

Rachel

The background processing feature lets me work on multiple conversations at once. No more waiting around for responses.

Alex

Switched from ChatGPT Plus and haven't looked back. This gives me access to all the same models with way more features.

Maya

Switched from ChatGPT Plus and haven't looked back. This gives me access to all the same models with way more features.

Maya

The sync across devices works flawlessly. I can start a conversation on my laptop and continue on my phone seamlessly.

James

As a developer, having all my chats, files, and agents organized in one place has transformed how I work with AI.

Sofia

The lifetime license was such a smart purchase. No more monthly fees, just pure productivity.

Ryan

Queue requests feature is brilliant. I can line up my questions and let the AI work through them while I focus on other tasks.

Lisa

Having access to Claude, GPT-4, and Gemini all in one interface is exactly what I needed for my research.

Mohamed

The file parsing capabilities saved me hours of work. Just drag and drop documents and the AI understands everything.

Emma

Switched from ChatGPT Plus and haven't looked back. This gives me access to all the same models with way more features.

Maya

The sync across devices works flawlessly. I can start a conversation on my laptop and continue on my phone seamlessly.

James

As a developer, having all my chats, files, and agents organized in one place has transformed how I work with AI.

Sofia

The lifetime license was such a smart purchase. No more monthly fees, just pure productivity.

Ryan

Queue requests feature is brilliant. I can line up my questions and let the AI work through them while I focus on other tasks.

Lisa

Having access to Claude, GPT-4, and Gemini all in one interface is exactly what I needed for my research.

Mohamed

The file parsing capabilities saved me hours of work. Just drag and drop documents and the AI understands everything.

Emma

Switched from ChatGPT Plus and haven't looked back. This gives me access to all the same models with way more features.

Maya

The sync across devices works flawlessly. I can start a conversation on my laptop and continue on my phone seamlessly.

James

As a developer, having all my chats, files, and agents organized in one place has transformed how I work with AI.

Sofia

The lifetime license was such a smart purchase. No more monthly fees, just pure productivity.

Ryan

Queue requests feature is brilliant. I can line up my questions and let the AI work through them while I focus on other tasks.

Lisa

Having access to Claude, GPT-4, and Gemini all in one interface is exactly what I needed for my research.

Mohamed

The file parsing capabilities saved me hours of work. Just drag and drop documents and the AI understands everything.

Emma

Switched from ChatGPT Plus and haven't looked back. This gives me access to all the same models with way more features.

Maya

The sync across devices works flawlessly. I can start a conversation on my laptop and continue on my phone seamlessly.

James

As a developer, having all my chats, files, and agents organized in one place has transformed how I work with AI.

Sofia

The lifetime license was such a smart purchase. No more monthly fees, just pure productivity.

Ryan

Queue requests feature is brilliant. I can line up my questions and let the AI work through them while I focus on other tasks.

Lisa

Having access to Claude, GPT-4, and Gemini all in one interface is exactly what I needed for my research.

Mohamed

The file parsing capabilities saved me hours of work. Just drag and drop documents and the AI understands everything.

Emma

The file parsing capabilities saved me hours of work. Just drag and drop documents and the AI understands everything.

Emma

Dark mode, keyboard shortcuts, and the clean interface make this a joy to use daily.

Carlos

Fork conversations feature is perfect for exploring different ideas without losing my original train of thought.

Aisha

The custom agents with specific instructions have made my content creation process so much more streamlined.

Thomas

Best investment I've made for my AI workflow. The features here put other chat interfaces to shame.

Zoe

Privacy-first approach was exactly what I was looking for. My data stays mine.

Igor

The PWA works perfectly on mobile. I can access all my conversations even when I'm offline.

Priya

Support team is amazing. Quick responses and they actually listen to user feedback for improvements.

Nathan

The file parsing capabilities saved me hours of work. Just drag and drop documents and the AI understands everything.

Emma

Dark mode, keyboard shortcuts, and the clean interface make this a joy to use daily.

Carlos

Fork conversations feature is perfect for exploring different ideas without losing my original train of thought.

Aisha

The custom agents with specific instructions have made my content creation process so much more streamlined.

Thomas

Best investment I've made for my AI workflow. The features here put other chat interfaces to shame.

Zoe

Privacy-first approach was exactly what I was looking for. My data stays mine.

Igor

The PWA works perfectly on mobile. I can access all my conversations even when I'm offline.

Priya

Support team is amazing. Quick responses and they actually listen to user feedback for improvements.

Nathan

The file parsing capabilities saved me hours of work. Just drag and drop documents and the AI understands everything.

Emma

Dark mode, keyboard shortcuts, and the clean interface make this a joy to use daily.

Carlos

Fork conversations feature is perfect for exploring different ideas without losing my original train of thought.

Aisha

The custom agents with specific instructions have made my content creation process so much more streamlined.

Thomas

Best investment I've made for my AI workflow. The features here put other chat interfaces to shame.

Zoe

Privacy-first approach was exactly what I was looking for. My data stays mine.

Igor

The PWA works perfectly on mobile. I can access all my conversations even when I'm offline.

Priya

Support team is amazing. Quick responses and they actually listen to user feedback for improvements.

Nathan

The file parsing capabilities saved me hours of work. Just drag and drop documents and the AI understands everything.

Emma

Dark mode, keyboard shortcuts, and the clean interface make this a joy to use daily.

Carlos

Fork conversations feature is perfect for exploring different ideas without losing my original train of thought.

Aisha

The custom agents with specific instructions have made my content creation process so much more streamlined.

Thomas

Best investment I've made for my AI workflow. The features here put other chat interfaces to shame.

Zoe

Privacy-first approach was exactly what I was looking for. My data stays mine.

Igor

The PWA works perfectly on mobile. I can access all my conversations even when I'm offline.

Priya

Support team is amazing. Quick responses and they actually listen to user feedback for improvements.

Nathan

Building Production-Ready NLP Pipelines

Moving from exploring basic NLP concepts to creating real applications requires careful planning. This guide walks you through building reliable and scalable NLP pipelines - the essential framework that ensures smooth data processing at every stage.

Designing Your NLP Workflow

Start by defining clear goals for your NLP pipeline. Are you building a chatbot? A sentiment analyzer? Or maybe a translation system? Your answer shapes which components you'll need and how to arrange them. For instance, sentiment analysis typically needs text cleaning, tokenization, part-of-speech tagging, and classification steps.

Modularity is key to good pipeline design. Each component should work independently, making it simple to test, modify, or scale individual parts without breaking the whole system. Think carefully about computing resources - efficient resource use is crucial for a pipeline that can grow with your needs.

Data Preprocessing and Optimization

Clean, high-quality data directly affects how well your NLP model performs. Focus first on thorough data preprocessing - clean the text, handle missing data, and remove unwanted characters. Then optimize the data specifically for your NLP task.

Working with social media data for sentiment analysis? You might need to convert emojis to text or expand contractions. For name recognition tasks, you'll want to standardize different versions of names and entities.

Pipeline Construction and Scalability

Connect your pipeline components in a logical order. Popular NLP tools like spaCy and NLTK offer ready-made components that speed up development and follow best practices.

Your pipeline needs to handle growing data volumes without slowing down. This might mean spreading work across multiple machines or using cloud services. For more details, check out: How to master self-hosting MultitaskAI. These approaches help your system adapt as demands increase.

Handling Real-World Challenges

Production systems often need to process multiple languages or specialized vocabulary. This requires specific tools and resources - like language detection for multilingual support and specialized models for different languages.

Processing speed is critical, especially for real-time applications like chatbots. Make each pipeline stage as efficient as possible to keep response times quick.

Regular testing and monitoring keep your pipeline running smoothly. Track performance metrics, find bottlenecks, and make improvements based on real usage data. This ongoing refinement process helps maintain accurate and efficient natural language processing over time.

Making Language Models Work for Business Results

Let's explore how businesses can get real value from advanced language models. We'll look at the practical side of using transformer architectures and large language models (LLMs) to solve business problems and improve operations.

Picking the Right Model for Your Goals

Success starts with choosing the best model for your needs. Think about what you want to accomplish, how much data you have, and what computing power is available. For example, reviewing customer feedback might work well with a smaller, focused model, while translating content often needs a bigger LLM.

Look at key metrics like accuracy, precision, and recall to evaluate different options. Consider whether you can adapt an existing pre-trained model or if you need to build one from scratch. This choice affects both development time and costs.

Getting Better Results Through Model Training

While pre-trained LLMs provide a good starting point, training them further on your specific data can make a big difference. By feeding the model examples from your industry or use case, you can improve its understanding and performance. This makes the model much more effective at handling your unique requirements.

For instance, you could train a general model on medical records to help with diagnosis support, or on legal documents to assist with contract review. The model learns the specific vocabulary and patterns of your field.

Understanding Model Limitations

Current language models have some important drawbacks to keep in mind. They can produce hallucinations - responses that are incorrect or made up. Recent work combines language models with knowledge graphs and retriever models to address this. The Rigel model, for example, uses a special architecture to check facts against a knowledge base, improving answer accuracy by 83% in tests. Learn more about this here.

This combined approach helps create more reliable and useful AI systems that businesses can trust for making decisions.

Using Multiple Models Together

Often, using several models together works better than relying on just one. This ensemble modeling approach lets you combine the strengths of different models. One model might be great at detecting sentiment while another excels at identifying names and organizations. Together, they provide deeper insights. With tools like MultitaskAI, you can easily connect models from OpenAI, Anthropic, and Google to build powerful combined solutions.

Scaling NLP Solutions in the Real World

Building a production-ready NLP system requires much more than just fast code. Let's explore the key considerations for scaling natural language processing solutions effectively.

Handling Increasing Data Volumes

As your NLP application grows, data volumes can quickly multiply beyond what your initial system was built to handle. To process large datasets efficiently, you'll need robust data pipelines using tools like Apache Spark that can distribute processing across multiple machines. This parallel approach helps maintain speed and reliability even as data grows.

Maintaining Performance Under Load

When user traffic spikes, your NLP system needs to keep running smoothly. Picture a chatbot suddenly getting flooded with user messages - without proper architecture, response times could grind to a halt. Two key strategies help here: load balancing to spread requests across servers, and strategic caching of common data to reduce processing overhead.

Managing Deployment Challenges

Getting NLP systems into production brings unique complexities. Setting up automated CI/CD pipelines streamlines updates and ensures smooth deployments. Tools like Docker package your NLP application and dependencies into portable units, making deployment more reliable. The right deployment approach helps you iterate quickly while maintaining stability.

Optimizing for Cost and Performance

You'll need to carefully balance system costs against performance needs. Cloud platforms offer flexibility but expenses can mount quickly. Serverless computing works well for variable workloads since you only pay for what you use. For steady traffic, picking the right cloud instance types and optimizing your infrastructure setup can dramatically impact costs. Check out: How to master ChatGPT for business.

Monitoring and Maintenance

Running NLP at scale requires ongoing oversight. Set up monitoring tools to track key metrics like processing speed, error rates, and resource usage. This visibility helps you spot and fix issues before they impact users. Regular model retraining and updates keep your system accurate as language patterns evolve. Consistent maintenance ensures your NLP solution stays reliable and valuable over time.

No spam, no nonsense. Pinky promise.

Future-Proofing Your NLP Strategy

Building effective natural language processing systems requires adapting to rapid changes in technology. As techniques evolve quickly, having a clear plan to stay current is essential. Let's explore how to build an adaptable NLP system that can grow with new developments.

Evaluating Emerging NLP Trends

Keeping up with NLP advances requires active engagement with the field. Follow key researchers and organizations on social media, attend relevant conferences, and stay involved in NLP communities. Hugging Face and other open-source platforms provide hands-on ways to test new models and assess if they fit your needs.

Smart Adoption of New Technologies

When considering new NLP approaches, balance potential benefits against risks. Look at the technology maturity level - including community adoption, documentation quality, and stability of supporting tools. Also evaluate the technical debt that may come from integrating new components. This helps make informed choices about which innovations to implement.

Building Flexible NLP Systems

Design your NLP infrastructure to easily accommodate changes. Use modular components that can be upgraded independently. For example, a microservices architecture lets you update specific parts without disrupting the whole system. Avoid getting locked into single vendors - choose platform-agnostic solutions that work across different environments. Tools like MultitaskAI help connect various language models while maintaining control over your implementation.

Creating a Learning Culture

The most important part of future-proofing is building a team culture focused on growth. Give your team time to explore new techniques, run experiments, and share what they learn. Make ongoing education a priority through natural language processing tutorials and other resources. This helps everyone stay adaptable and ready to work with emerging NLP capabilities.