Top 8 Local AI Models in 2025: Privacy & Performance

Explore the best local AI models for offline use in 2025. Discover tools that ensure privacy, control, and top performance on your own machine.

Unleash the Power of Offline AI: Top Local Models

Want AI power without the cloud? This listicle covers eight top tools for running AI models on your own devices: Ollama, LM Studio, LocalAI, Text Generation WebUI, GPT4All, llama.cpp, Jan AI, and Koboldcpp. Running models locally enhances privacy by keeping your data offline and gives you greater control over customization. Learn about each tool's strengths and choose the best fit for your AI projects.

1. Ollama

Ollama stands out as a powerful and accessible solution for running large language models (LLMs) locally. This open-source framework lets users run LLMs like Llama 2 and Mistral directly on their own hardware, removing the reliance on cloud services and enhancing privacy. That makes Ollama a compelling option for developers, researchers, and AI enthusiasts seeking efficient, private local deployment. Whether you're experimenting with chatbot development, customizing models for specific tasks, or simply exploring what LLMs can do, Ollama offers a user-friendly gateway to local AI.

Ollama's simple command-line interface and REST API (which exchanges JSON) make interacting with and managing your local models straightforward. Its built-in model library reduces installation to a single command and provides access to a growing selection of prominent open-source LLMs. Ollama also supports custom models defined in Modelfiles, which let users set a base model, a system prompt, and generation parameters for a specific use case. This flexibility, combined with cross-platform support for macOS, Windows, and Linux, makes Ollama a versatile tool for running local AI models.
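To make the API side concrete, here is a minimal sketch of calling Ollama's `/api/generate` endpoint with only the Python standard library. It assumes the Ollama server is running on its default port (11434) and that the model named in the call has already been pulled; the request is built in a separate function so it can be inspected without a running server.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port, 11434.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint."""
    payload = {
        "model": model,    # a model already pulled, e.g. with `ollama pull llama2`
        "prompt": prompt,
        "stream": False,   # ask for one JSON object instead of a token stream
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_generate_request(model, prompt)) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With stream=False, the completion text is in the "response" field.
    return body["response"]
```

With the server running, `generate("llama2", "Explain local inference in one sentence.")` returns the model's answer as a plain string; no data leaves the machine.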

For developers and programmers, Ollama provides a sandbox for experimenting with different LLMs without incurring cloud computing costs. Tech-savvy entrepreneurs and indie hackers can use Ollama to build AI-powered applications while keeping complete control over their data. Even everyday users of cloud assistants such as ChatGPT, Claude, or Google Gemini can benefit from Ollama's offline functionality, although response speed depends on local hardware.

Key Features and Benefits:

- Run popular models locally: Deploy Llama 2, Mistral, and other open-source LLMs on your own hardware.

- Simplified interface: Manage models easily with the command-line interface and REST API.

- One-line model installation: Quickly access and install models from the built-in library.

- Custom model creation: Tailor models using Modelfiles for specific applications.

- Enhanced privacy: Keep your data on your machine, avoiding the risks associated with cloud-based processing.

- Free and open-source: Benefit from community contributions and regular updates.

- Cross-platform compatibility: Run Ollama seamlessly on macOS, Windows, and Linux.
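A Modelfile is a short text file of directives. The sketch below generates one with three real directives (`FROM`, `PARAMETER`, `SYSTEM`); the base model, prompt, and temperature are illustrative values, not recommendations.

```python
def build_modelfile(base_model: str, system_prompt: str, temperature: float) -> str:
    """Return Modelfile text: a base model, one sampling parameter, and a system prompt."""
    lines = [
        f"FROM {base_model}",                    # model the custom model builds on
        f"PARAMETER temperature {temperature}",  # default sampling temperature
        f'SYSTEM """{system_prompt}"""',         # system prompt baked into the model
    ]
    return "\n".join(lines) + "\n"
```

Saving the output as `Modelfile` and running `ollama create support-bot -f Modelfile` followed by `ollama run support-bot` would register and start the customized model (`support-bot` is a hypothetical name).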

Pros:

- Easy installation process.

- Enhanced data privacy.

- Free and open-source.

- Active community and regular updates.

Cons:

- Requires sufficient hardware resources for larger models (ample RAM is essential; a capable GPU speeds up inference considerably).

- Performance is dependent on local hardware.

- No built-in fine-tuning; models must be fine-tuned with other tools before being imported.

- Documentation for advanced use cases can be limited.
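How much hardware is "sufficient"? A common rule of thumb is parameters × bits per weight ÷ 8 bytes for the weights alone, plus headroom. The sketch below applies that rule; the 20% overhead for context cache and runtime buffers is an assumption, and real usage grows with context length.

```python
def estimate_weight_memory_gb(num_params: float, bits_per_weight: float,
                              overhead: float = 0.2) -> float:
    """Rough memory needed to load a model, in GB (1 GB = 1e9 bytes).

    bits_per_weight: 16 for fp16 weights, about 8 or 4 for quantized builds.
    overhead: assumed fractional extra for KV cache and runtime buffers (a guess).
    """
    weight_bytes = num_params * bits_per_weight / 8
    return weight_bytes * (1 + overhead) / 1e9


for label, bits in [("fp16", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(label, round(estimate_weight_memory_gb(7e9, bits), 1), "GB")
```

For a 7-billion-parameter model this gives roughly 16.8 GB at fp16, 8.4 GB at 8-bit, and 4.2 GB at 4-bit, which is why quantized models are the usual choice on laptops.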

Website: https://ollama.com/

Ollama deserves its place on this list due to its combination of ease of use and powerful features. It empowers individuals and organizations to harness the potential of local AI models without the complexities and costs associated with cloud services. While hardware requirements for larger models should be considered, the benefits of privacy, control, and cost savings make Ollama an excellent choice for exploring the world of local AI.

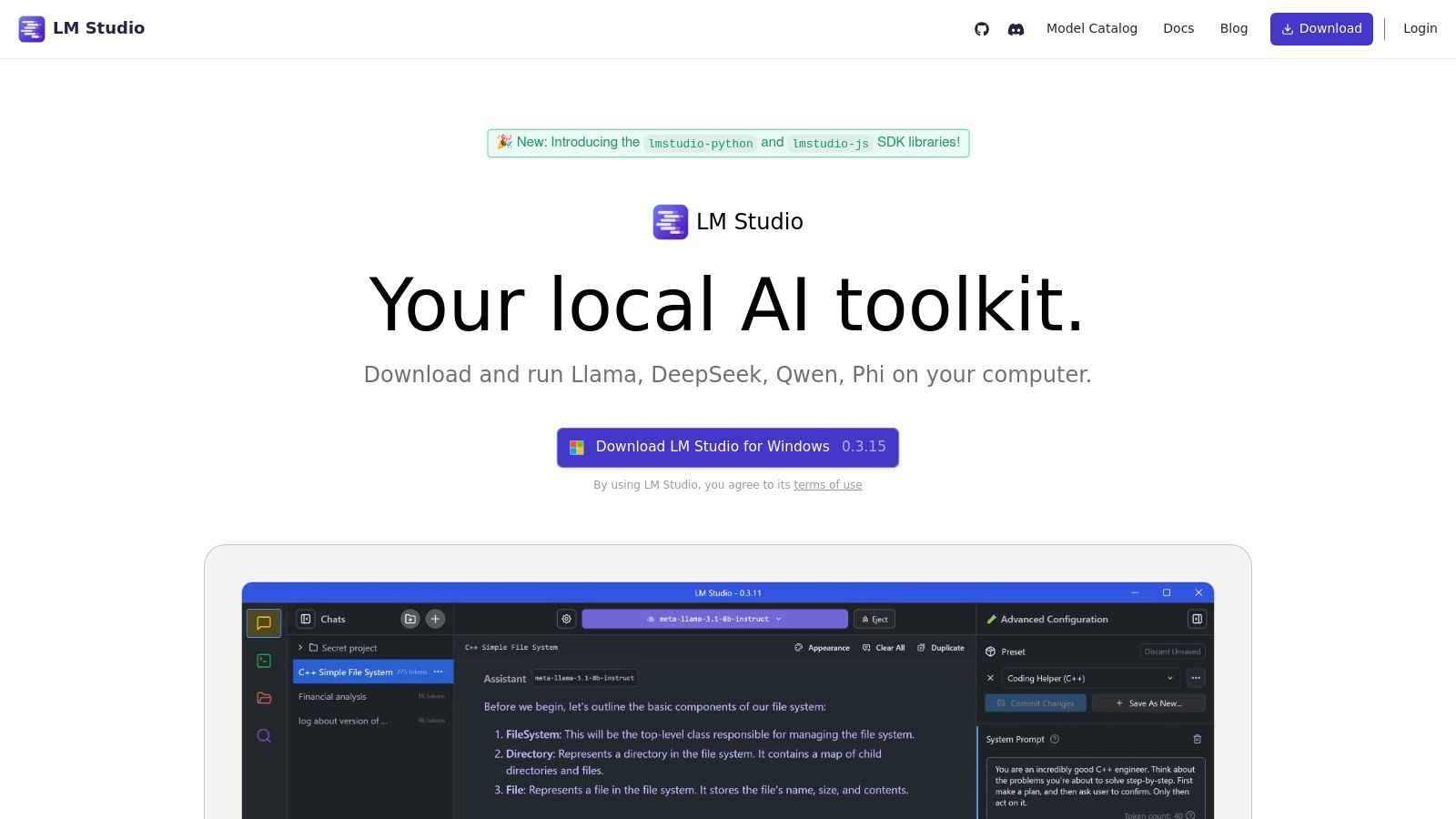

2. LM Studio

LM Studio empowers users to harness the power of local AI models directly on their personal computers. This desktop application simplifies the process of discovering, downloading, and running Large Language Models (LLMs), eliminating the need for complex command-line interfaces and cloud-based services. With its user-friendly graphical interface, LM Studio makes advanced AI accessible to a wider audience, including those without deep technical expertise. This opens up exciting possibilities for various applications, from personal AI assistants to offline content creation and experimentation with cutting-edge AI models. Whether you're an AI professional, a software developer, a tech-savvy entrepreneur, or simply a curious ChatGPT user, LM Studio provides a streamlined pathway to exploring the world of local AI models.

LM Studio earns its place on this list due to its remarkable balance of ease of use and powerful functionality. Its intuitive interface allows users to quickly get started with local AI models, while still offering advanced features for those who want more control. The platform’s built-in chat interface with conversation history makes interacting with LLMs feel natural and familiar, similar to popular commercial AI products. Beyond basic chat functionality, LM Studio provides tools for benchmarking model performance, enabling users to compare different models and optimize their setup for specific tasks. Furthermore, the API server mode allows integration with other applications, expanding the potential use cases of local AI models within existing workflows.
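The API server mode speaks the OpenAI-style chat format, so conversation history is just a list of messages resent with each request. The sketch below assumes LM Studio's server is enabled on its default port (1234); the model identifier is hypothetical and should match whatever model you have loaded.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is enabled on its default port, 1234.
BASE_URL = "http://localhost:1234/v1"


class LocalChat:
    """Keep a conversation history and send it to an OpenAI-style chat endpoint."""

    def __init__(self, model: str, system_prompt: str):
        self.model = model  # identifier of the loaded model (hypothetical here)
        self.messages = [{"role": "system", "content": system_prompt}]

    def build_request(self) -> urllib.request.Request:
        payload = {"model": self.model, "messages": self.messages, "temperature": 0.7}
        return urllib.request.Request(
            f"{BASE_URL}/chat/completions",
            data=json.dumps(payload).encode("utf-8"),
            headers={"Content-Type": "application/json"},
            method="POST",
        )

    def ask(self, question: str) -> str:
        self.messages.append({"role": "user", "content": question})
        with urllib.request.urlopen(self.build_request()) as resp:
            body = json.loads(resp.read().decode("utf-8"))
        answer = body["choices"][0]["message"]["content"]
        # Store the reply so the next question is answered with the full context.
        self.messages.append({"role": "assistant", "content": answer})
        return answer
```

Keeping the history client-side is a deliberate choice: the server stays stateless, and the same class works against any OpenAI-compatible local backend by changing `BASE_URL`.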

Key Features and Benefits:

- Intuitive GUI: Manage and interact with various local AI models with ease, even without extensive technical knowledge. This is a major advantage for users who are new to running LLMs locally.

- Model Discovery: Search for and download open-source models (largely hosted on Hugging Face) directly within the application. This simplifies finding the right model for your needs.

- Built-in Chat Interface: Experience seamless conversations with your local AI models, complete with conversation history for context and continuity.

- Performance Benchmarking: Evaluate and compare the performance of different models to identify the best one for your specific hardware and requirements.

- API Server Mode: Integrate local AI models with other applications and services, unlocking new possibilities for customized AI solutions.

- Privacy Focused: All processing happens locally on your machine, ensuring complete control and privacy over your data.

Pros and Cons:

Pros:

- User-friendly interface, minimizing the technical barrier to entry.

- Enhanced privacy due to local processing.

- Support for a range of inference parameters and settings.

- Regular updates with new features and model compatibility.

Cons:

- Can be more resource-intensive than command-line alternatives.

- Some advanced features require a deeper technical understanding.

- Offers less customization compared to developer-focused tools.

- Performance can be limited by consumer hardware, especially with larger models.

Technical Requirements and Pricing:

Specific requirements vary with the models you choose, but LM Studio generally needs a modern computer with sufficient RAM and storage. The application is free to download; check the official website for current licensing and pricing details.

Implementation/Setup Tips:

- Download the latest version of LM Studio from the official website (https://lmstudio.ai/).

- Browse the available pre-trained models with the built-in model search.

- Start with smaller models to familiarize yourself with the platform before experimenting with more resource-intensive ones.

- Refer to the LM Studio documentation for detailed instructions and troubleshooting tips.

LM Studio represents a significant step forward in making local AI models more accessible. Its user-friendly interface and robust feature set make it a valuable tool for anyone interested in exploring the potential of AI on their own terms, offline and privately. It serves as a strong alternative to cloud-based solutions, empowering users with greater control and flexibility in their AI endeavors.

3. LocalAI

LocalAI is a powerful open-source solution for running various AI models, including Large Language Models (LLMs), image generation tools, and audio processing software, directly on your local hardware. It functions as a drop-in replacement for the OpenAI API, offering a compatible interface that simplifies the transition from cloud-based AI services to local inference. This means you can leverage the power of AI without relying on external servers, potentially saving on costs and enhancing data privacy. This makes it a compelling choice for developers and businesses seeking more control over their AI workflows. For those already familiar with the OpenAI API, integrating LocalAI is remarkably straightforward.

One of LocalAI's key strengths is its support for a diverse range of model types. Whether you're working with text-based models like LLMs for chatbot development, image generation models for creative content, or audio processing models for speech recognition, LocalAI can handle it. This versatility makes it useful for a wide range of applications, from building custom chatbots and generating artwork to transcribing and analyzing audio. Its built-in model management and download features streamline model selection and integration, and Docker support makes deployment straightforward across different systems and environments.

For developers accustomed to the OpenAI API, the switch to LocalAI is almost seamless thanks to its API compatibility. This eliminates the need for extensive code rewrites and allows existing applications to function with minimal adjustments. The benefits extend to cost savings as well, as running models locally eliminates ongoing API usage fees and potential data transfer costs. Perhaps most importantly, LocalAI offers complete data privacy. By processing data on your local machine, you retain full control and avoid sending sensitive information to external servers. This is crucial for applications dealing with confidential or proprietary data.
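The "drop-in" claim means client code only needs a different base URL. In this sketch the same function builds an OpenAI-style chat request for either backend; LocalAI's port 8080 is its usual default, and `LLM_BASE_URL` and `my-local-model` are hypothetical names.

```python
import json
import os
import urllib.request

# Hosted OpenAI endpoint vs. a LocalAI instance (port 8080 is an assumed default).
OPENAI_BASE = "https://api.openai.com/v1"
LOCALAI_BASE = "http://localhost:8080/v1"


def chat_request(base_url: str, model: str, prompt: str,
                 api_key: str = "") -> urllib.request.Request:
    """Build an OpenAI-style chat request; only base_url differs between backends."""
    headers = {"Content-Type": "application/json"}
    if api_key:  # a default LocalAI setup does not require a key
        headers["Authorization"] = f"Bearer {api_key}"
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


# Switching backends becomes a configuration change rather than a code change.
base_url = os.environ.get("LLM_BASE_URL", LOCALAI_BASE)
request = chat_request(base_url, model="my-local-model", prompt="Summarize this report.")
```

Reading the base URL from configuration lets the same application run against LocalAI on a private machine and against a hosted API elsewhere.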

While LocalAI offers significant advantages, it’s essential to be aware of its potential drawbacks. Setting up LocalAI requires more technical expertise compared to user-friendly cloud-based alternatives. Performance is directly linked to your local hardware capabilities, meaning a powerful machine is necessary for optimal results. While it strives for complete compatibility, some less common OpenAI API features might not be fully supported. Finally, as an actively developing project, the documentation can sometimes be technical and fragmented, requiring a bit more effort to navigate.

Despite these challenges, LocalAI is a highly valuable tool for those prioritizing data privacy, cost efficiency, and control over their AI models. As a self-hosted, OpenAI-compatible backend, it gives AI professionals and developers a robust and flexible platform for a wide range of AI tasks.

4. Text Generation WebUI

For those seeking deep control over their local AI models, Text Generation WebUI (often referred to as oobabooga) stands out as a powerful and versatile option. This comprehensive web interface allows you to run and experiment with a multitude of text generation models right on your own hardware. It's become a favorite within the open-source AI community for its extensive customization options, training capabilities, and support for a wide range of model architectures, making it a top choice for serious local AI model exploration. This positions Text Generation WebUI as an invaluable tool for anyone working with local AI models, from researchers pushing the boundaries of LLMs to developers integrating AI into their applications.

Text Generation WebUI excels in providing a highly customizable environment. You can fine-tune parameters for text generation, experiment with different model architectures (like Llama 2, GPT-J, and many others) and formats, and even train or fine-tune existing models to your specific needs. It offers built-in tools for model evaluation and comparison, empowering you to optimize performance. Advanced features like character and chat templates open doors to creative applications such as role-playing scenarios and interactive storytelling. The extensible nature of the platform, via its extension system, allows developers to continually add and enhance functionality. For example, you could integrate tools for specific tasks like code generation, translation, or content creation. This makes Text Generation WebUI an exceptionally adaptable platform for a wide array of local AI model use cases.
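Three of the most common generation knobs, temperature, top-k, and top-p, are easier to tune once you see what they do. The sketch below applies them to a toy score table; real backends work on logit arrays over the full vocabulary and may order or combine these filters differently, so treat it as an illustration of the idea rather than any tool's implementation.

```python
import math
import random
from typing import Dict, Optional


def sample_next_token(logits: Dict[str, float], temperature: float = 0.8,
                      top_k: int = 40, top_p: float = 0.95,
                      rng: Optional[random.Random] = None) -> str:
    """Pick one token from raw scores with temperature, top-k and top-p filtering."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    if rng is None:
        rng = random.Random()
    # Temperature: dividing the scores sharpens (<1) or flattens (>1) the distribution.
    scaled = {tok: score / temperature for tok, score in logits.items()}
    # Softmax, subtracting the max score for numerical stability.
    peak = max(scaled.values())
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    ranked = sorted(((tok, e / total) for tok, e in exps.items()),
                    key=lambda pair: pair[1], reverse=True)
    # Top-k: keep only the k most likely tokens.
    ranked = ranked[:top_k]
    # Top-p (nucleus): keep the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for tok, p in ranked:
        kept.append((tok, p))
        cumulative += p
        if cumulative >= top_p:
            break
    # random.choices treats weights as relative, so the survivors need no renormalizing.
    tokens = [tok for tok, _ in kept]
    weights = [p for _, p in kept]
    return rng.choices(tokens, weights=weights, k=1)[0]
```

Setting `top_k=1` makes generation deterministic (always the most likely token), while raising `temperature` and `top_p` lets less likely tokens through for more varied output.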

Compared to simpler alternatives, Text Generation WebUI offers unparalleled flexibility. While tools like GPT4All offer ease of use, they often lack the granular control and advanced features that Text Generation WebUI provides. This makes it particularly appealing to developers and AI professionals seeking deep customization and experimentation. However, this power comes at a cost. The interface, packed with options, can be overwhelming for beginners. The setup process requires some technical expertise, including navigating dependencies and potential troubleshooting. Moreover, fully utilizing the features, particularly training and running larger models, demands significant system resources (RAM, GPU).

Key Features and Benefits:

- Extensive Parameter Customization: Tailor text generation to your exact requirements.

- Multiple Model Architectures and Formats: Experiment with diverse models and easily switch between them.

- Training and Fine-tuning: Adapt existing models to specialized tasks and datasets.

- Character and Chat Templates: Create engaging interactive experiences and role-playing scenarios.

- Extension System: Expand functionality with community-developed extensions.

- Built-in Tools for Model Evaluation: Compare and optimize model performance.

- Support for Optimization Techniques: Leverage techniques like 4-bit quantization to reduce resource usage.

Pros:

- Extremely flexible and configurable.

- Strong community support and active development.

- Comprehensive tools for model analysis and comparison.

- Supports various optimization techniques.

Cons:

- Steeper learning curve than simpler alternatives.

- Interface can feel complex for beginners.

- Setup can require technical knowledge.

- Resource-intensive for advanced features and large models.

Website: https://github.com/oobabooga/text-generation-webui

Implementation Tips:

- Start with the official documentation: The GitHub repository provides detailed instructions for installation and setup.

- Join the community: The Text Generation WebUI community is active and helpful; don't hesitate to ask questions.

- Experiment with smaller models first: Get comfortable with the interface and workflow before tackling resource-intensive models.

- Consider using a dedicated GPU: While not strictly required, a GPU significantly accelerates performance, especially for larger models.

Text Generation WebUI stands out among local AI models for its comprehensive feature set, extensive customization options, and thriving community. While the initial learning curve might be steeper than other alternatives, the power and flexibility it offers make it a rewarding tool for anyone serious about working with local LLMs.

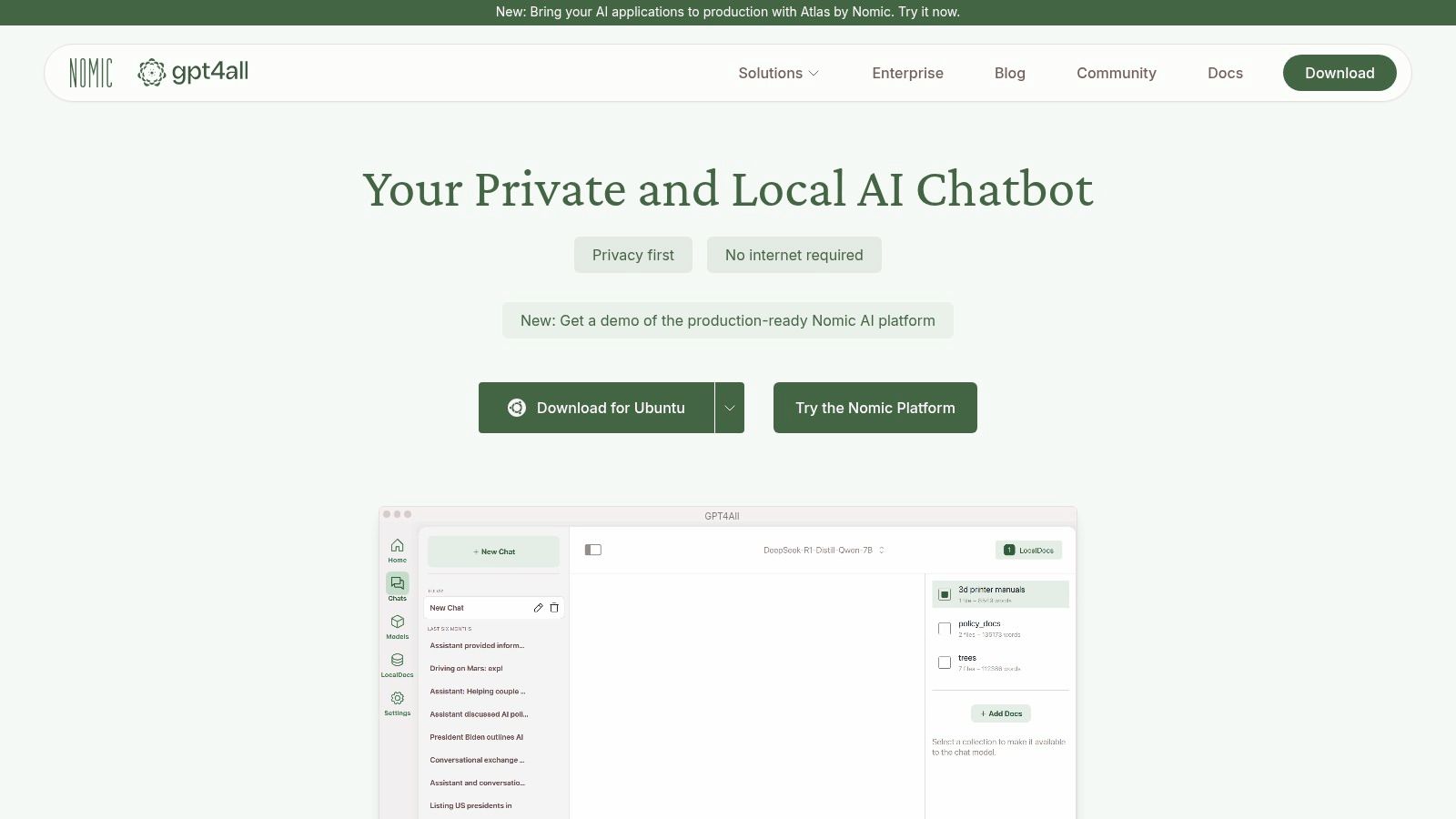

5. GPT4All

GPT4All offers a compelling ecosystem for running local Large Language Models (LLMs) directly on your personal computer, eliminating the need for powerful hardware or constant internet connectivity. This makes it a great option for those seeking a private and accessible entry point into the world of local AI models. It’s particularly well-suited for offline use cases and rapid prototyping without incurring cloud computing costs. Imagine having the power of an LLM readily available, even when you're off the grid.

The platform offers a user-friendly desktop application with a chat interface, simplifying interaction with the models. This intuitive design makes it accessible to a broad audience, including those without extensive technical expertise. Beyond casual chatting, GPT4All provides a programming API for Python, C++, and other languages, enabling developers to integrate these local models into their own applications and build personalized AI-powered tools. Its local Retrieval-Augmented Generation (RAG) capabilities further enhance its utility: by connecting a model to your own documents and knowledge bases, you can create specialized AI assistants grounded in your data.
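The core of local RAG is a retrieval step: find the snippets most relevant to a question and place them in the prompt. This sketch ranks snippets by word-count cosine similarity to keep it dependency-free; real RAG systems, GPT4All's included, typically use embedding models instead, so this illustrates the idea rather than GPT4All's implementation.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_rag_prompt(question: str, documents: list, top_n: int = 2) -> str:
    """Rank local snippets by similarity to the question and prepend the best ones."""
    q = Counter(tokenize(question))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    context = "\n".join(f"- {doc}" for doc in ranked[:top_n])
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

The resulting prompt is then sent to the local model, so the answer draws on your documents without them ever leaving the machine.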

One of GPT4All's significant advantages is its ease of installation and minimal setup requirements. The platform provides a built-in model downloader with a curated collection, streamlining the process of getting started. These models are optimized to run efficiently on CPUs, delivering reasonable performance even on standard consumer-grade hardware. This commitment to accessibility democratizes access to local AI models, making them available to a much wider range of users. Furthermore, GPT4All operates completely offline, ensuring your data remains private and secure, a crucial consideration for sensitive applications.

However, it's important to acknowledge some limitations. While optimized for CPU usage, the models generally offer less power than their larger cloud-based counterparts. Customization options are also somewhat limited compared to more developer-focused frameworks. Additionally, while GPT4All performs admirably on many tasks, it may struggle with highly complex or resource-intensive operations. For example, running extensive data analysis or generating extremely long and nuanced texts might push the limits of its capabilities.

Despite these limitations, GPT4All remains a valuable tool for exploring and utilizing local AI models. Its accessibility, offline functionality, and ease of use make it an attractive option for developers, tech-savvy entrepreneurs, and even casual users interested in experiencing the power of AI on their own machines. For individuals seeking a private, offline, and readily available LLM solution, GPT4All deserves serious consideration in the landscape of local AI models. You can explore the platform and download the application from their website: https://gpt4all.io/.

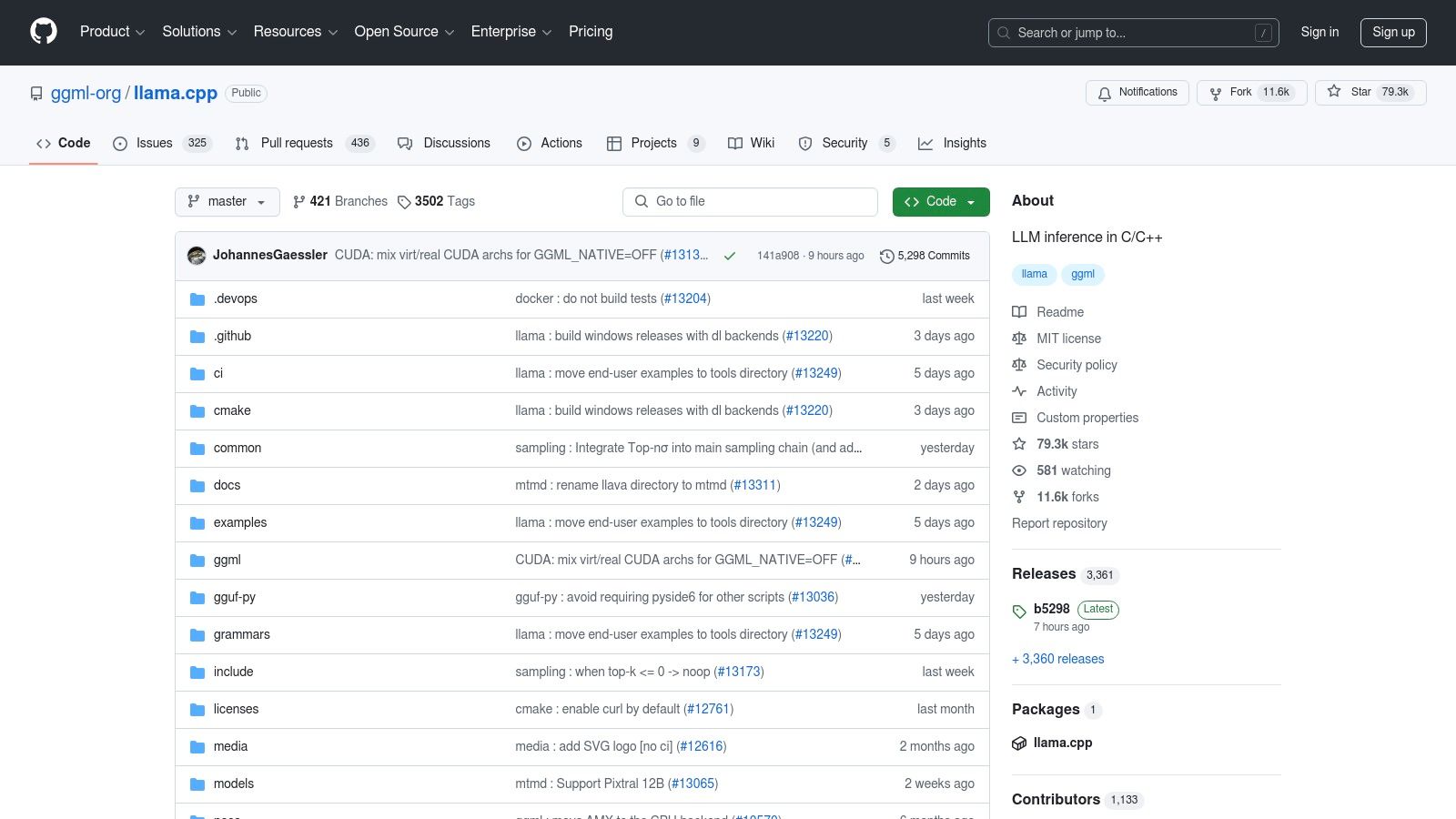

6. llama.cpp

llama.cpp stands out as a cornerstone in the world of local AI models. It provides highly optimized C/C++ implementations of large language models (LLMs) like LLaMA, Falcon, and Mistral, designed to run efficiently on both CPUs and GPUs. This isn't a user-friendly application with a graphical interface; instead, think of it as a powerful engine that drives many other local AI solutions. Its primary focus is on maximizing performance, enabling you to run large language models on consumer-grade hardware using techniques like quantization, which reduces the model's size and computational requirements. This is crucial for making powerful AI accessible to a wider audience.

For developers and AI professionals who want to run LLMs locally, llama.cpp is a key building block: it makes it practical to run capable models on an ordinary laptop or a low-power server. Support for several quantization levels (such as 4-bit, 5-bit, and 8-bit), cross-platform builds, and CPU and GPU acceleration, including Metal on Apple Silicon, make it a highly versatile tool. It also offers a server mode with an OpenAI-compatible API, so you can integrate it into existing workflows. This lets developers and tech-savvy entrepreneurs experiment with and deploy LLMs for a wide range of applications.
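Quantization stores weights in blocks, each with a shared scale and small integers. The sketch below shows simplified symmetric 4-bit "absmax" quantization of one block; llama.cpp's actual formats (Q4_0, Q4_K, and others) differ in their details, so this only illustrates the principle.

```python
def quantize_block_4bit(weights: list) -> tuple:
    """Symmetric absmax quantization of one block to 4-bit integers in [-8, 7]."""
    absmax = max(abs(w) for w in weights)
    scale = absmax / 7 if absmax else 1.0  # one float scale stored per block
    q = [max(-8, min(7, round(w / scale))) for w in weights]
    return scale, q


def dequantize_block(scale: float, q: list) -> list:
    """Recover approximate weights; each error is at most scale / 2."""
    return [scale * v for v in q]
```

Assuming 32-weight blocks with a 16-bit scale, each block takes 32 × 4 bits = 16 bytes plus 2 bytes for the scale, about 4.5 bits per weight instead of 16 for fp16, at the cost of a small rounding error.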

One of llama.cpp's major strengths is its industry-leading performance optimization. This allows for efficient inference even on resource-constrained hardware. Its lightweight nature and minimal dependencies further contribute to its speed and efficiency. The project also benefits from extremely active development, ensuring ongoing improvements and support for new models and hardware. Indeed, llama.cpp serves as the foundation for many other local AI projects, highlighting its importance within the community.

However, it's important to be aware of its limitations. Being command-line based, llama.cpp requires technical knowledge to use effectively. The documentation primarily targets developers, and manual compilation is often necessary for optimal performance. This might pose a challenge for non-programmers or those new to the command-line interface.

Pros:

- Industry-leading performance optimization

- Very lightweight with minimal dependencies

- Extremely active development

- Foundation for many other local AI projects

- Cross-platform compatibility

Cons:

- Command-line based, requiring technical knowledge

- Documentation geared towards developers

- Manual compilation often needed for best performance

- No graphical user interface

Website: https://github.com/ggerganov/llama.cpp

This project's emphasis on performance, flexibility, and open-source nature makes it a valuable asset for anyone working with local AI models. While it requires some technical proficiency, the benefits of running large language models locally make llama.cpp a worthwhile tool for serious AI practitioners.

7. Jan AI

Jan AI stands out as a polished and user-friendly open-source platform for running local AI models. It simplifies the process of downloading, running, and interacting with various open-source models, making local AI more accessible to both developers and non-technical users. This platform provides a complete environment, incorporating features like document processing and an AI assistant interface reminiscent of commercial offerings, all while keeping your data private on your local machine. For those looking to leverage the power of local AI models without the complexities typically associated with setup and configuration, Jan AI offers a compelling solution.

With Jan AI, you can analyze large documents and extract key insights without uploading sensitive data to the cloud. Its built-in document analysis and question-answering features run locally, so your content stays on your machine. The chat interface supports multimodal input and works much like popular commercial AI assistants. This makes Jan AI a good fit for tasks ranging from personal knowledge management to sensitive data analysis. For example, researchers can analyze research papers without sending them to third-party services, and entrepreneurs can run market research and competitive analysis while keeping their work confidential.
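Local document Q&A commonly follows a retrieve-then-ask pattern. The document is split into chunks, the chunks most relevant to the question are found, and only those chunks are passed to the model. The Python sketch below shows that general pattern. It is not Jan AI's actual pipeline, and it ranks chunks by simple word overlap where real tools typically use embedding similarity. The chunk size, `k`, and the prompt wording are assumptions.

```python
# Generic retrieve-then-ask sketch (NOT Jan AI's implementation).
# Assumption: word overlap stands in for the embedding similarity real tools use.
import re


def chunk_text(text: str, chunk_words: int = 50) -> list[str]:
    """Split a document into chunks of roughly chunk_words words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_words]) for i in range(0, len(words), chunk_words)]


def tokenize(text: str) -> set[str]:
    """Lowercase alphanumeric word set used for overlap scoring."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top_chunks(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Rank chunks by how many question words they share; keep the best k."""
    q = tokenize(question)
    ranked = sorted(chunks, key=lambda c: len(q & tokenize(c)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, document: str, k: int = 2) -> str:
    """Assemble a prompt that gives the local model only the relevant context."""
    context = "\n---\n".join(top_chunks(question, chunk_text(document), k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}\nAnswer:"
```

You would then send the resulting prompt to the local model, so neither the document nor the question leaves the machine.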

One of Jan AI's key strengths is its modern, intuitive user interface. Other local AI solutions may require command-line skills or complex configuration, but Jan AI offers a streamlined experience. Its built-in model explorer and downloader make it easy to get started with different models. Regular updates bring new models and features. Projects like PrivateGPT offer similar document Q&A functionality, but Jan AI stands apart with broader model support and a more polished user experience.

Jan AI has clear advantages, but it is a relatively new project with a smaller community than more established tools. That means fewer community resources and possibly less extensive documentation. It may also use more resources than specialized, lightweight tools. In addition, advanced configuration options are currently limited, and some features are still under development.

Key Features & Benefits:

- Modern, Intuitive Interface: Simplifies the complexities of local AI model management.

- Built-in Model Explorer & Downloader: Easy access to a variety of open-source models.

- Document Analysis & Q&A: Extract insights from documents privately and securely.

- Chat Interface with Multimodal Support: Interact with your models conversationally.

- Complete Privacy: All processing occurs locally on your machine.

- No Account or Internet Required (after setup): Work offline and independently.

Pros:

- Polished user experience.

- Regular updates and new features.

- Complete data privacy.

- Seamless document integration.

Cons:

- Newer project with a smaller community.

- Potentially higher resource consumption.

- Limited advanced configuration.

- Some features still in development.

Website: https://jan.ai/

Jan AI earns its place on this list by providing a user-friendly gateway to the world of local AI models. It empowers users to harness the power of AI while maintaining complete control and privacy over their data. Its focus on simplicity and accessibility makes it a valuable tool for anyone interested in exploring the potential of local AI, from seasoned developers to curious beginners.

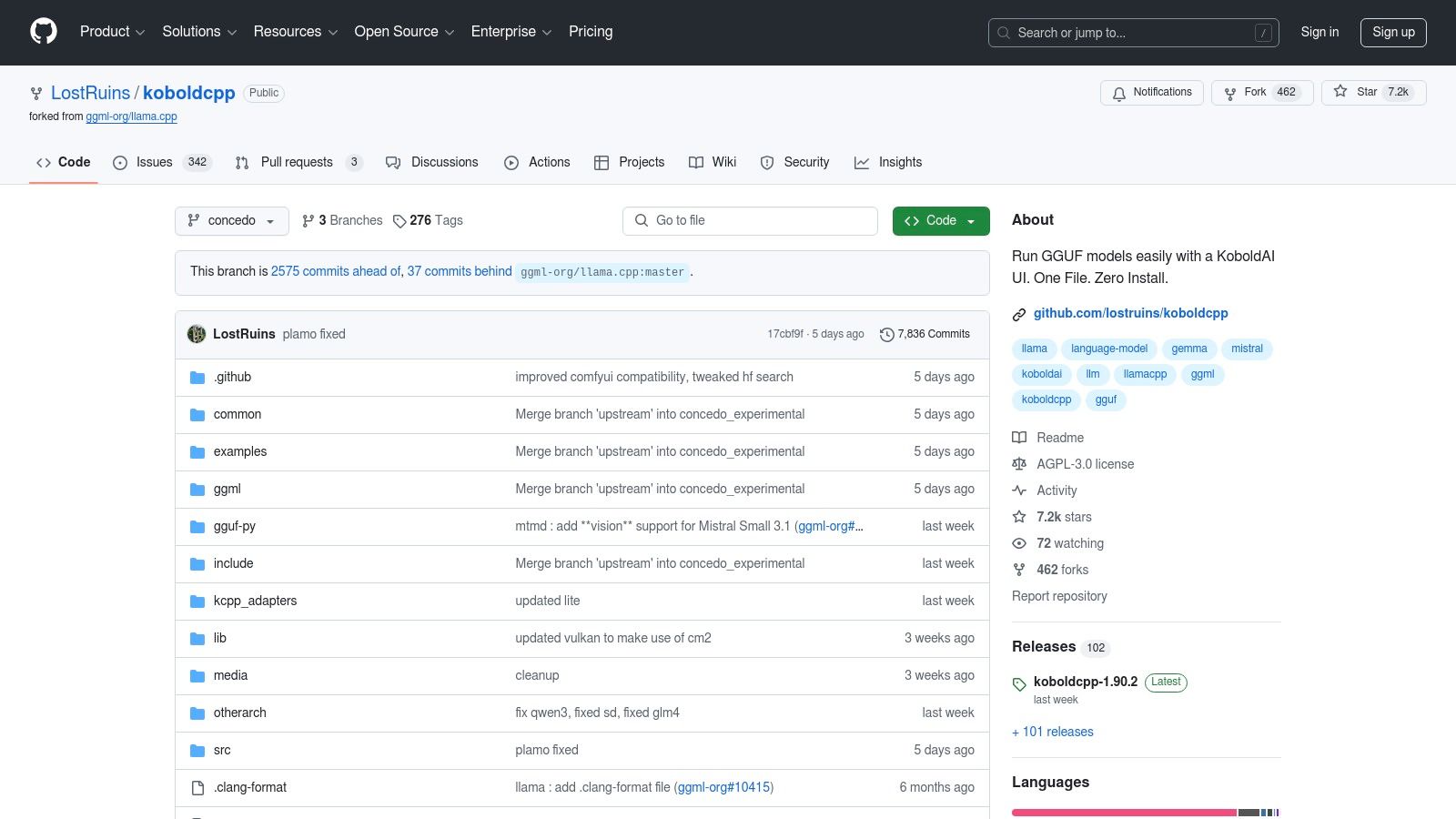

8. Koboldcpp

Koboldcpp stands out among local AI tools because it is built specifically for creative writing, interactive storytelling, and role-playing. General-purpose frameworks try to cover every task, but Koboldcpp focuses on narrative text generation. That makes it a strong choice for writers, game developers, and anyone interested in AI-powered storytelling. It builds on llama.cpp and adds a user-friendly web interface that makes it easier to create complex, engaging narratives. It is popular in the AI storytelling and creative writing communities, which reflects how well its specialized features work.

With Koboldcpp, you can craft intricate interactive fiction, develop dynamic character interactions, or generate other kinds of creative text. Its advanced context management helps keep stories coherent and consistent, and customizable AI personalities and characters bring your narratives to life. A built-in memory and world info system keeps key story details in play, so your story worlds can grow richer over time. Its low resource mode also makes it usable on older computers. This focus on narrative generation makes it a valuable addition to the toolkit of any creative writer or game developer working with local AI models.
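A memory and world info system can be sketched in a few lines. Memory always sits at the top of the context. A world info entry is added only when one of its trigger keys appears in recent story text. The oldest story passages are dropped once a token budget is reached. This is a simplified Python illustration of the concept, not Koboldcpp's implementation. The one-token-per-word estimate, the `scan_depth` of three passages, and all names are assumptions.

```python
# Simplified sketch of memory + keyword-triggered world info + context trimming.
# NOT Koboldcpp's implementation; token counting is a crude word-count stand-in.
from dataclasses import dataclass


@dataclass
class WorldInfoEntry:
    keys: list[str]  # trigger words that activate this entry
    content: str     # lore injected into the context when triggered


def approx_tokens(text: str) -> int:
    """Crude assumption: one token per whitespace-separated word."""
    return len(text.split())


def build_context(memory: str, world_info: list[WorldInfoEntry],
                  story: list[str], budget: int, scan_depth: int = 3) -> str:
    """Memory first, then triggered world info, then as much recent story as fits."""
    recent = " ".join(story[-scan_depth:]).lower()
    triggered = [e.content for e in world_info
                 if any(k.lower() in recent for k in e.keys)]
    header = [memory] + triggered  # always kept, even if it exceeds the budget
    used = sum(approx_tokens(t) for t in header)
    kept: list[str] = []
    for passage in reversed(story):  # walk from newest to oldest
        cost = approx_tokens(passage)
        if used + cost > budget:
            break  # older passages no longer fit
        kept.append(passage)
        used += cost
    return "\n".join(header + list(reversed(kept)))
```

Because entries are triggered by keywords, lore about a dragon only takes up context space when the dragon actually appears in the scene. That leaves more room for the story itself.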

For those seeking a local AI solution tailored to creative writing, Koboldcpp presents a compelling option. While general-purpose frameworks may offer broader functionality, Koboldcpp's specialization in narrative text generation provides a distinct advantage for specific use cases. Features like specialized interfaces, advanced context management, and customizable AI personalities offer powerful tools for crafting compelling stories.

Pros:

- Specifically optimized for creative writing use cases: Koboldcpp's strength lies in its tailored approach to narrative generation, offering features directly relevant to writers and storytellers.

- Strong community around interactive fiction: A vibrant community provides support, resources, and shared experiences, making it easier to learn and utilize the tool effectively.

- Works well with smaller models on limited hardware: The low resource mode enables users with less powerful hardware to experience the benefits of local AI-powered storytelling.

- Extensive storytelling-specific configuration options: Fine-tune the AI's behavior and output to match your specific creative vision.

Cons:

- Less suitable for general-purpose AI tasks: Koboldcpp's specialization limits its applicability to tasks outside of creative writing and storytelling.

- More niche than general-purpose frameworks: Its focused feature set may not cater to the diverse needs of users seeking broader AI functionalities.

- Setup can be complex for beginners: Navigating the initial setup and configuration might pose a challenge for those new to local AI models.

- Limited documentation outside the core community: Finding comprehensive documentation beyond the core community can be difficult.

Koboldcpp is free, open-source, and available on GitHub. Setting it up and configuring it takes some technical skill, especially if you are new to command-line interfaces or to building software from source. The active community and its resources can help you get past these hurdles.

Website: https://github.com/LostRuins/koboldcpp

Local AI Models Feature Comparison

| Product | Core Features / Capabilities | User Experience ★ | Privacy & Control 👥 | Unique Selling Points ✨ | Price & Value 💰 |
|---|---|---|---|---|---|
| Ollama | Local LLMs (Llama 2, Mistral), CLI + JSON API | ★★★★ | Full local, no cloud data | Easy one-line model install, cross-platform | Free, open-source 🏆 |
| LM Studio | GUI for local models, model marketplace | ★★★★★ | All local processing | User-friendly, built-in chat & benchmarking | Free with regular updates |
| LocalAI | OpenAI API compatible local server, multi-modal | ★★★★ | Fully offline, local API | Drop-in OpenAI replacement, Docker support | Free, open-source |
| Text Generation WebUI | Web UI for extensive customization & training | ★★★ | Complete local use | Training & fine-tuning, extensions system | Free, open-source |
| GPT4All | Desktop chat app, cross-platform, RAG support | ★★★★ | Offline operation | Optimized for consumer hardware CPUs | Free, open-source |
| llama.cpp | Highly optimized CPU/GPU inference engine | ★★★★ | Full local, no GUI | Performance-focused, quantization support | Free, open-source |
| Jan AI | Polished UI, document QA, multimodal chat | ★★★★★ | Fully local | Seamless document integration, intuitive UI | Free, open-source |
| Koboldcpp | Narrative AI writing, storytelling focused | ★★★★ | Local only | Creative writing features, memory systems | Free, open-source |

Embrace the Future of AI: Choosing the Right Local Model

Local AI models open up many possibilities, from running powerful language models on your own computer to experimenting with cutting-edge AI offline. This article covered general-purpose model runners like Ollama, LM Studio, and LocalAI, and the highly customizable Text Generation WebUI. It also covered desktop chat apps like GPT4All and Jan AI, the performance-focused llama.cpp engine, and Koboldcpp, which specializes in creative writing and storytelling. Each tool has its own strengths and suits different needs and levels of technical expertise.

Key takeaways include the importance of understanding your hardware limitations, as local models can be resource-intensive. You should also consider the specific tasks you want to perform, whether it's text generation, code completion, or other AI-powered functionalities. Choosing the right local AI model depends on balancing ease of use with performance and your desired level of control.
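A quick back-of-the-envelope check helps here. Model weights need roughly (parameters × bits per weight ÷ 8) bytes, plus extra memory for the context cache and runtime. The Python sketch below applies that rule. The 20% overhead factor is an assumption, and real memory use varies with context length and the tool you run.

```python
# Rough memory estimate for running a local model.
# Assumptions: decimal gigabytes (1 GB = 1e9 bytes) and a flat 20% overhead
# for context cache and runtime; real usage varies by tool and context length.


def estimate_memory_gb(params_billion: float, bits_per_weight: float,
                       overhead_fraction: float = 0.2) -> float:
    """Weights take params * bits / 8 bytes; add a fractional overhead on top."""
    weights_gb = params_billion * bits_per_weight / 8
    return weights_gb * (1 + overhead_fraction)


def fits(params_billion: float, bits_per_weight: float, available_gb: float,
         overhead_fraction: float = 0.2) -> bool:
    """True if the estimated footprint fits in the available RAM/VRAM."""
    return estimate_memory_gb(params_billion, bits_per_weight, overhead_fraction) <= available_gb
```

For example, a 7-billion-parameter model needs about 14 GB for its weights at 16 bits but only about 3.9 GB at 4.5 bits per weight. That is why quantized models can run on ordinary laptops.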

For those interested in delving deeper into the technical aspects and latest advancements in local AI models, resources like Open Deep Research from AnotherWrapper provide valuable insights and keep you up-to-date with this rapidly evolving field.

As you start your local AI journey, choose a tool that matches your goals and technical skills. Weigh factors like hardware requirements, community support, and each tool's intended use case. If you need to manage several local and cloud-based models efficiently, consider adding a platform like MultitaskAI to your workflow.

The future of AI is increasingly decentralized, and local AI models empower you to participate directly in this exciting evolution. By choosing the right tools and embracing continuous learning, you can unlock the full potential of AI and shape its future applications.