2025 LLM Comparison: 7 Top AI Models Reviewed

Discover the best AI models in our 2025 LLM comparison. Find out which LLMs stand out and make an informed choice today!

Decoding the LLM Landscape: A 2025 Perspective

Choosing the right Large Language Model (LLM) is critical for success in many applications, from chatbots to content generation. This LLM comparison helps AI professionals, developers, and entrepreneurs select the best tool for their needs. Quickly compare the strengths and weaknesses of the top 7 LLMs of late 2025, including MultitaskAI, GPT-4, Claude 3 Opus, Gemini Pro, Llama 3, Mistral Large, and Claude 3 Sonnet. Discover which LLM best addresses your project requirements and unlock the power of AI.

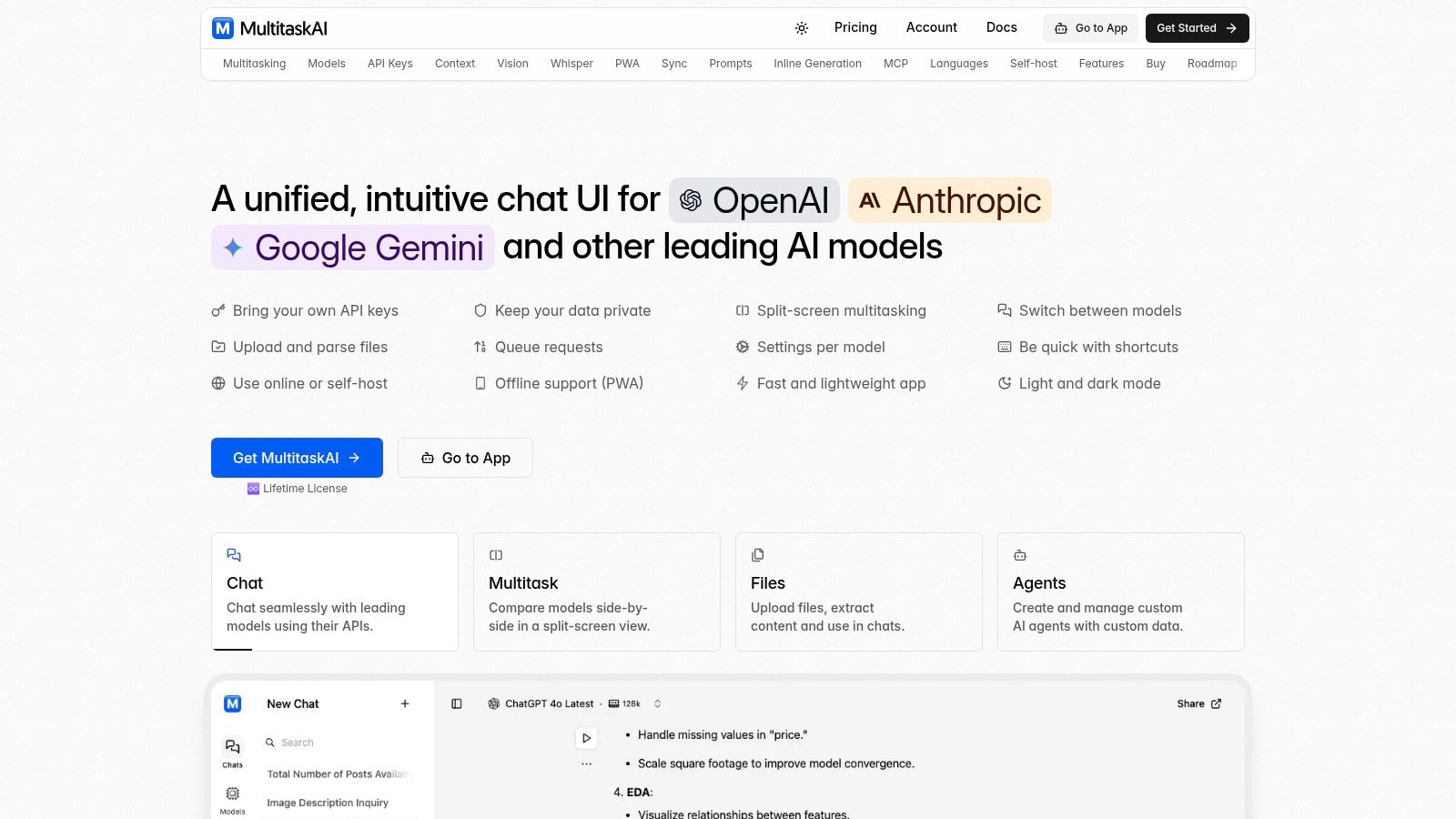

1. MultitaskAI

MultitaskAI stands out in the crowded field of LLM comparison tools by offering a privacy-focused, productivity-oriented experience. It acts as a central hub, connecting you to large language models (LLMs) from providers such as OpenAI, Anthropic, and Google (Gemini), all within a single browser-based interface. You can run the same prompt through different models simultaneously and observe the variations in output, a crucial feature for anyone serious about understanding the strengths of different AI ecosystems. Imagine comparing the creative writing of Anthropic's Claude with the coding ability of OpenAI's GPT-4, side by side and in real time: MultitaskAI makes this possible.
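To make the side-by-side idea concrete, here is a minimal Python sketch of sending one prompt to several models at once and collecting the outputs by name. It is not MultitaskAI's implementation; `fake_model_a`, `fake_model_b`, and `compare_models` are hypothetical names, and the two fake functions stand in for real provider SDK calls made with your own API keys.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


# Hypothetical stand-ins for real provider clients. In practice each function
# would call a provider's SDK using your own API key.
def fake_model_a(prompt: str) -> str:
    return f"[model-a] {prompt.upper()}"


def fake_model_b(prompt: str) -> str:
    return f"[model-b] {prompt[::-1]}"


def compare_models(prompt: str, models: Dict[str, Callable[[str], str]]) -> Dict[str, str]:
    """Send one prompt to every model concurrently and collect outputs by name."""
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        # Submit all requests first so they run in parallel, then wait for each.
        futures = {name: pool.submit(fn, prompt) for name, fn in models.items()}
        return {name: future.result() for name, future in futures.items()}


if __name__ == "__main__":
    results = compare_models("hello", {"model-a": fake_model_a, "model-b": fake_model_b})
    for name, output in results.items():
        print(f"{name}: {output}")
```

Threads suit this kind of work because each request spends most of its time waiting on the network, so the total wait is roughly that of the slowest model rather than the sum of all of them.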

For developers, software engineers, and AI professionals engaged in LLM comparison, MultitaskAI becomes an indispensable tool. It facilitates rapid prototyping and testing of different models for specific tasks, helping identify the optimal LLM for a given project. Digital marketers exploring the potential of AI-generated content can leverage MultitaskAI to compare outputs across various LLMs, fine-tuning their strategies and maximizing campaign effectiveness. Tech-savvy entrepreneurs and indie hackers can harness its power to streamline workflows, automate tasks, and build AI-powered applications. Even ChatGPT and other LLM users will appreciate the centralized access and enhanced control that MultitaskAI provides.

One of the key differentiators of MultitaskAI is its commitment to user privacy. Unlike platforms that route your data through their own servers, MultitaskAI requires you to use your own API keys. This means your data remains entirely under your control, addressing a major concern for many users in the AI space. While requiring users to manage their own keys might pose a slight learning curve for non-developers initially, the added privacy and security are well worth the effort. The platform offers robust encrypted API key storage, further bolstering security.

Beyond basic chat functionality, MultitaskAI offers a range of productivity features. Split-screen conversations and background response generation let you work on several chats at once. File upload and parsing (for example, analyzing contracts or research papers with AI assistance) extend its usefulness. Custom AI agents let you tailor the system to your needs, while dynamic, variable-based prompts enable complex and flexible interactions with the LLMs. Its Progressive Web App (PWA) gives you offline access to the app and your saved conversations, although sending a new prompt still requires a connection to the model provider's API. The platform supports 29 languages, catering to a global user base.
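The idea behind variable-based prompts can be sketched in a few lines of Python. This uses the standard library's `string.Template` purely as an illustration; the `$name` placeholder syntax, the `render_prompt` helper, and the `SUMMARY_PROMPT` template are assumptions and not MultitaskAI's own prompt format.

```python
from string import Template


def render_prompt(template: str, variables: dict) -> str:
    """Fill $placeholders in a prompt template; raises KeyError if one is missing."""
    return Template(template).substitute(variables)


# A reusable prompt with four variables (hypothetical example).
SUMMARY_PROMPT = (
    "Summarize the following $doc_type in $language, "
    "using at most $max_words words:\n$text"
)

if __name__ == "__main__":
    prompt = render_prompt(
        SUMMARY_PROMPT,
        {
            "doc_type": "contract",
            "language": "English",
            "max_words": 100,
            "text": "The parties agree to the terms set out below.",
        },
    )
    print(prompt)
```

Failing loudly on a missing variable is a deliberate choice here: a prompt sent with an unfilled placeholder tends to produce confusing output that is harder to diagnose than an immediate error.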

MultitaskAI’s pricing model is straightforward. A one-time lifetime license (€99 during the special launch, normally €149) grants access on up to 5 devices, including all future updates and features. Beyond that, you pay only for actual API usage at each provider’s rates (e.g., OpenAI, Anthropic, Google), with no hidden fees.

Pros:

- True multitasking with split-screen conversations and background response generation boosts productivity.

- Full privacy and data control by connecting your own API keys with no third-party data handling.

- Versatile features including file parsing, custom AI agents, dynamic prompts, offline PWA support, and multi-language interface.

- Flexible deployment: use the hosted app or self-host anywhere with static hosting, including popular platforms and FTP.

- Cost-effective one-time lifetime license with no hidden fees, plus pay only for API usage; includes 5 device activations and lifetime updates.

Cons:

- Requires users to supply and manage their own API keys, which may be a technical hurdle for non-developers.

- Google Drive sync is upcoming but not yet available, limiting seamless multi-device data backup for now.

Website: https://multitaskai.com

Setting up MultitaskAI is relatively straightforward. After acquiring the license, you enter your API keys for the models you want to use. The platform guides you through the process, and detailed documentation is available. For self-hosting, you download the app and place it on your chosen static file server, a simple task even for those familiar only with basic FTP. MultitaskAI earns its place on this list by providing a potent combination of privacy, power, and affordability for anyone looking to harness the full potential of multiple LLMs.

Get started with your lifetime license

Enjoy unlimited conversations with MultitaskAI and unlock the full potential of cutting-edge language models—all with a one-time lifetime license.

Demo

Free

Try the full MultitaskAI experience with all features unlocked. Perfect for testing before you buy.

- Full feature access

- All AI model integrations

- Split-screen multitasking

- File uploads and parsing

- Custom agents and prompts

- Data is not saved between sessions

Lifetime License

Most Popular: €99 (regular price €149)

One-time purchase for unlimited access, lifetime updates, and complete data control.

- Everything in Free

- Data persistence across sessions

- MultitaskAI Cloud sync

- Cross-device synchronization

- 5 device activations

- Lifetime updates

- Self-hosting option

- Priority support

Loved by users worldwide

See what our community says about their MultitaskAI experience.

Finally found a ChatGPT alternative that actually respects my privacy. The split-screen feature is a game changer for comparing models.

Sarah

Been using this for months now. The fact that I only pay for what I use through my own API keys saves me so much money compared to subscriptions.

Marcus

The offline support is incredible. I can work on my AI projects even when my internet is spotty. Pure genius.

Elena

Love how I can upload files and create custom agents. Makes my workflow so much more efficient than basic chat interfaces.

David

Self-hosting this was easier than I expected. Now I have complete control over my data and conversations.

Rachel

The background processing feature lets me work on multiple conversations at once. No more waiting around for responses.

Alex

Switched from ChatGPT Plus and haven't looked back. This gives me access to all the same models with way more features.

Maya

The sync across devices works flawlessly. I can start a conversation on my laptop and continue on my phone seamlessly.

James

As a developer, having all my chats, files, and agents organized in one place has transformed how I work with AI.

Sofia

The lifetime license was such a smart purchase. No more monthly fees, just pure productivity.

Ryan

Queue requests feature is brilliant. I can line up my questions and let the AI work through them while I focus on other tasks.

Lisa

Having access to Claude, GPT-4, and Gemini all in one interface is exactly what I needed for my research.

Mohamed

The file parsing capabilities saved me hours of work. Just drag and drop documents and the AI understands everything.

Emma

Dark mode, keyboard shortcuts, and the clean interface make this a joy to use daily.

Carlos

Fork conversations feature is perfect for exploring different ideas without losing my original train of thought.

Aisha

The custom agents with specific instructions have made my content creation process so much more streamlined.

Thomas

Best investment I've made for my AI workflow. The features here put other chat interfaces to shame.

Zoe

Privacy-first approach was exactly what I was looking for. My data stays mine.

Igor

The PWA works perfectly on mobile. I can access all my conversations even when I'm offline.

Priya

Support team is amazing. Quick responses and they actually listen to user feedback for improvements.

Nathan

2. GPT-4 (OpenAI)

GPT-4 is a flagship large language model from OpenAI, offering significant improvements over its predecessors in reasoning, factuality, and adherence to user intent. It excels at understanding and generating natural language or code, demonstrating human-level performance on various professional and academic benchmarks. This makes it a powerful tool for a range of applications, from drafting emails and writing code to translating languages and answering complex questions. What sets GPT-4 apart is its multimodal capability: it accepts both text and image inputs, although it generates only text outputs. This allows for use cases like describing images, creating captions, and even generating recipes from photos of ingredients.

In an LLM comparison, GPT-4 stands out for its advanced reasoning and problem-solving capabilities. For example, developers can use it to debug code, generate creative text formats (like poems, code, scripts, musical pieces, email, etc.), and even build applications with its API. Its broader general knowledge and deeper domain expertise make it a valuable resource for researchers, writers, and anyone seeking information on a wide range of topics. Digital marketers can leverage GPT-4 for content creation, generating engaging social media posts, writing compelling ad copy, and performing SEO tasks. Learn more about GPT-4 (OpenAI) and other ChatGPT alternatives for a broader perspective.

One of the key advantages of GPT-4 is its enhanced instruction following and reduced hallucinations, meaning it’s more likely to produce accurate and relevant information while adhering to user prompts. Its expanded context window of up to 32k tokens (approximately 50+ pages of text) allows it to handle significantly longer pieces of text, enabling more complex and nuanced interactions.

Access to GPT-4 requires a ChatGPT Plus subscription or paid API access (the API was gated by a waitlist at launch). Pricing for API access varies based on usage, with different rates for input and output tokens. The higher computational costs and potentially slower response times compared to smaller models should be considered, especially for resource-intensive applications. While GPT-4 significantly improves upon previous models, it still exhibits some biases and can occasionally hallucinate, and its knowledge is limited by its training cutoff date.
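Because input and output tokens are billed at different rates, a small calculation helps when budgeting. The Python sketch below shows the arithmetic only; the `estimate_cost` helper is hypothetical and the rates in the example are placeholder numbers, not OpenAI's actual prices, so check the provider's pricing page for current figures.

```python
def estimate_cost(
    input_tokens: int,
    output_tokens: int,
    input_rate_per_million: float,
    output_rate_per_million: float,
) -> float:
    """Return the estimated cost of a workload, given per-million-token rates."""
    total = input_tokens * input_rate_per_million + output_tokens * output_rate_per_million
    return total / 1_000_000


if __name__ == "__main__":
    # Placeholder rates for illustration only (currency units per million tokens).
    cost = estimate_cost(
        input_tokens=2_000_000,
        output_tokens=500_000,
        input_rate_per_million=10.0,
        output_rate_per_million=30.0,
    )
    print(f"Estimated cost: {cost:.2f}")
```

Output tokens are typically the more expensive of the two, so prompts that ask for long responses can dominate the bill even when the input is short.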

Features:

- Multimodal capabilities (accepts text and image inputs)

- Improved reasoning and problem-solving abilities

- Broader general knowledge and deeper domain expertise

- Enhanced instruction following and reduced hallucinations

- Context window of up to 32k tokens

Pros:

- Superior performance on complex reasoning tasks

- Better at understanding nuance and context

- More reliable factual information with reduced hallucinations

- Handles ambiguous instructions effectively

Cons:

- Access requires a ChatGPT Plus subscription or paid API access

- Higher computational costs and slower response times than smaller models

- Still exhibits some biases and can occasionally hallucinate

- Limited real-time knowledge (training cutoff date)

Website: https://openai.com/gpt-4

3. Claude 3 Opus (Anthropic)

Claude 3 Opus is Anthropic's flagship AI assistant and the most powerful model in the Claude 3 family, sitting above its siblings, Claude 3 Sonnet and Claude 3 Haiku. It's designed for complex reasoning, nuanced instruction following, and creative tasks, which makes it particularly attractive for professionals seeking advanced capabilities. Whether you're tackling intricate coding challenges, working through complex mathematical problems, or demanding precise logical reasoning, Claude 3 Opus aims to deliver while maintaining a high level of factual accuracy.

One of Claude 3 Opus's key strengths is its multimodal capability, which lets it process both text and image inputs. This opens up applications like image analysis and document processing. Its strong performance on complex reasoning and analytical tasks makes it a powerful tool for AI professionals, software engineers, and data scientists working on demanding projects. For instance, developers can use its coding and mathematical reasoning for tasks ranging from generating code in various programming languages to solving complex equations. Its context window of 200K tokens (roughly 150,000 words) allows it to maintain context over long inputs, making it suitable for tasks like summarizing lengthy reports or analyzing extensive codebases. For tech-savvy entrepreneurs and digital marketers, Claude 3 Opus can help with creative content generation, in-depth market analysis, and strategic planning.

Claude 3 Opus costs more than smaller, less powerful models: at launch, Anthropic listed it at $15 per million input tokens and $75 per million output tokens. This is understandable given its enhanced capabilities, but it also means Opus can be more than simpler tasks require, so it is important to choose the right tool for the job. Another limitation is that it cannot browse the internet directly. It relies on the information it was trained on, up to its knowledge cutoff, and can't provide answers on very recent events.

However, Claude 3 Opus's benefits often outweigh these drawbacks. Its commitment to constitutional AI principles makes it safer and more reliable. Its superior ability to understand and follow detailed instructions simplifies complex tasks and improves efficiency. Learn more about Claude 3 Opus (Anthropic) to explore further comparisons and potential alternatives. In the arena of LLM comparison, Claude 3 Opus stands out for its focus on reasoning, accuracy, and safety, making it a valuable tool for a wide range of professional applications. You can explore its capabilities further on the Anthropic website: https://www.anthropic.com/claude.

4. Gemini Pro (Google)

Gemini Pro is Google's flagship Large Language Model (LLM) and a powerful contender in the rapidly evolving landscape of AI. Designed as a multimodal system from the ground up, Gemini Pro handles not just text but also images, audio, video, and code, making it a versatile tool for various applications. This comprehensive approach distinguishes Gemini Pro in the LLM comparison arena, as it pushes the boundaries of what's possible with a single, unified model. It powered Google's AI chatbot Bard (since renamed Gemini) and demonstrates significant strength in natural language understanding, complex reasoning tasks, and efficient code generation.

For developers and businesses, Gemini Pro offers access via Google AI Studio and Vertex AI platforms. Its integration with Google's vast ecosystem, including Search, Gmail, and Docs, provides a seamless workflow for incorporating AI capabilities into existing applications. For example, developers can leverage Gemini Pro’s natural language understanding to build smarter chatbots for customer service, or utilize its code generation capabilities to automate repetitive coding tasks. Its impressive context window of up to 1 million tokens in certain configurations allows for processing and understanding extremely long pieces of text, a crucial feature for tasks like document summarization and complex analysis.

Compared to other LLMs, Gemini Pro offers competitive performance on a variety of benchmarks and generally comes at a lower price point, making it a more accessible option for many. For instance, while models like GPT-4 might excel in specific areas like creative writing, Gemini Pro offers balanced performance across a broader spectrum of tasks, making it a solid all-around choice in any LLM comparison. Learn more about Gemini Pro (Google) to see how it stacks up against other alternatives.

However, Gemini Pro is not without its drawbacks. While it excels as a general-purpose model, its performance in certain specialized domains may lag behind more focused competitors. Some users have also noted inconsistencies in reasoning on particularly complex tasks. At launch, API availability was limited to specific regions, potentially hindering adoption for some developers. Finally, the third-party integration ecosystem surrounding Gemini Pro is less extensive than that of some of its competitors.

Key Features and Benefits:

- Multimodal Capabilities: Processes text, images, audio, video, and code.

- Strong Reasoning & Language Understanding: Excels in understanding and generating human-like text.

- Code Generation & Analysis: Supports multiple programming languages.

- Large Context Window: Up to 1 million tokens in some configurations.

- Google Ecosystem Integration: Seamlessly integrates with Google Search, Gmail, Docs, etc.

Pros:

- Competitive performance across benchmarks.

- Lower pricing compared to some competitors.

- Direct integration with the Google ecosystem.

- Availability via Google AI Studio and Vertex AI.

Cons:

- Performance can lag in specialized domains.

- Occasional inconsistencies in complex reasoning.

- Limited initial API availability.

- Smaller third-party integration ecosystem.

Website: https://deepmind.google/technologies/gemini/

Despite these limitations, Gemini Pro represents a significant step forward in the development of general-purpose LLMs. Its multimodal capabilities, strong performance, and tight integration with the Google ecosystem position it as a valuable tool for developers, businesses, and anyone seeking to harness the power of AI. Its continuous development and expanding features should keep it relevant in the evolving LLM comparison landscape.

5. Llama 3 (Meta)

Llama 3 is Meta's latest offering in the open-source large language model (LLM) landscape, and a strong contender in any LLM comparison. Available in various sizes, including 8B and 70B parameter versions, Llama 3 represents a significant leap forward from its predecessors. It offers enhanced capabilities in reasoning, coding, and multilingual tasks, making it a versatile tool for a wide range of applications. Its open-source nature allows for local deployment or deployment on private infrastructure, giving developers and businesses greater control over their data and the ability to tailor the model to specific needs. This makes it particularly appealing for those prioritizing data privacy or seeking to fine-tune models for niche applications.

This open-source approach distinguishes Llama 3 in the LLM comparison. Unlike some commercial LLMs, Llama 3 allows for both research and commercial use under the appropriate license. This flexibility lets developers experiment, build, and deploy applications without being constrained by restrictive licensing agreements. For example, a tech-savvy entrepreneur could fine-tune Llama 3 to create a specialized chatbot for their e-commerce platform, maintaining complete control over customer data. Similarly, a software engineer could use Llama 3's coding capabilities to build an internal code generation tool tailored to the company's specific coding practices.

Llama 3's competitive performance against similar-sized proprietary models further solidifies its place in this list. While it might not surpass the absolute performance of top-tier commercial giants, its free availability combined with the potential for customization makes it a powerful alternative. The multiple model sizes cater to different needs and resource constraints. If you need a smaller footprint for faster inference, the 8B parameter version might suffice. For more demanding tasks requiring greater accuracy and understanding, the 70B parameter version provides increased power.

Features:

- Open-source model available for research and commercial use

- Multiple model sizes (8B and 70B parameters)

- Strong performance on reasoning, coding, and language understanding tasks

- Multilingual support and improved instruction following

- Deployable locally or on private infrastructure

Pros:

- Free for research and commercial use (with appropriate license)

- Greater control over deployment and data privacy

- Fine-tuning capabilities for specific domains or applications

- Competitive performance against proprietary models of similar size

Cons:

- Requires significant computational resources for effective deployment

- Less optimized out-of-the-box experience compared to commercial services

- Limited official support compared to commercial alternatives

- Generally trails top commercial models in absolute performance

Website: https://ai.meta.com/llama/

Implementation/Setup Tips:

Although Llama 3 is open-source, deploying it effectively requires technical expertise and substantial computing power. Users will need to be comfortable setting up and managing the model on their chosen infrastructure. Pre-built Docker containers and community-developed tools can simplify this process, but a solid understanding of system administration and machine learning deployment practices is recommended.
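To get a feel for what "substantial computing power" means, a rough rule is that the memory needed just to hold a model's weights is the parameter count multiplied by the bytes used per parameter. The Python sketch below applies that rule to the 8B and 70B sizes. It is a lower bound under stated assumptions: `weight_memory_gb` is a hypothetical helper, GB here means 10^9 bytes, and the estimate ignores activations, the key-value cache, and framework overhead.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billions: float, precision: str = "fp16") -> float:
    """Estimate memory (in GB, 10^9 bytes) needed to hold the weights alone."""
    total_bytes = params_billions * 1e9 * BYTES_PER_PARAM[precision]
    return total_bytes / 1e9


if __name__ == "__main__":
    for size in (8, 70):
        for precision in ("fp16", "int4"):
            gb = weight_memory_gb(size, precision)
            print(f"Llama 3 {size}B at {precision}: about {gb:.0f} GB for weights")
```

At 16-bit precision this gives about 16 GB for the 8B model and about 140 GB for the 70B model, which is why the larger version usually calls for multiple GPUs or a quantized variant.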

In conclusion, Llama 3’s open-source nature, combined with its competitive performance and flexibility, makes it a valuable tool in the ever-evolving LLM landscape. It belongs in this LLM comparison for developers and businesses looking for powerful, customizable, and cost-effective alternatives to proprietary models.

6. Mistral Large (Mistral AI)

Mistral Large is the flagship model from Mistral AI, a European company founded by former DeepMind and Meta AI researchers. This powerful LLM excels in reasoning, coding, and language understanding tasks, all while maintaining impressive efficiency. Its design balances potent capabilities with practical deployment considerations, making it an enterprise-ready solution that rivals the performance of larger models at a fraction of the cost. In an LLM comparison, Mistral Large stands out for its European roots, commitment to responsible AI, and strong performance in key areas like reasoning and code generation.

For AI professionals and developers seeking a performant yet efficient LLM, Mistral Large offers a compelling alternative. Its strength in reasoning makes it ideal for complex problem-solving and logical deduction tasks. Software engineers and programmers will appreciate its proficiency in code generation, potentially streamlining development workflows. Furthermore, its efficient architecture translates to reduced computational requirements, a significant advantage for cost-conscious tech-savvy entrepreneurs and indie hackers.

Mistral Large's 32k token context window (expandable with techniques such as the Recurrent Memory Transformer, or RMT) is another key feature. This context length allows the model to process and understand larger chunks of text, benefiting applications like document summarization and long-form content generation. Multilingual support, particularly for major European languages, broadens its applicability for diverse user bases and international projects.
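Context-window sizes are easier to compare with a quick check of whether a given document would fit. The Python sketch below uses the window figures quoted in this comparison and a rough rule of thumb of about four characters per token for English text. That ratio is an assumption (real tokenizers vary by model and language), and `estimate_tokens` and `models_that_fit` are hypothetical helpers.

```python
import math

# Context-window sizes as quoted in this article (in tokens).
CONTEXT_WINDOWS = {
    "GPT-4": 32_000,
    "Claude 3 Opus": 200_000,
    "Gemini Pro": 1_000_000,
    "Mistral Large": 32_000,
}


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate; assumes about 4 characters per token."""
    return math.ceil(len(text) / chars_per_token)


def models_that_fit(text: str, reserve_for_output: int = 1_000) -> list:
    """List models whose window holds the text plus room for a response."""
    needed = estimate_tokens(text) + reserve_for_output
    return [name for name, window in CONTEXT_WINDOWS.items() if needed <= window]


if __name__ == "__main__":
    document = "word " * 40_000  # 200,000 characters, roughly 50,000 tokens
    print(models_that_fit(document))
```

For anything close to a limit, count tokens with the provider's own tokenizer instead of a character heuristic, since the estimate can be off by a wide margin for code or non-English text.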

Features and Benefits:

- Strong Performance: Competitive performance with larger models in reasoning, math, and coding, making it suitable for demanding applications.

- Efficiency: Reduced computational needs compared to larger models, contributing to cost savings.

- Large Context Window: 32k-token context window, enabling long documents to be processed in a single request.

- Multilingual Support: Supports major European languages, facilitating international projects.

- API Access and Partner Deployments: Flexible access options cater to diverse integration needs.

Pros:

- Cost-Effective Performance: Delivers comparable performance to larger, more expensive models.

- European Focus: Provides a data sovereignty-focused alternative to US-based LLMs.

- Transparency and Openness: Mistral AI's commitment to transparency is reflected in the availability of smaller open models.

- Responsible AI Focus: Emphasizes responsible AI development practices.

Cons:

- Limited Multimodality: Currently offers fewer multimodal capabilities compared to competitors like Gemini or GPT-4.

- Developing Ecosystem: The ecosystem of integrations and tools is still growing.

- Newer to Market: Has a shorter track record in production environments compared to established players.

- Limited Global Presence: Primarily focused on the European market.

Implementation and Setup:

Mistral Large is accessible via API, simplifying integration into existing applications and workflows. Pricing details are available on the Mistral AI website. Developers can use the provided documentation and examples to get started quickly.
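As a rough illustration of what API access looks like, the sketch below builds a chat request using only Python's standard library. The endpoint URL, the `mistral-large-latest` model name, and the response shape reflect our reading of Mistral AI's public documentation and should be verified there before use. The `MISTRAL_API_KEY` environment variable and the helper name are our own assumptions.

```python
import json
import os
import urllib.request

# Assumption: endpoint and model alias as described in Mistral AI's public docs.
MISTRAL_URL = "https://api.mistral.ai/v1/chat/completions"


def build_mistral_request(prompt: str, api_key: str,
                          model: str = "mistral-large-latest") -> urllib.request.Request:
    """Build (but do not send) a chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        MISTRAL_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical variable name; the request is only sent when a key is set.
    key = os.environ.get("MISTRAL_API_KEY")
    if key:
        request = build_mistral_request("Summarize the benefits of a 32k context.", key)
        with urllib.request.urlopen(request) as response:
            body = json.load(response)
        # Assumption: OpenAI-style response with a list of choices.
        print(body["choices"][0]["message"]["content"])
```

Separating request construction from sending keeps the example easy to inspect and test without spending API credits.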

Comparison:

Compared to models like GPT-4, Mistral Large prioritizes efficiency without significantly sacrificing performance in key areas like reasoning and code generation. While GPT-4 boasts more advanced multimodal capabilities and a larger ecosystem, Mistral Large presents a strong value proposition for users prioritizing cost-effectiveness and a European focus on data privacy. Similarly, compared to Anthropic's Claude, Mistral offers a competitive alternative, particularly for those working with European languages and seeking a more efficient solution.

Website: https://mistral.ai/

Mistral Large deserves a spot in any LLM comparison due to its compelling combination of performance, efficiency, and responsible AI focus. It offers a viable alternative to larger models, particularly for European users and those seeking a balance between capability and cost-effectiveness. As the model and its surrounding ecosystem continue to mature, it will likely play an increasingly significant role in the evolving LLM landscape.

7. Claude 3 Sonnet (Anthropic)

When conducting an LLM comparison, Claude 3 Sonnet by Anthropic emerges as a compelling option, especially for those seeking a balance between power and affordability. Positioned between the smaller Haiku and the larger Opus models within the Claude 3 family, Sonnet provides a robust solution for a diverse range of applications, from enterprise-grade tasks to consumer-facing tools. It excels in general-purpose use cases, offering a potent mix of intelligence, speed, and cost-effectiveness. This makes it a strong contender in any LLM comparison.

Sonnet's strength lies in its well-rounded capabilities. Its multimodal understanding allows it to process both text and images, opening doors for applications that require analysis across multiple media formats. The 200,000-token context window (roughly 150,000 words, or about 500 pages of text) allows Sonnet to maintain coherence and understanding across lengthy documents and conversations, a crucial factor for tasks like summarizing complex reports or sustaining long chatbot interactions. Moreover, Anthropic's focus on "Constitutional AI" aims for responses that are not only helpful but also harmless, aligning with ethical considerations often paramount in AI development.
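For readers who want to sanity-check what a 200,000-token window means in practice, here is a small back-of-the-envelope conversion. The ratios of 0.75 words per token and 300 words per page are common rules of thumb that we are assuming here; actual figures depend on the language, the tokenizer, and the page layout.

```python
WORDS_PER_TOKEN = 0.75  # assumption: typical for English prose
WORDS_PER_PAGE = 300    # assumption: a fairly dense printed page


def context_capacity(tokens: int) -> tuple[int, int]:
    """Convert a token budget into approximate words and pages."""
    words = round(tokens * WORDS_PER_TOKEN)
    pages = round(words / WORDS_PER_PAGE)
    return words, pages


words, pages = context_capacity(200_000)
print(f"{words:,} words, about {pages} pages")
# prints "150,000 words, about 500 pages"
```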

For developers and businesses, Sonnet offers several advantages. It provides faster inference than the larger Opus model, making it more suitable for production applications where responsiveness is key. Anthropic publishes per-token API pricing and positions Sonnet as a more cost-effective alternative to Opus, making it an attractive choice for budget-conscious projects. Accessibility is another strong point, with Sonnet available through both an API and the user-friendly Claude.ai interface. This dual access allows for integration into existing workflows or direct interaction for experimentation and prototyping.

While Sonnet excels in numerous areas, an honest LLM comparison requires acknowledging its limitations. For extremely complex tasks demanding the highest level of reasoning and nuance, Opus might still be the preferred choice. Furthermore, Sonnet has a fixed knowledge cutoff and, on its own, cannot access real-time information, browse the internet, or execute code. Finally, while more efficient than Opus, Sonnet still requires substantial computational resources compared to smaller, more specialized LLMs.

Comparison with similar tools: When compared to models like Google's Gemini, Sonnet stands out with its focus on safety and helpfulness thanks to its Constitutional AI training. While Gemini might offer broader capabilities in areas like code execution, Sonnet provides a more controlled and predictable output, which can be crucial for applications where accuracy and reliability are paramount.

Implementation tips: For developers looking to integrate Sonnet, the provided API documentation offers comprehensive guides and code examples. Experimenting with the Claude.ai interface can also provide valuable insights into Sonnet's capabilities before committing to API integration.
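As a starting point for API integration, the sketch below builds a request for Anthropic's Messages API with the standard library alone. The endpoint, header names, and version string follow our understanding of Anthropic's public documentation. The model identifier is the launch-era name for Claude 3 Sonnet and may since have been retired, so check the current model list. The `ANTHROPIC_API_KEY` variable and the helper name are our own assumptions.

```python
import json
import os
import urllib.request

ANTHROPIC_URL = "https://api.anthropic.com/v1/messages"
# Assumption: launch-era identifier; confirm against Anthropic's model list.
DEFAULT_MODEL = "claude-3-sonnet-20240229"


def build_claude_request(prompt: str, api_key: str,
                         model: str = DEFAULT_MODEL,
                         max_tokens: int = 512) -> urllib.request.Request:
    """Build (but do not send) a Messages API request."""
    payload = {
        "model": model,
        "max_tokens": max_tokens,  # upper bound on the length of the reply
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        ANTHROPIC_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "x-api-key": api_key,
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical variable name; the request is only sent when a key is set.
    key = os.environ.get("ANTHROPIC_API_KEY")
    if key:
        request = build_claude_request("Summarize this report in three bullets.", key)
        with urllib.request.urlopen(request) as response:
            reply = json.load(response)
        # Assumption: the reply text is in the first content block.
        print(reply["content"][0]["text"])
```

Trying the same prompt in the Claude.ai interface first is a cheap way to confirm the model suits the task before wiring up code like this.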

In summary: Claude 3 Sonnet offers a compelling blend of performance, affordability, and safety features. It’s an excellent choice for developers and businesses seeking a versatile LLM for various applications without requiring the top-tier power (and cost) of models like Opus. Its inclusion in any LLM comparison is well-deserved, making it a worthy consideration for those navigating the ever-evolving landscape of large language models. You can explore Claude 3 Sonnet further on Anthropic's website.

Top 7 LLMs Feature Comparison

| Product | Core Features/Capabilities | User Experience & Quality ★ | Value Proposition 💰 | Target Audience 👥 | Unique Selling Points ✨ |
|---|---|---|---|---|---|
| MultitaskAI 🏆 | Connects multiple AI models; split-screen multitasking; offline PWA | ★★★★☆ Fast, intuitive, multi-language, keyboard shortcuts | 💰 One-time lifetime license + pay API only | 👥 AI pros, devs, entrepreneurs | ✨ Full privacy via own API keys; flexible hosting; custom agents; offline use |
| GPT-4 (OpenAI) | Multimodal input; strong reasoning & context (32k tokens) | ★★★★★ Top-tier accuracy & nuance | 💰 Subscription/API pay | 👥 Professionals, researchers | ✨ Multimodal; advanced problem-solving |
| Claude 3 Opus (Anthropic) | Multimodal; 200K token context; strong coding & reasoning | ★★★★☆ Reliable & safe responses | 💰 Premium model cost | 👥 Enterprise, developers | ✨ Constitutional AI safety; extended context window |
| Gemini Pro (Google) | Multimodal (text, images, audio, code); 1M token context | ★★★★☆ Integrated with Google ecosystem | 💰 Competitive pricing | 👥 Enterprises, Google users | ✨ Seamless Google integration; broad multimodal |
| Llama 3 (Meta) | Open-source; multiple sizes; strong multilingual & coding | ★★★★☆ Flexible but less polished | 💰 Free (license); resource costs | 👥 Developers, researchers | ✨ Open-source, local deploy, fine-tuning enabled |
| Mistral Large (Mistral AI) | Efficient architecture; 32k tokens; multilingual support | ★★★★☆ Efficient, responsible AI | 💰 API-based; European data focus | 👥 Enterprises, European markets | ✨ Efficiency & sovereignty focus; transparent models |
| Claude 3 Sonnet (Anthropic) | Balanced mid-tier multimodal; 200K token context | ★★★★☆ Cost-effective, fast inference | 💰 More affordable Anthropic option | 👥 Enterprises, mid-size users | ✨ Good balance speed/capability; constitutional AI |

Choosing Your Ideal LLM: Key Takeaways

This LLM comparison has explored a range of powerful language models, from established players like GPT-4 and Claude 3 Opus to newer entrants like Mistral Large and Llama 3. Navigating the evolving world of LLMs can feel complex, but by focusing on your specific needs, you can pinpoint the ideal tool for your projects. Whether you prioritize cost-effectiveness, specific capabilities like multimodal processing (Gemini Pro), or the control offered by open-source models like Llama 3, there is likely a model that fits.

Key takeaways from our exploration include:

- Cost vs. Capability: While powerful models like GPT-4 offer cutting-edge performance, they often come with higher costs. Open-source alternatives like Llama 3 can provide significant value, especially for those comfortable with fine-tuning and customization.

- Specialized vs. General Purpose: Some LLMs lean toward particular strengths, such as Mistral Large with reasoning, code generation, and European languages, while others, like GPT-4 and Claude 3 Sonnet, aim to be general-purpose tools. Carefully consider your primary use cases.

- Ease of Integration: Implementing an LLM effectively requires considering API accessibility, documentation, and available support resources. Tools like MultitaskAI can help streamline workflow management and maximize the effectiveness of your chosen LLM(s).
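The cost-versus-capability trade-off in the takeaways above is easier to weigh with numbers. The following sketch estimates monthly API spend from request volume and per-token prices. The two price entries are illustrative placeholders for a premium and a mid-tier model, not quotes from any provider, so substitute the current published rates before relying on the result.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    input_per_million: float   # USD per 1M input tokens
    output_per_million: float  # USD per 1M output tokens


# Assumption: placeholder prices for illustration only.
PRICES = {
    "premium-model": Pricing(input_per_million=15.00, output_per_million=75.00),
    "mid-tier-model": Pricing(input_per_million=3.00, output_per_million=15.00),
}


def monthly_cost(pricing: Pricing, requests_per_day: int,
                 input_tokens: int, output_tokens: int, days: int = 30) -> float:
    """Estimate monthly spend in USD for a steady request volume."""
    total_in = requests_per_day * input_tokens * days
    total_out = requests_per_day * output_tokens * days
    return (total_in * pricing.input_per_million
            + total_out * pricing.output_per_million) / 1_000_000


for name, pricing in PRICES.items():
    cost = monthly_cost(pricing, requests_per_day=1_000,
                        input_tokens=1_500, output_tokens=500)
    print(f"{name}: ${cost:,.2f} per month")
```

With these placeholder figures, the same workload costs five times more on the premium tier, which is the kind of gap worth measuring against any quality difference you observe in testing.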

Next steps in your LLM journey:

- Define your needs: Clearly outline your project requirements and prioritize the essential features in an LLM.

- Explore and experiment: Leverage free trials or free tiers to test different LLMs and assess their performance firsthand.

- Consider your budget: Balance performance with cost-effectiveness and explore different pricing models offered by various providers.

- Optimize your workflow: Explore tools like MultitaskAI to efficiently manage and interact with multiple LLMs, potentially saving you significant time and resources.

The landscape of LLMs is constantly evolving. By staying informed about the latest advancements and focusing on your specific needs through careful LLM comparison, you can harness the power of these incredible tools to unlock new possibilities and achieve your goals. Don't be afraid to experiment and iterate – the future of AI is in your hands.