AI Privacy Concerns: Top Issues & Protection Tips

Unpack AI privacy concerns, from data leaks to algorithmic bias, and learn key strategies to protect your privacy.

Unveiling the Privacy Implications of AI

This listicle examines 10 critical AI privacy concerns you need to know. From data collection and algorithmic bias to facial recognition and data security vulnerabilities, we'll cover the key privacy risks associated with artificial intelligence. Understanding these concerns is essential for responsible AI development and usage, and for protecting individual privacy in an AI-driven world, whether you are an AI professional, a developer, or a user.

1. Data Collection and Surveillance

One of the most pressing AI privacy concerns revolves around data collection and surveillance. AI systems, particularly machine learning models, are data-hungry. They require massive datasets to train and function effectively. This inherent need for data creates a significant risk of excessive data collection and the potential for unchecked surveillance. Companies and governments can leverage AI's analytical power to identify behavior patterns, track individuals across different platforms, and build incredibly detailed profiles, often without explicit consent or even awareness. This can range from seemingly innocuous data points like online shopping preferences to highly sensitive information like location data and private communications.

This concern deserves a top spot on any list of AI privacy issues because it strikes at the heart of individual autonomy and freedom. Features like mass data collection, passive surveillance through always-on monitoring systems, and sophisticated behavioral tracking mechanisms create a digital surveillance infrastructure with the potential for misuse and abuse.

Examples of this in action:

Smart home devices: While convenient, these devices are often constantly listening for wake words, potentially capturing and storing private conversations.

Social media platforms: These platforms track user browsing behavior not just within their own apps, but across the internet, building detailed profiles for targeted advertising and other purposes.

Facial recognition technology: Companies like Clearview AI have scraped billions of images from the web to build massive facial recognition databases, raising serious concerns about privacy and potential for misuse by law enforcement and other entities.

Government surveillance: China's extensive use of AI-powered surveillance systems, including facial recognition and social credit scoring, provides a chilling example of how this technology can be used for social control.

Pros and Cons:

More data can make AI systems more effective, enable personalized services (like recommending products you might like), and strengthen security systems (like fraud detection). The cons, however, are substantial: a lack of transparent consent, a chilling effect on free expression, and the potential for "function creep," where data collected for one purpose is later used for another, unrelated purpose.

Actionable Tips for AI Professionals and Developers:

Data Minimization: Collect only the data absolutely necessary for the intended purpose. Avoid collecting data "just in case" it might be useful later.

Privacy by Design: Implement privacy considerations from the very beginning of the design process, not as an afterthought.

Data Sunset Policies: Establish clear policies for how long data will be stored and when it will be deleted.

Meaningful Opt-Out Mechanisms: Provide users with genuine control over their data and ensure that opt-out mechanisms are easy to find and actually work.
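
The first and third tips above can be sketched in a few lines. This is a minimal illustration, not a production pipeline: the field allowlist, the 90-day retention window, and the event shape are all hypothetical choices made for the example.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical allowlist: the only fields this feature is assumed to need.
ALLOWED_FIELDS = {"user_id", "item_id", "timestamp"}

# Hypothetical retention window for the data sunset policy.
RETENTION = timedelta(days=90)


def minimize(event: dict) -> dict:
    """Data minimization: keep only allowlisted fields at ingestion time."""
    return {k: v for k, v in event.items() if k in ALLOWED_FIELDS}


def purge_expired(events: list[dict], now: datetime) -> list[dict]:
    """Data sunset: return only events still inside the retention window."""
    cutoff = now - RETENTION
    return [e for e in events if e["timestamp"] >= cutoff]


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
raw = {
    "user_id": "u1",
    "item_id": "sku-42",
    "timestamp": now - timedelta(days=10),
    "gps": (52.52, 13.40),           # not needed for this feature, so dropped
    "contacts": ["a@example.com"],   # not needed either
}
old = {"user_id": "u2", "item_id": "sku-7", "timestamp": now - timedelta(days=120)}

stored = [minimize(raw), minimize(old)]
kept = purge_expired(stored, now)  # only the 10-day-old event survives
```

Dropping fields at ingestion, rather than filtering them at query time, means the extra data never reaches storage in the first place.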

When and Why to Address Data Collection:

Addressing data collection and surveillance concerns is not just ethically important; it's also crucial for building trust with users. For AI professionals, developers, and entrepreneurs, proactively addressing these concerns is essential for long-term success. By adopting privacy-enhancing technologies and practices, you can demonstrate a commitment to responsible AI development and mitigate the risks associated with excessive data collection. This is especially critical in the current climate of increasing public awareness and scrutiny of AI privacy practices, influenced by events like Edward Snowden's revelations, the Cambridge Analytica scandal, and Shoshana Zuboff's work on "Surveillance Capitalism." By prioritizing privacy, you can build more sustainable and trustworthy AI systems that benefit both individuals and society.

2. Algorithmic Bias and Discrimination

AI systems learn from the data they are trained on. If that data reflects existing societal biases, the AI will tend to learn and perpetuate those biases. This creates significant AI privacy concerns when these systems are used to make decisions about individuals, particularly in areas like loan applications, hiring processes, or even criminal justice. These biased algorithms can lead to discriminatory outcomes that violate the privacy rights of marginalized groups, denying them opportunities or unfairly categorizing them based on protected characteristics like race, gender, or religion.

Algorithmic bias manifests in several ways. It can involve the encoding of historical biases directly from the data. For example, if historical data shows that a certain demographic group has often been denied loans, the AI might learn to associate that group with higher risk and unfairly deny them loans in the future. Another form is proxy discrimination, where the AI uses seemingly neutral criteria that correlate with protected characteristics to discriminate. For instance, using zip codes as a factor in loan applications can indirectly discriminate against certain racial or ethnic groups concentrated in specific areas. Bias also shows up as uneven accuracy across demographic groups, where the system performs better for some groups than others. Finally, the often black-box nature of AI decision-making processes makes it difficult to understand how these biased outcomes are generated, adding another layer to the privacy concern.

This issue deserves a prominent place on any list of AI privacy concerns because it represents a fundamental challenge to fairness and equality in an increasingly AI-driven world. While AI offers tremendous potential, its ability to amplify existing societal biases poses a serious threat to individual privacy rights, especially for vulnerable populations.

Features of Algorithmic Bias and Discrimination:

Encoding of historical biases: AI models learn from historical data, which may contain existing societal biases.

Proxy discrimination: AI models may use seemingly neutral variables that correlate with protected characteristics, leading to indirect discrimination.

Uneven accuracy across demographic groups: AI models may perform better for some demographic groups than others.

Black-box decision processes: The lack of transparency in AI decision-making makes it difficult to identify and address bias.

Pros of Addressing Algorithmic Bias:

Increased awareness leads to improved auditing practices: Recognizing the issue encourages developers to scrutinize their data and models for bias.

Measurable and mitigatable: Bias can be quantified and reduced with appropriate techniques.

Drives the development of fairness criteria: The need to address bias is pushing the development of standards and metrics for fairness in AI.

Cons of Unchecked Algorithmic Bias:

Systematically disadvantages vulnerable groups: Bias can perpetuate and exacerbate inequalities.

Difficult to detect without specific testing: Bias is not always obvious and requires rigorous testing to uncover.

May violate anti-discrimination laws: Biased AI systems can lead to legal challenges.

Creates unequal privacy protections: Certain groups may have their privacy violated more frequently due to biased AI systems.

Examples of Algorithmic Bias:

The COMPAS recidivism algorithm demonstrating racial bias in predicting re-offending rates.

Amazon's abandoned recruiting tool that penalized resumes containing the word "women's."

Facial recognition systems exhibiting higher error rates for individuals with darker skin tones.

Credit scoring algorithms perpetuating historical lending disparities.

Tips for Mitigating Algorithmic Bias:

Conduct regular algorithmic impact assessments: Evaluate the potential impact of AI systems on different demographic groups.

Test systems across diverse populations: Ensure that AI models perform accurately and fairly for all groups.

Implement fairness constraints in model development: Incorporate fairness metrics and constraints during the model training process.

Create diverse data science teams: Diversity in teams can help identify and address potential biases.
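
Testing a system across groups, as the tips above suggest, can start with a very small audit script. The sketch below assumes a hypothetical record format of (group, predicted, actual) with 0/1 labels and uses made-up data. It reports per-group selection rate and accuracy, plus the ratio of the lowest to the highest selection rate. A common rule of thumb from US employment guidance, the "four-fifths rule," treats a ratio below 0.8 as a signal worth investigating, not as proof of discrimination.

```python
from collections import defaultdict


def group_rates(records):
    """Per-group selection rate and accuracy.

    Each record is (group, predicted, actual) with 0/1 labels.
    """
    stats = defaultdict(lambda: {"n": 0, "selected": 0, "correct": 0})
    for group, predicted, actual in records:
        s = stats[group]
        s["n"] += 1
        s["selected"] += predicted
        s["correct"] += int(predicted == actual)
    return {
        g: {
            "selection_rate": s["selected"] / s["n"],
            "accuracy": s["correct"] / s["n"],
        }
        for g, s in stats.items()
    }


def disparate_impact(rates):
    """Ratio of the lowest to the highest group selection rate."""
    values = [r["selection_rate"] for r in rates.values()]
    highest = max(values)
    return 1.0 if highest == 0 else min(values) / highest


# Made-up audit data: (group, predicted, actual)
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 0),
    ("B", 1, 1), ("B", 0, 1), ("B", 0, 0), ("B", 0, 0),
]

rates = group_rates(records)
ratio = disparate_impact(rates)  # group B is selected far less often than A
```

Note that both groups have the same accuracy in this toy data while their selection rates differ sharply, which is why a single aggregate metric is not enough for an audit.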

Joy Buolamwini and Timnit Gebru's Gender Shades project, Cathy O'Neil's book Weapons of Math Destruction, and ProPublica's analysis of the COMPAS algorithm have been instrumental in bringing algorithmic bias to the forefront of public discussion. By understanding and addressing these challenges, we can work towards building more ethical and equitable AI systems that respect individual privacy rights for all.

3. Lack of Transparency and Explainability

A closely related AI privacy concern is the lack of transparency and explainability, often referred to as the "black box" problem. This issue arises when even the creators of advanced AI systems cannot fully explain how their algorithms arrive at specific decisions. This opacity has significant implications for individual privacy, as users are left in the dark about how their data is being processed and used to make decisions that can impact their lives.

Many AI systems, particularly those based on complex neural networks, function by identifying patterns within massive datasets. While incredibly powerful, these patterns can be so intricate and multi-layered that they are effectively incomprehensible to humans. Furthermore, the proprietary nature of many AI systems, often protected as trade secrets, further exacerbates the transparency issue. This lack of interpretability creates a situation where individuals cannot understand how their data influences outcomes, potentially violating their rights to information and recourse.

Features contributing to the black box problem:

Black-box algorithms: Algorithms whose internal workings are hidden or difficult to understand.

Complex neural networks: Deep learning models with numerous layers and intricate connections that obscure decision-making processes.

Proprietary systems protected as trade secrets: AI models kept confidential for competitive advantage, hindering external scrutiny.

Limited interpretability: Even with access to the code, understanding the complex relationships learned by the AI can be challenging.

Pros of (limited) opacity:

Protects intellectual property of AI developers: Keeps valuable algorithms confidential.

Some complex patterns may require opaque systems: Certain intricate relationships may be difficult to model with fully transparent methods.

Can prevent gaming of transparent systems: Opacity can make it harder for individuals to manipulate inputs to achieve desired outcomes.

Cons of opacity:

Prevents meaningful consent: Users cannot truly consent to data usage if they don't understand how it will be used.

Makes auditing difficult or impossible: Assessing fairness and bias in opaque systems is a significant challenge.

Undermines due process and contestability: Individuals have limited ability to challenge decisions made by systems they don't understand.

Violates 'right to explanation' principles: Conflicts with legal frameworks such as the GDPR, which give individuals rights to meaningful information about automated decisions that affect them.

Examples of black box AI systems raising privacy concerns:

Deep learning credit approval systems: Determining creditworthiness based on opaque criteria.

Insurance pricing algorithms: Setting insurance premiums based on complex and potentially discriminatory factors.

Content recommendation engines: Personalizing content feeds without transparency into the underlying logic.

Healthcare diagnosis systems with unexplainable outputs: Providing medical diagnoses without clear justifications.

Tips for mitigating transparency and explainability issues:

Develop explainable AI (XAI) when used for consequential decisions: Prioritize transparency for systems with significant impacts on individuals.

Create model cards documenting system limitations: Provide documentation outlining known biases, limitations, and intended use cases.

Implement layered explanation interfaces: Offer different levels of explanation detail based on user needs and technical expertise.

Provide counterfactual explanations: Explain how inputs would need to change to achieve a different outcome, empowering user understanding and control.
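
To make the last tip concrete, here is a minimal sketch of a counterfactual explanation for a deliberately simple linear scoring model. The weights, threshold, and feature names are invented for illustration and do not describe any real credit system. For each feature, the code reports the change to that feature alone that would bring a rejected applicant up to the approval threshold.

```python
# Hypothetical linear credit score: weights and threshold are made up.
WEIGHTS = {"income_k": 0.5, "debt_k": -1.0, "years_employed": 2.0}
THRESHOLD = 26.0


def score(applicant: dict) -> float:
    """Weighted sum of the applicant's features."""
    return sum(WEIGHTS[f] * applicant[f] for f in WEIGHTS)


def counterfactuals(applicant: dict) -> dict:
    """For each feature, the single-feature change that reaches the threshold."""
    gap = THRESHOLD - score(applicant)
    if gap <= 0:
        return {}  # already approved, nothing to explain
    return {f: gap / w for f, w in WEIGHTS.items() if w != 0}


applicant = {"income_k": 60, "debt_k": 20, "years_employed": 3}
changes = counterfactuals(applicant)

# Turn the numbers into user-facing sentences.
messages = [
    f"Approved if {feature} changed by {delta:+.1f}"
    for feature, delta in changes.items()
]
```

Real models are rarely linear, and practical counterfactual methods also have to check that a suggested change is feasible (debt cannot go below zero, for example). The sketch only shows the shape of the explanation a user would receive.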

Influence and Popularization:

The importance of transparency and explainability in AI has been highlighted by several influential sources, including:

EU GDPR's right to explanation: Grants individuals the right to receive meaningful information about automated decision-making.

DARPA's Explainable AI (XAI) program: Funds research and development of more transparent and interpretable AI systems.

Frank Pasquale's 'Black Box Society': Examines the societal implications of opaque algorithmic decision-making.

Addressing the black box problem is critical for building trust and ensuring responsible AI development. By prioritizing transparency and explainability, we can mitigate AI privacy concerns and empower individuals to understand and control how their data is used.

4. Data Security and Breach Vulnerabilities

Data security and the potential for breaches form another major AI privacy concern. Machine learning models need massive datasets to train effectively, and these datasets often contain highly sensitive personal information. That makes these large data repositories attractive targets for attackers. Furthermore, the complexity of AI systems themselves introduces new and evolving attack vectors, exacerbating existing vulnerabilities. A breach in an AI system isn't just about losing data; it's about exposing sensitive personal information, potentially leading to identity theft, financial loss, reputational damage, and even physical harm. This is why data security deserves a prominent place on any list of AI privacy concerns.

The very nature of AI development contributes to this vulnerability. AI systems concentrate high-value data (e.g., medical records, financial transactions, biometric data), and the technical safeguards needed to protect it are themselves complex. Multiple vulnerability points exist throughout the AI lifecycle, from data collection and storage to model training and deployment. Even attempts to anonymize data can be circumvented through techniques like re-identification, where attackers exploit seemingly innocuous data points to link back to individuals.
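
One simple way to gauge re-identification risk is a k-anonymity check: group the records by their quasi-identifiers (fields that are harmless alone but identifying in combination) and look at the smallest group. The sketch below uses made-up records and hypothetical column names. A result of k = 1 means at least one person is unique in the dataset even though names were removed.

```python
from collections import Counter

# Hypothetical quasi-identifier columns for this example.
QUASI_IDENTIFIERS = ("zip", "birth_year", "sex")


def k_anonymity(rows, quasi_identifiers=QUASI_IDENTIFIERS) -> int:
    """Size of the smallest group sharing the same quasi-identifier values."""
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    return min(groups.values(), default=0)


def generalize(row: dict) -> dict:
    """Coarsen quasi-identifiers: 3-digit zip prefix and decade of birth."""
    return {
        **row,
        "zip": row["zip"][:3],
        "birth_year": row["birth_year"] // 10 * 10,
    }


# Made-up "anonymized" records: names removed, quasi-identifiers kept.
rows = [
    {"zip": "10115", "birth_year": 1980, "sex": "F", "diagnosis": "asthma"},
    {"zip": "10115", "birth_year": 1980, "sex": "F", "diagnosis": "flu"},
    {"zip": "10117", "birth_year": 1975, "sex": "M", "diagnosis": "diabetes"},
    {"zip": "10119", "birth_year": 1978, "sex": "M", "diagnosis": "flu"},
]

k_raw = k_anonymity(rows)                                  # unique rows exist
k_generalized = k_anonymity([generalize(r) for r in rows])  # groups of two
```

k-anonymity is only a first check. It does not protect against every linkage attack, which is one reason techniques such as differential privacy exist.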

Several high-profile breaches highlight these risks. The 2019 Biostar 2 biometric data breach exposed about 28 million records, including fingerprints and facial recognition data. The 2020 Clearview AI breach exposed the company's client list, raising serious concerns about how securely a firm holding billions of scraped face images protects its systems. Healthcare AI systems, holding vast amounts of patient data, are increasingly targeted, with breaches exposing sensitive medical information. Even more concerning are sophisticated attacks like model inversion, where malicious actors can reconstruct training data from the model itself, potentially revealing private information used to train the AI.

While the challenges are significant, AI also offers tools to enhance security. It can power advanced intrusion detection systems and drive innovation in privacy-enhancing technologies. The growing awareness of these risks is creating a market demand for secure AI solutions, pushing developers to prioritize security and privacy.

Pros:

AI can enhance security detection systems.

Drives innovation in privacy-enhancing technologies.

Creates market demand for secure AI solutions.

Cons:

Scale of potential breaches keeps increasing.

Technical complexity makes complete security difficult.

Cascading effects when systems are interconnected.

Advanced persistent threats specifically targeting AI systems.

Actionable Tips for mitigating data security risks in AI:

Implement zero-trust architecture for AI systems: Assume no user or device is inherently trustworthy, requiring verification at every access point.

Use differential privacy techniques: Add carefully calibrated noise to datasets, allowing useful analysis while protecting individual privacy.

Conduct regular penetration testing: Simulate real-world attacks to identify vulnerabilities and strengthen defenses.

Employ federated learning where possible: Train models on decentralized datasets without directly sharing sensitive data.
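
As an illustration of the differential privacy tip above, the sketch below applies the Laplace mechanism to a counting query. It is a toy example under stated assumptions: the dataset, the epsilon value, and the function names are invented, and a real deployment would use a vetted library and track the privacy budget across queries. A count changes by at most 1 when one person is added or removed, so the noise scale is 1 / epsilon.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Laplace(0, scale) sample, drawn as the difference of two exponentials."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Noisy count of matching values.

    A counting query has sensitivity 1, so the Laplace scale is 1 / epsilon.
    """
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1 / epsilon, rng)


rng = random.Random(0)  # seeded only to make the example repeatable
ages = [23, 35, 41, 52, 67, 29, 38, 71]

# How many people are 40 or older? The true answer is 4; the released
# answer is perturbed so that no single person's presence is revealed.
noisy = private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng)
```

A smaller epsilon means more noise and stronger privacy; a larger epsilon means more accurate answers and weaker privacy.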

When dealing with sensitive data in AI, understanding and addressing these security vulnerabilities is crucial. This proactive approach is essential for building trust, protecting user privacy, and ensuring the responsible development and deployment of AI technologies. For AI professionals, software engineers, and anyone else working with sensitive data, these security considerations should be paramount, whether you are an LLM developer, a digital marketer leveraging AI, or a user of tools like ChatGPT, Anthropic's models, or Google Gemini. The increasing prevalence of data breach notification laws, along with regulations like the HIPAA Security Rule and the EU NIS2 Directive, underscores the legal and ethical imperative to prioritize data security in AI.

5. Consent and Control Issues

Consent and control form another major AI privacy concern. AI systems often collect and process vast amounts of personal data, which makes genuinely informed consent difficult, if not impossible, to obtain. That undermines individual autonomy and control over personal information, which is why this issue belongs high on the list of AI privacy concerns.

The complexity of AI, coupled with its evolving data usage patterns, strains traditional notice-and-consent models. Imagine a simple voice assistant: it might collect snippets of conversation, ostensibly to improve its functionality. But this data could also be used for targeted advertising or even shared with third parties, often without explicit user knowledge or agreement. This lack of transparency and control is a core component of the consent and control issue.

Several factors contribute to this problem:

Incomprehensible privacy policies: Dense legalese and technical jargon make it nearly impossible for the average user to understand what data is being collected and how it's used.

Forced consent mechanisms: "Agree to all or nothing" buttons are commonplace, effectively coercing users into giving up their data if they want to use a service.

Bundled permissions: Requesting access to multiple data points (e.g., location, contacts, camera) under a single umbrella permission obscures the specific data needs of the application.

Dynamic data uses beyond original consent: The purpose for which data is collected can evolve over time, potentially exceeding the scope of the initial consent given by the user. This is especially true with machine learning models that adapt and learn from new data inputs.

This dynamic data use is a key differentiator from traditional software, where the functionality and data requirements are relatively static. AI’s capacity to repurpose data for unforeseen applications makes ongoing, meaningful consent even more crucial.
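
One way to keep data use tied to the consent that was actually given is to record consent per purpose and check it before every use. The sketch below is a hypothetical in-memory registry; the class names, purpose strings, and error type are assumptions made for the example, not part of any particular framework.

```python
from dataclasses import dataclass, field


class ConsentError(Exception):
    """Raised when data is about to be used for a purpose without consent."""


@dataclass
class ConsentRecord:
    user_id: str
    purposes: set[str] = field(default_factory=set)


class ConsentRegistry:
    """Tracks which purposes each user has agreed to."""

    def __init__(self) -> None:
        self._records: dict[str, ConsentRecord] = {}

    def grant(self, user_id: str, purpose: str) -> None:
        record = self._records.setdefault(user_id, ConsentRecord(user_id))
        record.purposes.add(purpose)

    def revoke(self, user_id: str, purpose: str) -> None:
        if user_id in self._records:
            self._records[user_id].purposes.discard(purpose)

    def allowed(self, user_id: str, purpose: str) -> bool:
        record = self._records.get(user_id)
        return record is not None and purpose in record.purposes

    def require(self, user_id: str, purpose: str) -> None:
        """Call before any processing step; fails closed if consent is missing."""
        if not self.allowed(user_id, purpose):
            raise ConsentError(f"{user_id} has not consented to {purpose!r}")


registry = ConsentRegistry()
registry.grant("u1", "voice_recognition")

registry.require("u1", "voice_recognition")            # passes silently
can_target_ads = registry.allowed("u1", "ad_targeting")  # never granted
```

Because the check is per purpose, a new use such as ad targeting fails until the user is asked again, instead of silently inheriting the original consent.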

Examples of these issues in action:

Voice assistants recording conversations without clear user indication.

Dark patterns in consent interfaces that nudge users towards accepting broad data collection practices.

Complex AI recommendation systems operating invisibly in the background, collecting data on browsing habits and preferences.

Third-party data sharing without transparent disclosure.

While the challenges are significant, efforts to improve consent models are gaining ground. The progress and the remaining obstacles break down as follows:

Pros:

Growing attention leading to improved consent models: Increased awareness of these issues is driving the development of more user-centric approaches.

New regulatory focus on meaningful consent: Legislation like the California Privacy Rights Act (CPRA) and the GDPR's consent requirements are pushing for more stringent and transparent data practices.

Development of progressive disclosure approaches: This involves providing users with information in a layered format, starting with the most essential details and allowing them to delve deeper if they choose.

Cons:

Cognitive limitations make informed consent difficult: Even with simplified explanations, understanding the intricacies of AI data processing can be challenging for many users.

Power imbalances limit meaningful choice: Users often feel compelled to accept unfavorable data practices due to the dominance of certain tech platforms.

Technological complexity exceeds user understanding: The “black box” nature of some AI systems makes it difficult for users to grasp how their data is being used.

Data uses evolve beyond original consent parameters: The adaptive nature of AI means that data collected for one purpose might later be used for another, potentially violating the spirit of the original consent.

Actionable Tips for AI Professionals and Developers:

Implement contextual just-in-time consent: Request access to data only when it's needed, and clearly explain the specific purpose for the request.

Create layered privacy notices: Provide concise summaries of data practices upfront, with the option to access more detailed information.

Offer granular permission controls: Allow users to choose which data points they are comfortable sharing, rather than forcing bundled permissions.

Provide easy mechanisms to revoke consent: Users should be able to easily withdraw their consent at any time.
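The granular-permission and revocation tips above can be sketched as a small per-purpose consent ledger. This is a minimal illustration, not a reference implementation: the `ConsentLedger` class, its method names, and the purpose strings are all hypothetical, and a real system would also need persistence, audit logging, and propagation of revocations to downstream processors.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ConsentLedger:
    """Tracks consent per user and per purpose (hypothetical design)."""

    # Maps (user_id, purpose) -> timestamp of the most recent grant.
    _grants: dict = field(default_factory=dict)

    def grant(self, user_id: str, purpose: str) -> None:
        # Just-in-time: call this only when a feature first needs the data.
        self._grants[(user_id, purpose)] = datetime.now(timezone.utc)

    def revoke(self, user_id: str, purpose: str) -> None:
        # Revocation is a single call and is safe even if nothing was granted.
        self._grants.pop((user_id, purpose), None)

    def allows(self, user_id: str, purpose: str) -> bool:
        # Default is deny: a purpose that was never granted is never allowed.
        return (user_id, purpose) in self._grants


ledger = ConsentLedger()
ledger.grant("user-1", "voice_improvement")
print(ledger.allows("user-1", "voice_improvement"))  # True
print(ledger.allows("user-1", "ad_targeting"))       # False
ledger.revoke("user-1", "voice_improvement")
print(ledger.allows("user-1", "voice_improvement"))  # False
```

Keying consent by purpose rather than by user alone is what prevents a grant for one use (improving a voice assistant) from silently covering another (ad targeting).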

By adopting these best practices, developers can build more trustworthy and respectful AI systems. Helen Nissenbaum’s contextual integrity framework offers further guidance on navigating the complex landscape of privacy in the digital age. These considerations are critical for all stakeholders in the AI ecosystem, from developers and entrepreneurs to everyday users of AI-powered products and services. Addressing these concerns head-on will be essential for building a future where AI benefits society while respecting individual privacy.

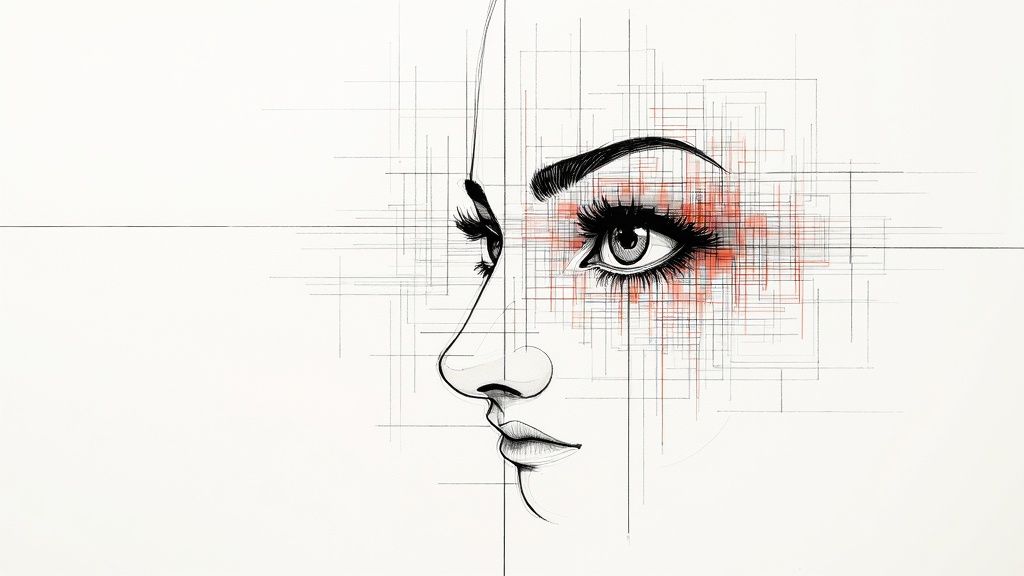

6. Facial Recognition and Biometric Privacy

Facial recognition raises some of the most serious biometric privacy concerns in AI because of its potential for misuse and its far-reaching consequences. This technology uses AI-powered algorithms to analyze unique biological traits, primarily facial features, to identify individuals. It works by mapping facial landmarks, creating a digital representation (a "faceprint"), and comparing it to existing databases to find a match. The increasing accuracy of these algorithms, combined with the proliferation of cameras and data collection points, creates a powerful and potentially intrusive surveillance tool.

Features and Implications:

Remote Identification Capabilities: Facial recognition can identify individuals from a distance, often without their knowledge, which enables covert surveillance.

Persistent Tracking Potential: Coupled with widely deployed camera networks, this technology enables the tracking of individuals across physical spaces, creating a detailed record of their movements and activities.

Immutable Personal Characteristics: Unlike passwords or access cards, facial features are immutable. Once compromised, this biometric data cannot be easily changed, increasing the risks associated with data breaches.

Increasingly Accurate Matching Algorithms: Advancements in AI are constantly improving the accuracy of facial recognition, which further amplifies the potential for misuse.

Pros and Cons:

While facial recognition offers some benefits, its potential for abuse raises significant AI privacy concerns.

Pros:

Enhanced Security: In specific controlled contexts, like building access control, facial recognition can offer convenient and robust security measures.

Convenient Authentication: It can simplify authentication processes, replacing passwords and other less convenient methods.

Locating Missing Persons/Identifying Criminals: Facial recognition has assisted in locating missing persons and identifying criminals, demonstrating potential benefits for law enforcement.

Cons:

Chilling Effect on Public Participation: The fear of being tracked and identified can discourage individuals from exercising their rights to free speech and assembly.

Disproportionate Impact on Marginalized Communities: Studies have shown that facial recognition systems exhibit higher error rates for people with darker skin tones and for members of other marginalized groups, leading to discriminatory outcomes.

Enables Mass Surveillance: The potential for widespread, indiscriminate surveillance poses a grave threat to privacy and civil liberties.

Often Deployed Without Consent or Knowledge: Many instances of facial recognition deployment occur without the knowledge or consent of the individuals being surveilled, raising serious ethical and legal questions.

Examples:

Clearview AI's controversial database, built by scraping billions of images from social media for facial recognition purposes, highlights the potential for abuse.

Aspects of the Chinese social credit system utilize facial recognition for surveillance and social control.

Police use of facial recognition at protests has raised concerns about the chilling effect on free speech.

Retail stores tracking shoppers' movements and demographics without notice demonstrate the erosion of privacy in commercial settings.

Actionable Tips for AI Professionals and Developers:

Implement Strong Biometric Data Protection Policies: Prioritize robust data security measures to protect collected biometric data from unauthorized access and breaches.

Require Opt-In Consent for Facial Recognition: Implement clear opt-in mechanisms, ensuring individuals are fully informed and provide explicit consent before their biometric data is collected or used.

Prohibit Use in Public Spaces Without Safeguards: Advocate for strict regulations and policies that prohibit or severely restrict the use of facial recognition in public spaces without robust safeguards and oversight.

Create Time Limitations on Data Retention: Implement policies that limit the duration for which biometric data is retained, minimizing the potential for misuse and reducing long-term exposure if a breach occurs.
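As a rough illustration of the retention tip above, the sketch below drops biometric records once they pass a retention window. The 30-day window, the `purge_expired` helper, and the record layout are assumptions made for the example; an actual policy would be set by the applicable law and the purpose of collection, and deletion would also have to reach backups and derived data.

```python
from datetime import datetime, timedelta, timezone

# Assumed policy window for illustration; the right value depends on the
# applicable law and on the purpose the data was collected for.
RETENTION = timedelta(days=30)


def purge_expired(templates, now, retention=RETENTION):
    """Return a copy of `templates` without records older than `retention`."""
    return {
        subject_id: record
        for subject_id, record in templates.items()
        if now - record["collected_at"] <= retention
    }


now = datetime(2024, 3, 1, tzinfo=timezone.utc)
store = {
    "visitor-17": {"collected_at": now - timedelta(days=5)},
    "visitor-42": {"collected_at": now - timedelta(days=45)},
}
store = purge_expired(store, now)
print(sorted(store))  # ['visitor-17']
```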

Popularized By:

The Illinois Biometric Information Privacy Act (BIPA), the "Ban Facial Recognition" movement, Fight for the Future's advocacy campaigns, and San Francisco's ban on government use of facial recognition all highlight the growing awareness and concern surrounding this technology and its impact on privacy. These efforts underscore the need for continued discussion, regulation, and responsible development within the AI community.

7. Automated Decision-Making Risks

Automated decision-making (ADM) refers to AI systems making significant decisions about individuals, often with limited or no human oversight. These decisions can affect fundamental rights in areas like employment, loan applications, housing access, and even healthcare, which raises serious questions about fairness, transparency, and accountability. ADM is a privacy issue as well as a fairness issue because these automated processes typically rely on personal data, creating a direct link between data privacy and the potential for biased or unfair outcomes.

Here's how it works: ADM systems use algorithms to analyze vast amounts of data, often including personal information, to identify patterns and make predictions. For example, an automated hiring system might analyze resumes and social media profiles to predict a candidate's suitability for a role. Similarly, a loan application system might use credit scores and spending patterns to assess an applicant's creditworthiness.

While some argue that ADM can reduce human bias, the reality is more nuanced. These systems can perpetuate and even amplify existing societal biases present in the data they are trained on. This can lead to discriminatory outcomes, such as qualified candidates being rejected for jobs or individuals being denied loans based on unfair criteria.

Features of ADM Systems Raising Privacy Concerns:

Fully automated decision processes: Decisions are made entirely by algorithms with little to no human intervention.

Limited human oversight: Even when humans are involved, their role might be limited to reviewing the AI's output rather than actively participating in the decision-making process.

Decisions affecting fundamental rights: ADM is increasingly used in areas that have significant consequences for individuals' lives and opportunities.

Use of proxy variables and correlations: AI systems may rely on indirect measures (proxies) that correlate with, but don't directly represent, the qualities they are trying to assess. These proxies can perpetuate discrimination.

Pros:

Can reduce human bias in some contexts: If designed and trained carefully, AI can reduce human biases related to explicit prejudice. However, this requires meticulous data curation and algorithm design.

Potentially increases efficiency and consistency: ADM can process large volumes of applications quickly and apply criteria consistently.

May identify patterns humans would miss: AI can uncover complex relationships in data that humans might overlook.

Cons:

May violate due process rights: Individuals may not have the opportunity to understand why a decision was made or to challenge it effectively.

Often lacks contestability mechanisms: There are often no clear channels for appealing or contesting automated decisions.

Can entrench systematic disadvantages: Biases in data can lead to discriminatory outcomes, further disadvantaging already marginalized groups.

Difficult to identify causality vs. correlation: AI systems often identify correlations, but these don't necessarily indicate causal relationships, leading to inaccurate or unfair decisions.

Examples of ADM Risks in Action:

Automated hiring systems rejecting qualified candidates based on biased data, such as gaps in employment history that disproportionately affect women.

Insurance premium algorithms charging higher rates to individuals living in certain zip codes, where location can act as a proxy for characteristics such as race or income.

Benefits determination systems denying essential services due to errors or biased criteria.

Automated tenant screening tools using opaque criteria that perpetuate housing discrimination.

Tips for Mitigating ADM Risks:

Maintain meaningful human oversight for consequential decisions: Humans should play an active role in the decision-making process, particularly when fundamental rights are at stake.

Create accessible appeals processes: Individuals should have a clear and accessible mechanism for challenging automated decisions.

Document decision criteria clearly: Transparency is crucial. The factors used in automated decision-making should be clearly documented and explained.

Test systems for disparate impacts before deployment: Rigorous testing is essential to identify and mitigate potential biases before they harm individuals.
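One common way to test for disparate impact is to compare selection rates across groups. The sketch below computes the ratio of the lowest group approval rate to the highest; a ratio below 0.8 is often treated as a warning sign under the "four-fifths" rule of thumb used in US employment contexts. The function names and the sample data are made up for illustration, and a single ratio is only a first screen, not a full fairness audit.

```python
from collections import Counter


def selection_rates(decisions):
    """decisions: iterable of (group, approved) pairs -> {group: approval rate}."""
    totals, approved = Counter(), Counter()
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Made-up outcomes: group A approved 60 of 100, group B approved 30 of 100.
sample = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 30 + [("B", False)] * 70
)
ratio = disparate_impact_ratio(sample)
print(f"{ratio:.2f}")  # 0.50
print("needs review" if ratio < 0.8 else "within threshold")  # needs review
```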

Why this Deserves its Place on the List: Automated decision-making has the potential to significantly impact individuals' lives and fundamental rights. The lack of transparency, potential for bias, and difficulty in contesting outcomes make it a critical AI privacy concern that requires careful consideration and proactive mitigation strategies. Legislation like the EU AI Act's high-risk AI categories, Article 22 of the GDPR, and the Virginia Consumer Data Protection Act's automated decision protections all highlight the growing recognition of the risks posed by ADM and the need for robust safeguards.

8. Re-identification and Anonymization Failures

Traditional data anonymization techniques increasingly fail against AI-driven analysis. While datasets are often stripped of obvious identifiers like names and Social Security numbers, sophisticated algorithms can exploit subtle patterns and combine information from multiple sources to re-identify individuals. This defeats the purpose of anonymization and exposes sensitive data to unintended disclosure, making it a critical issue for anyone working with personal information. Re-identification attacks are growing more sophisticated, and each failure erodes trust in data privacy promises.

How it Works:

Re-identification leverages the power of AI, particularly in areas like machine learning and deep learning, to piece together seemingly unrelated bits of information. Think of it like a digital jigsaw puzzle. Features that enable this include:

Mosaic Effect Across Multiple Datasets: Combining publicly available datasets (like voter registration records) with supposedly anonymized data can reveal individual identities.

Advanced Pattern Recognition Capabilities: AI excels at identifying complex patterns in data, even those invisible to the human eye. These patterns can be unique to an individual and used for re-identification.

Probabilistic Matching Techniques: Even without a perfect match, AI can estimate the probability that a given record belongs to a particular individual, increasing the risk of accurate identification.

Synthetic Data Generation Vulnerabilities: While synthetic data aims to protect privacy by generating artificial datasets that mimic real data, vulnerabilities in the generation process can inadvertently leak information about individuals from the original dataset.
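The mosaic effect described above can be shown with a toy linkage attack: records with names removed are joined to a public list on shared attributes (quasi-identifiers). Everything in this sketch is invented for illustration, including the records, the names, and the `link` helper. Real attacks typically use fuzzier, probabilistic matching across far larger datasets.

```python
# Toy records invented for illustration only.
anonymized_records = [
    {"zip": "02138", "birth_year": 1945, "sex": "F", "diagnosis": "hypertension"},
    {"zip": "02139", "birth_year": 1980, "sex": "M", "diagnosis": "asthma"},
]
public_roll = [
    {"name": "Alice Example", "zip": "02138", "birth_year": 1945, "sex": "F"},
    {"name": "Bob Example", "zip": "02139", "birth_year": 1980, "sex": "M"},
    {"name": "Carol Example", "zip": "02139", "birth_year": 1980, "sex": "M"},
]
QUASI_IDENTIFIERS = ("zip", "birth_year", "sex")


def link(records, roll, keys=QUASI_IDENTIFIERS):
    """Attach a name to each record whose quasi-identifiers match exactly one person."""
    index = {}
    for person in roll:
        index.setdefault(tuple(person[k] for k in keys), []).append(person["name"])
    linked = []
    for rec in records:
        candidates = index.get(tuple(rec[k] for k in keys), [])
        if len(candidates) == 1:  # a unique match means re-identification
            linked.append((candidates[0], rec["diagnosis"]))
    return linked


print(link(anonymized_records, public_roll))
# [('Alice Example', 'hypertension')]
```

The second record escapes linkage only because two people on the public list share its attributes, which is the intuition behind k-anonymity.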

Examples of Re-identification:

Harvard Researchers Re-identifying Individuals from 'Anonymous' DNA Samples: Researchers demonstrated that genetic information, even when anonymized, could be linked back to individuals using publicly available genealogical databases.

Netflix Prize Dataset Re-identification: Researchers successfully re-identified individuals in the anonymized Netflix Prize dataset by correlating it with publicly available movie ratings on IMDb.

New York City Taxi Data De-anonymization: Researchers de-anonymized taxi trip data by linking seemingly anonymous pickup and drop-off locations with external data sources.

AOL Search Data Re-identification Scandal: AOL released a supposedly anonymized search dataset, but individuals were quickly re-identified based on their unique search queries.

Pros and Cons:

Pros:

Growing awareness of re-identification risks is leading to the development of improved anonymization techniques.

Differential privacy, a technique that adds carefully calibrated noise to data, offers mathematical guarantees of privacy protection.

New synthetic data approaches with built-in privacy safeguards show promise for future applications.

Cons:

Traditional anonymization methods (like simply removing identifiers) are increasingly ineffective against sophisticated AI-driven attacks.

Few legal penalties exist for re-identification, creating a lack of deterrence.

Growing computational power makes these attacks easier and more accessible.

Reliance on claimed anonymization often provides a false sense of security.

Tips for Mitigation:

Implement Differential Privacy Techniques: Differential privacy adds calibrated noise to data or query results so that aggregate statistics remain useful while any single person's contribution is masked, making re-identification significantly more difficult.

Use Formal Privacy Risk Assessments: Conduct thorough assessments to identify potential vulnerabilities and guide anonymization strategies.

Apply k-anonymity and l-diversity Principles: k-anonymity ensures that each record is indistinguishable from at least k-1 others on its identifying attributes, and l-diversity ensures that each such group contains at least l different values of the sensitive attribute.

Consider Synthetic Data with Privacy Guarantees: Explore the use of synthetic datasets generated with rigorous privacy-preserving methods.
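To make the differential privacy tip concrete, here is a minimal sketch of the Laplace mechanism applied to a counting query. The helper names and the sample ages are assumptions for the example. It uses only the standard library and is meant to show the idea, not to be production-grade: real deployments should rely on a vetted differential privacy library, since naive floating-point noise and a non-cryptographic random generator can weaken the guarantee.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Noisy count of the items matching `predicate`.

    A counting query has sensitivity 1 (adding or removing one person changes
    the count by at most 1), so the Laplace scale is 1 / epsilon.
    """
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1 / epsilon, rng)


rng = random.Random(0)  # seeded only to make the demo repeatable
ages = [23, 35, 41, 52, 67, 29, 38]  # made-up data; the true count of 40+ is 3
print(private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng))
```

A smaller `epsilon` means more noise and stronger privacy; a larger one means a more accurate answer and weaker protection.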

When and Why to Use These Approaches:

These approaches are crucial whenever dealing with sensitive personal data that needs to be shared or analyzed while preserving individual privacy. This is particularly important in fields like healthcare, finance, and research. Addressing re-identification risks is not just a technical necessity but also a crucial step in building trust and maintaining ethical data practices.
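Before sharing a dataset, it can help to measure how well it satisfies the k-anonymity and l-diversity principles mentioned in the tips. The sketch below reports the smallest group size (k) and the fewest distinct sensitive values in any group (the simplest, "distinct" form of l-diversity). The `k_and_l` function, the column names, and the rows are hypothetical, and the function assumes a non-empty dataset.

```python
from collections import defaultdict


def k_and_l(rows, quasi_identifiers, sensitive):
    """Return (k, l) for a non-empty dataset.

    k is the smallest number of rows sharing the same quasi-identifier values;
    l is the fewest distinct sensitive values found in any such group.
    """
    groups = defaultdict(list)
    for row in rows:
        groups[tuple(row[q] for q in quasi_identifiers)].append(row[sensitive])
    k_min = min(len(vals) for vals in groups.values())
    l_min = min(len(set(vals)) for vals in groups.values())
    return k_min, l_min


# Made-up rows with generalized zip codes and age ranges.
rows = [
    {"zip": "021**", "age": "30-39", "diagnosis": "asthma"},
    {"zip": "021**", "age": "30-39", "diagnosis": "diabetes"},
    {"zip": "021**", "age": "40-49", "diagnosis": "asthma"},
    {"zip": "021**", "age": "40-49", "diagnosis": "asthma"},
]
print(k_and_l(rows, ("zip", "age"), "diagnosis"))  # (2, 1)
```

Here every group has two rows, so the data is 2-anonymous, but the 40-49 group shares a single diagnosis, so anyone known to be in that group has their diagnosis revealed. That gap is what l-diversity is meant to catch.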

Popularized By:

The importance of re-identification risks has been highlighted by prominent figures and research, including:

Latanya Sweeney's Data Re-identification Research: Dr. Sweeney's groundbreaking research demonstrated the vulnerability of seemingly anonymized data.

Paul Ohm's 'Broken Promises of Privacy' Paper: This influential paper explored the limitations of anonymization in the face of powerful data analysis techniques.

Apple's Differential Privacy Implementations: Apple's adoption of differential privacy in its products has brought the concept into mainstream awareness.

9. Third-Party Data Sharing and AI Ecosystems

Many AI systems are fueled by an intricate web of data sharing. They often operate within complex ecosystems where personal information flows between multiple parties, frequently without transparent disclosure. Data collected for one purpose can be shared, combined, and repurposed across networks of companies, leading to detailed profiles and inferences that go far beyond what users would reasonably expect. This data sharing can enrich AI models and enable innovative services, but it also raises critical questions about user control and data protection.

How it Works:

AI systems often rely on vast datasets to function effectively. These datasets can be enriched and expanded through data sharing agreements between different organizations. For example, a health app might share anonymized user data with a research institution to help develop new treatments. Alternatively, an e-commerce platform could share browsing history with advertising networks to personalize ads. These data flows, facilitated by APIs, data brokers, and cross-platform tracking technologies, create interconnected data ecosystems. Within these ecosystems, AI algorithms can analyze combined datasets to generate inferences about individual users, predicting their behaviors, preferences, and even future actions.

Examples:

Several real-world examples illustrate the potential risks of third-party data sharing within AI ecosystems:

Facebook's integration of WhatsApp data: Despite initial promises to keep user data separate, Facebook eventually integrated WhatsApp data into its advertising platform, raising concerns about the scope of data sharing within its ecosystem.

Credit bureaus selling alternative data to lenders: Credit bureaus are increasingly incorporating non-traditional data points, such as social media activity and online shopping habits, into credit scoring models, impacting lending decisions without users' explicit consent.

Ad networks sharing behavioral profiles across sites: Ad networks track users' browsing behavior across multiple websites, building detailed profiles that are then shared with advertisers to target personalized ads, often without full user awareness or control.

Health apps sharing sensitive data with dozens of third parties: Studies have revealed that some health apps share sensitive user data, including medical conditions and treatment information, with numerous third-party companies, raising concerns about data security and privacy.

Pros and Cons:

Pros:

Enables innovative services through data collaboration: Data sharing can facilitate the development of new and improved AI-powered services, such as personalized medicine and targeted advertising.

Can improve functionality through broader data access: Access to larger and more diverse datasets can improve the accuracy and effectiveness of AI models.

May create economic efficiencies: Data sharing can create economic benefits for businesses by enabling more efficient advertising and personalized services.

Cons:

Lack of transparency about data flows: Users often lack clarity about how their data is being shared and used within these complex ecosystems.

Limited user control over downstream uses: Once data is shared, users have limited control over how it is used by third parties.

Privacy policies rarely disclose full extent of sharing: Existing privacy policies often fail to adequately disclose the full scope of data sharing practices within AI ecosystems.

Regulatory gaps around derived and inferred data: Current regulations often struggle to address the privacy implications of derived and inferred data generated by AI systems within these ecosystems.

Tips for AI Professionals and Developers:

Create comprehensive data maps of information flows: Document and understand the flow of personal data within your AI system and across any third-party integrations.

Implement just-in-time notifications for sharing: Notify users when their data is being shared with third parties and provide them with meaningful choices about how their data is used.

Conduct vendor privacy assessments: Thoroughly assess the privacy practices of any third-party vendors with whom you share data.

Use data processing agreements with strong protections: Ensure that data processing agreements with third parties include strong data protection clauses.
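A data map does not need special tooling to get started. The sketch below models each flow of personal data as a record and flags flows that lack either a data processing agreement or user notification. The `DataFlow` fields, the vendor names, and the review rule are assumptions chosen for illustration; a real inventory would track more, such as legal basis, retention, and onward transfers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataFlow:
    category: str        # kind of personal data, e.g. "location" or "health"
    recipient: str       # hypothetical third-party vendor receiving the data
    purpose: str         # why the data is shared
    has_dpa: bool        # is a data processing agreement in place?
    user_notified: bool  # was the user told about this sharing?


def flows_needing_review(flows):
    """Return flows missing a data processing agreement or user notification."""
    return [f for f in flows if not (f.has_dpa and f.user_notified)]


flows = [
    DataFlow("location", "analytics-vendor", "usage statistics", True, True),
    DataFlow("health", "ad-network", "ad targeting", False, False),
    DataFlow("contacts", "crm-vendor", "support", True, False),
]
for flow in flows_needing_review(flows):
    print(flow.category, "->", flow.recipient)
# health -> ad-network
# contacts -> crm-vendor
```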

Why it Deserves its Place on the List:

Third-party data sharing within AI ecosystems represents a significant AI privacy concern because it often occurs without users' knowledge or consent. The lack of transparency and control, coupled with the potential for creating highly detailed and sensitive profiles, makes this a crucial issue to address. Reports like the Norwegian Consumer Council's 'Out of Control' report and investigations by Privacy International, as well as FTC enforcement actions against deceptive data sharing, highlight the growing concern around this practice. This issue directly impacts a wide range of users, from everyday consumers to individuals interacting with healthcare systems and financial institutions. Therefore, understanding and mitigating the risks associated with third-party data sharing is essential for building trust and ensuring responsible AI development.

10. Emotion Recognition and Affective Computing Privacy

Emotion recognition, a core application of affective computing, is a branch of AI that aims to detect and interpret human emotions. These systems analyze various signals, including facial expressions, voice tone, text, and even behavioral patterns, to infer an individual's emotional state. This technology raises serious AI privacy concerns because it delves into deeply personal information (our inner feelings), often without our explicit knowledge or consent. This intrusion into our psychological privacy creates significant risks of manipulation and misuse.

Specific features used in emotion recognition include facial expression analysis (detecting smiles, frowns, etc.), voice sentiment detection (analyzing tone and pitch), text-based emotion recognition (interpreting word choice and sentiment in written communication), and behavioral pattern analysis (tracking online activity and mouse movements to infer emotional states). While proponents argue that such technology can improve human-computer interaction and even assist in mental health diagnostics, the reality is that these systems often operate on contested scientific theories about universal emotions and make claims that go far beyond what's scientifically validated.

One example of a seemingly beneficial implementation is in customer service, where AI could analyze caller emotions to prioritize urgent or distressed individuals. However, this same technology could be easily misused to manipulate customers based on their perceived vulnerability. Similarly, educational software analyzing student engagement might sound helpful, but it could lead to unfair labeling and biased treatment of students based on their emotional responses. Other concerning examples include workplace monitoring systems tracking employee moods and social media sentiment analysis used for targeted advertising and manipulation.

Pros:

Improved Human-Computer Interaction: In specific contexts, emotion recognition might enhance user experience by allowing systems to adapt to user emotions.

Mental Health Support (with safeguards): With appropriate privacy protections and ethical guidelines, emotion recognition could potentially contribute to early detection of mental health concerns.

Accessibility Benefits: For individuals with communication difficulties, emotion recognition might offer new ways to interact with technology.

Cons:

Contested Scientific Basis: The underlying science of universal emotion detection is still debated, and many systems make claims that are not scientifically substantiated.

Highly Sensitive Inferences: Emotion recognition deals with deeply personal and private information, creating significant privacy risks.

Potential for Manipulation: This technology can be used to manipulate individuals based on their emotional vulnerabilities.

Tips for Responsible Use (When Absolutely Necessary):

Require Explicit Consent: Always obtain clear and informed consent before using any form of emotion recognition technology.

Avoid Coercive Contexts: Never deploy emotion recognition in coercive environments like employment or education, where individuals might feel pressured to comply.

Transparency and Disclosure: Clearly inform users about how the technology works, its limitations, and how the collected data will be used.

Data Control and Deletion: Allow users to review and delete their emotional profile data.

Emotion recognition deserves a place on this list of AI privacy concerns because it represents a significant potential threat to individual autonomy and psychological well-being. The inherent intimacy of the data being collected, combined with the often-unproven scientific basis of the technology, makes it ripe for misuse and manipulation. The work of organizations like the AI Now Institute and individuals like Kate Crawford, highlighting the risks of emotion AI, along with regulatory efforts like the EU AI Act's classification of emotion recognition as high-risk, underscore the urgency of addressing these AI privacy concerns. Microsoft's Responsible AI guidelines limiting emotion recognition also demonstrate a growing awareness of the ethical challenges posed by this technology. Developers, businesses, and policymakers must prioritize privacy and ethical considerations when developing and deploying emotion recognition systems. The potential benefits, while intriguing, must be carefully weighed against the considerable risks to individual privacy and autonomy.

10-Point AI Privacy Concerns Comparison

| Issue | Complexity (🔄) | Outcomes (📊) | Ideal Use Cases (⭐) | Advantages/Insights (💡) |
|---|---|---|---|---|
| Data Collection and Surveillance | High data handling and continuous monitoring complexity | Enables personalized services and enhanced security but raises surveillance risks | Smart devices and security systems | Improves system effectiveness when paired with privacy-by-design and data minimization |
| Algorithmic Bias and Discrimination | Challenging bias detection and mitigation processes | Drives fairness improvements but risks systematic discrimination | Decision-making tools, risk assessments, and credit scoring | Spurs fairness audits and model refinement when bias is actively addressed |
| Lack of Transparency and Explainability | Opaque design with difficult-to-audit processes | Protects proprietary methods yet undermines user trust and contestability | Proprietary systems with less regulatory exposure | Encourages balance using explainable AI methods and model cards for clarity |
| Data Security and Breach Vulnerabilities | Involves complex integration of robust security safeguards | Enhances threat detection but risks large-scale data breaches | High-value data systems requiring strict security | Promotes innovation in secure architectures with zero-trust and regular testing |
| Consent and Control Issues | Complicated by dynamic data uses and convoluted consent mechanisms | Supports personalized services but may confuse users on data control | Consumer apps needing granular permission controls | Fosters progressive disclosure and meaningful consent through layered notices |
| Facial Recognition and Biometric Privacy | Advanced matching algorithms with significant regulatory challenges | Offers convenient authentication while posing mass surveillance risks | Security authentication in controlled environments | Enhances identification if paired with strict opt-in consent and data retention policies |
| Automated Decision-Making Risks | Fully automated processes with limited human oversight | Delivers efficiency and consistency but may violate due process rights | High-volume decision environments with human review checkpoints | Boosts operational consistency while requiring accessible appeals and oversight |
| Re-identification and Anonymization Failures | Evolving techniques make robust anonymization increasingly complex | Risk of re-identification undermines privacy guarantees | Data settings demanding strong anonymization safeguards | Encourages differential privacy and formal risk assessments for improved protection |
| Third-Party Data Sharing and AI Ecosystems | Intricate integrations causing opaque data flow processes | Facilitates innovative services but compromises data transparency | Collaborative multi-party data environments | Stimulates comprehensive data mapping and vendor privacy assessments for clearer accountability |
| Emotion Recognition and Affective Computing Privacy | Real-time analysis with high sensitivity and unique complexity | Enhances human-computer interaction yet may intrude on personal privacy | User experience enhancement in consent-based, controlled settings | Improves accessibility when paired with explicit consent and clear disclosure policies |

Navigating the Future of AI Privacy

AI's rapid advancement presents incredible opportunities, but also significant AI privacy concerns. From data collection and surveillance to algorithmic bias and the risks of facial recognition technology, this article has explored ten crucial areas where AI can impact our privacy. We've discussed challenges related to data security, transparency, consent, and the potential for misuse through automated decision-making and third-party data sharing. Understanding these core issues is the first step towards mitigating the risks.

The key takeaway is that responsible AI development and deployment are paramount. We must prioritize ethical considerations alongside innovation. For developers and engineers, this means building AI systems with privacy by design, incorporating robust security measures, and striving for transparency and explainability. For users, it means being informed about how your data is being used and advocating for greater control over your digital footprint. As AI continues to evolve, it's crucial to stay informed about its impact on privacy. For students navigating the digital landscape, understanding the capabilities and limitations of AI tools is essential.

Mastering these concepts and choosing privacy-preserving AI solutions is not just about protecting individual data; it's about shaping a future where AI genuinely benefits everyone. Tools like MultitaskAI, which prioritizes user privacy through features like direct API connections and self-hosting, offer a glimpse into how we can maintain control in the age of AI. By actively participating in the conversation around AI privacy and supporting responsible development, we can ensure that the future of AI is one of innovation, progress, and respect for individual rights. Let's build a future where technology empowers us without compromising our fundamental right to privacy.