AI Model Comparison: Top Picks for 2025

Dive into our AI model comparison for 2025, with detailed reviews and feature breakdowns to help you find the right AI tool.

Unlocking the Power of AI: A 2025 Guide to Choosing the Right Model

Finding the optimal AI model for your project can be challenging. This AI model comparison helps you navigate the complexities of selecting the right tool for your needs. Whether you're building a cutting-edge application or streamlining your workflow, this list of the top 10 picks for 2025 (MultitaskAI, GPT-4, Claude 3 Opus, Gemini 1.5 Pro, Llama 3, Mistral Large, PaLM 2, Claude 3 Sonnet, GPT-3.5 Turbo, and Cohere Command) provides the insights you need to decide which one best suits your specific requirements.

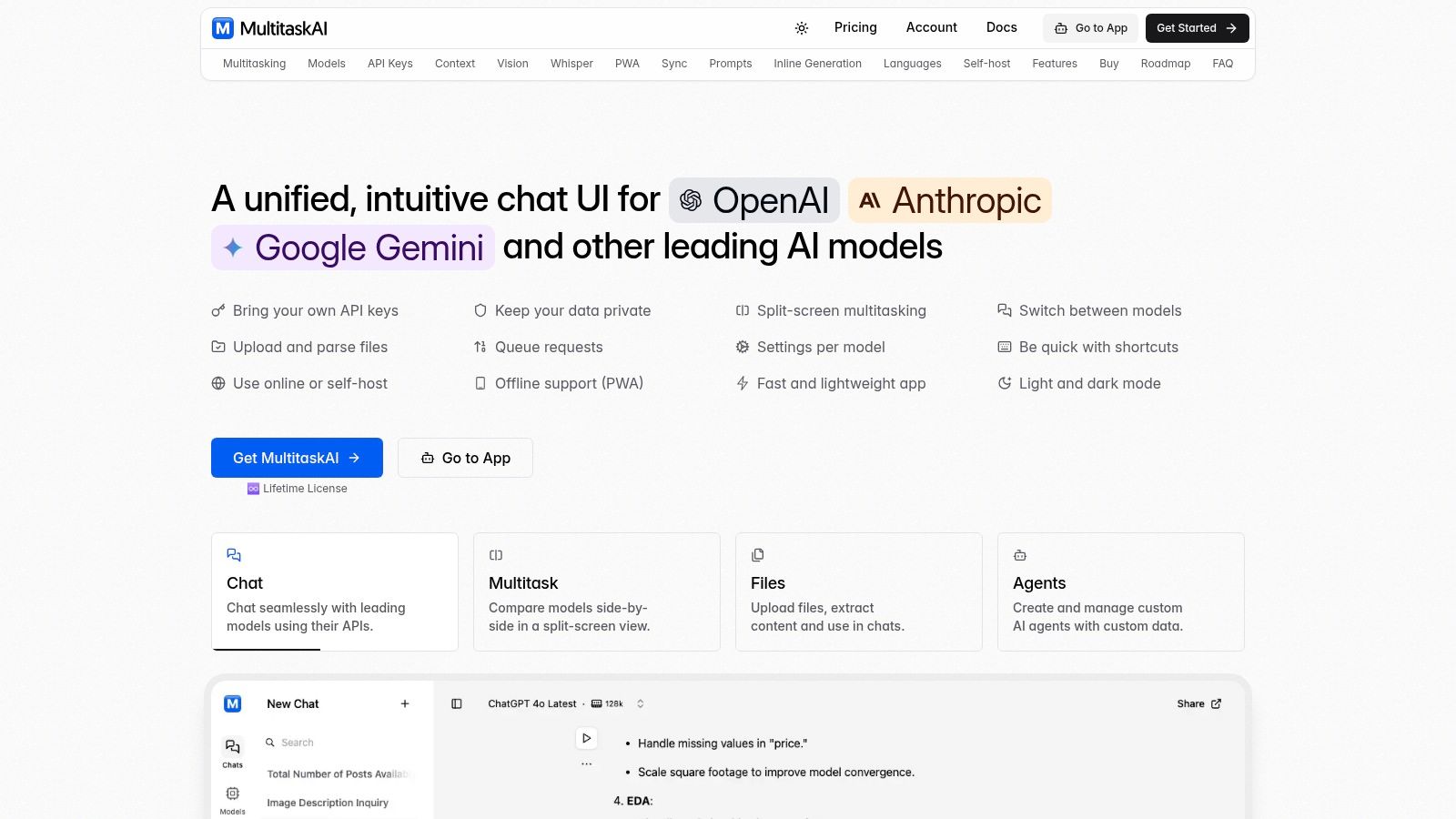

1. MultitaskAI

MultitaskAI is a browser-based platform designed for efficient AI model comparison and streamlined workflow management. Unlike single-model interfaces, MultitaskAI lets you connect to and interact with large language models (LLMs) from providers like OpenAI, Anthropic, and Google, all within a single, unified interface. This makes it valuable for comparing outputs, testing different prompts, and ultimately choosing the best model for specific tasks. Whether you're an AI professional fine-tuning model parameters, a developer integrating LLMs into your application, or a tech-savvy entrepreneur exploring different AI solutions, MultitaskAI provides a centralized hub to manage your entire AI workflow. You can compare how different models respond to the same prompt side-by-side, or queue up a series of prompts to run in the background while you focus on other tasks. For those seeking a tool for AI model comparison, MultitaskAI is a strong contender.

One of the key advantages of MultitaskAI is its focus on data privacy. You use your own API keys for direct access to the models, eliminating the need to share your data with a third-party platform. This also gives you granular control over usage and costs. Further enhancing privacy is the option to self-host MultitaskAI on your own static file server. This level of control is crucial for sensitive projects and businesses with strict data governance policies. For added flexibility, a hosted version is also available for those who prefer a hassle-free setup.

MultitaskAI streamlines your AI workflow through a broad set of features. Split-screen conversations enable true multitasking, allowing for real-time AI model comparison and analysis. Background processing and queueable prompts keep your workflow moving, even with complex tasks. Beyond comparisons, MultitaskAI provides file integration for processing documents, the ability to create custom AI agents for specific tasks, dynamic prompts for complex interactions, and offline Progressive Web App (PWA) support for uninterrupted access. These features combine to create a highly productive environment for anyone working with LLMs.
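To make the idea of queued, side-by-side comparison concrete, here is a minimal sketch of the general pattern. It is not MultitaskAI's implementation: `model_a`, `model_b`, and `compare` are hypothetical names, and the two "models" are local stub functions standing in for real provider API calls.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

# A "model" here is any function that maps a prompt to a reply.
ModelFn = Callable[[str], str]


def model_a(prompt: str) -> str:
    # Hypothetical stub; a real version would call a provider API.
    return f"[model-a] {prompt.upper()}"


def model_b(prompt: str) -> str:
    # Hypothetical stub; a real version would call a different provider API.
    return f"[model-b] {prompt[::-1]}"


def compare(prompts: List[str], models: Dict[str, ModelFn]) -> List[Dict[str, str]]:
    """Run each queued prompt against every model and collect one row per prompt."""
    results = []
    with ThreadPoolExecutor() as pool:
        for prompt in prompts:
            # Send the same prompt to all models concurrently.
            futures = {name: pool.submit(fn, prompt) for name, fn in models.items()}
            row = {"prompt": prompt}
            # Gather each model's reply under its name for side-by-side viewing.
            row.update({name: fut.result() for name, fut in futures.items()})
            results.append(row)
    return results


if __name__ == "__main__":
    queue = ["summarize this", "translate this"]
    for row in compare(queue, {"model-a": model_a, "model-b": model_b}):
        print(row)
```

Keeping every model behind the same prompt-in, reply-out signature is what makes the comparison loop independent of any one provider.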

MultitaskAI offers a cost-effective one-time lifetime license that includes unlimited access, 5 device activations, and free future updates. This is a significant advantage compared to subscription-based models, offering predictable long-term costs. While the platform requires managing your own API keys, which might present a slight learning curve for absolute beginners, the detailed documentation and intuitive interface make the setup process straightforward. The extensive feature set might initially seem overwhelming for new users, but the benefits quickly become apparent as you integrate MultitaskAI into your workflow.

Key Features & Benefits:

- Multi-Model Interface: Connect to OpenAI, Anthropic, Google, and other leading LLMs.

- Split-Screen Comparisons: Compare model outputs side-by-side.

- Data Privacy: Use your own API keys; self-hosting option available.

- Multitasking: Background processing and queueable prompts.

- Advanced Features: File integration, custom agents, dynamic prompts, offline PWA support.

- Cost-Effective: One-time lifetime license with updates and multiple device activations.

Pros:

- Total data privacy with direct API key integration and self-hosting options.

- Efficient multitasking with split-screen chats, background processing, and queueable prompts.

- Robust feature set including file uploads, custom agents, dynamic prompts, and offline PWA support.

- Cost-effective one-time payment model with lifetime updates and multiple device activations.

- Highly customizable settings per model for fine-tuned AI responses.

Cons:

- Requires managing your own API keys.

- The extensive feature set could be overwhelming for beginners.

Website: https://multitaskai.com

MultitaskAI stands out as a valuable tool for anyone serious about leveraging the power of multiple AI models. Its unique combination of multi-model access, robust features, data privacy focus, and a cost-effective licensing model makes it a compelling choice for AI professionals, developers, and tech-savvy entrepreneurs looking to optimize their AI workflows.

Get started with your lifetime license

Enjoy unlimited conversations with MultitaskAI and unlock the full potential of cutting-edge language models—all with a one-time lifetime license.

Demo

Free

Try the full MultitaskAI experience with all features unlocked. Perfect for testing before you buy.

- Full feature access

- All AI model integrations

- Split-screen multitasking

- File uploads and parsing

- Custom agents and prompts

- Data is not saved between sessions

Lifetime License

Most Popular: €99 (€149)

One-time purchase for unlimited access, lifetime updates, and complete data control.

- Everything in Free

- Data persistence across sessions

- MultitaskAI Cloud sync

- Cross-device synchronization

- 5 device activations

- Lifetime updates

- Self-hosting option

- Priority support

Loved by users worldwide

See what our community says about their MultitaskAI experience.

Finally found a ChatGPT alternative that actually respects my privacy. The split-screen feature is a game changer for comparing models.

Sarah

Been using this for months now. The fact that I only pay for what I use through my own API keys saves me so much money compared to subscriptions.

Marcus

The offline support is incredible. I can work on my AI projects even when my internet is spotty. Pure genius.

Elena

Love how I can upload files and create custom agents. Makes my workflow so much more efficient than basic chat interfaces.

David

Self-hosting this was easier than I expected. Now I have complete control over my data and conversations.

Rachel

The background processing feature lets me work on multiple conversations at once. No more waiting around for responses.

Alex

Switched from ChatGPT Plus and haven't looked back. This gives me access to all the same models with way more features.

Maya

The sync across devices works flawlessly. I can start a conversation on my laptop and continue on my phone seamlessly.

James

As a developer, having all my chats, files, and agents organized in one place has transformed how I work with AI.

Sofia

The lifetime license was such a smart purchase. No more monthly fees, just pure productivity.

Ryan

Queue requests feature is brilliant. I can line up my questions and let the AI work through them while I focus on other tasks.

Lisa

Having access to Claude, GPT-4, and Gemini all in one interface is exactly what I needed for my research.

Mohamed

The file parsing capabilities saved me hours of work. Just drag and drop documents and the AI understands everything.

Emma

Dark mode, keyboard shortcuts, and the clean interface make this a joy to use daily.

Carlos

Fork conversations feature is perfect for exploring different ideas without losing my original train of thought.

Aisha

The custom agents with specific instructions have made my content creation process so much more streamlined.

Thomas

Best investment I've made for my AI workflow. The features here put other chat interfaces to shame.

Zoe

Privacy-first approach was exactly what I was looking for. My data stays mine.

Igor

The PWA works perfectly on mobile. I can access all my conversations even when I'm offline.

Priya

Support team is amazing. Quick responses and they actually listen to user feedback for improvements.

Nathan

2. GPT-4 (OpenAI)

GPT-4 is one of OpenAI's most capable large language models and a significant contender in any AI model comparison. It is multimodal, meaning it can process both text and images, which sets it apart from text-only models and opens up applications not possible with previous generations. According to OpenAI, GPT-4 reaches human-level performance on various professional and academic benchmarks, with marked improvements over its predecessor, GPT-3.5, in reasoning, instruction following, and safety. Its stronger grasp of context and its more accurate, nuanced responses make it a powerful tool for a wide range of tasks.

For AI professionals and developers, GPT-4's advanced reasoning and problem-solving abilities are a major step forward. Software engineers and programmers can leverage its improved contextual understanding for tasks like code generation and debugging. Tech-savvy entrepreneurs can use its creative content generation to streamline content creation and marketing efforts. ChatGPT and other LLM users will appreciate the higher accuracy on complex tasks and instructions. Users of other providers' models, such as Anthropic's Claude or Google Gemini, as well as indie hackers, will also find GPT-4's performance impressive. Digital marketers can use GPT-4 for generating marketing copy, analyzing market trends, and personalizing customer interactions.

One of the key features distinguishing GPT-4 in an AI model comparison is its multimodal processing. While models like GPT-3.5 are limited to text, GPT-4 can analyze images and incorporate visual information into its responses. This allows for innovative applications such as image captioning, visual question answering, and even generating website layouts from hand-drawn sketches. Its expanded knowledge cutoff date compared to previous versions ensures access to more up-to-date information.

However, GPT-4's advanced capabilities come at a cost. It's more expensive than other models like GPT-3.5, and its high computational requirements might be a barrier for some users. Learn more about GPT-4 (OpenAI) to understand the pricing structure and available options. While significantly improved, GPT-4 is still prone to hallucinations and factual errors, a common challenge with large language models. Being aware of these limitations is crucial for responsible implementation.
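Since API pricing for models like these is typically quoted per token, a small calculation can show how a price gap between two models adds up per request. The sketch below is illustrative only: the `Pricing` class, `request_cost` function, and both price points are hypothetical placeholders, not quoted rates for GPT-4, GPT-3.5, or any other model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    input_per_million: float   # USD per 1M input tokens
    output_per_million: float  # USD per 1M output tokens


def request_cost(pricing: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one request, billed separately for input and output tokens."""
    total = (input_tokens * pricing.input_per_million
             + output_tokens * pricing.output_per_million)
    return total / 1_000_000


# Placeholder prices chosen for illustration; check the provider's pricing page.
PREMIUM = Pricing(input_per_million=10.0, output_per_million=30.0)
BUDGET = Pricing(input_per_million=1.0, output_per_million=2.0)

if __name__ == "__main__":
    for name, pricing in [("premium", PREMIUM), ("budget", BUDGET)]:
        cost = request_cost(pricing, input_tokens=2_000, output_tokens=500)
        print(f"{name}: ${cost:.4f} per request")
```

Multiplying the per-request figure by expected daily volume gives a quick estimate of whether the stronger model is worth its premium for a given workload.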

Pros:

- Superior performance on complex reasoning tasks

- Excellent creative content generation

- Higher factual accuracy than previous models

- Strong ability to follow nuanced instructions

- Multimodal processing (text and images)

Cons:

- Expensive compared to other models

- Still prone to hallucinations and factual errors

- High computational requirements

- Limited by knowledge cutoff date

Website: https://openai.com/gpt-4

GPT-4 earns its place on this AI model comparison list because of its groundbreaking multimodal capabilities and significant advancements in reasoning and accuracy. Despite the higher cost and computational needs, its potential to revolutionize various fields, from software development to digital marketing, is undeniable. Its inclusion is essential for anyone looking to understand the current state-of-the-art in large language models.

3. Claude 3 Opus (Anthropic)

When conducting an AI model comparison, Claude 3 Opus from Anthropic demands serious consideration, especially for tasks requiring intricate analysis and robust reasoning. This powerful AI assistant, the most capable in the Claude 3 family, is engineered for complex challenges, excelling in areas like nuanced instruction following, creative content generation, and tackling intricate reasoning problems with impressive accuracy and a thoughtful approach. This makes it an ideal choice for professionals and developers looking for a reliable and powerful AI solution.

Claude 3 Opus stands out in the crowded field of AI models due to its superior reasoning and analytical capabilities. It shines in scenarios demanding deep understanding and precise execution of complex instructions. Think high-stakes situations like legal document analysis, financial modeling, or scientific research where accuracy and nuanced understanding are paramount. For instance, it can analyze lengthy legal contracts, identify key clauses, and summarize complex legal concepts with clarity. In a financial context, it can process market data, identify trends, and assist with investment strategies. Learn more about Claude 3 Opus (Anthropic) and explore its potential in diverse applications.

Its multimodal understanding (processing both text and image inputs) opens doors to innovative applications. Imagine feeding it a complex scientific diagram alongside a research paper; Claude 3 Opus can potentially synthesize information from both sources, generating insights that wouldn't be possible with text-only models. Its extended context window of up to 200K tokens allows it to handle substantially longer pieces of text, making it suitable for processing entire books or extensive codebases for analysis, summarization, or translation. This feature significantly enhances its utility for tasks requiring a comprehensive understanding of lengthy documents.

Anthropic positions Claude 3 Opus as the premium model in the Claude 3 family, with launch pricing of $15 per million input tokens and $75 per million output tokens, so expect a higher cost than smaller models like Claude 3 Haiku. This premium reflects its advanced features and stronger performance. Availability varies by platform and API, so developers should check Anthropic's website for the latest information.

Pros:

- Exceptional Reasoning: Excels in tasks requiring intricate logic and nuanced understanding.

- Nuanced Instruction Following: Handles ambiguous instructions with remarkable accuracy.

- Enhanced Safety: Built-in safety measures minimize harmful outputs.

- Clear Explanations: Articulates complex concepts in an easily understandable manner.

Cons:

- Higher Cost: Expect a premium price point compared to smaller models.

- Knowledge Cutoff: Limited by the data it was trained on.

- Processing Time: Complex requests can take longer to process.

- Limited Availability: Access may be restricted on certain platforms.

In conclusion, Claude 3 Opus earns its place in any AI model comparison for its exceptional reasoning capabilities, advanced features, and focus on safety. While cost and availability might be factors to consider, its superior performance makes it a compelling option for professionals and developers seeking a powerful and reliable AI assistant for demanding tasks. Its potential for groundbreaking applications across various fields solidifies its position as a leading contender in the evolving landscape of AI.

4. Gemini 1.5 Pro (Google)

In the rapidly evolving landscape of AI models, Google's Gemini 1.5 Pro stands out as a powerful contender. This advanced multimodal model is designed for a comprehensive understanding and processing of diverse data types, including text, images, audio, video, and code. Its key differentiator is its impressive 1 million token context window, equivalent to roughly 700,000 words, enabling it to handle vast amounts of information within a single prompt. This makes Gemini 1.5 Pro particularly well-suited for complex analytical tasks that demand a deep understanding of extensive contexts. Think analyzing lengthy legal documents, conducting comprehensive literature reviews, or processing intricate codebases. In an AI model comparison, this extensive context window truly sets it apart.
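The figures above imply a rough conversion of 0.7 words per token (700,000 words per 1 million tokens). The sketch below uses that ratio to estimate whether a document fits in a given context window. It is a back-of-the-envelope estimate, not a real tokenizer: `estimate_tokens` and `fits` are hypothetical helpers, and actual token counts depend on the model's tokenizer and the language of the text. The 200K figure is the Claude 3 Opus window cited earlier in this article.

```python
# Ratio implied by the article: 1,000,000 tokens is roughly 700,000 words.
TOKENS_REF = 1_000_000
WORDS_REF = 700_000

# Context window sizes as cited in this article.
CONTEXT_WINDOWS = {
    "Gemini 1.5 Pro": 1_000_000,
    "Claude 3 Opus": 200_000,
}


def estimate_tokens(text: str) -> int:
    """Estimate token count from whitespace-separated words (rounded up)."""
    words = len(text.split())
    # Integer ceiling division avoids floating-point rounding surprises.
    return (words * TOKENS_REF + WORDS_REF - 1) // WORDS_REF


def fits(text: str, window_tokens: int, reserve_for_output: int = 0) -> bool:
    """True if the estimated prompt plus reserved output fits in the window."""
    return estimate_tokens(text) + reserve_for_output <= window_tokens


if __name__ == "__main__":
    document = "word " * 300_000  # stand-in for a 300,000-word document
    for model, window in CONTEXT_WINDOWS.items():
        print(model, "fits" if fits(document, window) else "does not fit")
```

Reserving part of the window for the model's reply (`reserve_for_output`) matters in practice, because the prompt and the response share the same token budget.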

Built on a mixture-of-experts architecture, Gemini 1.5 Pro offers efficient processing and strong performance, especially in coding and mathematical reasoning. Its multimodal capabilities open doors to innovative applications, allowing for real-time reasoning across multiple input types simultaneously. Imagine analyzing a video lecture, summarizing its key points, and generating corresponding code examples all in one process. This is the power of multimodal AI brought to life. For developers, the model's strength in code and mathematical reasoning makes it a valuable tool for tasks ranging from code generation and debugging to complex problem-solving. If you're interested in exploring alternatives, you can learn more about Gemini 1.5 Pro (Google).

Gemini 1.5 Pro earns its spot in this AI model comparison because of its extensive context window, multimodal proficiency, and strength in technical domains. However, it's important to be aware of its limitations. Some features are being rolled out gradually, and performance can be inconsistent across specialized domains. Additionally, access to Gemini 1.5 Pro is more limited than for some established competitors, and leveraging its full capabilities requires significant computational resources. Pricing and technical requirements change over time, so check Google's documentation for current details.

Pros:

- Exceptional context handling for analyzing large documents and complex tasks.

- Superior multimodal understanding across text, images, audio, and video.

- Strong performance on technical tasks, including coding and mathematical reasoning.

- More efficient resource utilization than many competitors thanks to its architecture.

Cons:

- Still in development with ongoing feature rollouts and potential inconsistencies.

- Inconsistent performance in some specialized domains requires further refinement.

- Limited availability restricts broader access for some users.

- Higher computational requirements for full functionality may pose a barrier.

5. Llama 3 (Meta)

Llama 3 is Meta's latest offering in the open-source large language model (LLM) arena, and a strong contender in any AI model comparison. It comes in various sizes, ranging from 8 billion to 70 billion parameters, catering to diverse needs and computational resources. This flexibility makes it suitable for both researchers exploring the frontiers of AI and commercial applications seeking powerful yet cost-effective solutions. Llama 3 aims to democratize access to cutting-edge LLM technology, offering an alternative to proprietary models that often come with hefty price tags or restricted access. Its improved instruction-following and reasoning capabilities make it a versatile tool for a wide range of tasks.

One of the key advantages of Llama 3 in the AI model comparison landscape is that its weights are openly available. This allows developers to customize and fine-tune the model to their specific needs, unlike closed-source alternatives. For example, a digital marketer could fine-tune Llama 3 on a dataset of marketing copy to generate more effective ad campaigns. Similarly, software engineers can integrate Llama 3 into applications ranging from chatbots to code generation tools. The Meta Llama 3 Community License also permits commercial use, subject to its attribution requirements and other terms, making it a viable option for businesses looking to use LLMs without significant upfront costs.

Llama 3's flexibility extends to deployment options as well. Developers can choose to run it on local hardware, which offers enhanced data privacy, or leverage cloud-based solutions for easier scalability. This is particularly relevant for industries with strict data security regulations, such as healthcare. While the larger variants require substantial computing power, the availability of smaller parameter sizes allows users with limited resources to experiment and deploy Llama 3 effectively. This scalability makes it a compelling choice for indie hackers or startups looking to incorporate AI capabilities without massive infrastructure investments.
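A quick way to judge whether a given Llama 3 size is realistic on local hardware is to estimate the memory needed just to hold the weights: parameter count times bytes per parameter. The sketch below does that arithmetic for the 8B and 70B sizes mentioned above. The function name and precision labels are illustrative assumptions, and the result is a lower bound, since activations, the KV cache, and runtime overhead add to it.

```python
# Bytes used to store one parameter at common precisions (assumed labels).
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billions: float, precision: str) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes).

    params_billions * 1e9 parameters * bytes per parameter / 1e9 bytes per GB
    simplifies to params_billions * bytes per parameter.
    """
    return params_billions * BYTES_PER_PARAM[precision]


if __name__ == "__main__":
    for size in (8, 70):
        for precision in ("fp16", "int8", "int4"):
            gb = weight_memory_gb(size, precision)
            print(f"Llama 3 {size}B @ {precision}: ~{gb:g} GB for weights")
```

By this estimate the 8B model at 16-bit precision needs about 16 GB for weights, while the 70B model needs about 140 GB, which is why the larger variant usually calls for multiple GPUs or quantization.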

Features and Benefits:

- Open-source with various parameter sizes (8B-70B): Offers flexibility and scalability for diverse applications and resources.

- Improved instruction-following: Leads to more accurate and predictable outputs, simplifying prompt engineering.

- Strong performance on reasoning and language understanding benchmarks: Enables complex tasks such as text summarization, question answering, and code generation.

- Responsible AI principles: Designed with ethical considerations in mind, mitigating potential risks associated with AI deployment.

- Flexible deployment options (local or cloud): Caters to varying needs for privacy, security, and scalability.

Pros:

- Open-source nature allows customization and fine-tuning.

- Can be deployed on local hardware for privacy.

- Free for research and commercial applications (with proper licensing).

- Competitive performance with many proprietary models.

Cons:

- Requires significant computing resources for larger variants.

- Less optimized out-of-the-box compared to some commercial alternatives.

- Knowledge cutoff limitations similar to other LLMs.

- May require more prompt engineering to achieve optimal results, though this has improved over previous versions.

Website: https://ai.meta.com/llama/

Llama 3's combination of strong performance, open accessibility, and flexible deployment options makes it a valuable tool in the rapidly evolving AI landscape. Its inclusion in any AI model comparison is justified by its potential to empower both researchers and developers in pushing the boundaries of what's possible with large language models. Whether you’re a ChatGPT user exploring alternatives, a Google Gemini user seeking open-source options, or an Anthropic user interested in different architectures, Llama 3 offers a compelling proposition worth investigating.

6. Mistral Large (Mistral AI)

Mistral Large is Mistral AI's flagship large language model (LLM), designed to tackle complex reasoning, coding, and general language understanding tasks. It distinguishes itself through a combination of strong performance and efficient resource utilization, making it a compelling option in the competitive AI model landscape. Developed using Direct Preference Optimization (DPO), Mistral Large is fine-tuned to align with human preferences, leading to more natural and helpful interactions. This focus makes it particularly adept at following detailed instructions and handling specialized tasks within specific domains. Its presence in this AI model comparison is well-deserved, offering a powerful yet efficient alternative to other established players.

Practical Applications and Use Cases:

- Advanced Coding Assistance: Mistral Large excels at code generation and debugging across various programming languages, making it a valuable tool for software engineers and programmers. It can assist with tasks ranging from writing simple scripts to developing complex software components.

- Complex Reasoning and Problem Solving: From analyzing intricate datasets to generating creative solutions, Mistral Large can be leveraged for tasks demanding robust reasoning capabilities. This makes it suitable for research, data analysis, and strategic planning applications.

- Content Creation and Marketing: The model's strong language understanding and generation skills can be applied to content creation, marketing copywriting, and other tasks requiring natural and engaging text. Digital marketers can leverage it for crafting compelling campaigns and personalized content.

- Chatbots and Conversational AI: Mistral Large's human preference alignment through DPO makes it well-suited for developing sophisticated chatbots and conversational AI agents. This is particularly valuable for businesses looking to enhance customer service and automate interactions.

- Research and Development: Its efficient architecture makes it an attractive option for researchers exploring new AI applications and pushing the boundaries of LLM capabilities, especially those working with limited computational resources.

Features and Benefits:

- Efficient Architecture: Mistral Large provides an excellent performance-to-parameter ratio, meaning it achieves high performance with relatively fewer parameters than some competitors. This translates to lower computational costs and faster processing times.

- Strong Reasoning and Instruction Following: The model excels at following complex instructions and performing intricate reasoning tasks, making it highly adaptable to various use cases.

- Human Preference Alignment (DPO): Trained with DPO, Mistral Large is more likely to generate outputs aligned with human expectations and preferences.

- Multilingual Support: It offers robust performance across multiple languages, expanding its accessibility and utility for global users.

Pros:

- Excellent performance relative to resource consumption.

- Balances strong reasoning with factual accuracy.

- More resource-efficient than many comparable LLMs.

- Presents a European-based alternative in a largely US-dominated AI landscape.

Cons:

- Smaller developer ecosystem compared to more established models like OpenAI or Google's offerings.

- Less comprehensive documentation and available resources.

- Knowledge limitations based on its training data cutoff date.

- May encounter challenges with some highly specialized domains.

Pricing and Technical Requirements:

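Mistral Large is offered through Mistral AI's hosted API platform (La Plateforme) and through cloud partners such as Microsoft Azure, with usage-based, per-token pricing. Because rates and model versions change, refer to the Mistral AI website for the most up-to-date information.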

Comparison with Similar Tools:

Mistral Large competes with other prominent LLMs like OpenAI's GPT models, Google's Gemini, and Anthropic's Claude. While it might not have the same extensive ecosystem or readily available resources as these established models, Mistral Large stands out with its impressive performance-to-parameter ratio, making it a strong contender for users prioritizing efficiency and cost-effectiveness. Its European origins also offer an alternative for those seeking diversity in the AI provider landscape.

Implementation and Setup Tips:

Mistral Large is accessed through an API key issued on Mistral AI's platform. Refer to the official Mistral AI website (https://mistral.ai/) and its documentation for the latest instructions on creating a key, choosing a model version, and calling the model. Because the model lineup changes frequently, following the official channels is the most reliable way to stay current.
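For readers who already have an API key, a request to Mistral's hosted chat completions endpoint might look like the following minimal sketch. The endpoint URL, the `mistral-large-latest` model alias, the `temperature` value, and the helper name `build_mistral_request` are assumptions for illustration and should be checked against the official documentation. The request is built but deliberately not sent.

```python
import json
import urllib.request

# Assumption: Mistral's hosted chat completions endpoint and the
# "mistral-large-latest" alias; confirm both in the official docs.
MISTRAL_URL = "https://api.mistral.ai/v1/chat/completions"


def build_mistral_request(api_key: str, prompt: str,
                          model: str = "mistral-large-latest") -> urllib.request.Request:
    """Build (but do not send) a chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.2,  # illustrative value; lower means less random output
    }
    return urllib.request.Request(
        MISTRAL_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_mistral_request("YOUR_API_KEY", "Write a Python function that reverses a string.")
# With a real key, urllib.request.urlopen(request) would send it.
```

Keeping request construction separate from sending makes it easy to inspect or log the payload before spending any tokens.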

7. PaLM 2 (Google)

PaLM 2 is Google's powerful large language model (LLM) and a notable entry in this AI model comparison. It serves as the foundation for many of Google's AI products, offering improved multilingual capabilities, reasoning skills, and code generation compared to its predecessor. Trained on a massive dataset encompassing code, mathematical content, and multilingual text, PaLM 2 is a versatile tool suitable for various applications like translation, summarization, and question answering. Its strength lies in its ability to understand and generate text in over 100 languages, making it a valuable asset for global communication and content creation. This makes it a strong choice for developers and businesses seeking a robust and multilingual LLM for a wide range of tasks.

PaLM 2’s enhanced logical reasoning capabilities make it particularly well-suited for tasks requiring complex problem-solving. For example, it can be used to analyze data, draw inferences, and generate solutions for scientific, mathematical, or business challenges. Furthermore, its efficient architecture and improved inference speed contribute to a smooth and responsive user experience, even with complex prompts. This efficiency is crucial for time-sensitive applications and high-volume processing. Software engineers and programmers will find PaLM 2's superior coding capabilities in multiple programming languages particularly useful for tasks like code generation, debugging, and code completion. Its proficiency in understanding and generating code makes it a valuable tool for accelerating software development workflows.

Features and Benefits:

- Advanced multilingual support: Supports over 100 languages, facilitating communication and content creation across diverse linguistic landscapes.

- Strong logical reasoning: Excels in complex problem-solving and analytical tasks, making it suitable for scientific research, data analysis, and more.

- Efficient architecture: Improved inference speed provides a responsive user experience.

- Superior coding capabilities: Supports multiple programming languages, aiding developers in code generation, debugging, and completion.

- Enhanced factual knowledge: Demonstrates strong understanding across various domains, improving accuracy and reliability in information retrieval and generation.

- Tight integration with the Google ecosystem: Seamlessly integrates with other Google services and tools, enhancing workflow efficiency.

Pros:

- Excellent multilingual understanding and generation.

- Strong mathematical and scientific reasoning capabilities.

- Well-integrated with Google's ecosystem of tools.

- Good performance on code-related tasks.

Cons:

- Less accessible for independent developers compared to open-source models.

- Being progressively replaced by Google's newer Gemini models. This means future development and support might be prioritized for Gemini.

- Limited customization options for specific use cases.

- Restricted access through Google's APIs only. This can be a barrier for those seeking direct access to the model's architecture.

Pricing and Technical Requirements:

Pricing information for PaLM 2 is available through Google AI's platform. Access is primarily provided through Google's APIs, and technical requirements vary depending on the specific implementation and use case.

Comparison with Similar Tools:

Compared to other large language models like GPT-3 or Anthropic's Claude, PaLM 2 distinguishes itself with its superior multilingual capabilities and strong integration within the Google ecosystem. While open-source alternatives offer greater flexibility for customization, PaLM 2's tight integration with Google services provides a streamlined experience within that environment.

Implementation and Setup Tips:

Accessing PaLM 2 is typically done through Google AI's platform and APIs. Consult Google's official documentation for the most up-to-date information on API access, integration procedures, and specific usage guidelines.

Why PaLM 2 Deserves its Place in this List:

PaLM 2 earns its spot in this AI model comparison due to its versatility, strong performance across multiple domains (including multilingualism, reasoning, and coding), and seamless integration within the Google ecosystem. While access limitations and the rise of Gemini might influence future considerations, PaLM 2 remains a powerful and relevant tool for a variety of AI applications. Its broad range of capabilities and robust performance make it a valuable resource for AI professionals, developers, and businesses seeking a reliable LLM solution. You can explore more about PaLM 2 on the official Google AI website.

8. Claude 3 Sonnet (Anthropic)

Claude 3 Sonnet is Anthropic's mid-range model, slotting neatly between the powerhouse Claude 3 Opus and the nimble Claude 3 Haiku. This positioning makes it an attractive option in this AI model comparison, offering a balance of performance and efficiency. Sonnet is designed for a broad range of business and personal use cases, providing strong capabilities in reasoning, coding, and creative tasks without the hefty price tag or latency of its larger sibling, Opus. This makes it an ideal choice for users who need a robust yet practical AI solution.

Sonnet offers a 200,000-token context window, so it can take in much longer inputs within a single request. This makes it well-suited for tasks like summarizing lengthy documents, analyzing complex codebases, or generating extended creative content. Its multimodal capabilities allow it to accept images alongside text as input, opening up possibilities for applications like image captioning, visual question answering, and analysis of charts or scanned documents. Anthropic has also focused on improving safety mechanisms in Claude 3 Sonnet to reduce the likelihood of harmful or misleading outputs, a crucial factor for businesses and developers prioritizing responsible AI deployment.
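Whether a document fits in a model's context window can be estimated before it is sent. The sketch below uses a rough rule of thumb of about 4 characters per token for English text, which is an assumption and not any provider's tokenizer. The function names and the window sizes in the example are hypothetical.

```python
import math

# Rule-of-thumb assumption: roughly 4 characters per token for English text.
# Real tokenizers vary by model and language, so treat results as estimates.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Rough token estimate based on character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(text: str, context_window: int, reserved_for_output: int = 1_000) -> bool:
    """Check whether the text likely fits, leaving room for the model's reply."""
    return estimate_tokens(text) + reserved_for_output <= context_window


def split_into_chunks(text: str, max_tokens: int) -> list:
    """Split text into pieces whose estimated size stays within max_tokens."""
    max_chars = max_tokens * CHARS_PER_TOKEN
    return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]


document = "word " * 10_000  # 50,000 characters
print(estimate_tokens(document))                           # estimated token count
print(fits_in_context(document, context_window=8_000))     # small illustrative window
print(len(split_into_chunks(document, max_tokens=4_000)))  # pieces needed if it does not fit
```

For anything close to the limit, the provider's own token counting tools give a more reliable answer than a character-based estimate.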

One of Sonnet's key strengths is its balanced performance profile. While not as powerful as Opus on highly complex reasoning tasks, it delivers excellent performance across a wide range of general tasks, making it a versatile tool for various applications. This versatility, combined with faster response times and a better price-to-performance ratio than larger models, solidifies its position as a strong contender in any AI model comparison.

Practical Applications and Use Cases:

- Content creation: Generating marketing copy, blog posts, articles, scripts, and other creative text formats.

- Code generation and debugging: Assisting developers with coding tasks, identifying bugs, and generating code in various programming languages.

- Data analysis and summarization: Extracting key insights and summarizing large datasets or lengthy documents.

- Chatbots and conversational AI: Powering conversational interfaces for customer support, virtual assistants, and other interactive applications.

- Research and knowledge synthesis: Assisting with research tasks by synthesizing information from multiple sources and answering complex questions.

Comparison with Similar Tools:

Compared to models like OpenAI's GPT-4, Claude 3 Sonnet offers a compelling alternative with a focus on safety and a wider context window. While GPT-4 may excel in certain specialized tasks, Sonnet's balanced capabilities and robust safety features make it a compelling choice for many general-purpose applications. Similarly, compared to smaller, faster models like Claude 3 Haiku or other lightweight LLMs, Sonnet offers a significant performance upgrade for tasks demanding more complex reasoning and a longer context window.

Implementation and Setup Tips:

Claude 3 Sonnet is available through Anthropic's API and through cloud platforms such as Amazon Bedrock and Google Cloud Vertex AI. Developers can integrate Sonnet into their applications by following the documentation and using the available client libraries. Pricing is usage-based and charged per token, with separate rates for input and output. Consult Anthropic's website for the most up-to-date pricing information and API documentation.
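As an illustration of what an integration might send, the sketch below assembles a request body for Anthropic's Messages API that combines an image with a text prompt. The dated model ID, the version header value, and the helper names `build_sonnet_payload` and `build_headers` are assumptions to verify against Anthropic's documentation. Nothing is sent over the network.

```python
import base64
import json
from typing import Optional

# Assumptions to verify against Anthropic's documentation: the dated model ID,
# the version header value, and the content-block layout used below.
MODEL_ID = "claude-3-sonnet-20240229"
API_VERSION = "2023-06-01"


def build_sonnet_payload(prompt: str, image_bytes: Optional[bytes] = None,
                         media_type: str = "image/png", max_tokens: int = 512) -> dict:
    """Assemble a Messages API body with an optional image before the text."""
    content = []
    if image_bytes is not None:
        content.append({
            "type": "image",
            "source": {
                "type": "base64",
                "media_type": media_type,
                "data": base64.b64encode(image_bytes).decode("ascii"),
            },
        })
    content.append({"type": "text", "text": prompt})
    return {
        "model": MODEL_ID,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": content}],
    }


def build_headers(api_key: str) -> dict:
    """Headers expected alongside the JSON body."""
    return {
        "x-api-key": api_key,
        "anthropic-version": API_VERSION,
        "content-type": "application/json",
    }


payload = build_sonnet_payload("Describe this chart.", image_bytes=b"placeholder image bytes")
body = json.dumps(payload)  # would be POSTed to https://api.anthropic.com/v1/messages
headers = build_headers("YOUR_API_KEY")
```

In practice the official client libraries handle headers and serialization, so a hand-built payload like this is mainly useful for understanding the request shape.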

Pros:

- Better price-to-performance ratio than larger models.

- Faster response times than Opus while maintaining quality.

- Strong safety features and reduced harmful outputs.

- Good performance across a wide range of general tasks.

- 200K token context window.

Cons:

- Less capable than Opus on complex reasoning tasks.

- More expensive than smaller models like Haiku.

- Limited by training data cutoff date.

- May struggle with highly specialized domain knowledge.

Website: https://www.anthropic.com/claude

9. GPT-3.5 Turbo (OpenAI)

GPT-3.5 Turbo is OpenAI's workhorse language model, offering a compelling blend of performance and affordability. It's the engine behind many ChatGPT interactions and a popular choice for developers seeking a robust yet cost-effective solution for various AI-driven applications. In any AI model comparison, GPT-3.5 Turbo stands out as a versatile option suitable for a wide range of tasks. From powering conversational AI chatbots and generating creative content to summarizing text and performing basic reasoning, GPT-3.5 Turbo delivers impressive results with good accuracy and speed. Its position in this list is solidified by its accessibility, both in terms of cost and ease of integration.

Specifically, GPT-3.5 Turbo excels in conversational applications. Its optimized architecture allows for fast response times and efficient processing, crucial for creating dynamic and engaging user experiences. It performs admirably on general knowledge tasks, making it a valuable tool for information retrieval and question-answering systems. For high-volume applications, its cost-effectiveness is a significant advantage compared to more resource-intensive models like GPT-4. OpenAI also provides regular updates, ensuring the model continues to improve and adapt to evolving needs.

For developers, GPT-3.5 Turbo comes with extensive documentation and developer resources, streamlining the integration process. While pricing can vary based on usage, it's significantly more affordable than GPT-4, making it an attractive option for startups, indie hackers, and developers working on budget-conscious projects. Technical requirements are minimal, and the API is designed for seamless integration into various platforms and applications.

Comparing GPT-3.5 Turbo with similar models like Google's Gemini or Anthropic's Claude reveals its strengths and weaknesses. While GPT-3.5 Turbo might not possess the same level of complex reasoning capabilities as GPT-4 or some newer models, it offers a faster and more affordable alternative for tasks where those advanced capabilities are not essential. Compared to Gemini, GPT-3.5 Turbo has a more established track record and wider community support.

Implementation and Setup Tips:

- Start with the OpenAI API documentation: Familiarize yourself with the available endpoints, parameters, and best practices.

- Define your use case: Clearly outlining your specific requirements will help you optimize prompts and parameters for best performance.

- Experiment with different prompt structures: Fine-tuning your prompts can significantly impact the quality and relevance of the generated output.

- Monitor usage and costs: Keep track of your API usage to avoid unexpected expenses and optimize your application's cost-effectiveness.

- Leverage community resources: The OpenAI community offers a wealth of knowledge and support, providing valuable insights and solutions.
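The "monitor usage and costs" tip above can be sketched as a small tracker that accumulates token counts and compares the running cost to a budget. The per-million-token prices and the budget below are hypothetical placeholders, not OpenAI's actual rates, and `UsageTracker` is an illustrative name. In a real integration, the token counts would typically come from the usage figures returned with each API response.

```python
from dataclasses import dataclass


@dataclass
class UsageTracker:
    """Accumulates token usage and estimates spend against a budget."""
    input_price_per_million: float   # USD per 1M input tokens (hypothetical)
    output_price_per_million: float  # USD per 1M output tokens (hypothetical)
    budget: float                    # USD limit for the tracking period
    input_tokens: int = 0
    output_tokens: int = 0

    def record(self, input_tokens: int, output_tokens: int) -> None:
        """Add the token counts from one API call."""
        self.input_tokens += input_tokens
        self.output_tokens += output_tokens

    @property
    def cost(self) -> float:
        """Estimated spend so far in USD."""
        return (self.input_tokens * self.input_price_per_million
                + self.output_tokens * self.output_price_per_million) / 1_000_000

    def over_budget(self) -> bool:
        return self.cost > self.budget


# Placeholder prices and budget; substitute the provider's published rates.
tracker = UsageTracker(input_price_per_million=0.50,
                       output_price_per_million=1.50,
                       budget=1.00)
tracker.record(input_tokens=400_000, output_tokens=100_000)
print(f"${tracker.cost:.2f}", tracker.over_budget())
tracker.record(input_tokens=1_000_000, output_tokens=200_000)
print(f"${tracker.cost:.2f}", tracker.over_budget())
```

Tracking input and output tokens separately matters because providers commonly price them at different rates.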

Pros:

- Significantly more affordable than GPT-4

- Faster response times than more complex models

- Suitable for most common AI assistant tasks

- Well-documented with extensive developer resources

Cons:

- Less capable on complex reasoning compared to GPT-4

- More prone to hallucinations than newer models

- Limited context window compared to premium alternatives

- Less nuanced understanding of ambiguous instructions

Website: https://openai.com/blog/chatgpt

In conclusion, GPT-3.5 Turbo holds a crucial place in the current AI landscape, bridging the gap between powerful performance and affordability. Its versatile nature makes it a valuable tool in any AI professional's arsenal, providing a practical and accessible entry point into the world of large language models. It deserves its spot in this AI model comparison due to its balanced capabilities, speed, and cost-effectiveness, making it ideal for various common use cases.

10. Cohere Command (Cohere)

When conducting an AI model comparison, Cohere Command stands out as a robust option specifically designed for enterprise applications. Unlike models geared towards general chat or creative writing, Cohere Command prioritizes factual accuracy, reduced hallucinations, and consistent performance – crucial factors for businesses. This makes it highly suitable for tasks like content generation for business reports, summarizing complex documents, and extracting key information from large datasets. It excels in situations demanding reliability and integration with existing business systems.

Cohere recognizes that different businesses have unique needs. Therefore, they offer specialized versions of Command tailored for specific use cases. This allows companies to select a model optimized for their particular tasks, further enhancing efficiency and performance. Moreover, Cohere Command boasts strong multilingual capabilities, supporting over 100 languages, making it a powerful tool for global businesses.

In an AI model comparison, Cohere Command's focus on enterprise-grade features becomes readily apparent. While models like OpenAI's GPT models or Google's Gemini are strong contenders in the general-purpose space, Cohere sets itself apart with its emphasis on enterprise needs. This dedication is reflected in superior enterprise support and comprehensive documentation, making integration and troubleshooting smoother for businesses. They also provide strong guarantees around data privacy and security, a critical consideration for businesses handling sensitive information.

Implementation and Setup Tips:

Cohere provides detailed documentation and support for businesses interested in integrating Command, and pricing depends on the model and usage, so check Cohere's website for current rates. For custom deployments or larger volumes, reaching out to their sales team is the recommended way to discuss specific needs and receive tailored guidance. Generally, implementation involves API integration, allowing your business systems to interact directly with the Cohere Command model.

Pros:

- Superior enterprise support and documentation

- Strong guarantees around data privacy and security

- Excellent multilingual performance for global businesses

- Specialized versions for various business needs, driving efficiency and performance.

- Focus on reducing hallucinations and improving factuality

Cons:

- Less well-known in consumer applications compared to models like ChatGPT or Gemini.

- Pricing is geared towards enterprise users and might be more expensive than alternatives for individual users.

- Smaller developer community compared to OpenAI or Google.

- Less suitable for creative or highly dynamic applications that prioritize imaginative outputs over factual accuracy.

Website: https://cohere.com/models/command

Cohere Command earns its place in this AI model comparison list by offering a robust, enterprise-focused solution prioritizing reliability, accuracy, and security. For businesses seeking an LLM tailored to their specific needs and offering robust support, Cohere Command deserves serious consideration. Its focus on practical applications and reducing hallucinations makes it a valuable tool in the rapidly evolving landscape of AI-driven business solutions.

10 AI Models: Core Features & Performance Comparison

| Model | Core Features ✨ | User Experience ★ | Value 💰 | Target Audience 👥 |
|---|---|---|---|---|
| 🏆 MultitaskAI | Browser-based chat, split-screen multitasking, file integration | Efficient & highly customizable ★★★★ | One-time license, cost-effective 💰 | Developers, AI professionals 👥 |
| GPT-4 (OpenAI) | Multimodal inputs, advanced reasoning and creativity | Top-tier performance ★★★★★ | Premium pricing 💰💰 | Enterprises, research teams 👥 |
| Claude 3 Opus (Anthropic) | Deep analysis, long context, nuanced instruction following | Reliable and nuanced ★★★★ | High cost 💰💰 | AI experts, enterprises 👥 |
| Gemini 1.5 Pro (Google) | Multimodal, million-token window, mixture-of-experts | Robust technical performance ★★★★ | Requires high compute, premium 💰💰 | Technical users, analysts 👥 |
| Llama 3 (Meta) | Open-source, flexible deployment, improved tuning | Practical with tuning effort ★★★ | Cost-effective, free option 💰 | Researchers, DIY developers 👥 |
| Mistral Large (Mistral AI) | Efficient architecture, human preference alignment (DPO) | Balanced and resource-efficient ★★★★ | Competitive pricing 💰 | Developers, EU market users 👥 |
| PaLM 2 (Google) | Advanced multilingual, coding & reasoning support | Integrated and fast ★★★★ | Enterprise focused, moderate cost 💰💰 | Businesses, Google ecosystem users 👥 |
| Claude 3 Sonnet (Anthropic) | Balanced performance, multimodal, enhanced safety | Reliable & smooth ★★★★ | Better price-to-performance 💰 | Business and personal applications 👥 |
| GPT-3.5 Turbo (OpenAI) | Chat-optimized, fast processing, efficient handling | Accessible and responsive ★★★★ | Highly affordable 💰 | General users, developers 👥 |
| Cohere Command (Cohere) | Enterprise-grade reliability, factual outputs, multilingual | Stable and secure ★★★★ | Higher cost for enterprise 💰💰 | Global businesses, enterprise users 👥 |

Navigating the AI Landscape: Making the Best Choice for Your Needs

This AI model comparison has explored a range of powerful tools, from established players like GPT-4 and Claude 3 Opus to emerging solutions like Mistral Large and Llama 3. Each model offers unique strengths and caters to specific needs. Whether you prioritize cost-effectiveness, performance on specific tasks, or access to advanced features, understanding these nuances is key to making the right choice. Key takeaways include the continued dominance of OpenAI's GPT models, the impressive capabilities of Anthropic's Claude models, and the exciting potential of newer models like Google's Gemini and Meta's Llama 3.

Choosing the ideal AI model depends heavily on your project requirements. Consider factors such as performance on specific tasks (e.g., text generation, code generation, reasoning), cost per API call, ease of integration, and the specific features offered by each provider. For example, if you're developing a complex application that requires seamless integration with other tools, a model with a robust API and comprehensive documentation like GPT-4 or PaLM 2 might be a suitable choice. Alternatively, if cost is a primary concern, exploring open-source options like Llama 3 or more affordable closed-source models like GPT-3.5 Turbo could be beneficial. For teams working collaboratively on these projects, especially on platforms like GitHub, robust project management is essential. Leveraging GitHub checklist templates can significantly improve code quality and streamline workflows, as highlighted in The Ultimate GitHub Checklist Template Guide: Proven Strategies for Development Excellence from Pull Checklist.
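One way to make these trade-offs explicit is a weighted scoring matrix over the factors listed above. The sketch below is only an illustration: the weights, the 1-to-5 ratings, and the candidate names `model_a`, `model_b`, and `model_c` are hypothetical placeholders, not measurements of any real model.

```python
# Relative importance of each factor for a hypothetical project (sums to 1.0).
WEIGHTS = {
    "task_performance": 0.4,
    "cost": 0.3,
    "ease_of_integration": 0.2,
    "features": 0.1,
}

# Placeholder ratings on a 1-5 scale (5 is best); fill in from your own testing.
CANDIDATES = {
    "model_a": {"task_performance": 5, "cost": 2, "ease_of_integration": 4, "features": 5},
    "model_b": {"task_performance": 3, "cost": 5, "ease_of_integration": 4, "features": 3},
    "model_c": {"task_performance": 4, "cost": 4, "ease_of_integration": 3, "features": 3},
}


def weighted_score(ratings: dict, weights: dict) -> float:
    """Sum of each rating multiplied by its factor weight."""
    return sum(ratings[factor] * weight for factor, weight in weights.items())


def rank_models(candidates: dict, weights: dict) -> list:
    """Return candidate names ordered from highest to lowest score."""
    return sorted(candidates,
                  key=lambda name: weighted_score(candidates[name], weights),
                  reverse=True)


for name in rank_models(CANDIDATES, WEIGHTS):
    print(name, round(weighted_score(CANDIDATES[name], WEIGHTS), 2))
```

Changing the weights to reflect a cost-sensitive or performance-sensitive project shows quickly how much the ranking depends on priorities and not on any single headline metric.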

Don't be afraid to experiment with different models to see which best fits your workflow. MultitaskAI can be an invaluable tool in this process, enabling you to compare and contrast responses from multiple models side by side, ultimately streamlining your workflow and informing your decision-making. By carefully considering your needs and leveraging the resources available, you can harness the power of AI to drive innovation and achieve your goals in 2025 and beyond. The future of AI is bright, and with the right tools at your disposal, you can be a part of shaping it.