AI Data Privacy: Key Strategies for Secure Machine Learning

Learn top AI data privacy tips to protect sensitive info and stay compliant. Discover expert insights to secure your AI projects effectively.

The AI Data Privacy Challenge: What's Really at Stake

Artificial intelligence (AI) is advancing rapidly, and that progress brings a central challenge: balancing AI's need for data against growing public concern about personal privacy. Organizations need large datasets, often containing sensitive user information, to train and improve their AI models. At the same time, users are increasingly cautious about how their data is collected, used, and potentially misused.

The Growing Tension Between AI and Privacy

This growing concern about AI data privacy is well-founded. Reported AI incidents, including privacy and security failures, are rising: a recent report counted 233 AI-related incidents in 2024, a 56.4% increase over 2023. As AI adoption accelerates, so does the potential for misuse and security breaches, which makes strong data governance and privacy measures critical. Learn more about these risks: AI Data Privacy Risks.

Traditional data protection methods often fall short when applied to AI. Conventional approaches focus mainly on securing data at rest and in transit, but AI systems put heavy weight on a third state: data in use, meaning data that is actively being processed and analyzed. This creates new vulnerabilities that call for specialized privacy safeguards. For instance, a model can unintentionally reveal sensitive training data through its outputs, or it can be targeted by attacks that manipulate its behavior, such as poisoned training data or deliberately crafted inputs.

Rethinking Data Protection for the Age of AI

This shift demands a fundamental change in how we approach data protection. Anonymization, a standard practice in traditional data protection, may not be enough on its own: records stripped of names can often be re-identified by linking their remaining attributes with other datasets, and AI's pattern recognition can make that kind of reconstruction easier. This calls for more rigorous techniques such as differential privacy, which adds carefully calibrated noise to data, query results, or the training process, limiting what can be learned about any individual while preserving the data's usefulness for AI training.
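
To see why removing names is not enough, here is a minimal Python sketch of a linkage attack, one well-known re-identification technique. It joins a de-identified dataset to a public one on quasi-identifiers (ZIP code, birth date, sex). The records, field names, and the `link_records` helper are made up for illustration; real attacks, including AI-assisted ones, work on far larger and noisier data.

```python
# Made-up "anonymized" health data: names removed, quasi-identifiers kept.
anonymized_health = [
    {"zip": "02138", "birth_date": "1960-07-31", "sex": "F", "diagnosis": "hypertension"},
    {"zip": "02139", "birth_date": "1985-01-15", "sex": "M", "diagnosis": "asthma"},
]

# Made-up public dataset (for example, a voter roll) that does include names.
public_records = [
    {"name": "Alice Example", "zip": "02138", "birth_date": "1960-07-31", "sex": "F"},
    {"name": "Bob Example", "zip": "02140", "birth_date": "1985-01-15", "sex": "M"},
]

QUASI_IDENTIFIERS = ("zip", "birth_date", "sex")


def link_records(anon, public, keys=QUASI_IDENTIFIERS):
    """Join two datasets on quasi-identifiers and return re-identified rows."""
    # Index public names by their quasi-identifier combination.
    index = {}
    for person in public:
        index.setdefault(tuple(person[k] for k in keys), []).append(person["name"])

    matches = []
    for row in anon:
        names = index.get(tuple(row[k] for k in keys), [])
        if len(names) == 1:  # a unique match re-identifies the record
            matches.append((names[0], row["diagnosis"]))
    return matches


print(link_records(anonymized_health, public_records))
```

Only Alice's record matches uniquely, so her diagnosis is exposed even though the health dataset never contained her name.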

The rise of AI also emphasizes the importance of data minimization and purpose limitation. Collecting only the data absolutely necessary for the intended purpose and ensuring its use remains aligned with that purpose are key to responsible AI development. This often requires organizations to carefully review their data collection practices and establish clear data governance frameworks. For further information on these concerns, see: AI Data Privacy Concerns.
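
One way to enforce data minimization and purpose limitation inside a pipeline is an allow-list that maps each declared purpose to the fields it needs. The sketch below assumes such a mapping exists; the purposes, field names, and the `minimize` helper are hypothetical.

```python
# Hypothetical mapping from declared processing purposes to the only
# fields each purpose is allowed to use.
ALLOWED_FIELDS = {
    "churn_model_training": {"tenure_months", "plan", "monthly_usage_gb"},
    "billing": {"customer_id", "plan", "billing_address"},
}


def minimize(record: dict, purpose: str) -> dict:
    """Return only the fields allowed for the declared purpose.

    Raises KeyError for purposes that were never declared, so data
    cannot be silently reused for something new.
    """
    if purpose not in ALLOWED_FIELDS:
        raise KeyError(f"undeclared purpose: {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}


customer = {
    "customer_id": "c-001",
    "email": "user@example.com",
    "billing_address": "1 Example St",
    "tenure_months": 18,
    "plan": "pro",
    "monthly_usage_gb": 42.5,
}

print(minimize(customer, "churn_model_training"))
# → {'tenure_months': 18, 'plan': 'pro', 'monthly_usage_gb': 42.5}
```

Raising an error for undeclared purposes is a deliberate design choice: reusing data for a new purpose then requires a conscious decision (and, where required, fresh consent) instead of happening silently.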

The ethical implications of AI data privacy also require careful consideration. AI systems can reinforce and amplify existing biases found in their training data, potentially leading to discriminatory outcomes. This underscores the need for continuous monitoring and assessment of AI systems to ensure fairness and accountability. Ethical principles that prioritize human well-being and societal benefit should guide the development and deployment of AI.
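
Continuous monitoring can start with simple fairness metrics computed on a model's predictions. The sketch below computes the demographic parity gap, the difference in positive-prediction rates between groups, which is only one of several fairness definitions. The predictions, group labels, and the 0.2 review threshold are illustrative assumptions, not a standard.

```python
from collections import defaultdict


def positive_rates(predictions, groups):
    """Share of positive (1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Made-up loan-approval predictions (1 = approved) for two groups.
preds = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

gap = demographic_parity_gap(preds, groups)
print(f"approval-rate gap: {gap:.2f}")
if gap > 0.2:  # illustrative threshold, not a regulatory standard
    print("flag model for fairness review")
```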

Navigating the Regulatory Maze With Confidence

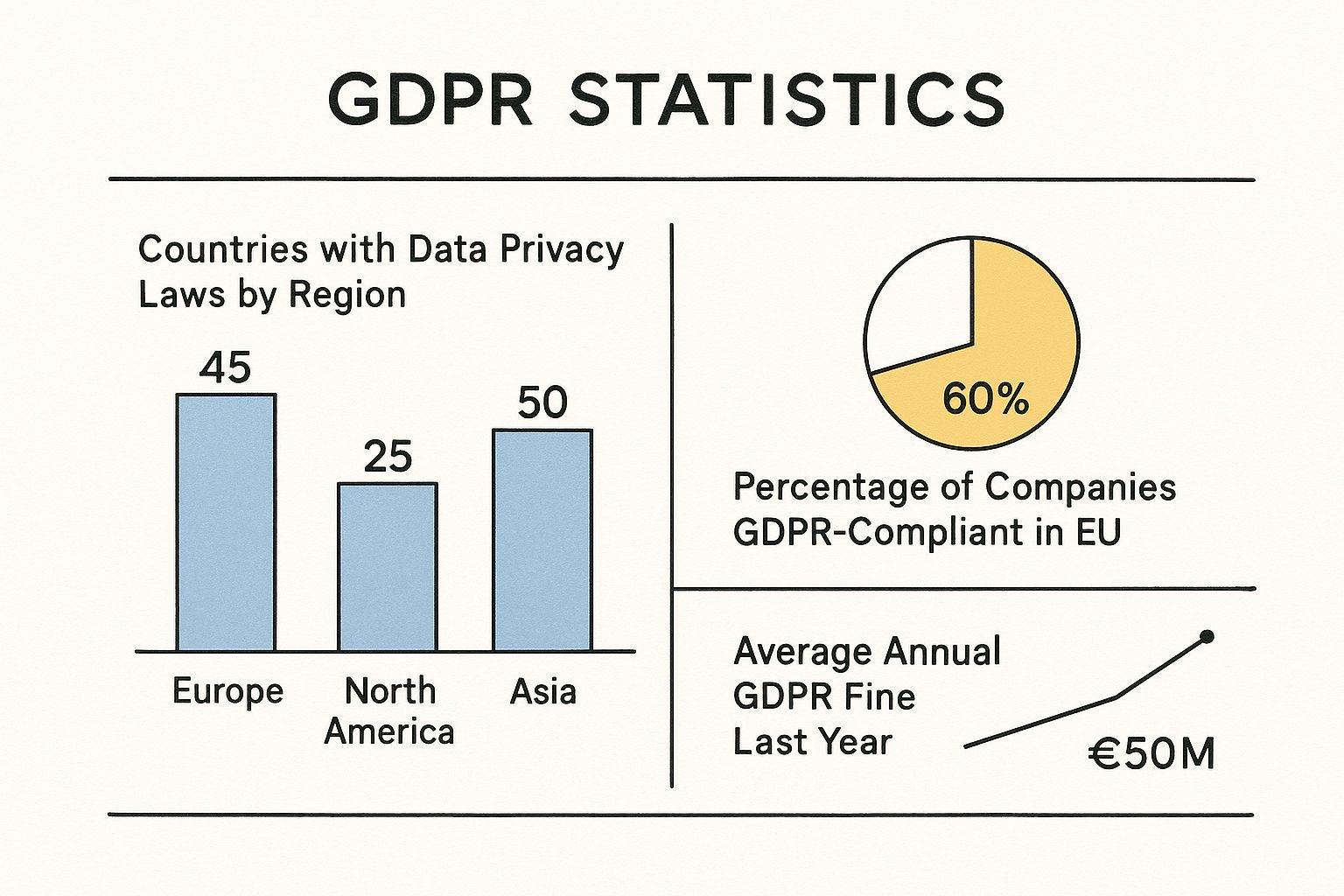

Feeling lost in the world of AI data privacy regulations? It's understandable. Keeping up with rules like the EU's AI Act and various U.S. state laws can be a real challenge. This section simplifies these complex rules, helping you build AI projects that protect privacy.

Understanding the Core Principles

AI systems need data, often personal information, to function, which raises important questions about user consent, data security, and ethical use. The European Union's AI Act, which applies alongside the GDPR, aims to balance innovation with the protection of fundamental rights, including privacy. In the U.S., state laws such as California's CCPA and Virginia's CDPA create a patchwork that businesses must navigate. Staying on top of these regulations is crucial for maintaining customer trust. Learn more: Data Privacy in 2025.

Actionable Guidelines for AI Data Privacy

How can businesses manage these regulations? Successful companies build compliance into their AI projects from the beginning. Here's how:

- Understanding the Specific Requirements: Know which rules apply to your business and the data your AI uses.

- Implementing Data Minimization: Collect only the data you absolutely need. This reduces the amount of sensitive information you must manage and protect.

- Prioritizing Purpose Limitation: Don't use data for purposes beyond those you originally stated without first obtaining consent, and be transparent about how data is used.

- Building Transparency and Explainability: Make your AI's decision-making process clear to users. This builds trust and accountability.

- Conducting Regular Privacy Risk Assessments: Check for privacy risks throughout your AI project, from data collection to model use and monitoring.

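When handling medical images, anonymization is crucial, because DICOM files store identifying details such as patient names, IDs, and birth dates in their metadata. DICOM anonymizer software can strip or replace these fields before images are used for AI training. For more tips, see: How to master data privacy best practices.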

Turning Compliance Into a Competitive Advantage

Smart companies see compliance as a strength. By prioritizing data privacy, businesses build customer trust, stand out from competitors, and attract skilled employees.

Staying Ahead of the Curve

Data privacy regulations are always evolving. Stay updated on new rules and best practices. Connect with industry experts, attend relevant events, and follow updates from regulators. Being proactive helps businesses succeed with AI while protecting user privacy.

Privacy-by-Design: Building Protection From the Ground Up

Protecting user privacy isn't something you can just tack on at the end. Leading organizations understand this, and they're building privacy directly into their AI systems from day one. This proactive approach, known as Privacy by Design, works to anticipate and prevent privacy issues before they even arise. Think of it like building a house with a strong foundation, rather than patching up cracks later.

Core Principles of Privacy by Design in AI

Privacy by Design isn't just a trendy term; it's a structured approach. This involves incorporating core principles like data minimization, purpose limitation, and transparency throughout the entire AI lifecycle, starting from the very beginning of the design phase. For example, data minimization means only collecting the data absolutely essential for the AI to function, which minimizes potential privacy risks right from the get-go. Purpose limitation also plays a vital role, ensuring data is used only for the specific reason it was originally collected.

When building a privacy-by-design strategy, it's a good idea to leverage a strong framework. A clearly defined Data Governance Framework can help you structure how you manage and protect your data. Transparency is also essential: clearly communicating how the AI system operates and how data is handled builds trust with users.

Implementing Privacy by Design: Practical Steps

Successful companies are actively incorporating Privacy by Design into their AI development processes. They do this by conducting comprehensive privacy risk assessments during each phase of development. They're also using automated tools to perform privacy checks, ensuring they maintain compliance every step of the way. This preventative approach saves time and resources, avoiding expensive fixes later.
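
Automated privacy checks can be as simple as scanning data for obvious personal identifiers before it reaches a training pipeline. This Python sketch uses a few regular expressions; the patterns and the `scan_for_pii` helper are illustrative assumptions and would miss many real identifiers, so treat it as a starting point rather than a complete detector.

```python
import re

# Illustrative patterns only; real PII detection needs far broader coverage
# (names, addresses, national ID formats, locale variants).
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_phone": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def scan_for_pii(texts):
    """Return (row index, PII type) pairs for every pattern found."""
    findings = []
    for i, text in enumerate(texts):
        for label, pattern in PII_PATTERNS.items():
            if pattern.search(text):
                findings.append((i, label))
    return findings


training_texts = [
    "The delivery arrived late but support was helpful.",
    "Contact me at jane.doe@example.com or 555-123-4567.",
    "My SSN is 123-45-6789, please update my file.",
]

findings = scan_for_pii(training_texts)
if findings:
    # A CI job could fail at this point to keep the dataset out of training.
    print("PII detected:", findings)
```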

Here are a few practical methods successful companies are using:

- Data Mapping and Inventorying: This involves understanding exactly what data you have, where it's located, and how it moves within your AI system.

- Differential Privacy Integration: These techniques add calibrated noise to data, query results, or the training process, masking individual data points while still preserving overall trends.

- Federated Learning Exploration: This method trains AI models across several decentralized datasets, allowing organizations to collaborate on a model without sharing the underlying raw data.
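
Data mapping is easier to keep current when the inventory is machine-readable. The sketch below models data assets and the flows between them, then reports every flow that carries personal data. The `DataAsset` and `DataFlow` classes, the asset names, and the sensitivity labels are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataAsset:
    """A data store in the inventory (hypothetical schema)."""
    name: str
    location: str         # e.g. cloud region or on-prem site
    fields: frozenset     # column names held by the asset
    sensitive: frozenset  # subset of fields classified as personal data


@dataclass(frozen=True)
class DataFlow:
    """A copy of some fields from one system to another."""
    source: str
    destination: str
    fields: frozenset


def sensitive_flows(assets, flows):
    """Return (source, source location, destination, personal fields)
    for each flow that moves personal data."""
    by_name = {asset.name: asset for asset in assets}
    report = []
    for flow in flows:
        src = by_name[flow.source]
        exposed = flow.fields & src.sensitive
        if exposed:
            report.append((src.name, src.location, flow.destination, sorted(exposed)))
    return report


assets = [
    DataAsset("crm", "eu-west", frozenset({"email", "name", "plan"}), frozenset({"email", "name"})),
    DataAsset("feature_store", "us-east", frozenset({"plan", "usage"}), frozenset()),
]
flows = [
    DataFlow("crm", "feature_store", frozenset({"plan"})),
    DataFlow("crm", "training_bucket", frozenset({"email", "plan"})),
]

for row in sensitive_flows(assets, flows):
    print(row)
```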

The following table, "Privacy-by-Design Principles for AI Development," shows how these core principles are put into practice throughout the development process.

| Privacy Principle | Traditional Application | AI-Specific Implementation | Benefits |
|---|---|---|---|
| Data Minimization | Collecting only necessary information for a given purpose. | Training AI models on the minimal data required to achieve the desired accuracy, discarding irrelevant or redundant data points. | Reduces storage costs, minimizes risk exposure, and simplifies compliance. |
| Purpose Limitation | Using collected data only for the specified purpose. | Ensuring AI models are trained and used only for the task they were designed for, avoiding repurposing data without explicit consent. | Increases user trust, reduces the potential for misuse, and strengthens ethical practices. |
| Transparency | Providing clear information about data collection and use practices. | Explaining how the AI system functions, including data usage and decision-making processes, in a way that's understandable to the average user. | Fosters accountability, empowers users with control over their data, and supports informed consent. |

This table demonstrates how traditional privacy principles find specific applications in AI development, leading to tangible benefits. By focusing on these practical steps, organizations can ensure their AI systems are both effective and respectful of user privacy.

Overcoming Implementation Challenges

While Privacy by Design has many advantages, it's not without its challenges. One common obstacle is the perceived conflict between privacy and model performance. Privacy-preserving techniques can cost some accuracy, but research also suggests they can improve a model's robustness and generalization, for example by limiting how much it memorizes individual training examples. Prioritizing privacy, in other words, can produce AI systems that are both ethically sound and resilient.

Another challenge is weaving these privacy practices into existing development processes. This requires a change in how teams think and operate, necessitating collaboration between privacy specialists, developers, and other key players. The long-term rewards, however, are worth the effort: increased user trust, reduced legal risks, and a better brand reputation. Embracing Privacy by Design is ultimately an investment in building responsible and sustainable AI for the future.

The Privacy Tech Revolution: Solutions That Actually Work

Beyond the marketing buzz, what AI privacy tools really protect user data? This section examines the technologies effective organizations are using today. We'll explore how these technologies balance privacy with performance, and we'll also address implementation and resource needs.

Cutting Through the Hype: Real-World Privacy Tools

The demand for robust AI data privacy solutions has fueled a surge in privacy-enhancing technologies (PETs). The global data privacy software market, for example, is projected to grow from $2.76 billion in 2023 to $30.31 billion by 2030, a compound annual growth rate (CAGR) of 40.9%. This growth reflects a clear need for AI-driven businesses to prioritize and invest in PETs. Data Privacy Statistics offer a more detailed look at this trend.
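
As a quick check on the quoted growth rate, CAGR is the constant yearly rate that turns the starting value into the ending value over the period, here the seven years from 2023 to 2030:

```latex
\text{CAGR} = \left(\frac{V_{2030}}{V_{2023}}\right)^{1/7} - 1
            = \left(\frac{30.31}{2.76}\right)^{1/7} - 1
            \approx 10.98^{1/7} - 1
            \approx 0.408
```

That works out to roughly 41% a year, consistent with the quoted figure; the small difference from 40.9% likely comes from rounding or the exact forecast window the report uses.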

Furthermore, Gartner projects that 60% of large organizations will use privacy-enhancing computation (PEC) techniques by 2025, representing a 46% increase in adoption over the next three years. This shift highlights how businesses are increasingly building privacy directly into their operations.

Differential Privacy: Protecting Individuals Within the Aggregate

Differential privacy adds calibrated "noise" to data, query results, or the model training process. Formally, it guarantees that the output barely changes whether or not any single person's record is included, which makes it difficult to identify individual users while still allowing AI models to learn from overall trends. Think of it like blurring faces in a crowd photo: you can still understand the scene, but individual identities remain protected. This is especially useful for training machine learning models on sensitive data.
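
The classic building block is the Laplace mechanism. A counting query has sensitivity 1, because adding or removing one person changes the count by at most 1, so adding Laplace noise with scale 1/ε makes the released count ε-differentially private. This Python sketch uses made-up patient records and a hypothetical `private_count` helper; it is not hardened against floating-point attacks, so production systems should rely on a vetted differential privacy library.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy (Laplace mechanism).

    A counting query has sensitivity 1, so noise with scale 1/epsilon suffices.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Made-up records: 60 patients aged 20-79, 20 of whom are flagged diabetic.
patients = [{"age": age, "diabetic": age % 3 == 0} for age in range(20, 80)]

rng = random.Random(7)
noisy = private_count(patients, lambda p: p["diabetic"], epsilon=0.5, rng=rng)
print(f"noisy count of diabetic patients: {noisy:.1f}")
```

Smaller values of ε mean more noise and stronger privacy, and every released query consumes part of an overall privacy budget.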

Federated Learning: Collaborative AI Without Data Sharing

Federated learning trains a shared AI model across multiple decentralized devices or servers, each holding its own data. Only model updates, not raw records, are sent for aggregation, which removes the need to centralize sensitive data. Imagine several hospitals collaborating to train a diagnostic AI. Each hospital keeps its patient data secure while contributing to a shared, improved model. This method is gaining traction in healthcare, finance, and other fields that place a premium on data security.
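
The hospital scenario can be sketched with federated averaging (FedAvg), one widely used federated learning algorithm: each client trains locally, and the server averages the resulting weights in proportion to each client's data size. This minimal Python version fits a one-variable linear model on synthetic data; the clients, learning rate, and round counts are illustrative assumptions.

```python
import random


def local_update(weights, data, lr=0.01, epochs=5):
    """Run a few epochs of gradient descent for y = w*x + b on one client's data."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_averaging(clients, rounds=200):
    """FedAvg: clients train locally, and the server averages their weights,
    weighted by each client's number of examples. Raw data never leaves a client."""
    global_weights = (0.0, 0.0)
    total = sum(len(data) for data in clients)
    for _ in range(rounds):
        updates = [(local_update(global_weights, data), len(data)) for data in clients]
        w = sum(u[0] * n for u, n in updates) / total
        b = sum(u[1] * n for u, n in updates) / total
        global_weights = (w, b)
    return global_weights


# Three hypothetical "hospitals", each holding private samples of y ≈ 3x + 1.
rng = random.Random(0)


def make_client(n):
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    return [(x, 3 * x + 1 + rng.gauss(0, 0.1)) for x in xs]


clients = [make_client(n) for n in (50, 120, 80)]
w, b = federated_averaging(clients)
print(f"learned w={w:.2f}, b={b:.2f}")  # close to w=3, b=1
```

Only the weights `(w, b)` cross client boundaries. Those updates can still leak information about local data, which is why real deployments often add secure aggregation or differential privacy.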

Homomorphic Encryption: Computing on Encrypted Data

Homomorphic encryption allows computations to be performed directly on encrypted data. This means data never needs to be decrypted during processing. It’s like working with a locked box. You can manipulate the contents without ever seeing them directly. This technology is complex, but it offers potentially groundbreaking security for AI applications.
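
A fully homomorphic scheme is too large for a short example, so this sketch swaps in Paillier, a simpler scheme that is only additively homomorphic: multiplying two ciphertexts yields an encryption of the sum of their plaintexts. The tiny primes and the use of Python's `random` module make this toy version completely insecure; real systems use much larger keys and a vetted cryptography library. It requires Python 3.9+ for `math.lcm`.

```python
import math
import random

# Toy Paillier cryptosystem. INSECURE: tiny primes and a non-cryptographic RNG,
# used only to show the additive homomorphic property.


def keygen(p: int = 1009, q: int = 1013):
    """Return (public_key, private_key) for distinct primes p and q."""
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # modular inverse; valid because we use g = n + 1
    return (n, n + 1), (lam, mu, n)


def encrypt(public_key, m: int, rng: random.Random) -> int:
    n, g = public_key
    n2 = n * n
    r = rng.randrange(1, n)
    while math.gcd(r, n) != 1:  # r must be invertible mod n
        r = rng.randrange(1, n)
    return (pow(g, m, n2) * pow(r, n, n2)) % n2


def decrypt(private_key, c: int) -> int:
    lam, mu, n = private_key
    u = pow(c, lam, n * n)
    return ((u - 1) // n * mu) % n


def add_encrypted(public_key, c1: int, c2: int) -> int:
    """Multiply ciphertexts, which adds the hidden plaintexts."""
    n, _ = public_key
    return (c1 * c2) % (n * n)


rng = random.Random(42)
public_key, private_key = keygen()
enc_a = encrypt(public_key, 52_000, rng)
enc_b = encrypt(public_key, 61_500, rng)

# The sum is computed on ciphertexts only; this step never sees 52,000 or 61,500.
enc_total = add_encrypted(public_key, enc_a, enc_b)
print(decrypt(private_key, enc_total))  # 113500
```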

Evaluating Privacy Technologies: A Comparative Look

Different AI applications have different privacy needs. The following table compares three leading privacy-enhancing technologies by implementation complexity, level of privacy protection, and impact on AI model performance.

Privacy-Enhancing Technologies for AI Applications

| Technology | Implementation Complexity | Privacy Benefit Level | Performance Impact |
|---|---|---|---|
| Differential Privacy | Moderate | High | Low to Moderate |
| Federated Learning | High | Very High | Low |
| Homomorphic Encryption | Very High | Highest | Moderate to High |

As the table shows, homomorphic encryption provides the highest level of privacy, but it is also the most challenging to implement and can slow computation considerably. Differential privacy offers a solid balance of strong privacy and manageable implementation. Federated learning keeps raw data local with little performance impact, but it demands significant resources to deploy, and the model updates it shares can still leak information unless combined with safeguards such as secure aggregation or differential privacy. Consider these trade-offs to choose the best tools for your specific requirements.

Integrating Privacy Tools With Existing Infrastructure

Successfully implementing these technologies requires integrating them with your existing data protection systems. This includes data governance frameworks, access controls, and incident response procedures. This comprehensive, layered approach strengthens defenses against privacy breaches. By carefully evaluating and strategically deploying these tools, businesses can harness the full potential of AI while safeguarding individual privacy and building public trust.

Innovation Without Compromise: Balancing Progress and Privacy

Protecting user data doesn't have to mean sacrificing AI advancement. Many leading organizations are demonstrating that strong data privacy can actually fuel innovation. This section explores how they are achieving this delicate balance, turning privacy considerations into opportunities instead of roadblocks.

Synthetic Data: Unlocking AI’s Potential Without the Privacy Risks

One powerful strategy gaining traction is the use of synthetic data: artificial datasets that statistically mirror real-world data without containing records of real individuals. It's like using a realistic movie set instead of filming in a real, populated city; you get the visual fidelity you need without disturbing anyone's life. A generator that fits its training data too closely can still leak real details, however, so synthetic datasets should be checked for privacy risk before release.

Synthetic data allows AI models to be trained on high-quality data without exposing sensitive user information. This opens up new possibilities for AI development in privacy-sensitive areas like healthcare and finance. For example, synthetic data can be used to train diagnostic algorithms without accessing real patient records.
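
The simplest possible generator fits per-column statistics to real data and samples new rows from them. This Python sketch, using made-up transaction data and hypothetical helper names, shows the idea. Because it samples each column independently from a normal distribution, it loses correlations between columns and can even produce impossible values such as negative amounts; practical generators, and the privacy checks applied to them, are considerably more sophisticated.

```python
import random
import statistics


def fit_marginals(rows, columns):
    """Estimate the mean and standard deviation of each numeric column."""
    stats = {}
    for col in columns:
        values = [row[col] for row in rows]
        stats[col] = (statistics.fmean(values), statistics.stdev(values))
    return stats


def sample_synthetic(marginals, n, rng):
    """Draw n synthetic rows; each column is sampled independently from a
    normal distribution with the fitted mean and standard deviation."""
    return [
        {col: rng.gauss(mu, sigma) for col, (mu, sigma) in marginals.items()}
        for _ in range(n)
    ]


# Made-up "real" transactions standing in for sensitive customer data.
rng = random.Random(1)
real = [
    {"customer_age": rng.gauss(45, 12), "amount": rng.lognormvariate(3, 0.5)}
    for _ in range(500)
]

marginals = fit_marginals(real, ["customer_age", "amount"])
synthetic = sample_synthetic(marginals, 1000, rng)

for col in marginals:
    real_mean = marginals[col][0]
    synth_mean = statistics.fmean(row[col] for row in synthetic)
    print(f"{col}: real mean {real_mean:.1f}, synthetic mean {synth_mean:.1f}")
```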

Privacy-Preserving Machine Learning: Collaboration Across Boundaries

Privacy-preserving machine learning techniques are also transforming how organizations collaborate on AI development. Methods like federated learning allow multiple parties to contribute to a shared model without directly exchanging their data.

This approach unlocks the potential of collective intelligence while safeguarding the privacy of each participant's data. This has major implications for fields like medical research, where sharing data is often restricted due to privacy regulations.

Ethical AI Governance: Guiding Responsible Innovation

Robust ethical AI governance frameworks are essential for navigating the complex landscape of AI data privacy. These frameworks provide clear guidelines and processes for data usage, ensuring consistency and accountability within organizations. They function as an internal compass, guiding decision-making and promoting responsible innovation.

These frameworks often address issues like data minimization, purpose limitation, and transparency. This helps organizations build trust with users and stay ahead of evolving regulations. A strong governance structure also fosters a culture of ethical AI development, empowering teams to identify and address potential privacy risks proactively.

Case Studies: Turning Privacy Into a Catalyst for Creativity

Real-world examples showcase how organizations are successfully integrating these principles. One company used synthetic data to train a fraud detection model, significantly improving accuracy without compromising customer privacy.

Another organization employed federated learning to develop a predictive maintenance system across its network of manufacturing plants. This enhanced operational efficiency while keeping sensitive data secure at each location. These success stories demonstrate that respecting privacy doesn't stifle innovation; it can actually drive the development of more creative and effective solutions.

Building a Culture of Privacy-Conscious Innovation

The most successful organizations view data privacy not as a constraint but as a driver of innovation. By embedding privacy considerations into every stage of the AI development lifecycle, they build a culture of privacy-conscious innovation. This proactive approach lets them create AI systems that are both powerful and trustworthy, strengthening customer relationships and earning a competitive edge in a world where privacy is increasingly valued. MultitaskAI, for example, takes a privacy-focused approach by letting users connect directly to leading AI models with their own API keys, so requests go straight to the model provider the user chooses rather than through an additional intermediary. For AI professionals seeking a privacy-focused solution, this gives them more control over how their data is handled. This focus on privacy ultimately leads to more robust, sustainable AI solutions that benefit both businesses and individuals.

Turning AI Data Privacy Into Your Competitive Advantage

In today's world, privacy is a big deal. Organizations that make AI data privacy a priority aren't just following the rules – they're building stronger relationships with their customers and gaining a competitive edge. Let's explore how your commitment to data privacy can become a real business advantage.

Communicating Your Privacy Commitments Effectively

Transparency is key to building trust with your users. Clearly explaining your AI data privacy practices goes a long way in building confidence. This means explaining how your AI systems work and how you protect user data in a way that everyone can understand. Think about using simple analogies and visuals to explain complex concepts like differential privacy or federated learning. Open communication makes users feel secure and strengthens your brand reputation.

Clear and concise privacy policies are also essential. Skip the dense legal jargon and use plain English to outline what data you collect, how you use it, and what rights users have regarding their data. This straightforward approach shows respect for your users and reinforces your dedication to data protection.

Obtaining Meaningful Consent: Respecting User Autonomy

Getting user consent isn't just about checking a box. It's about building an ongoing, transparent relationship. Give users granular control over their data, letting them choose what they share and how it's used. This means offering specific options related to different data uses, going beyond general consent forms.

For example, let users opt in or out of specific data collection practices, like personalized advertising or data analytics. This nuanced approach respects user preferences and strengthens the bond of trust between you and your users. For more on data governance, check out this article: How to master enterprise data governance.
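
Granular consent is easiest to honor when it is stored per purpose and checked before every use. In this sketch, the `ConsentRecord` class and the purpose names are hypothetical; anything a user has not explicitly granted is treated as denied, and each user's latest choice is timestamped for auditing.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ConsentRecord:
    """Per-purpose consent for one user; anything not explicitly granted is denied."""
    user_id: str
    # purpose -> (granted?, time of the user's latest choice)
    choices: dict = field(default_factory=dict)

    def update(self, purpose: str, granted: bool) -> None:
        """Record the user's latest choice for a purpose, with a UTC timestamp."""
        self.choices[purpose] = (granted, datetime.now(timezone.utc))

    def allows(self, purpose: str) -> bool:
        """True only if the user has explicitly opted in to this purpose."""
        granted, _ = self.choices.get(purpose, (False, None))
        return granted


consent = ConsentRecord("user-42")
consent.update("analytics", True)
consent.update("personalized_ads", False)

for purpose in ("analytics", "personalized_ads", "model_training"):
    status = "allowed" if consent.allows(purpose) else "denied"
    print(f"{purpose}: {status}")
```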

Showcasing Transparency: Building Trust Through Action

Leading organizations are showing their commitment to AI data privacy through concrete actions. Publicly releasing transparency reports that detail data collection practices, algorithm audits, and privacy initiatives builds credibility.

Participating in industry initiatives and supporting privacy-focused regulations further demonstrates your dedication to responsible AI development. These actions speak volumes and position your organization as a leader in AI data privacy.

Measuring The Impact Of Your Privacy Initiatives

To truly benefit from your AI data privacy efforts, you need to measure their impact. Track key metrics like user trust scores, data breach incidents, and customer churn rates to see how effective your privacy program is. This data-driven approach helps you identify areas for improvement and optimize your strategies.

Analyzing customer feedback and conducting user surveys also provide valuable insights into how users perceive your privacy practices. Continuous monitoring and evaluation allow you to adapt and stay ahead of evolving privacy concerns.

Turning Compliance Into A Differentiator

Successfully navigating the complex world of AI data privacy regulations can set you apart. Organizations that proactively comply with regulations like GDPR and the CCPA aren't just avoiding penalties – they’re building a foundation of trust. This can attract privacy-conscious customers and give you a competitive edge.

Attracting and Retaining Top Talent

A strong commitment to AI data privacy can also help you attract and retain talented employees. Skilled professionals, particularly in tech, are increasingly looking for organizations that share their values. Prioritizing ethical AI development and data protection makes your company a desirable place to work, giving you an advantage in the talent market.

By implementing these strategies, you can transform AI data privacy from a compliance burden into a powerful driver of growth. In a world where trust is paramount, investing in privacy isn't just ethical – it's good business.